How to show the whole image when using OpenCV warpPerspective

Solution 1

Yes, but be aware that the output image might be very large. I quickly wrote the following Python code, but even a 3000 x 3000 output could not hold the whole result; the transformation makes it far too big. Still, here is my code; I hope it is of use to you.

```python
# Legacy code: the old `cv` module only exists in OpenCV 2.x (removed in OpenCV 3.0).
import cv2
import numpy as np
import cv  # the old cv interface

img1_square_corners = np.float32([[253, 211], [563, 211], [563, 519], [253, 519]])
img2_quad_corners = np.float32([[234, 197], [520, 169], [715, 483], [81, 472]])
h, mask = cv2.findHomography(img1_square_corners, img2_quad_corners)

im = cv2.imread("image1.png")

# Create an output image here; I used (3000, 3000) as an example.
out_2 = cv.fromarray(np.zeros((3000, 3000, 3), np.uint8))

# By using the old cv interface, I wrote directly to the output, so it does not get cropped.
# I tried this using the cv2 interface, but for some reason it did not work...
# Maybe someone can shed some light on that?
cv.WarpPerspective(cv.fromarray(im), out_2, cv.fromarray(h))

cv.ShowImage("test", out_2)
cv.SaveImage("result.png", out_2)
cv2.waitKey()
```

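Anyway, this produces a very large image that contains your original image 1, warped. The entire image will be visible if the output image is large enough (which might be very large indeed!), provided the homography does not map any part of it to negative coordinates: pixels that land to the left of or above the origin are cut off no matter how large the output is.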
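Instead of guessing an output size such as 3000 x 3000, you can project the four corners of the source image and take their bounding box. The sketch below does this with plain NumPy homogeneous coordinates (the same computation `cv2.perspectiveTransform` performs for a 3x3 matrix); the helper name `warped_bounds` is hypothetical.

```python
import numpy as np


def warped_bounds(H, width, height):
    """Hypothetical helper: integer bounding box (xmin, ymin, xmax, ymax) of a
    width x height image after warping it with the 3x3 homography H."""
    corners = np.array(
        [[0, 0, 1], [width, 0, 1], [width, height, 1], [0, height, 1]],
        dtype=np.float64,
    )
    # Map the corners in homogeneous coordinates, then divide by the third component
    mapped = corners @ np.asarray(H, dtype=np.float64).T
    xy = mapped[:, :2] / mapped[:, 2:3]
    # Round outward so the box fully contains the warped corners
    xmin, ymin = np.floor(xy.min(axis=0)).astype(int)
    xmax, ymax = np.ceil(xy.max(axis=0)).astype(int)
    return int(xmin), int(ymin), int(xmax), int(ymax)


# Example: a pure translation by (-10, +5) applied to a 100 x 50 image
H = np.array([[1, 0, -10], [0, 1, 5], [0, 0, 1]], dtype=np.float64)
print(warped_bounds(H, 100, 50))
```

A negative `xmin` or `ymin` is exactly the part that gets cut off; once a translation shifts everything to non-negative coordinates, the required output size is `(xmax - xmin, ymax - ymin)`.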

I hope that this code may help you.

Solution 2

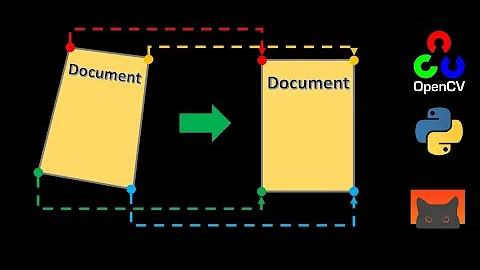

My solution is to calculate the size of the result image from the warped corners, and then apply a translation so that nothing falls outside the frame.
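In matrix form, the translation is composed with the homography before warping. With $(x_{\min}, y_{\min})$ and $(x_{\max}, y_{\max})$ the bounding box of img1's corners together with img2's corners mapped by $H$:

$$
H_t = \begin{bmatrix} 1 & 0 & -x_{\min} \\ 0 & 1 & -y_{\min} \\ 0 & 0 & 1 \end{bmatrix}, \qquad
H' = H_t \, H
$$

img2 is warped with $H'$ (`Ht.dot(H)` in the function) into an output of size $(x_{\max} - x_{\min}) \times (y_{\max} - y_{\min})$, and img1 is pasted unchanged at the offset $t = (-x_{\min}, -y_{\min})$.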

```python
import cv2
import numpy as np


def warpTwoImages(img1, img2, H):
    '''warp img2 to img1 with homography H'''
    h1, w1 = img1.shape[:2]
    h2, w2 = img2.shape[:2]
    # Corners of both images; img2's corners are mapped into img1's frame by H
    pts1 = np.float32([[0, 0], [0, h1], [w1, h1], [w1, 0]]).reshape(-1, 1, 2)
    pts2 = np.float32([[0, 0], [0, h2], [w2, h2], [w2, 0]]).reshape(-1, 1, 2)
    pts2_ = cv2.perspectiveTransform(pts2, H)
    pts = np.concatenate((pts1, pts2_), axis=0)
    # Integer bounding box of all corners (plain Python ints for dsize)
    [xmin, ymin] = np.int32(pts.min(axis=0).ravel() - 0.5).tolist()
    [xmax, ymax] = np.int32(pts.max(axis=0).ravel() + 0.5).tolist()
    t = [-xmin, -ymin]
    Ht = np.array([[1, 0, t[0]], [0, 1, t[1]], [0, 0, 1]])  # translate
    result = cv2.warpPerspective(img2, Ht.dot(H), (xmax - xmin, ymax - ymin))
    # Paste img1 at the translated origin
    result[t[1]:h1 + t[1], t[0]:w1 + t[0]] = img1
    return result
```

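```python
# kp1, kp2 and good come from an earlier keypoint-matching step:
# the keypoints of img1 and img2 and the filtered list of matches
dst_pts = np.float32([kp1[m.queryIdx].pt for m in good]).reshape(-1, 1, 2)
src_pts = np.float32([kp2[m.trainIdx].pt for m in good]).reshape(-1, 1, 2)
M, mask = cv2.findHomography(src_pts, dst_pts, cv2.RANSAC, 5.0)
result = warpTwoImages(img1_color, img2_color, M)
```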

Solution 3

First, compute the homography matrix as in the earlier solution. Then warp the image with that homography, and finally merge the warped image with the other one.

Here I share another way to merge the warped images. The earlier answer overlays img1 by slicing a range of indices; here I mask a region of interest (ROI) instead.

Black out the ROI in the destination image, keep only the ROI of the source image, and then add the two together (see the OpenCV bitmask tutorial).

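```python
import cv2


def copyOver(source, destination):
    # Pixels of `source` whose grayscale value exceeds 10 form the ROI mask
    result_grey = cv2.cvtColor(source, cv2.COLOR_BGR2GRAY)
    ret, mask = cv2.threshold(result_grey, 10, 255, cv2.THRESH_BINARY)
    mask_inv = cv2.bitwise_not(mask)
    # Keep only the ROI of source, black out the ROI in destination, then combine
    roi = cv2.bitwise_and(source, source, mask=mask)
    im2 = cv2.bitwise_and(destination, destination, mask=mask_inv)
    result = cv2.add(im2, roi)
    return result
```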

```python
warpedImageB = cv2.warpPerspective(imageB, H, (imageA.shape[1], imageA.shape[0]))
result = copyOver(imageA, warpedImageB)
```

First Image:

Second Image:


Comments

-

Rafiq Rahim almost 2 years

I have 2 test images here. My question is: how do I map the square in the first image to the quadrilateral in the second image without cropping the image?

Image 1:

Image 2:

Here is my current code using openCV warpPerspective function.

```python
import cv2
import numpy as np

img1_square_corners = np.float32([[253, 211], [563, 211], [563, 519], [253, 519]])
img2_quad_corners = np.float32([[234, 197], [520, 169], [715, 483], [81, 472]])
h, mask = cv2.findHomography(img1_square_corners, img2_quad_corners)
im = cv2.imread("image1.png")
out = cv2.warpPerspective(im, h, (800, 800))
cv2.imwrite("result.png", out)
```

Result:

As you can see, because of the dsize=(800,800) parameter of the warpPerspective function, I can't get a full view of image 1. If I adjust dsize, the square won't map properly. Is there any way to resize the output image so that I can get the whole picture of image 1?

-

Hammer over 11 years: Could you elaborate on "If I adjust the dsize, the square won't map properly"? Is it warped, translated, scaled, etc.? Another image would be helpful.


-

Rafiq Rahim over 11 years: At first I was going to add a translation factor to the homography, get views of different regions of the transformed image and then combine them. But your solution seems better! Thank you!

-

hjweide over 11 years: No problem, I am glad my solution helped you.

-

Mark Toledo over 5 years: Is there an automated way to remove the excess "black" areas of the stitched image? Or to warp the stitched image to fill the whole image area?


Yeo over 5 years: @MarkToledo I haven't tried it, but perhaps you could find the largest bounding rectangle and crop to that. I can foresee that it is not easy, though.

-


abggcv over 5 years: I tried this, but there is a fault in the line `warpedImageB = cv2.warpPerspective(imageB, H, (imageA.shape[1], imageA.shape[0]))`: it crops part of imageB after warping. Look at the answer by @articfox, where a translation is added to include the full image after warping.

oezguensi over 2 years: I am struggling to extend this algorithm to multiple images. Could you give me a hint about what would need to be extended?
