How to store one billion files on ext4?

When creating an ext4 file system, you can specify the usage type:

    mkfs.ext4 -T usage-type /dev/something

The available usage types are listed in /etc/mke2fs.conf. The main difference between them is the inode ratio, i.e. the number of bytes of disk space per inode. The lower the inode ratio, the more inodes are allocated and the more files you can create in your file system.

The usage type in mke2fs.conf which allocates the highest number of inodes in the file system is "news". With this usage type on a 1 TB hard drive, ext4 creates 244 million inodes.

    # tune2fs -l /dev/sdb1 | grep -i "inode count"
    Inode count: 244219904

    # sgdisk --print /dev/sdb
    Disk /dev/sdb: 1953525168 sectors, 931.5 GiB

This means that it would require more than 4 TB to create an ext4 file system with "-Tnews" that could possibly hold 1 billion inodes.
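The estimate above can be reproduced with a little arithmetic. The sketch below assumes that the "news" usage type uses an inode ratio of 4096 bytes per inode, as in the stock mke2fs.conf (check your own copy), and it ignores the per-group rounding that mke2fs applies, so the result is close to the reported inode count but not identical. The function names are illustrative only.

```python
# Rough inode arithmetic for an ext4 file system.
# Assumption: "news" means inode_ratio = 4096 (stock mke2fs.conf); verify locally.
NEWS_INODE_RATIO = 4096  # bytes of disk space per inode


def estimated_inodes(size_bytes: int, inode_ratio: int) -> int:
    """Approximate number of inodes mke2fs would allocate (no rounding applied)."""
    return size_bytes // inode_ratio


def min_size_for_inodes(inode_count: int, inode_ratio: int) -> int:
    """Smallest device size in bytes that yields at least inode_count inodes."""
    return inode_count * inode_ratio


# Disk size taken from the sgdisk output above: 1953525168 sectors of 512 bytes.
disk_bytes = 1953525168 * 512

print(estimated_inodes(disk_bytes, NEWS_INODE_RATIO))
print(min_size_for_inodes(10**9, NEWS_INODE_RATIO) / 10**12, "TB")
```

The second figure comes out slightly above 4 TB, which is where the "more than 4 TB" estimate comes from.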


redice

Updated on May 24, 2020

-

redice almost 4 years

I had created only about 8 million files when /dev/sdb1 ran out of free inodes.

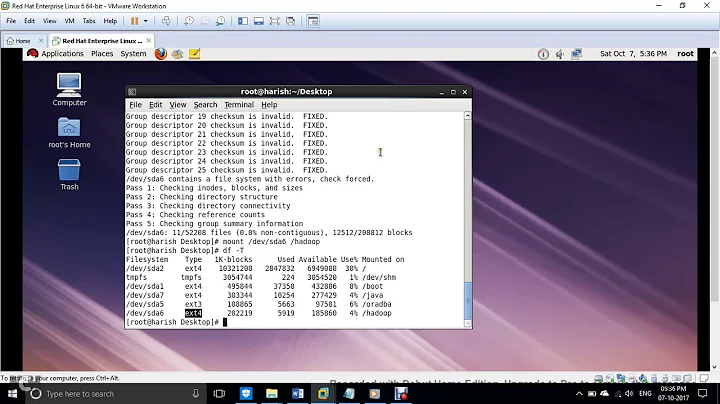

    [spider@localhost images]$ df -i
    Filesystem      Inodes   IUsed IFree IUse% Mounted on
    /dev/sdb1      8483456 8483456     0  100% /home

Someone said that the inode count can be specified when formatting the partition, e.g. mkfs.ext4 -N 1000000000.

I tried but got an error:

"inode_size (256) * inodes_count (1000000000) too big...specify higher inode_ratio (-i) or lower inode count (-N). ".

What's the appropriate inode_ratio value?

I heard that the minimum inode_ratio value for ext4 is 1024.

Is it possible to store one billion files on a single partition? If so, how? Someone also said it would be very slow.
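The error message quoted in the question can be put into numbers. The sketch below is only an approximation of the size comparison the message describes, not mke2fs's actual logic: it computes how much space one billion 256-byte inodes would need for the inode tables alone, and what bytes-per-inode value a device would need to reach that count. The 1 TB device size is an illustrative assumption, since the question does not state the partition size, and the 1024 minimum is the figure mentioned in the question.

```python
# Approximate sizing for "mkfs.ext4 -N 1000000000".
# Assumptions: inode size of 256 bytes (from the error message) and a
# hypothetical 1 TB device; real mke2fs applies further limits.

def inode_table_bytes(inode_count: int, inode_size: int = 256) -> int:
    """Space consumed by the inode tables alone."""
    return inode_count * inode_size


def required_inode_ratio(device_bytes: int, inode_count: int) -> int:
    """Bytes per inode needed to fit inode_count inodes on the device."""
    return device_bytes // inode_count


target = 10**9
device_bytes = 10**12  # hypothetical 1 TB device

print(inode_table_bytes(target) / 10**9, "GB of inode tables")
print(required_inode_ratio(device_bytes, target), "bytes per inode needed")
```

On a 1 TB device the required ratio falls just below 1024, so under these assumptions one billion inodes would need a device somewhat larger than 1 TB even at the smallest ratio.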

-

Elliott Frisch over 10 years

I don't think you can with ext4. But you might with zfs.

-

redice over 10 years

I hadn't heard of zfs before. Is it easy to use?

-

Elliott Frisch over 10 years

It's a file system. It has a lot of features.

-

alvits over 10 years

The smallest file system block size allowed is usually 1024 bytes (1k). The smallest bytes-per-inode value you can specify is the file system block size. Specifying a value smaller than the file system block size will create more inodes than can ever be used. With the file system block size at 1k and bytes per inode at 1k, a 1 TB disk will have almost 1 billion inodes.

-

Basile Starynkevitch over 10 years

However, I would avoid having many millions of files in the same directory. So use a /data/dir012/subdir234/file4567.txt naming scheme.
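One way to realize such a naming scheme is to derive the directory levels from a numeric file ID. The sketch below is a minimal example under assumed parameters: a fan-out of 1000 entries per level (1000 x 1000 x 1000 covers one billion files), a /data root, and a zero-padded ID in the file name. The function name `shard_path` and these choices are hypothetical, not part of the original comment.

```python
from pathlib import PurePosixPath

FANOUT = 1000            # assumed maximum entries per directory level
MAX_FILES = FANOUT ** 3  # 1000 * 1000 * 1000 = one billion files


def shard_path(file_id: int, root: str = "/data") -> str:
    """Map a numeric file ID to a three-level path with at most FANOUT entries per directory."""
    if not 0 <= file_id < MAX_FILES:
        raise ValueError(f"file_id must be in [0, {MAX_FILES})")
    top = file_id // (FANOUT * FANOUT)  # first directory level
    mid = (file_id // FANOUT) % FANOUT  # second directory level
    path = PurePosixPath(root) / f"dir{top:03d}" / f"subdir{mid:03d}" / f"file{file_id:09d}.txt"
    return str(path)


print(shard_path(12_234_567))
```

Keeping the full ID in the file name means a file can still be identified if it is moved out of its directory.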

-

Basile Starynkevitch over 10 years

Consider also, especially if each file is small (less than 8 KB), using a database (MariaDB, PostgreSQL, MongoDB, ...) or sqlite or gdbm.
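As a rough illustration of the database option, the following sketch stores small files as BLOBs in SQLite using Python's standard sqlite3 module. The table name `images`, its columns, and the helper function names are assumptions made for this example; a real deployment would need its own schema and tuning.

```python
import sqlite3


def open_store(path: str = ":memory:") -> sqlite3.Connection:
    """Open (or create) a store with one row per small file."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS images (name TEXT PRIMARY KEY, data BLOB NOT NULL)"
    )
    return conn


def put_image(conn: sqlite3.Connection, name: str, data: bytes) -> None:
    """Insert or overwrite one file; the with-block commits the transaction."""
    with conn:
        conn.execute(
            "INSERT OR REPLACE INTO images (name, data) VALUES (?, ?)", (name, data)
        )


def get_image(conn: sqlite3.Connection, name: str):
    """Return the stored bytes, or None if the name is unknown."""
    row = conn.execute("SELECT data FROM images WHERE name = ?", (name,)).fetchone()
    return None if row is None else row[0]


store = open_store()  # in-memory database, for demonstration only
put_image(store, "file4567.png", b"\x89PNG example bytes")
print(len(get_image(store, "file4567.png")))
```

With this layout the file system holds a single database file, so the inode limit no longer depends on the number of stored images.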

-

Basile Starynkevitch over 10 years

@ElliotFrisch: recent ext4 versions, at least when suitably configured and tuned, are expected to be able to hold many billions of files in a file system.

-

redice over 10 years

@alvits What's the appropriate file system block size? Is 1k too small? How did you figure out that "With file system block size at 1k and bytes per inode at 1k, a 1 TB disk will almost have 1 billion inodes."?

-

redice over 10 years

@Basile Starynkevitch Why use "/data/dir012/subdir234/file4567.txt"?

-

Basile Starynkevitch over 10 years

@redice: you want to avoid huge directories. Limit the number of directory entries to a few thousand.

-

alvits over 10 years

@redice Total size / block size = total number of blocks. For every inode to be usable, the number of inodes must not exceed the number of blocks. If total size = 1 TB and block size = 1 KB, then 1 TB / 1 KB = 1 billion blocks. Here's why you can't have more inodes than blocks: if you have a file system that can hold 1 million blocks and you force it to have 2 million inodes, then after saving 1 million files (assuming each file is no larger than the block size) you will run out of blocks (space). If you have more blocks than inodes (the typical case), you will run out of inodes first if the files are small.
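The block arithmetic in this comment can be sketched as follows. This is a simplified model under stated assumptions: every file occupies exactly one block, and the space taken by inode tables, the journal, and other metadata is ignored, so real capacity is lower. The function names are illustrative.

```python
def block_count(size_bytes: int, block_size: int) -> int:
    """Total number of blocks on a device of the given size."""
    return size_bytes // block_size


def max_small_files(size_bytes: int, block_size: int, inode_count: int) -> int:
    """Upper bound on one-block files: limited by inodes or blocks, whichever runs out first."""
    return min(inode_count, block_count(size_bytes, block_size))


# 1 TB (decimal) with 1 KiB blocks: "almost" one billion blocks.
print(block_count(10**12, 1024))

# One million blocks but two million inodes: the extra inodes can never be used.
print(max_small_files(1_000_000 * 1024, 1024, 2_000_000))
```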

-

Basile Starynkevitch over 10 years

What is the use case? What do these billion files contain (images, textual data, ...)? Why don't you consider other data storage techniques (databases, indexed files à la GDBM, ...)?

-

redice over 10 years

@Basile Starynkevitch They are all images. I'm just afraid a database would be slower than the file system.

-

Basile Starynkevitch over 10 years

You should not care: your problem is I/O bound, not CPU bound, so the roughly 0.01% of overhead added by a database is pure noise.

-

oPless over 9 years

In addition to zfs, btrfs and xfs don't suffer from inode issues. I tend to prefer btrfs.

-


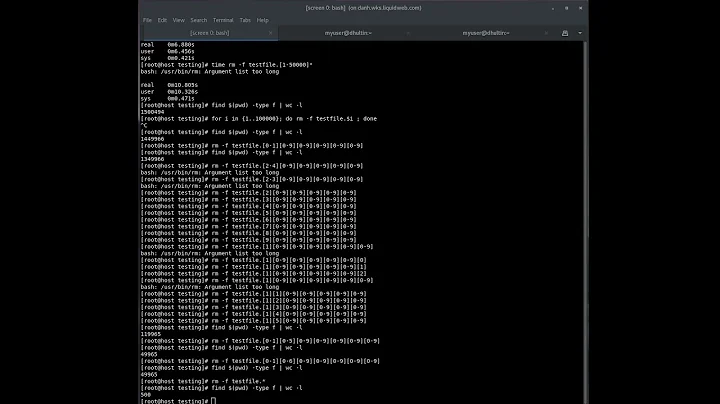

redice over 10 years

Will it be slow to create so many inodes?

-

Pilchy over 10 years

Yes, it will take a very long time. For instance, on my desktop machine, it takes 14 seconds to create 10,000 files in a single directory and more than 2 minutes to create 100,000 files, so creating 1 billion files would probably require days or weeks.
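The "days or weeks" figure can be checked with a simple linear extrapolation from the timings quoted in this comment. Linear scaling is an assumption; creation time per file may change as directories grow or when files are spread over many directories, so treat the result as an order-of-magnitude estimate only.

```python
SECONDS_PER_DAY = 86_400


def extrapolate_seconds(sample_files: int, sample_seconds: float, target_files: int) -> float:
    """Scale a measured creation time linearly to a larger file count."""
    return sample_seconds * target_files / sample_files


# 14 seconds for 10,000 files, scaled to one billion files.
total = extrapolate_seconds(10_000, 14, 10**9)
print(total / SECONDS_PER_DAY, "days")

# 2 minutes for 100,000 files (the comment says "more than" 2 minutes).
total = extrapolate_seconds(100_000, 120, 10**9)
print(total / SECONDS_PER_DAY, "days")
```

Both samples land in the range of roughly two weeks, which is consistent with the estimate in the comment.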

-

hanshenrik over 7 years

btrfs has no problems creating 1 billion files. Creating 100,000 files takes 3 seconds ON MY LAPTOP with btrfs:

    #include <fcntl.h>
    #include <stdint.h>
    #include <stdio.h>
    #include <sys/stat.h>
    #include <sys/types.h>
    #include <unistd.h>

    int main(void) {
        /* 10 digits are enough for 1 billion, plus 1 for the terminating NUL */
        char buf[10 + 1];
        for (uint_fast32_t i = 0; i < 100000; ++i) {
            /* cast so the format specifier matches whatever width uint_fast32_t has */
            snprintf(buf, sizeof buf, "%lu", (unsigned long)i);
            /* O_CREAT requires a mode argument; create an empty file and close it */
            close(open(buf, O_CREAT | O_WRONLY, 0644));
        }
        return 0;
    }

-

hanshenrik over 7 years

And that's a single-threaded approach. Try multithreading that.

-

hanshenrik over 7 years

Adding -O3 (maximum optimization) to gcc brought it down to:

    real 0m1.876s
    user 0m0.020s
    sys  0m1.808s

tl;dr: it takes <2 seconds to create 100,000 files on my laptop with btrfs. lel