How to use GLUT/OpenGL to render to a file?

Solution 1

You almost certainly don't want GLUT, regardless. Your requirements don't fit what it's intended to do (and even when your requirements do fit its intended purpose, you usually don't want it anyway).

You can use OpenGL. To generate output in a file, you basically set up OpenGL to render to a texture, and then read the resulting texture into main memory and save it to a file. At least on some systems (e.g., Windows), I'm pretty sure you'll still have to create a window and associate the rendering context with the window, though it will probably be fine if the window is always hidden.
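Once the pixels are in main memory, saving them needs no image library. Below is a minimal sketch. It assumes a tightly packed, top-down RGB8 buffer, for example read back with glReadPixels with GL_PACK_ALIGNMENT set to 1 and the rows already flipped. `write_ppm_p6` is a hypothetical helper name, and binary PPM (P6) is just one convenient choice of format:

```c
#include <stdio.h>
#include <stddef.h>

/* Hypothetical helper: write a tightly packed, top-down RGB8 buffer
 * as a binary PPM (P6). Returns 0 on success, -1 on any I/O error. */
int write_ppm_p6(const char *path, const unsigned char *rgb,
                 unsigned int width, unsigned int height) {
    size_t nbytes = (size_t)width * height * 3;
    FILE *f = fopen(path, "wb");
    if (!f)
        return -1;
    /* Header: magic number, dimensions, maximum channel value. */
    if (fprintf(f, "P6\n%u %u\n255\n", width, height) < 0 ||
        fwrite(rgb, 1, nbytes, f) != nbytes) {
        fclose(f);
        return -1;
    }
    return fclose(f) == 0 ? 0 : -1;
}
```

Unlike the ASCII P3 variant, P6 stores each channel as a single byte, so the file body is exactly `width * height * 3` bytes.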

Solution 2

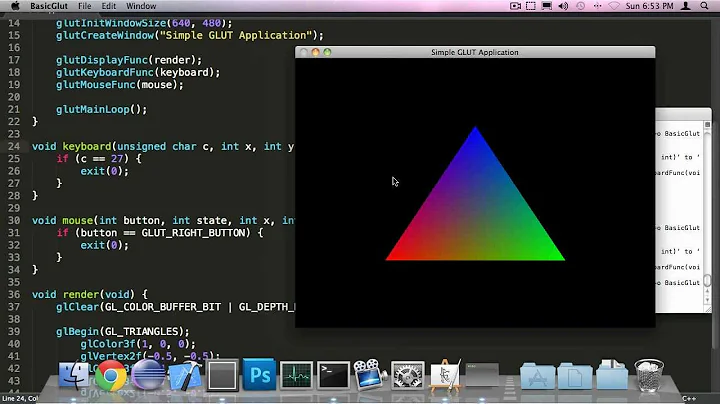

glReadPixels runnable FBO example

The example below generates either:

- one PPM per frame at 200 FPS, with no extra dependencies,

- one PNG per frame at 600 FPS, with libpng, or

- one MPEG video for all frames at 1200 FPS, with FFmpeg,

when writing to a ramfs. The better the compression, the higher the FPS, so we must be memory I/O bound.
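To see why the output format matters so much, compare the bytes written per frame. The `screenshot_ppm` function below writes ASCII P3 with `"%3d %3d %3d "`, which is 12 characters per pixel for values 0 to 255, plus one newline per row. Raw RGB needs only 3 bytes per pixel. This is a back-of-the-envelope sketch: the short header is ignored and the function names are illustrative.

```c
#include <stddef.h>

/* Pixel payload of the ASCII PPM written by screenshot_ppm:
 * "%3d %3d %3d " is always 12 characters per pixel for 0..255,
 * plus one '\n' per row. The "P3 ..." header is ignored. */
size_t p3_payload_bytes(size_t width, size_t height) {
    return 12 * width * height + height;
}

/* Uncompressed RGB8 pixel data, e.g. the body of a binary P6 file. */
size_t rgb8_payload_bytes(size_t width, size_t height) {
    return 3 * width * height;
}
```

For the default 128 x 128 frame, that is 196,736 bytes of text versus 49,152 bytes of raw pixels. That is about four times more data per frame before any compression, which fits the PPM output being the slowest of the three.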

The FPS is higher than 200 on my 60 Hz screen, and all images are different, so I'm sure that it is not limited by the screen's refresh rate.

The GIF in this answer was generated from the video as explained at: https://askubuntu.com/questions/648603/how-to-create-an-animated-gif-from-mp4-video-via-command-line/837574#837574

glReadPixels is the key OpenGL function. It reads pixels from the current read buffer, which here is either the window's back buffer or the offscreen framebuffer. Also have a look at the setup under init().
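One detail of that setup is `glPixelStorei(GL_PACK_ALIGNMENT, 1)`. The default pack alignment is 4, so glReadPixels pads every row it writes to a multiple of 4 bytes. With 3-byte GL_RGB pixels and a width that is not a multiple of 4, the destination would then need more than `width * height * 3` bytes. Here is a small sketch of that row-stride arithmetic for 1-byte components (GL_UNSIGNED_BYTE). The function name is hypothetical:

```c
#include <stddef.h>

/* Bytes per row that glReadPixels writes for 1-byte components
 * (GL_UNSIGNED_BYTE): the row size is rounded up to a multiple of
 * the GL_PACK_ALIGNMENT value (1, 2, 4 or 8; the default is 4). */
size_t packed_row_stride(size_t width, size_t nchannels, size_t alignment) {
    size_t row = width * nchannels;
    return (row + alignment - 1) / alignment * alignment;
}
```

For example, a 5-pixel GL_RGB row is 15 bytes but occupies 16 with the default alignment. Setting the alignment to 1 makes the rows tightly packed, so the `width * height * 3` buffer in `screenshot_ppm` is large enough for any width.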

glReadPixels returns the bottom row of pixels first. Most image formats store the top row first, so the rows usually have to be flipped before saving.
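The flip can also be done once over the whole buffer, instead of inside each file writer as the example below does. Here is a minimal sketch that assumes a tightly packed buffer (GL_PACK_ALIGNMENT of 1). `flip_rows_in_place` is a hypothetical name:

```c
#include <stddef.h>
#include <string.h>

/* Reverse the row order of a tightly packed image in place, turning the
 * bottom-up rows returned by glReadPixels into top-down rows.
 * row_bytes = width * channels; tmp must hold at least row_bytes bytes. */
void flip_rows_in_place(unsigned char *pixels, size_t row_bytes,
                        size_t height, unsigned char *tmp) {
    for (size_t top = 0; top < height / 2; top++) {
        unsigned char *a = pixels + top * row_bytes;
        unsigned char *b = pixels + (height - 1 - top) * row_bytes;
        memcpy(tmp, a, row_bytes);
        memcpy(a, b, row_bytes);
        memcpy(b, tmp, row_bytes);
    }
}
```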

offscreen.c

```c
#ifndef PPM
#define PPM 1
#endif
#ifndef LIBPNG
#define LIBPNG 1
#endif
#ifndef FFMPEG
#define FFMPEG 1
#endif

#include <assert.h>
#include <inttypes.h> /* strtoumax */
#include <stdio.h>
#include <stdlib.h>
#include <string.h>

#define GL_GLEXT_PROTOTYPES 1
#include <GL/gl.h>
#include <GL/glu.h>
#include <GL/glut.h>
#include <GL/glext.h>

#if LIBPNG
#include <png.h>
#endif

#if FFMPEG
#include <libavcodec/avcodec.h>
#include <libavutil/imgutils.h>
#include <libavutil/opt.h>
#include <libswscale/swscale.h>
#endif

enum Constants { SCREENSHOT_MAX_FILENAME = 256 };
static GLubyte *pixels = NULL;
static GLuint fbo;
static GLuint rbo_color;
static GLuint rbo_depth;
static int offscreen = 1;
static unsigned int max_nframes = 128;
static unsigned int nframes = 0;
static unsigned int time0;
static unsigned int height = 128;
static unsigned int width = 128;
#define PPM_BIT (1 << 0)
#define LIBPNG_BIT (1 << 1)
#define FFMPEG_BIT (1 << 2)
static unsigned int output_formats = PPM_BIT | LIBPNG_BIT | FFMPEG_BIT;

/* Model. */
static double angle;
static double delta_angle;

#if PPM
/* Take screenshot with glReadPixels and save to a file in PPM format.
 *
 * - filename: file path to save to, including the extension
 * - width: screen width in pixels
 * - height: screen height in pixels
 * - pixels: intermediate buffer to avoid repeated mallocs across multiple calls.
 *   Contents of this buffer do not matter. May be NULL, in which case it is initialized.
 *   You must `free` it when you won't be calling this function anymore.
 */
static void screenshot_ppm(const char *filename, unsigned int width,
        unsigned int height, GLubyte **pixels) {
    size_t i, j, cur;
    const size_t format_nchannels = 3;
    FILE *f = fopen(filename, "w");
    if (!f) abort();
    fprintf(f, "P3\n%u %u\n%d\n", width, height, 255);
    *pixels = realloc(*pixels, format_nchannels * sizeof(GLubyte) * width * height);
    glReadPixels(0, 0, width, height, GL_RGB, GL_UNSIGNED_BYTE, *pixels);
    /* glReadPixels returns the bottom row first: write the rows in reverse. */
    for (i = 0; i < height; i++) {
        for (j = 0; j < width; j++) {
            cur = format_nchannels * ((height - i - 1) * width + j);
            fprintf(f, "%3d %3d %3d ", (*pixels)[cur], (*pixels)[cur + 1], (*pixels)[cur + 2]);
        }
        fprintf(f, "\n");
    }
    fclose(f);
}
#endif

#if LIBPNG
/* Adapted from https://github.com/cirosantilli/cpp-cheat/blob/19044698f91fefa9cb75328c44f7a487d336b541/png/open_manipulate_write.c */
static png_byte *png_bytes = NULL;
static png_byte **png_rows = NULL;
static void screenshot_png(const char *filename, unsigned int width, unsigned int height,
        GLubyte **pixels, png_byte **png_bytes, png_byte ***png_rows) {
    size_t i, nvals;
    const size_t format_nchannels = 4;
    FILE *f = fopen(filename, "wb");
    if (!f) abort();
    nvals = format_nchannels * width * height;
    *pixels = realloc(*pixels, nvals * sizeof(GLubyte));
    *png_bytes = realloc(*png_bytes, nvals * sizeof(png_byte));
    *png_rows = realloc(*png_rows, height * sizeof(png_byte*));
    glReadPixels(0, 0, width, height, GL_RGBA, GL_UNSIGNED_BYTE, *pixels);
    for (i = 0; i < nvals; i++)
        (*png_bytes)[i] = (*pixels)[i];
    /* Point the row pointers at the rows in reverse order to flip the image. */
    for (i = 0; i < height; i++)
        (*png_rows)[height - i - 1] = &(*png_bytes)[i * width * format_nchannels];
    png_structp png = png_create_write_struct(PNG_LIBPNG_VER_STRING, NULL, NULL, NULL);
    if (!png) abort();
    png_infop info = png_create_info_struct(png);
    if (!info) abort();
    if (setjmp(png_jmpbuf(png))) abort();
    png_init_io(png, f);
    png_set_IHDR(
        png,
        info,
        width,
        height,
        8,
        PNG_COLOR_TYPE_RGBA,
        PNG_INTERLACE_NONE,
        PNG_COMPRESSION_TYPE_DEFAULT,
        PNG_FILTER_TYPE_DEFAULT
    );
    png_write_info(png, info);
    png_write_image(png, *png_rows);
    png_write_end(png, NULL);
    png_destroy_write_struct(&png, &info);
    fclose(f);
}
#endif

#if FFMPEG
/* Adapted from: https://github.com/cirosantilli/cpp-cheat/blob/19044698f91fefa9cb75328c44f7a487d336b541/ffmpeg/encode.c
 *
 * Note: this uses the legacy avcodec_register_all() / avcodec_encode_video2() API,
 * which was removed in FFmpeg 5.0. Newer FFmpeg versions need
 * avcodec_send_frame() / avcodec_receive_packet() instead. */
static AVCodecContext *c = NULL;
static AVFrame *frame;
static AVPacket pkt;
static FILE *file;
static struct SwsContext *sws_context = NULL;
static uint8_t *rgb = NULL;

static void ffmpeg_encoder_set_frame_yuv_from_rgb(uint8_t *rgb) {
    const int in_linesize[1] = { 4 * c->width };
    sws_context = sws_getCachedContext(sws_context,
            c->width, c->height, AV_PIX_FMT_RGB32,
            c->width, c->height, AV_PIX_FMT_YUV420P,
            0, NULL, NULL, NULL);
    sws_scale(sws_context, (const uint8_t * const *)&rgb, in_linesize, 0,
            c->height, frame->data, frame->linesize);
}

void ffmpeg_encoder_start(const char *filename, int codec_id, int fps, int width, int height) {
    AVCodec *codec;
    int ret;
    avcodec_register_all();
    codec = avcodec_find_encoder(codec_id);
    if (!codec) {
        fprintf(stderr, "Codec not found\n");
        exit(1);
    }
    c = avcodec_alloc_context3(codec);
    if (!c) {
        fprintf(stderr, "Could not allocate video codec context\n");
        exit(1);
    }
    c->bit_rate = 400000;
    c->width = width;
    c->height = height;
    c->time_base.num = 1;
    c->time_base.den = fps;
    c->gop_size = 10;
    c->max_b_frames = 1;
    c->pix_fmt = AV_PIX_FMT_YUV420P;
    if (codec_id == AV_CODEC_ID_H264)
        av_opt_set(c->priv_data, "preset", "slow", 0);
    if (avcodec_open2(c, codec, NULL) < 0) {
        fprintf(stderr, "Could not open codec\n");
        exit(1);
    }
    file = fopen(filename, "wb");
    if (!file) {
        fprintf(stderr, "Could not open %s\n", filename);
        exit(1);
    }
    frame = av_frame_alloc();
    if (!frame) {
        fprintf(stderr, "Could not allocate video frame\n");
        exit(1);
    }
    frame->format = c->pix_fmt;
    frame->width = c->width;
    frame->height = c->height;
    ret = av_image_alloc(frame->data, frame->linesize, c->width, c->height, c->pix_fmt, 32);
    if (ret < 0) {
        fprintf(stderr, "Could not allocate raw picture buffer\n");
        exit(1);
    }
}

void ffmpeg_encoder_finish(void) {
    uint8_t endcode[] = { 0, 0, 1, 0xb7 };
    int got_output, ret;
    /* Flush the frames still buffered in the encoder. */
    do {
        fflush(stdout);
        ret = avcodec_encode_video2(c, &pkt, NULL, &got_output);
        if (ret < 0) {
            fprintf(stderr, "Error encoding frame\n");
            exit(1);
        }
        if (got_output) {
            fwrite(pkt.data, 1, pkt.size, file);
            av_packet_unref(&pkt);
        }
    } while (got_output);
    fwrite(endcode, 1, sizeof(endcode), file);
    fclose(file);
    avcodec_close(c);
    av_free(c);
    av_freep(&frame->data[0]);
    av_frame_free(&frame);
    sws_freeContext(sws_context);
    sws_context = NULL;
}

void ffmpeg_encoder_encode_frame(uint8_t *rgb) {
    int ret, got_output;
    ffmpeg_encoder_set_frame_yuv_from_rgb(rgb);
    av_init_packet(&pkt);
    pkt.data = NULL;
    pkt.size = 0;
    ret = avcodec_encode_video2(c, &pkt, frame, &got_output);
    if (ret < 0) {
        fprintf(stderr, "Error encoding frame\n");
        exit(1);
    }
    if (got_output) {
        fwrite(pkt.data, 1, pkt.size, file);
        av_packet_unref(&pkt);
    }
}

void ffmpeg_encoder_glread_rgb(uint8_t **rgb, GLubyte **pixels, unsigned int width, unsigned int height) {
    size_t i, j, k, cur_gl, cur_rgb, nvals;
    const size_t format_nchannels = 4;
    nvals = format_nchannels * width * height;
    *pixels = realloc(*pixels, nvals * sizeof(GLubyte));
    *rgb = realloc(*rgb, nvals * sizeof(uint8_t));
    /* Get RGBA to align to 32 bits instead of just 24 for RGB. May be faster for FFmpeg. */
    glReadPixels(0, 0, width, height, GL_RGBA, GL_UNSIGNED_BYTE, *pixels);
    /* Copy the rows in reverse order: glReadPixels returns the bottom row first. */
    for (i = 0; i < height; i++) {
        for (j = 0; j < width; j++) {
            cur_gl = format_nchannels * (width * (height - i - 1) + j);
            cur_rgb = format_nchannels * (width * i + j);
            for (k = 0; k < format_nchannels; k++)
                (*rgb)[cur_rgb + k] = (*pixels)[cur_gl + k];
        }
    }
}
#endif

static void model_init(void) {
    angle = 0;
    delta_angle = 1;
}

static int model_update(void) {
    angle += delta_angle;
    return 0;
}

static int model_finished(void) {
    return nframes >= max_nframes;
}

static void init(void) {
    int glget;

    if (offscreen) {
        /* Framebuffer */
        glGenFramebuffers(1, &fbo);
        glBindFramebuffer(GL_FRAMEBUFFER, fbo);

        /* Color renderbuffer. */
        glGenRenderbuffers(1, &rbo_color);
        glBindRenderbuffer(GL_RENDERBUFFER, rbo_color);
        /* OpenGL ES 2.0 only accepts these storage formats: */
        /* GL_RGBA4, GL_RGB565, GL_RGB5_A1, GL_DEPTH_COMPONENT16, GL_STENCIL_INDEX8. */
        /* Desktop OpenGL also accepts others, e.g. GL_RGBA8. */
        glRenderbufferStorage(GL_RENDERBUFFER, GL_RGB565, width, height);
        glFramebufferRenderbuffer(GL_DRAW_FRAMEBUFFER, GL_COLOR_ATTACHMENT0, GL_RENDERBUFFER, rbo_color);

        /* Depth renderbuffer. */
        glGenRenderbuffers(1, &rbo_depth);
        glBindRenderbuffer(GL_RENDERBUFFER, rbo_depth);
        glRenderbufferStorage(GL_RENDERBUFFER, GL_DEPTH_COMPONENT16, width, height);
        glFramebufferRenderbuffer(GL_DRAW_FRAMEBUFFER, GL_DEPTH_ATTACHMENT, GL_RENDERBUFFER, rbo_depth);

        glReadBuffer(GL_COLOR_ATTACHMENT0);

        /* Sanity check. */
        assert(glCheckFramebufferStatus(GL_FRAMEBUFFER) == GL_FRAMEBUFFER_COMPLETE);
        glGetIntegerv(GL_MAX_RENDERBUFFER_SIZE, &glget);
        assert(width <= (unsigned int)glget);
        assert(height <= (unsigned int)glget);
    } else {
        glReadBuffer(GL_BACK);
    }

    glClearColor(0.0, 0.0, 0.0, 0.0);
    glEnable(GL_DEPTH_TEST);
    /* Tightly packed rows for glReadPixels (the default alignment is 4). */
    glPixelStorei(GL_PACK_ALIGNMENT, 1);
    glViewport(0, 0, width, height);
    glMatrixMode(GL_PROJECTION);
    glLoadIdentity();
    glMatrixMode(GL_MODELVIEW);

    time0 = glutGet(GLUT_ELAPSED_TIME);
    model_init();
#if FFMPEG
    if (output_formats & FFMPEG_BIT)
        ffmpeg_encoder_start("tmp.mpg", AV_CODEC_ID_MPEG1VIDEO, 25, width, height);
#endif
}

static void deinit(void) {
    printf("FPS = %f\n", 1000.0 * nframes / (double)(glutGet(GLUT_ELAPSED_TIME) - time0));
    free(pixels);
#if LIBPNG
    if (output_formats & LIBPNG_BIT) {
        free(png_bytes);
        free(png_rows);
    }
#endif
#if FFMPEG
    if (output_formats & FFMPEG_BIT) {
        ffmpeg_encoder_finish();
        free(rgb);
    }
#endif
    if (offscreen) {
        glDeleteFramebuffers(1, &fbo);
        glDeleteRenderbuffers(1, &rbo_color);
        glDeleteRenderbuffers(1, &rbo_depth);
    }
}

static void draw_scene(void) {
    glClear(GL_COLOR_BUFFER_BIT | GL_DEPTH_BUFFER_BIT);
    glLoadIdentity();
    glRotatef(angle, 0.0f, 0.0f, -1.0f);
    glBegin(GL_TRIANGLES);
    glColor3f(1.0f, 0.0f, 0.0f);
    glVertex3f( 0.0f,  0.5f, 0.0f);
    glColor3f(0.0f, 1.0f, 0.0f);
    glVertex3f(-0.5f, -0.5f, 0.0f);
    glColor3f(0.0f, 0.0f, 1.0f);
    glVertex3f( 0.5f, -0.5f, 0.0f);
    glEnd();
}

static void display(void) {
    char filename[SCREENSHOT_MAX_FILENAME];
    draw_scene();
    if (offscreen) {
        glFlush();
    } else {
        glutSwapBuffers();
    }
#if PPM
    if (output_formats & PPM_BIT) {
        snprintf(filename, SCREENSHOT_MAX_FILENAME, "tmp.%u.ppm", nframes);
        screenshot_ppm(filename, width, height, &pixels);
    }
#endif
#if LIBPNG
    if (output_formats & LIBPNG_BIT) {
        snprintf(filename, SCREENSHOT_MAX_FILENAME, "tmp.%u.png", nframes);
        screenshot_png(filename, width, height, &pixels, &png_bytes, &png_rows);
    }
#endif
#if FFMPEG
    if (output_formats & FFMPEG_BIT) {
        frame->pts = nframes;
        ffmpeg_encoder_glread_rgb(&rgb, &pixels, width, height);
        ffmpeg_encoder_encode_frame(rgb);
    }
#endif
    nframes++;
    if (model_finished())
        exit(EXIT_SUCCESS);
}

static void idle(void) {
    while (model_update());
    glutPostRedisplay();
}

int main(int argc, char **argv) {
    int arg;
    GLint glut_display;

    /* CLI args. */
    glutInit(&argc, argv);
    arg = 1;
    if (argc > arg) {
        offscreen = (argv[arg][0] == '1');
    } else {
        offscreen = 1;
    }
    arg++;
    if (argc > arg) {
        max_nframes = strtoumax(argv[arg], NULL, 10);
    }
    arg++;
    if (argc > arg) {
        width = strtoumax(argv[arg], NULL, 10);
    }
    arg++;
    if (argc > arg) {
        height = strtoumax(argv[arg], NULL, 10);
    }
    arg++;
    if (argc > arg) {
        output_formats = strtoumax(argv[arg], NULL, 10);
    }

    /* Work. */
    if (offscreen) {
        /* TODO: if we use anything smaller than the window, it only renders a smaller version of things. */
        /*glutInitWindowSize(50, 50);*/
        glutInitWindowSize(width, height);
        glut_display = GLUT_SINGLE;
    } else {
        glutInitWindowSize(width, height);
        glutInitWindowPosition(100, 100);
        glut_display = GLUT_DOUBLE;
    }
    glutInitDisplayMode(glut_display | GLUT_RGBA | GLUT_DEPTH);
    glutCreateWindow(argv[0]);
    if (offscreen) {
        /* TODO: if we hide the window the program blocks. */
        /*glutHideWindow();*/
    }
    init();
    glutDisplayFunc(display);
    glutIdleFunc(idle);
    atexit(deinit);
    glutMainLoop();
    return EXIT_SUCCESS;
}
```

Compile with:

```sh
sudo apt-get install freeglut3-dev libpng-dev libavcodec-dev libavutil-dev libswscale-dev
gcc -DPPM=1 -DLIBPNG=1 -DFFMPEG=1 -ggdb3 -std=c99 -O0 -Wall -Wextra \
  -o offscreen offscreen.c -lGL -lGLU -lglut -lpng -lavcodec -lswscale -lavutil
```

Run 10 frames "offscreen" (mostly TODO, works but has no advantage), with size 200 x 100 and all output formats:

```sh
./offscreen 1 10 200 100 7
```

CLI format is:

```sh
./offscreen [offscreen [nframes [width [height [output_formats]]]]]
```

and output_formats is a bitmask:

```
ppm << 0 | png << 1 | mpeg << 2
```
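The value is just the OR (here equivalently the sum) of the bits of the wanted formats: 7 for all three, 4 for only the video, and so on. The following sketch builds and tests such a mask. It uses the same bit values as the sample's `PPM_BIT`, `LIBPNG_BIT` and `FFMPEG_BIT`, and both helpers are illustrative only:

```c
#define PPM_BIT    (1u << 0)
#define LIBPNG_BIT (1u << 1)
#define FFMPEG_BIT (1u << 2)

/* Illustrative helper: build the output_formats CLI value from flags. */
unsigned int make_output_formats(int ppm, int png, int mpeg) {
    return (ppm ? PPM_BIT : 0u) | (png ? LIBPNG_BIT : 0u) | (mpeg ? FFMPEG_BIT : 0u);
}

/* Nonzero if the format bit is enabled in mask, as display() checks it. */
int format_enabled(unsigned int mask, unsigned int bit) {
    return (mask & bit) != 0;
}
```

For example, `make_output_formats(1, 0, 1)` gives 5, i.e. `./offscreen 1 10 200 100 5` writes PPM files and the MPEG video but no PNGs.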

Run on-screen (does not limit my FPS either):

```sh
./offscreen 0
```

Benchmarked on Ubuntu 15.10, OpenGL 4.4.0 NVIDIA 352.63, Lenovo Thinkpad T430.

Also tested on Ubuntu 18.04, OpenGL 4.6.0 NVIDIA 390.77, Lenovo Thinkpad P51.

TODO: find a way to do it on a machine without a GUI (e.g. without X11). It seems that OpenGL is just not made for offscreen rendering, and that getting a context, which is needed even just to read pixels back to the CPU, goes through the interface with the windowing system (e.g. GLX). See: OpenGL without X.org in linux

TODO: use a 1x1 window, make it un-resizable, and hide it to make things more robust. If I do any of those, the rendering fails; see the code comments. Preventing resize seems impossible in GLUT, but GLFW supports it. In any case, those don't matter much, as my FPS is not limited by the screen refresh frequency, even when offscreen is off.

Other options besides FBO:

- render to the back buffer (the default render target)

- render to a texture

- render to a Pixel Buffer Object (PBO)

Framebuffer objects and pixel buffer objects are better than the back buffer and textures, since they are made for data to be read back to the CPU, while the back buffer and textures are made to stay on the GPU and show on screen.

A PBO is for asynchronous transfers, so I think we don't need one here; see: What are the differences between a Frame Buffer Object and a Pixel Buffer Object in OpenGL?

Maybe off-screen Mesa is worth looking into: http://www.mesa3d.org/osmesa.html

Vulkan

It seems that Vulkan is designed to support offscreen rendering better than OpenGL.

This is mentioned on this NVIDIA overview: https://developer.nvidia.com/transitioning-opengl-vulkan

This is a runnable example that I just managed to run locally: https://github.com/SaschaWillems/Vulkan/tree/b9f0ac91d2adccc3055a904d3a8f6553b10ff6cd/examples/renderheadless/renderheadless.cpp

After installing the drivers and ensuring that the GPU is working I can do:

```sh
git clone https://github.com/SaschaWillems/Vulkan
cd Vulkan
git checkout b9f0ac91d2adccc3055a904d3a8f6553b10ff6cd
python download_assets.py
mkdir build
cd build
cmake ..
make -j`nproc`
cd bin
./renderheadless
```

and this immediately generates an image headless.ppm without opening any windows.

I have also managed to run this program on an Ubuntu Ctrl + Alt + F3 non-graphical TTY, which further indicates it really does not need a screen.

Other examples that might be of interest:

- https://github.com/SaschaWillems/Vulkan/blob/b9f0ac91d2adccc3055a904d3a8f6553b10ff6cd/examples/screenshot/screenshot.cpp starts a GUI, and you can click a button to take a screenshot, and it gets saved to `screenshot

- https://github.com/SaschaWillems/Vulkan/blob/b9f0ac91d2adccc3055a904d3a8f6553b10ff6cd/examples/offscreen/offscreen.cpp renders an image twice to create a reflection effect

Related: Is it possible to do offscreen rendering without Surface in Vulkan?

Tested on Ubuntu 20.04, NVIDIA driver 435.21, NVIDIA Quadro M1200 GPU.

apitrace

https://github.com/apitrace/apitrace

Just works, and does not require you to modify your code at all:

```sh
git clone https://github.com/apitrace/apitrace
cd apitrace
git checkout 7.0
mkdir build
cd build
cmake ..
make
# Creates opengl_executable.out.trace
./apitrace trace /path/to/opengl_executable.out
./apitrace dump-images opengl_executable.out.trace
```

Also available on Ubuntu 18.10 with:

```sh
sudo apt-get install apitrace
```

You now have a bunch of screenshots named as:

opengl_executable.out.<n>.png

TODO: working principle.

The docs also suggest this for video:

```sh
apitrace dump-images -o - application.trace \
  | ffmpeg -r 30 -f image2pipe -vcodec ppm -i pipe: -vcodec mpeg4 -y output.mp4
```

See also:

- images to GIF: https://unix.stackexchange.com/questions/24014/creating-a-gif-animation-from-png-files/489210#489210

- images to video: How to create a video from images with FFmpeg?

WebGL + canvas image saving

This is mostly a toy due to performance, but it kind of works for really basic use cases:

- demo: https://cirosantilli.com#webgl

- source: https://github.com/cirosantilli/cirosantilli.github.io/blame/fab7f46ec85a5357170a1bee9b3b0580bde6bd88/README.adoc#L2083

- bibliography: how to save canvas as png image?

Bibliography

- How to use GLUT/OpenGL to render to a file?

- How to take screenshot in OpenGL

- How to render offscreen on OpenGL?

- glReadPixels() "data" argument usage?

- Render OpenGL ES 2.0 to image

- http://www.songho.ca/opengl/gl_fbo.html

- http://www.mesa3d.org/brianp/sig97/offscrn.htm

- Render off screen (with FBO and RenderBuffer) and pixel transfer of color, depth, stencil

- https://gamedev.stackexchange.com/questions/59204/opengl-fbo-render-off-screen-and-texture

- What are the differences between a Frame Buffer Object and a Pixel Buffer Object in OpenGL?


FBO larger than window:

- OpenGL how to create, and render to, a framebuffer that's larger than the window?

- FBO lwjgl bigger than Screen Size - What I'm doing wrong?

- Renderbuffers larger than window size - OpenGL

- problem saving openGL FBO larger than window

No Window / X11:

- OpenGL without X.org in linux

- Can you create OpenGL context without opening a window?

- Using OpenGL Without X-Window System

Solution 3

Not to take away from the other excellent answers, but if you want an existing example we have been doing Offscreen GL rendering for a few years now in OpenSCAD, as part of the Test Framework rendering to .png files from the commandline. The relevant files are at https://github.com/openscad/openscad/tree/master/src under the Offscreen*.cc

It runs on OSX (CGL), Linux X11 (GLX), BSD (GLX), and Windows (WGL), with some quirks due to driver differences. The basic trick is to forget to open a window (like how Douglas Adams says the trick to flying is to forget to hit the ground). It even runs on 'headless' Linux/BSD if you have a virtual X11 server running, like Xvfb or Xvnc. There is also the possibility to use software rendering on Linux/BSD by setting the environment variable LIBGL_ALWAYS_SOFTWARE=1 before running your program, which can help in some situations.

This is not the only system to do this, I believe the VTK imaging system does something similar.

This code is a bit old in its methods (I ripped the GLX code out of Brian Paul's glxgears), especially as new systems come along (OSMesa, Mir, Wayland, EGL, Android, Vulkan, etc.). But notice the OffscreenXXX.cc filenames, where XXX is the GL context subsystem: in theory, the code can be ported to different context generators.

Solution 4

Not sure OpenGL is the best solution.

But you can always render to an off-screen buffer.

The typical way to write OpenGL output to a file is to use glReadPixels to copy the resulting scene, pixel by pixel, into an image file.
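No particular file format is named here. One option that avoids flipping rows is uncompressed TGA, which can store rows bottom-up, exactly in glReadPixels' order. This is a swapped-in illustration, not the answerer's method. The sketch assumes the pixels were read with format GL_BGR (core since OpenGL 1.2) and GL_UNSIGNED_BYTE into a tightly packed buffer. `write_tga_bgr` is a hypothetical name:

```c
#include <stdio.h>
#include <stddef.h>

/* Write a tightly packed, bottom-up BGR8 buffer as an uncompressed
 * true-color TGA. Image descriptor bit 5 = 0 means the first stored
 * row is the bottom one, which matches glReadPixels' row order. */
int write_tga_bgr(const char *path, const unsigned char *bgr,
                  unsigned int width, unsigned int height) {
    unsigned char header[18] = {0};
    size_t nbytes = (size_t)width * height * 3;
    FILE *f;
    if (width > 0xFFFFu || height > 0xFFFFu)
        return -1;                                  /* 16-bit size fields */
    header[2] = 2;                                  /* uncompressed true-color */
    header[12] = (unsigned char)(width & 0xFFu);    /* width, little-endian */
    header[13] = (unsigned char)(width >> 8);
    header[14] = (unsigned char)(height & 0xFFu);   /* height, little-endian */
    header[15] = (unsigned char)(height >> 8);
    header[16] = 24;                                /* bits per pixel */
    header[17] = 0;                                 /* bottom-left origin, no alpha */
    f = fopen(path, "wb");
    if (!f)
        return -1;
    if (fwrite(header, 1, sizeof header, f) != sizeof header ||
        fwrite(bgr, 1, nbytes, f) != nbytes) {
        fclose(f);
        return -1;
    }
    return fclose(f) == 0 ? 0 : -1;
}
```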

Solution 5

You could use SFML http://www.sfml-dev.org/. You can use the image class to save your rendered output.

http://www.sfml-dev.org/documentation/1.6/classsf_1_1Image.htm

To get your rendered output, you can render to a texture or copy your screen.

Rendering to a texture:

- http://nehe.gamedev.net/data/lessons/lesson.asp?lesson=36

- http://zavie.free.fr/opengl/index.html.en#rendertotexture

Copying screen output:


Alex319

Updated on July 09, 2022

I have a program which simulates a physical system that changes over time. I want to, at predetermined intervals (say every 10 seconds) output a visualization of the state of the simulation to a file. I want to do it in such a way that it is easy to "turn the visualization off" and not output the visualization at all.

I am looking at OpenGL and GLUT as graphics tools to do the visualization. However, there seem to be two problems. First of all, it looks like it only outputs to a window and can't output to a file. Second, in order to generate the visualization you have to call glutMainLoop, and that stops the execution of the main function: the only functions that get called from then on are calls from the GUI. However, I do not want this to be a GUI-based application. I want it to just be an application that you run from the command line, and it generates a series of images. Is there a way to do this in GLUT/OpenGL? Or is OpenGL completely the wrong tool for this, and should I use something else?


Phil H over 11 years: How big is the state of the simulation, if you were to write that instead of the visualisation?


Ciro Santilli OurBigBook.com over 7 years: Extract a minimal runnable example and have my upvote :-)


mgmalheiros over 7 years: After the line `png_write_end(png, NULL);` you should deallocate the PNG structures with a line like `png_destroy_write_struct(&png, &info);`. Thank you for the handy code!

Ciro Santilli OurBigBook.com over 7 years: @mgmalheiros nice one, Marcelo!
