How to use PyCharm to debug Scrapy projects

Solution 1

The scrapy command is a python script which means you can start it from inside PyCharm.

When you examine the scrapy binary (which scrapy) you will notice that this is actually a python script:

#!/usr/bin/python

from scrapy.cmdline import execute

execute()

This means that a command like

scrapy crawl IcecatCrawler can also be executed like this: python /Library/Python/2.7/site-packages/scrapy/cmdline.py crawl IcecatCrawler

Try to find the scrapy.cmdline package.

In my case the location was here: /Library/Python/2.7/site-packages/scrapy/cmdline.py

Create a run/debug configuration inside PyCharm with that script as script. Fill the script parameters with the scrapy command and spider. In this case crawl IcecatCrawler.

Like this:

Put your breakpoints anywhere in your crawling code and it should work™.

Solution 2

You just need to do this.

Create a Python file on crawler folder on your project. I used main.py.

- Project

- Crawler

- Crawler

- Spiders

- ...

- main.py

- scrapy.cfg

- Crawler

- Crawler

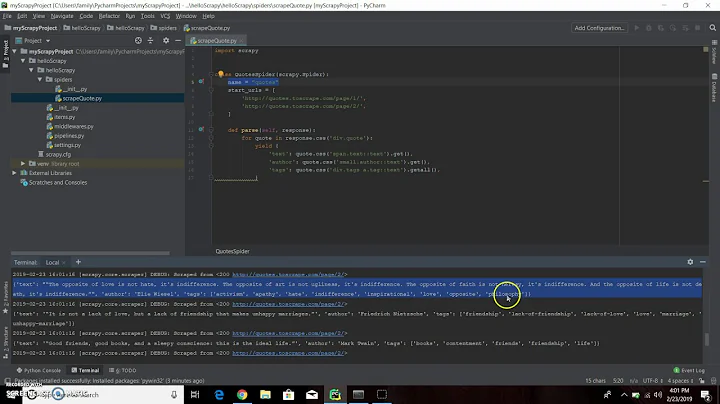

Inside your main.py put this code below.

from scrapy import cmdline

cmdline.execute("scrapy crawl spider".split())

And you need to create a "Run Configuration" to run your main.py.

Doing this, if you put a breakpoint at your code it will stop there.

Solution 3

As of 2018.1 this became a lot easier. You can now select Module name in your project's Run/Debug Configuration. Set this to scrapy.cmdline and the Working directory to the root dir of the scrapy project (the one with settings.py in it).

Like so:

Now you can add breakpoints to debug your code.

Solution 4

I am running scrapy in a virtualenv with Python 3.5.0 and setting the "script" parameter to /path_to_project_env/env/bin/scrapy solved the issue for me.

Solution 5

intellij idea also work.

create main.py:

#!/usr/bin/env python

# -*- coding: utf-8 -*-

#coding=utf-8

import sys

from scrapy import cmdline

def main(name):

if name:

cmdline.execute(name.split())

if __name__ == '__main__':

print('[*] beginning main thread')

name = "scrapy crawl stack"

#name = "scrapy crawl spa"

main(name)

print('[*] main thread exited')

print('main stop====================================================')

show below:

Related videos on Youtube

William Kinaan

Updated on November 14, 2020Comments

-

William Kinaan over 3 years

William Kinaan over 3 yearsI am working on Scrapy 0.20 with Python 2.7. I found PyCharm has a good Python debugger. I want to test my Scrapy spiders using it. Anyone knows how to do that please?

What I have tried

Actually I tried to run the spider as a script. As a result, I built that script. Then, I tried to add my Scrapy project to PyCharm as a model like this:File->Setting->Project structure->Add content root.But I don't know what else I have to do

-

Aymon Fournier over 9 years(<type 'exceptions.SyntaxError'>, SyntaxError("Non-ASCII character '\\xf3' in file /Library/python/2.7/site-packages/scrapy/cmdline.pyc on line 1, but no encoding declared;

Aymon Fournier over 9 years(<type 'exceptions.SyntaxError'>, SyntaxError("Non-ASCII character '\\xf3' in file /Library/python/2.7/site-packages/scrapy/cmdline.pyc on line 1, but no encoding declared; -

Pullie over 9 years@AymonFournier: Different problem, not related to the original question. See: stackoverflow.com/questions/10589620/…£

-

Nour Wolf over 9 yearsGreat solution! I also tried using the scrapy binary itself located mostly in: /usr/bin/scrapy as the script with same parameters or any other scrapy commands you want to debug and it worked just perfect. make sure the working directory is pointing to your scrapy project root where scrapy.cfg is located.

-

gm2008 about 9 yearsI am using Komodo IDE and ActivePython. Both this solution and Pullie's solution work.

gm2008 about 9 yearsI am using Komodo IDE and ActivePython. Both this solution and Pullie's solution work. -

Artur Gaspar almost 9 years@AymonFournier It seems you are trying to run a .pyc file. Run the corresponding .py file instead (scrapy/cmdline.py).

-

aristotll over 8 yearsThis is a awesome solution.

aristotll over 8 yearsThis is a awesome solution. -

suntoch over 8 yearsIf I'm doing that, my settings module is not found.

ImportError: No module named settingsI have checked that the working directory is the project directory. It's used within a Django project. Anyone else stumbled upon this problem? -

user1592380 almost 8 yearsI'm suprised this works, I thought scrapy didn't work with python 3

-

javamonkey79 over 7 yearsUsing this method, the configuration for scrapy seems to be ignored. I'm not sure why.

-

wyx over 7 yearsThis method is more useful.

-

effel over 7 yearsThanks, this worked with Python 3.5 and virtualenv. "script" as @rioted said and setting "working directory" to

effel over 7 yearsThanks, this worked with Python 3.5 and virtualenv. "script" as @rioted said and setting "working directory" toproject/crawler/crawler, i.e., the directory holding__init__.py. -

zsljulius over 6 yearsThis one saves my life! Thanks!

-

miguelfg over 6 yearsYou might want to configure multiple executions for different spiders, so accept spider name as an argument of your run config. Then import sys spider = sys.argv[1] cmdline.execute("scrapy crawl {}".format(spider).split())

miguelfg over 6 yearsYou might want to configure multiple executions for different spiders, so accept spider name as an argument of your run config. Then import sys spider = sys.argv[1] cmdline.execute("scrapy crawl {}".format(spider).split()) -

José Tomás Tocino over 6 yearsDefinitely the cleanest and fastest way of doing it, also the best way to store it in your CVS.

-

crifan over 6 yearsNot forget to config

crifan over 6 yearsNot forget to configWorking directory, otherwise will errorno active project, Unknown command: crawl, Use "scrapy" to see available commands, Process finished with exit code 2 -

NFB over 6 years@miguelfg, can you elaborate on how to pass a spider name as an argument in run config without doing so manually each time you run the project?

NFB over 6 years@miguelfg, can you elaborate on how to pass a spider name as an argument in run config without doing so manually each time you run the project? -

malla about 6 yearsI tried the accepted answer but couldn't make it work. This solution is easier and works perfectly.

malla about 6 yearsI tried the accepted answer but couldn't make it work. This solution is easier and works perfectly. -

Utkarsh Sharma over 4 yearsYou are a genius. Thanks for this solution.

Utkarsh Sharma over 4 yearsYou are a genius. Thanks for this solution. -

Rob about 3 yearsI use something similar to this called

Rob about 3 yearsI use something similar to this calledrunner.py. The reason this is important is because it intentionally loads the project settings file. You must do this if you are trying to load pipeline(s). -

Amrit almost 3 yearsit says : from scrapy.http.headers import Headers ImportError: cannot import name 'Headers' from partially initialized module 'scrapy.http.headers most likely due to a circular import Python38

Amrit almost 3 yearsit says : from scrapy.http.headers import Headers ImportError: cannot import name 'Headers' from partially initialized module 'scrapy.http.headers most likely due to a circular import Python38