import from CSV into Ruby array, with 1st field as hash key, then lookup a field's value given header row

Solution 1

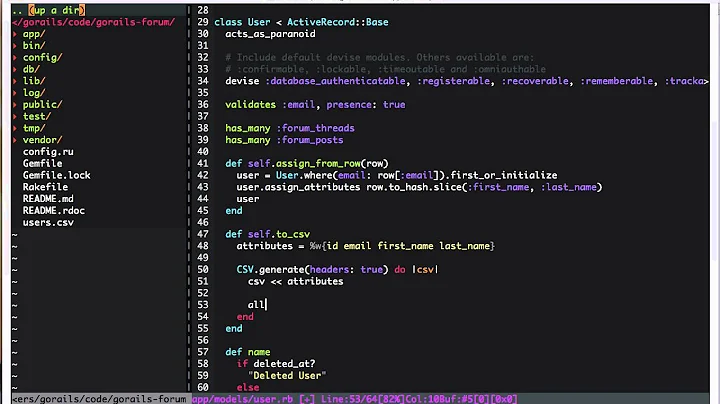

To get the best of both worlds (very fast reading from a huge file AND the benefits of a native Ruby CSV object) my code had since evolved into this method:

$stock="XTEX"

csv_data = CSV.parse IO.read(%`|sed -n "1p; /^#{$stock},/p" stocks.csv`), {:headers => true, :return_headers => false, :header_converters => :symbol, :converters => :all}

# Now the 1-row CSV object is ready for use, eg:

$company = csv_data[:company][0]

$volatility_month = csv_data[:volatility_month][0].to_f

$sector = csv_data[:sector][0]

$industry = csv_data[:industry][0]

$rsi14d = csv_data[:relative_strength_index_14][0].to_f

which is closer to my original method, but only reads in one record plus line 1 of the input csv file containing the headers. The inline sed instructions take care of that--and the whole thing is noticably instant. This this is better than last because now I can access all the fields from Ruby, and associatively, not caring about column numbers anymore as was the case with awk.

Solution 2

Like this (it works with other CSVs too, not just the one you specified):

require 'csv'

tickers = {}

CSV.foreach("stocks.csv", :headers => true, :header_converters => :symbol, :converters => :all) do |row|

tickers[row.fields[0]] = Hash[row.headers[1..-1].zip(row.fields[1..-1])]

end

Result:

{"ZUMZ"=>{:price=>30.0, :market_cap=>933.9}, "XTEX"=>{:price=>16.02, :market_cap=>811.57}, "AAC"=>{:price=>9.83, :market_cap=>80.02}}

You can access elements in this data structure like this:

puts tickers["XTEX"][:price] #=> 16.02

Edit (according to comment): For selecting elements, you can do something like

tickers.select { |ticker, vals| vals[:price] > 10.0 }

Solution 3

CSV.read(file_path, headers:true, header_converters: :symbol, converters: :all).collect do |row|

Hash[row.collect { |c,r| [c,r] }]

end

Solution 4

To add on to Michael Kohl's answer, if you want to access the elements in the following manner

puts tickers[:price]["XTEX"] #=> 16.02

You can try the following code snippet:

CSV.foreach("Workbook1.csv", :headers => true, :header_converters => :symbol, :converters => :all) do |row|

hash_row = row.headers[1..-1].zip( (Array.new(row.fields.length-1, row.fields[0]).zip(row.fields[1..-1])) ).to_h

hash_row.each{|key, value| tickers[key] ? tickers[key].merge!([value].to_h) : tickers[key] = [value].to_h}

end

Related videos on Youtube

Marcos

Pioneers are the ones with arrows in their backs. Investment Portfolio Software, Trade Automation Designer of infinite compression algorithms, and other research projects

Updated on March 17, 2020Comments

-

Marcos over 4 years

Maybe somebody can help me.

Starting with a CSV file like so:

Ticker,"Price","Market Cap" ZUMZ,30.00,933.90 XTEX,16.02,811.57 AAC,9.83,80.02I manage to read them into an array:

require 'csv' tickers = CSV.read("stocks.csv", {:headers => true, :return_headers => true, :header_converters => :symbol, :converters => :all} )To verify data, this works:

puts tickers[1][:ticker] ZUMZHowever this doesn't:

puts tickers[:ticker => "XTEX"][:price]How would I go about turning this array into a hash using the ticker field as unique key, such that I could easily look up any other field associatively as defined in line 1 of the input? Dealing with many more columns and rows.

Much appreciated!

-

Marcos over 11 yearsEDIT: the solution I now use is stackoverflow.com/a/9851131/1069375

-

-

Marcos over 12 yearsWow quick reply thank you!! Still getting the hang of this lang. Next I'm researching how to apply one or more filters(eg. return this hashed array w/all prices over 2.01)

-

Michael Kohl over 12 yearsIf this answer helped you, please upvote and/or accept (the little tick mark below the voting arrows) it, that's StackOverflow etiquette. I'll update my answer to address the filtering question :-)

-

Marcos over 12 yearsMy 2.8MB file with under 7000 rows and ~40 columns takes way too long on this foreach loop, over 5min, reading in only a few cols [1..4] testing in irb. Have to stick to snappy fast awk query to keep my script under 20s till I can figure this out within Ruby :(

-

Marcos over 12 yearsDang, ain't got the reputation to upvote you...been consulting this great site for yrs but only now created acct :)

-

Michael Kohl over 12 yearsThen just accept the answer, you should always be able to do that. As for the speed, Ruby isn't known for being fast, but 5 minutes does sound like a lot. You could try JRuby, but if you have a 20s limit, JVM startup may offset potential speed gains. But then there's nothing wrong with using

awkfor parsing CSV, it's actually very well suited for the task :-) -

Marcos over 12 yearsBTW my sample CSV datasource tinyurl.com/AllStocksFinviz if it helps. Thanks for all explaining & examples!

-

Michael Kohl over 12 yearsThis discussion is getting too long, but on my laptop this is how long it took me to parse the entire file (all columns):

ruby foo.rb Downloads/finviz.csv 36,00s user 1,07s system 92% cpu 40,260 total. If you are on 1.8 you should try theFasterCSVgem. -

pguardiario over 12 yearstry without the :converters => :all param. I'm not sure why that's there and it will likely slow it down a bit.

-

Marcos over 12 yearsThe problem with

-F,is thatawktreats every comma as delimiter even when it occurs inside a quoted field, like a company's name:"Apple, Inc."Only"Applereturns when I ask for field$15of that row. -

Marcos over 12 yearsNow that my framework is maturing, and needing more fields of various types to read and process flexibly, I'm looking to shift back from my fast

awksolution to 100% native Ruby. But it has to be just as fast and available to my scripts, so it seemsmemcachedwill be a good fit for me. I.e. I will structure to preload my CSV file into a memory object for Ruby to later use instantly. -

Marnen Laibow-Koser over 11 yearsThis isn't Perl -- you don't need the

$, and in fact you shouldn't have it, because in Ruby,$indicates global variables, and use of globals is generally bad practice. -

Marcos over 11 yearsIn this case the globals are intentional. But I do realize that if the entire program were more OO, things would be written better.

-

Marnen Laibow-Koser over 11 yearsExactly. Excessive use of globals (i.e. really any use except for things like configuration data) usually indicates a design problem that you'll want to fix.

-

brentiumbrent over 11 yearsI wrote a gem that yields hashed CSV records, with no extra effort: rubygems.org/gems/hasherize_csv

-

Marcos almost 10 yearsDownvoter note: This solution crossing multiple technologies (not just Ruby) has been our chosen one for years, since the original purely-Ruby answer, while it works, is highly inefficient, and simply too slow in production.