Improve performance on SQL query with Nested Loop - PostgreSQL

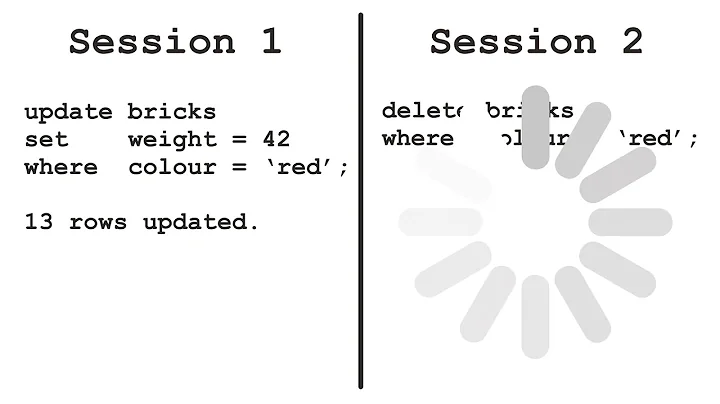

Ok, it looks like there's problem with optimizer estimations. He thiks that for april there will be only 1 row so he choose NESTED LOOP which is very inefficient for big number of rows (118,990 in that case).

- Perform

VACUUM ANALYZEfor every table. This will clean up dead tuples and refresh statistics. - consider adding index based on

dateslikeCREATE INDEX date_stat_idx ON <table with date_stat> USING btree (date_stat);

Rerun the query,

Related videos on Youtube

Dabeliuteef

Updated on June 04, 2022Comments

-

Dabeliuteef almost 2 years

Dabeliuteef almost 2 yearsI am using PostgreSQL and I have a weird problem with my SQL query. Depending on wich date paramter I'm using. My request doesn't do the same operation.

This is my working query :

SELECT DISTINCT app.id_application FROM stat sj LEFT OUTER JOIN groupe gp ON gp.id_groupe = sj.id_groupe LEFT OUTER JOIN application app ON app.id_application = gp.id_application WHERE date_stat >= '2016/3/01' AND date_stat <= '2016/3/31' AND ( date_stat = date_gen-1 or (date_gen = '2016/04/01' AND date_stat = '2016/3/31')) AND app.id_application IS NOT NULLThis query takes around 2 secondes (which is OKAY for me because I have a lots of rows). When I run EXPLAIN ANALYSE for this query I have this:

HashAggregate (cost=375486.95..375493.62 rows=667 width=4) (actual time=2320.541..2320.656 rows=442 loops=1) -> Hash Join (cost=254.02..375478.99 rows=3186 width=4) (actual time=6.144..2271.984 rows=263274 loops=1) Hash Cond: (gp.id_application = app.id_application) -> Hash Join (cost=234.01..375415.17 rows=3186 width=4) (actual time=5.926..2200.671 rows=263274 loops=1) Hash Cond: (sj.id_groupe = gp.id_groupe) -> Seq Scan on stat sj (cost=0.00..375109.47 rows=3186 width=8) (actual time=3.196..2068.357 rows=263274 loops=1) Filter: ((date_stat >= '2016-03-01'::date) AND (date_stat <= '2016-03-31'::date) AND ((date_stat = (date_gen - 1)) OR ((date_gen = '2016-04-01'::date) AND (date_stat = '2016-03-31'::date)))) Rows Removed by Filter: 7199514 -> Hash (cost=133.45..133.45 rows=8045 width=12) (actual time=2.677..2.677 rows=8019 loops=1) Buckets: 1024 Batches: 1 Memory Usage: 345kB -> Seq Scan on groupe gp (cost=0.00..133.45 rows=8045 width=12) (actual time=0.007..1.284 rows=8019 loops=1) -> Hash (cost=11.67..11.67 rows=667 width=4) (actual time=0.206..0.206 rows=692 loops=1) Buckets: 1024 Batches: 1 Memory Usage: 25kB -> Seq Scan on application app (cost=0.00..11.67 rows=667 width=4) (actual time=0.007..0.101 rows=692 loops=1) Filter: (id_application IS NOT NULL) Total runtime: 2320.855 msNow, When I'm trying the same query for the current month (we are the 6th of April, so I'm trying to get all the application_id of April) with the same query

SELECT DISTINCT app.id_application FROM stat sj LEFT OUTER JOIN groupe gp ON gp.id_groupe = sj.id_groupe LEFT OUTER JOIN application app ON app.id_application = gp.id_application WHERE date_stat >= '2016/04/01' AND date_stat <= '2016/04/30' AND ( date_stat = date_gen-1 or ( date_gen = '2016/05/01' AND date_job = '2016/04/30')) AND app.id_application IS NOT NULLThis query takes now 120 seconds. So I also ran EXPLAIN ANALYZE on this query and now it doesn't have the same operations:

HashAggregate (cost=375363.50..375363.51 rows=1 width=4) (actual time=186716.468..186716.532 rows=490 loops=1) -> Nested Loop (cost=0.00..375363.49 rows=1 width=4) (actual time=1.945..186619.404 rows=118990 loops=1) Join Filter: (gp.id_application = app.id_application) Rows Removed by Join Filter: 82222090 -> Nested Loop (cost=0.00..375343.49 rows=1 width=4) (actual time=1.821..171458.237 rows=118990 loops=1) Join Filter: (sj.id_groupe = gp.id_groupe) Rows Removed by Join Filter: 954061820 -> Seq Scan on stat sj (cost=0.00..375109.47 rows=1 width=8) (actual time=0.235..1964.423 rows=118990 loops=1) Filter: ((date_stat >= '2016-04-01'::date) AND (date_stat <= '2016-04-30'::date) AND ((date_stat = (date_gen - 1)) OR ((date_gen = '2016-05-01'::date) AND (date_stat = '2016-04-30'::date)))) Rows Removed by Filter: 7343798 -> Seq Scan on groupe gp (cost=0.00..133.45 rows=8045 width=12) (actual time=0.002..0.736 rows=8019 loops=118990) -> Seq Scan on application app (cost=0.00..11.67 rows=667 width=4) (actual time=0.003..0.073 rows=692 loops=118990) Filter: (id_application IS NOT NULL) Total runtime: 186716.635 msSo I decided to search where the problem came from by reducing the number of conditions from my query until the performances is acceptable again.

So with only this parameter

WHERE date_stat >= '2016/04/01'It takes only 1.9secondes (like the first working query) and it's also working with 2 parameters :

WHERE date_stat >= '2016/04/01' AND app.id_application IS NOT NULLBUT when I try to add one of those line I have the Nested loop in the Explain

AND date_stat <= '2016/04/30' AND ( date_stat = date_gen-1 or ( date_gen = '2016/05/01' AND date_stat = '2016/04/30'))Does someone have any idea where it could come from?

-

Gabriel's Messanger about 8 yearsPerform

EXPLAIN ANALYZEon both queries and add both outputs to your question. Also do you have any indexes on that tables? -

a_horse_with_no_name about 8 yearsThe problem is that in the second query Postgres completely underestimates the rows that are returned by that condition (estimated: 1 row, actual: 118990 rows). So this looks like stale statistics (in the first query the number of rows is also underestimated, but that doesn't cause a bad plan). Check if running

a_horse_with_no_name about 8 yearsThe problem is that in the second query Postgres completely underestimates the rows that are returned by that condition (estimated: 1 row, actual: 118990 rows). So this looks like stale statistics (in the first query the number of rows is also underestimated, but that doesn't cause a bad plan). Check if runninganalyze stat;changes anything. It doesn't look like as if you have an index onstat (date_stat). Creating one should help as well.

-