Insert the whole value of DataTable bulk into postgreSQL table

Solution 1

Simple Insert Using Parameters

Your project will need to reference the following assembly: Npgsql. If this reference is not visible within Visual Studio, then:

- browse to the connector's installation folder

- Execute:

GACInstall.exe - Restart Visual Studio.

Sample Table

CREATE TABLE "OrderHistory"

(

"OrderId" bigint NOT NULL,

"TotalAmount" bigint,

CONSTRAINT "OrderIdPk" PRIMARY KEY ("OrderId")

)

WITH (

OIDS=FALSE

);

ALTER TABLE "OrderHistory"

OWNER TO postgres;

GRANT ALL ON TABLE "OrderHistory" TO postgres;

GRANT ALL ON TABLE "OrderHistory" TO public;

ALTER TABLE "OrderHistory" ALTER COLUMN "OrderId" SET (n_distinct=1);

GRANT SELECT("OrderId"), UPDATE("OrderId"), INSERT("OrderId"), REFERENCES("OrderId") ON "OrderHistory" TO public;

GRANT SELECT("TotalAmount"), UPDATE("TotalAmount"), INSERT("TotalAmount"), REFERENCES("TotalAmount") ON "OrderHistory" TO public;

Sample Code

Be sure to use the following directives:

using Npgsql;

using NpgsqlTypes;

Enter the following source code into your method:

// Make sure that the user has the INSERT privilege for the OrderHistory table.

NpgsqlConnection connection = new NpgsqlConnection("PORT=5432;TIMEOUT=15;POOLING=True;MINPOOLSIZE=1;MAXPOOLSIZE=20;COMMANDTIMEOUT=20;COMPATIBLE=2.2.4.3;DATABASE=test;HOST=127.0.0.1;PASSWORD=test;USER ID=test");

connection.Open();

DataSet dataSet = new DataSet();

NpgsqlDataAdapter dataAdapter = new NpgsqlDataAdapter("select * from OrderHistory where OrderId=-1", connection);

dataAdapter.InsertCommand = new NpgsqlCommand("insert into OrderHistory(OrderId, TotalAmount) " +

" values (:a, :b)", connection);

dataAdapter.InsertCommand.Parameters.Add(new NpgsqlParameter("a", NpgsqlDbType.Bigint));

dataAdapter.InsertCommand.Parameters.Add(new NpgsqlParameter("b", NpgsqlDbType.Bigint));

dataAdapter.InsertCommand.Parameters[0].Direction = ParameterDirection.Input;

dataAdapter.InsertCommand.Parameters[1].Direction = ParameterDirection.Input;

dataAdapter.InsertCommand.Parameters[0].SourceColumn = "OrderId";

dataAdapter.InsertCommand.Parameters[1].SourceColumn = "TotalAmount";

dataAdapter.Fill(dataSet);

DataTable newOrders = dataSet.Tables[0];

DataRow newOrder = newOrders.NewRow();

newOrder["OrderId"] = 20;

newOrder["TotalAmount"] = 20.0;

newOrders.Rows.Add(newOrder);

DataSet ds2 = dataSet.GetChanges();

dataAdapter.Update(ds2);

dataSet.Merge(ds2);

dataSet.AcceptChanges();

connection.Close();

Thoughts On Performance

The original posting made no mention of performance requirements. It was requested that the solution must:

- insert using a

DataTable - insert data without using a loop

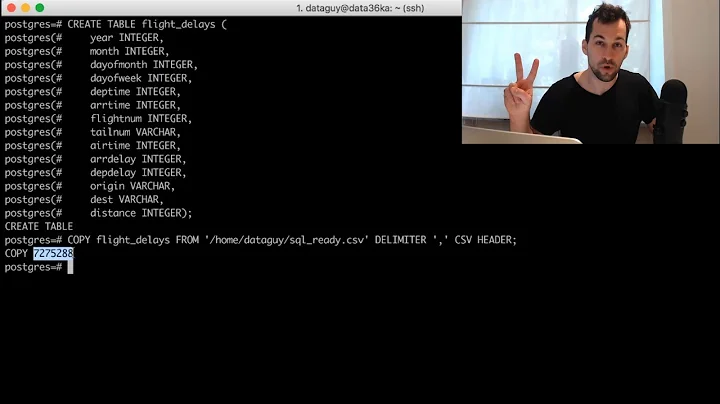

If you are inserting significant amounts of data, then I would suggest that you take a look at your performance options. The Postgres documentation suggests that you:

- Disable Autocommit

- Use the

COPYcommand - Remove indexes

- Remove Foreign Key Constraints

- etc.

For more information about optimizing Postgres inserts, please take a look at:

- PostgresSql.org: Inserting Data

- PostgresSql.org: Insert + Performance Tips

- StackOverflow: How to speed up insertion performance in PostgreSQL

Also, there are a lot of other factors that can impact a system's performance. For a high level introduction, take a look at:

-

ADO.NET SQL Server Performance bottleneck

- This posting outlines general (i.e. non-SqlServer) strategies for optimizing performance.

Other Options

- Does the .NET connector support the Postgres

Copycommand?- If not, you can download the source code for the

Npgsqlconnector and add your ownBulkCopy()method. Be sure to review the source code's licensing agreement first.

- If not, you can download the source code for the

- Check to see if Postgres supports Table Value Parameters.

- This approach allows you to pass in a table into a

Postgresfunction which can then insert the data directly into the destination.

- This approach allows you to pass in a table into a

- Purchase a Postgres .NET connector from a vendor which includes the required feature.

Additional References

- Postgres .NET Connector - free & open source

Solution 2

I've got the same problem a time ago. It seems there is no "ready to use" solution, till yet.

I've read this post and build a similar solution at that time, which is in productive use till today. Its based on text querys which reads files from STDIN. It uses the ADO.NET Postgre Data Provider Npgsql. You can create a large string (or temporary file, cause of memory usage) based on your DataTable and use that one as text query with the COPY command. In our case it was much more faster than inser teach row.

Maybe this isn't a complete solution, but may a good point to start and anything i know about it. :)

Solution 3

I have also found, that there are no 'ready to use' solution yet. Probably you can check my other answer in which I describe a little helper I have created for this problem, making use of another helper really easy: https://stackoverflow.com/a/46063313/6654362 I think that's currently the best solution. I posted the solution from the link in case the post died.

Edit: I have recently run into similar problem, but we were using Postgresql. I wanted to use effective bulkinsert, what turned out to be pretty difficult. I haven't found any proper free library to do so on this DB. I have only found this helper: https://bytefish.de/blog/postgresql_bulk_insert/ which is also on Nuget. I have written a small mapper, which auto mapped properties the way Entity Framework:

public static PostgreSQLCopyHelper<T> CreateHelper<T>(string schemaName, string tableName)

{

var helper = new PostgreSQLCopyHelper<T>("dbo", "\"" + tableName + "\"");

var properties = typeof(T).GetProperties();

foreach(var prop in properties)

{

var type = prop.PropertyType;

if (Attribute.IsDefined(prop, typeof(KeyAttribute)) || Attribute.IsDefined(prop, typeof(ForeignKeyAttribute)))

continue;

switch (type)

{

case Type intType when intType == typeof(int) || intType == typeof(int?):

{

helper = helper.MapInteger("\"" + prop.Name + "\"", x => (int?)typeof(T).GetProperty(prop.Name).GetValue(x, null));

break;

}

case Type stringType when stringType == typeof(string):

{

helper = helper.MapText("\"" + prop.Name + "\"", x => (string)typeof(T).GetProperty(prop.Name).GetValue(x, null));

break;

}

case Type dateType when dateType == typeof(DateTime) || dateType == typeof(DateTime?):

{

helper = helper.MapTimeStamp("\"" + prop.Name + "\"", x => (DateTime?)typeof(T).GetProperty(prop.Name).GetValue(x, null));

break;

}

case Type decimalType when decimalType == typeof(decimal) || decimalType == typeof(decimal?):

{

helper = helper.MapMoney("\"" + prop.Name + "\"", x => (decimal?)typeof(T).GetProperty(prop.Name).GetValue(x, null));

break;

}

case Type doubleType when doubleType == typeof(double) || doubleType == typeof(double?):

{

helper = helper.MapDouble("\"" + prop.Name + "\"", x => (double?)typeof(T).GetProperty(prop.Name).GetValue(x, null));

break;

}

case Type floatType when floatType == typeof(float) || floatType == typeof(float?):

{

helper = helper.MapReal("\"" + prop.Name + "\"", x => (float?)typeof(T).GetProperty(prop.Name).GetValue(x, null));

break;

}

case Type guidType when guidType == typeof(Guid):

{

helper = helper.MapUUID("\"" + prop.Name + "\"", x => (Guid)typeof(T).GetProperty(prop.Name).GetValue(x, null));

break;

}

}

}

return helper;

}

I use it the following way (I had entity named Undertaking):

var undertakingHelper = BulkMapper.CreateHelper<Model.Undertaking>("dbo", nameof(Model.Undertaking));

undertakingHelper.SaveAll(transaction.UnderlyingTransaction.Connection as Npgsql.NpgsqlConnection, undertakingsToAdd));

I showed an example with transaction, but it can also be done with normal connection retrieved from context. undertakingsToAdd is enumerable of normal entity records, which I want to bulkInsert into DB.

This solution, to which I've got after few hours of research and trying, is as you could expect much faster and finally easy to use and free! I really advice you to use this solution, not only for the reasons mentioned above, but also because it's the only one with which I had no problems with Postgresql itself, many other solutions work flawlessly for example with SqlServer.

Related videos on Youtube

Karan Singh

Updated on October 12, 2020Comments

-

Karan Singh over 3 years

In SQL we do something like this for bulk insert to datatable

SqlBulkCopy copy = new SqlBulkCopy(sqlCon); copy.DestinationTableName = strDestinationTable; copy.WriteToServer(dtFrom); Blockquotebut in PostgreSQL how to do this operation

-

Vivek S. almost 9 years

Vivek S. almost 9 years -

Karan Singh almost 9 yearsgreat article sir @wingedpanther still not getting idea about that because requirement is " DataTable" data directly insert into table without loop in c# MVC DataTable contains more then 1 lac record

-

Vivek S. almost 9 yearsfor bulk insert in PostgreSQL Database you can use

Vivek S. almost 9 yearsfor bulk insert in PostgreSQL Database you can useCOPY, import the data into acsv(avoid datatable) and thencopythem fromcsvtotable -

Amirhossein Mehrvarzi almost 9 yearsYou may wanna convert it into

Amirhossein Mehrvarzi almost 9 yearsYou may wanna convert it intoCSVthen insert into the desired place. or not?

-

-

Konstantin almost 9 yearsI think this is the best solution. But also set dataAdapter.UpdateBatchSize and start a transaction (connection.BeginTransaction()). For Mysql this gives an enormous boost, aobut 30x.

-

Anonymous Creator almost 6 yearsI am getting this exception. any idea? -> InvalidOperationException: Update requires a valid UpdateCommand when passed DataRow collection with modified rows.

Anonymous Creator almost 6 yearsI am getting this exception. any idea? -> InvalidOperationException: Update requires a valid UpdateCommand when passed DataRow collection with modified rows. -

Anonymous Creator almost 6 yearsOk. I tried to add rows using newOrders.Merge(dt); But it didnt work (gave exception of above comment). So still I have to loop through datatable to add rows.

Anonymous Creator almost 6 yearsOk. I tried to add rows using newOrders.Merge(dt); But it didnt work (gave exception of above comment). So still I have to loop through datatable to add rows. -

rmpt almost 6 yearslogin is required

-

devmb almost 6 yearsYes, looks like the article has been removed.

-

HamidKhan almost 5 yearscan you please provide new link, if you have

-

devmb almost 5 years@HamidKhan Sorry i cant. It was an article series by Alexander Kuznetsov on SQLblog.com from 2013 which has been removed. I guess it's outdated.