Instance Normalisation vs Batch Normalisation

Solution 1

Definition

Let's begin with the strict definition of both:
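The formula images did not survive here. What follows is a reconstruction, assuming the notation of the instance normalization paper cited in the question (arXiv:1607.08022): an input tensor x of shape T × C × W × H, where t indexes the image in the batch, i the channel, j, k (and the summation indices l, m) the spatial location, and ε is a small constant for numerical stability. It may not match the original images symbol for symbol.

```latex
% Batch normalization: one mean/variance per channel i, pooled over the batch and spatial locations
y_{tijk} = \frac{x_{tijk} - \mu_i}{\sqrt{\sigma_i^2 + \epsilon}}, \quad
\mu_i = \frac{1}{HWT} \sum_{t=1}^{T} \sum_{l=1}^{W} \sum_{m=1}^{H} x_{tilm}, \quad
\sigma_i^2 = \frac{1}{HWT} \sum_{t=1}^{T} \sum_{l=1}^{W} \sum_{m=1}^{H} \left( x_{tilm} - \mu_i \right)^2

% Instance normalization: one mean/variance per image t and channel i, pooled over spatial locations only
y_{tijk} = \frac{x_{tijk} - \mu_{ti}}{\sqrt{\sigma_{ti}^2 + \epsilon}}, \quad
\mu_{ti} = \frac{1}{HW} \sum_{l=1}^{W} \sum_{m=1}^{H} x_{tilm}, \quad
\sigma_{ti}^2 = \frac{1}{HW} \sum_{l=1}^{W} \sum_{m=1}^{H} \left( x_{tilm} - \mu_{ti} \right)^2
```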

As you can see, they do the same thing, except for the number of input tensors that are normalized jointly. The batch version normalizes all images across the batch and spatial locations (this is the CNN case; in the ordinary, non-convolutional case it normalizes across the batch dimension only); the instance version normalizes each element of the batch independently, i.e., across spatial locations only.
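To make the difference concrete, here is a minimal NumPy sketch, assuming a (T, C, H, W) layout (batch, channels, height, width) and leaving out the learnable scale and shift parameters. The two functions differ only in the axes the mean and variance are pooled over:

```python
import numpy as np

def batch_norm(x, eps=1e-5):
    """Normalize x of shape (T, C, H, W) with one mean/variance per channel,
    pooled over the batch (axis 0) and spatial locations (axes 2, 3)."""
    mean = x.mean(axis=(0, 2, 3), keepdims=True)  # shape (1, C, 1, 1)
    var = x.var(axis=(0, 2, 3), keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

def instance_norm(x, eps=1e-5):
    """Normalize x of shape (T, C, H, W) with one mean/variance per image and
    channel, pooled over spatial locations (axes 2, 3) only."""
    mean = x.mean(axis=(2, 3), keepdims=True)  # shape (T, C, 1, 1)
    var = x.var(axis=(2, 3), keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

rng = np.random.default_rng(0)
x = rng.normal(loc=3.0, scale=2.0, size=(4, 3, 8, 8))  # T=4 images, C=3 channels
y_bn = batch_norm(x)
y_in = instance_norm(x)
```

Both functions use the population variance (no Bessel correction), which matches dividing by the number of pooled elements.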

In other words, where batch norm computes one mean and standard deviation per channel (thus giving the activations of the whole batch zero mean and unit variance jointly), instance norm computes T of them per channel, one per image, standardizing each individual image's distribution but not the batch jointly.
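A quick sketch of this counting argument, with one caveat: normalizing only fixes the mean and variance, it does not make a distribution Gaussian. Assuming toy data in a (T, C, H, W) layout where each image has its own offset and scale, batch norm produces C statistics and leaves per-image means nonzero, while instance norm produces T × C statistics and zeros every image's mean:

```python
import numpy as np

rng = np.random.default_rng(1)
T, C, H, W = 4, 3, 8, 8
eps = 1e-5
# Give each image its own offset and scale so that per-image statistics differ.
offsets = rng.uniform(-5.0, 5.0, size=(T, 1, 1, 1))
scales = rng.uniform(0.5, 3.0, size=(T, 1, 1, 1))
x = offsets + scales * rng.normal(size=(T, C, H, W))

# Batch norm: C statistics (one per channel), pooled over batch and space.
bn_mean = x.mean(axis=(0, 2, 3), keepdims=True)
bn_var = x.var(axis=(0, 2, 3), keepdims=True)
y_bn = (x - bn_mean) / np.sqrt(bn_var + eps)

# Instance norm: T * C statistics (one per image and channel), pooled over space.
in_mean = x.mean(axis=(2, 3), keepdims=True)
in_var = x.var(axis=(2, 3), keepdims=True)
y_in = (x - in_mean) / np.sqrt(in_var + eps)

print(bn_mean.size, in_mean.size)  # 3 12
# Per-image means after each normalization:
print(y_bn.mean(axis=(1, 2, 3)))  # nonzero: images keep their relative offsets
print(y_in.mean(axis=(1, 2, 3)))  # approximately 0 for every image
```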

A simple analogy: during the data pre-processing step, you can normalize the data on a per-image basis or normalize the whole dataset.

Credit: the formulas are from here.

Which normalization is better?

The answer depends on the network architecture, in particular on what is done after the normalization layer. Image classification networks usually stack the feature maps together and wire them to the FC layer, which shares weights across the batch (the modern way is to use a CONV layer instead of FC, but the argument still applies).

This is where the distribution nuances start to matter: the same neuron is going to receive input from all images. If the variance across the batch is high, the gradient from the small activations will be completely suppressed by the high activations, which is exactly the problem that batch norm tries to solve. That's why it's quite possible that per-instance normalization won't improve network convergence at all.

On the other hand, batch normalization adds extra noise to training, because the result for a particular instance depends on its neighbor instances in the batch. As it turns out, this kind of noise may be either good or bad for the network. This is well explained in the "Weight Normalization" paper by Tim Salimans et al., which names recurrent neural networks and reinforcement learning DQNs as noise-sensitive applications. I'm not entirely sure, but I think the same noise sensitivity was the main issue in the stylization task, which instance norm tried to fight. It would be interesting to check whether weight norm performs better for this particular task.
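The batch-dependence noise is easy to see in a minimal NumPy sketch (assumed (T, C, H, W) layout, no learnable parameters, toy random data): the same image placed in two different batches gets two different batch-norm outputs, but the same instance-norm output.

```python
import numpy as np

def batch_norm(x, eps=1e-5):
    # Statistics pooled over batch and spatial axes: one mean/variance per channel.
    mean = x.mean(axis=(0, 2, 3), keepdims=True)
    var = x.var(axis=(0, 2, 3), keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

def instance_norm(x, eps=1e-5):
    # Statistics pooled over spatial axes only: one mean/variance per image and channel.
    mean = x.mean(axis=(2, 3), keepdims=True)
    var = x.var(axis=(2, 3), keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

rng = np.random.default_rng(2)
image = rng.normal(size=(1, 3, 8, 8))
# Two batches that share the same first image but have different neighbors.
batch_a = np.concatenate([image, rng.normal(0.0, 1.0, size=(3, 3, 8, 8))])
batch_b = np.concatenate([image, rng.normal(4.0, 3.0, size=(3, 3, 8, 8))])

bn_a, bn_b = batch_norm(batch_a)[0], batch_norm(batch_b)[0]
in_a, in_b = instance_norm(batch_a)[0], instance_norm(batch_b)[0]

print(np.abs(bn_a - bn_b).max())  # clearly nonzero: BN output depends on the neighbors
print(np.abs(in_a - in_b).max())  # zero: IN output depends on the image alone
```

This is also why instance norm behaves the same at training and inference time, while batch norm has to switch to running statistics at test time.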

Can you combine batch and instance normalization?

Though combining them yields a valid neural network, there's no practical use for it. Batch normalization noise either helps the learning process (in which case it's preferable) or hurts it (in which case it's better to omit it). In both cases, leaving the network with one type of normalization is likely to improve performance.

Solution 2

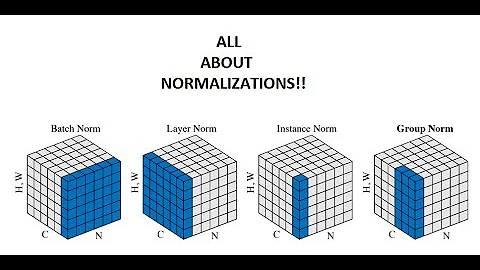

Great question, and already answered nicely. Just to add: I found this visualisation from Kaiming He's Group Norm paper helpful.

Source: link to article on Medium contrasting the Norms
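The figure's four schemes boil down to a choice of axes. Here is a minimal NumPy sketch, assuming the figure's notation (N = batch, C = channels, H, W = spatial) and omitting learnable parameters; `normalize` and the group counts are illustrative choices, not code from the paper:

```python
import numpy as np

def normalize(x, axes, eps=1e-5):
    # Standardize x using a mean/variance pooled over the given axes.
    mean = x.mean(axis=axes, keepdims=True)
    var = x.var(axis=axes, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

def batch_norm(x):
    # x: (N, C, H, W); pool over N, H, W, separately for each channel.
    return normalize(x, (0, 2, 3))

def layer_norm(x):
    # Pool over C, H, W, separately for each sample.
    return normalize(x, (1, 2, 3))

def instance_norm(x):
    # Pool over H, W, separately for each sample and channel.
    return normalize(x, (2, 3))

def group_norm(x, groups):
    # Split the C channels into `groups` groups and pool over each group's
    # channels and H, W, separately for each sample.
    n, c, h, w = x.shape
    assert c % groups == 0, "C must be divisible by the number of groups"
    grouped = x.reshape(n, groups, c // groups, h, w)
    return normalize(grouped, (2, 3, 4)).reshape(n, c, h, w)

x = np.random.default_rng(3).normal(size=(2, 6, 4, 4))
```

As a sanity check, group norm with one group reduces to layer norm and with C groups to instance norm, as the Group Norm paper notes. Likewise, for a batch of a single image (N = 1), batch_norm and instance_norm compute identical outputs when batch statistics are used.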

Solution 3

I wanted to add more information to this question since there are some more recent works in this area. Your intuition

use instance normalisation for image classification where class label should not depend on the contrast of input image

is partly correct. I would say that a pig in broad daylight is still a pig when the image is taken at night or at dawn. However, this does not mean that using instance normalization throughout the network will give you better results. Here are some reasons:

- Color distribution still plays a role. An object is more likely to be an apple than an orange if it has a lot of red.

- In later layers, you can no longer think of instance normalization as contrast normalization. Class-specific details emerge in deeper layers, and normalizing them per instance will hurt the model's performance greatly.
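A toy sketch of both points, assuming a (T, C, H, W) layout with C = 3 color channels and modeling a contrast change plus a red tint as a positive per-channel scale and offset (real lighting changes are not this simple): instance norm maps the original and the tinted image to nearly the same output, so the "lots of red" cue disappears along with the contrast change.

```python
import numpy as np

def instance_norm(x, eps=1e-5):
    # x: (T, C, H, W); one mean/variance per image and channel.
    mean = x.mean(axis=(2, 3), keepdims=True)
    var = x.var(axis=(2, 3), keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

rng = np.random.default_rng(4)
image = rng.uniform(0.0, 1.0, size=(1, 3, 16, 16))  # toy RGB image

# Same scene with lower contrast and a strong red tint: a positive per-channel
# scale plus a per-channel offset (red channel first).
scale = np.array([0.5, 0.3, 0.3]).reshape(1, 3, 1, 1)
shift = np.array([0.4, 0.0, 0.0]).reshape(1, 3, 1, 1)
tinted = scale * image + shift

diff = np.abs(instance_norm(image) - instance_norm(tinted)).max()
print(diff)  # small: both the contrast change and the red tint are normalized away
```

The small remaining difference comes only from the ε term and floating-point round-off.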

IBN-Net uses both batch normalization and instance normalization in one model. It puts instance normalization only in the early layers and achieves improvements in both accuracy and the ability to generalize. The authors have open-sourced their code here.
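A minimal sketch of the channel-split idea, assuming the IBN-a variant described in the IBN-Net paper, where half of the channels are instance-normalized and the other half batch-normalized. `ibn_split` and `in_fraction` are hypothetical names, learnable parameters are omitted, and this is not the authors' released code:

```python
import numpy as np

def batch_norm(x, eps=1e-5):
    # x: (T, C, H, W); one mean/variance per channel over batch and space.
    mean = x.mean(axis=(0, 2, 3), keepdims=True)
    var = x.var(axis=(0, 2, 3), keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

def instance_norm(x, eps=1e-5):
    # One mean/variance per image and channel over space.
    mean = x.mean(axis=(2, 3), keepdims=True)
    var = x.var(axis=(2, 3), keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

def ibn_split(x, in_fraction=0.5):
    """Hypothetical helper: instance-normalize the first part of the channels,
    batch-normalize the rest, and concatenate along the channel axis."""
    n_in = int(x.shape[1] * in_fraction)
    return np.concatenate(
        [instance_norm(x[:, :n_in]), batch_norm(x[:, n_in:])], axis=1
    )

x = np.random.default_rng(5).normal(size=(4, 8, 6, 6))
y = ibn_split(x)  # channels 0-3: IN, channels 4-7: BN
```

Following the answer above, such a layer would replace batch norm only in the early layers, with plain batch norm kept in the deeper ones.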

Solution 4

IN provides visual and appearance invariance, while BN accelerates training and preserves discriminative features. IN is preferred in shallow layers (the first layers of a CNN) to remove appearance variation, whereas in deep layers (the last CNN layers) IN should be reduced and BN preferred, in order to maintain discrimination.


Ruppesh Nalwaya

Updated on July 05, 2022

Comments

-

Ruppesh Nalwaya almost 2 years

I understand that Batch Normalisation helps with faster training by pushing the activations towards a unit Gaussian distribution and thus tackling the vanishing gradients problem. Batch norm is applied differently at training time (using the mean/var of each batch) and at test time (using the finalized running mean/var from the training phase).
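The train/test difference described above can be sketched as follows. This is a simplified illustration, not any library's implementation: `SimpleBatchNorm` is a hypothetical class, the momentum of 0.1 is an assumed value (it happens to be PyTorch's default), and the learnable scale/shift are omitted.

```python
import numpy as np

class SimpleBatchNorm:
    """Hypothetical minimal batch norm for (T, C, H, W) inputs, without
    learnable scale/shift. momentum=0.1 is an assumed running-average rate."""

    def __init__(self, num_channels, momentum=0.1, eps=1e-5):
        self.momentum = momentum
        self.eps = eps
        self.running_mean = np.zeros((1, num_channels, 1, 1))
        self.running_var = np.ones((1, num_channels, 1, 1))

    def __call__(self, x, training):
        if training:
            # Training: normalize with the statistics of the current batch and
            # fold them into the running averages (population variance here;
            # some libraries use the unbiased estimate instead).
            mean = x.mean(axis=(0, 2, 3), keepdims=True)
            var = x.var(axis=(0, 2, 3), keepdims=True)
            m = self.momentum
            self.running_mean = (1 - m) * self.running_mean + m * mean
            self.running_var = (1 - m) * self.running_var + m * var
        else:
            # Test time: use the running statistics accumulated during training.
            mean, var = self.running_mean, self.running_var
        return (x - mean) / np.sqrt(var + self.eps)

rng = np.random.default_rng(6)
bn = SimpleBatchNorm(num_channels=3)
for _ in range(200):
    bn(rng.normal(2.0, 3.0, size=(8, 3, 4, 4)), training=True)

single = rng.normal(2.0, 3.0, size=(1, 3, 4, 4))
out = bn(single, training=False)  # depends only on `single` and the running stats
```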

Instance normalisation, on the other hand, acts as contrast normalisation, as mentioned in this paper: https://arxiv.org/abs/1607.08022. The authors state that the output stylised images should not depend on the contrast of the input content image, and hence Instance normalisation helps.

But then shouldn't we also use instance normalisation for image classification, where the class label should not depend on the contrast of the input image? I have not seen any paper using instance normalisation in place of batch normalisation for classification. What is the reason for that? Also, can and should batch and instance normalisation be used together? I am eager to get an intuitive as well as a theoretical understanding of when to use which normalisation.

-

Richard about 6 years

You say "in CNN it's different", but the formulas you provide here are the formulas for CNNs. In standard batch normalization, elements are normalized only across the batch dimension. In the CNN case here, elements are normalized across batch and spatial dimensions. The answer you link to explains it correctly.

-

Ric Hard almost 6 years

I want to add that a recently published paper proposes a layer that combines different normalizations with learnable parameters, letting the network "decide" which normalization to use: "Differentiable Learning-to-Normalize via Switchable Normalization".
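A simplified sketch of the idea in that paper, as I understand it: the layer computes instance-, layer- and batch-norm statistics and mixes them with softmax weights over learnable logits, one set for the means and one for the variances. `switchable_norm` is a hypothetical name, the logits are fixed inputs rather than trained, and scale/shift and inference-time details are left out.

```python
import numpy as np

def softmax(z):
    e = np.exp(z - np.max(z))
    return e / e.sum()

def switchable_norm(x, mean_logits, var_logits, eps=1e-5):
    """x: (N, C, H, W). Mix IN, LN and BN statistics with softmax weights.
    mean_logits / var_logits: 3 logits each, ordered (IN, LN, BN)."""
    # Instance-norm statistics: per sample and channel, shape (N, C, 1, 1).
    mu_in = x.mean(axis=(2, 3), keepdims=True)
    var_in = x.var(axis=(2, 3), keepdims=True)
    # Layer-norm statistics: per sample, shape (N, 1, 1, 1).
    mu_ln = x.mean(axis=(1, 2, 3), keepdims=True)
    var_ln = x.var(axis=(1, 2, 3), keepdims=True)
    # Batch-norm statistics: per channel, shape (1, C, 1, 1).
    mu_bn = x.mean(axis=(0, 2, 3), keepdims=True)
    var_bn = x.var(axis=(0, 2, 3), keepdims=True)

    w_mu = softmax(mean_logits)
    w_var = softmax(var_logits)
    # Broadcasting combines the three shapes into (N, C, 1, 1).
    mean = w_mu[0] * mu_in + w_mu[1] * mu_ln + w_mu[2] * mu_bn
    var = w_var[0] * var_in + w_var[1] * var_ln + w_var[2] * var_bn
    return (x - mean) / np.sqrt(var + eps)

x = np.random.default_rng(7).normal(size=(4, 3, 5, 5))
y = switchable_norm(x, np.zeros(3), np.zeros(3))  # equal weights for IN, LN, BN
```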

-

Guillem Cucurull almost 6 years

Also, with Instance Normalization the behaviour at training and inference time is the same. During inference, the statistics used for normalization are computed from the input images, rather than taken from statistics computed on the training set.

-

Tahlor over 5 years

C = channels; N = batch size; H, W = the spatial dimensions of each channel's output, drawn as a single axis in the figure

-

Sergey Ivanov about 5 years

In the standard deviation formulas, what are m and u_i?

-

maheshkumar over 4 years

The combination of batch and instance normalization has been explored in problems related to domain adaptation and style transfer in the paper Batch-Instance Normalization (arxiv.org/pdf/1805.07925.pdf).
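As I understand the Batch-Instance Normalization paper, it blends the batch-normalized and instance-normalized activations with a learnable per-channel gate ρ clipped to [0, 1]. A minimal forward-pass sketch under that assumption, with ρ passed in as a fixed array, `batch_instance_norm` as a hypothetical name, and the scale/shift parameters omitted:

```python
import numpy as np

def batch_instance_norm(x, rho, eps=1e-5):
    """x: (N, C, H, W); rho: (C,) gate, one value per channel (learnable in
    the paper, fixed here). Scale/shift parameters are omitted."""
    rho = np.clip(rho, 0.0, 1.0).reshape(1, -1, 1, 1)
    # Batch-normalized activations: statistics over batch and space, per channel.
    x_bn = (x - x.mean(axis=(0, 2, 3), keepdims=True)) / np.sqrt(
        x.var(axis=(0, 2, 3), keepdims=True) + eps)
    # Instance-normalized activations: statistics over space, per image and channel.
    x_in = (x - x.mean(axis=(2, 3), keepdims=True)) / np.sqrt(
        x.var(axis=(2, 3), keepdims=True) + eps)
    # rho -> 1 keeps the batch-normalized channel, rho -> 0 the instance-normalized one.
    return rho * x_bn + (1.0 - rho) * x_in

x = np.random.default_rng(8).normal(size=(4, 3, 5, 5))
y = batch_instance_norm(x, np.array([1.0, 0.5, 0.0]))
```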

-

bers over 4 years

Maybe obvious, maybe not, certainly interesting for some: for T=1, instance and batch normalization are identical.

-

Gulzar over 3 years

What about channel norm? Is that a thing?

-

max about 3 years

"the same neuron is going to receive the input from all images": could you clarify what you mean? Each image is treated independently by a CNN; the only point where anything happens across images is when you sum up the losses across the batch. So I'm not sure why it's a problem that one image has different statistics than another, any more so than when one batch has different statistics than another batch.

-

Aray Karjauv about 3 years

It would be very helpful if you could provide a proof for this statement.

-

Stone over 2 years

@Gulzar: Isn't Layer Norm in the visualization "channel norm"?

-

Thunder over 2 years