Interpolation over an irregular grid

Solution 1

Try the combination of inverse-distance weighting and scipy.spatial.KDTree described in SO inverse-distance-weighted-idw-interpolation-with-python. Kd-trees work nicely in 2d 3d ..., inverse-distance weighting is smooth and local, and the k= number of nearest neighbours can be varied to tradeoff speed / accuracy.

Solution 2

There is a nice inverse distance example by Roger Veciana i Rovira along with some code using GDAL to write to geotiff if you're into that.

This is of coarse to a regular grid, but assuming you project the data first to a pixel grid with pyproj or something, all the while being careful what projection is used for your data.

A copy of his algorithm and example script:

from math import pow

from math import sqrt

import numpy as np

import matplotlib.pyplot as plt

def pointValue(x,y,power,smoothing,xv,yv,values):

nominator=0

denominator=0

for i in range(0,len(values)):

dist = sqrt((x-xv[i])*(x-xv[i])+(y-yv[i])*(y-yv[i])+smoothing*smoothing);

#If the point is really close to one of the data points, return the data point value to avoid singularities

if(dist<0.0000000001):

return values[i]

nominator=nominator+(values[i]/pow(dist,power))

denominator=denominator+(1/pow(dist,power))

#Return NODATA if the denominator is zero

if denominator > 0:

value = nominator/denominator

else:

value = -9999

return value

def invDist(xv,yv,values,xsize=100,ysize=100,power=2,smoothing=0):

valuesGrid = np.zeros((ysize,xsize))

for x in range(0,xsize):

for y in range(0,ysize):

valuesGrid[y][x] = pointValue(x,y,power,smoothing,xv,yv,values)

return valuesGrid

if __name__ == "__main__":

power=1

smoothing=20

#Creating some data, with each coodinate and the values stored in separated lists

xv = [10,60,40,70,10,50,20,70,30,60]

yv = [10,20,30,30,40,50,60,70,80,90]

values = [1,2,2,3,4,6,7,7,8,10]

#Creating the output grid (100x100, in the example)

ti = np.linspace(0, 100, 100)

XI, YI = np.meshgrid(ti, ti)

#Creating the interpolation function and populating the output matrix value

ZI = invDist(xv,yv,values,100,100,power,smoothing)

# Plotting the result

n = plt.normalize(0.0, 100.0)

plt.subplot(1, 1, 1)

plt.pcolor(XI, YI, ZI)

plt.scatter(xv, yv, 100, values)

plt.title('Inv dist interpolation - power: ' + str(power) + ' smoothing: ' + str(smoothing))

plt.xlim(0, 100)

plt.ylim(0, 100)

plt.colorbar()

plt.show()

Solution 3

There's a bunch of options here, which one is best will depend on your data... However I don't know of an out-of-the-box solution for you

You say your input data is from tripolar data. There are three main cases for how this data could be structured.

- Sampled from a 3d grid in tripolar space, projected back to 2d LAT, LON data.

- Sampled from a 2d grid in tripolar space, projected into 2d LAT LON data.

- Unstructured data in tripolar space projected into 2d LAT LON data

The easiest of these is 2. Instead of interpolating in LAT LON space, "just" transform your point back into the source space and interpolate there.

Another option that works for 1 and 2 is to search for the cells that maps from tripolar space to cover your sample point. (You can use a BSP or grid type structure to speed up this search) Pick one of the cells, and interpolate inside it.

Finally there's a heap of unstructured interpolation options .. but they tend to be slow. A personal favourite of mine is to use a linear interpolation of the nearest N points, finding those N points can again be done with gridding or a BSP. Another good option is to Delauney triangulate the unstructured points and interpolate on the resulting triangular mesh.

Personally if my mesh was case 1, I'd use an unstructured strategy as I'd be worried about having to handle searching through cells with overlapping projections. Choosing the "right" cell would be difficult.

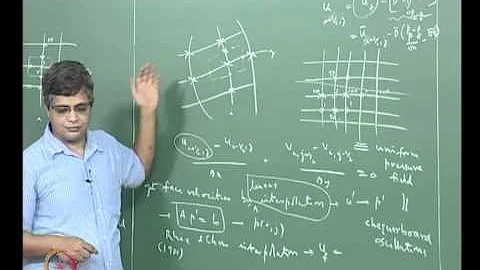

Related videos on Youtube

user391045

Updated on July 09, 2022Comments

-

user391045 almost 2 years

So, I have three numpy arrays which store latitude, longitude, and some property value on a grid -- that is, I have LAT(y,x), LON(y,x), and, say temperature T(y,x), for some limits of x and y. The grid isn't necessarily regular -- in fact, it's tripolar.

I then want to interpolate these property (temperature) values onto a bunch of different lat/lon points (stored as lat1(t), lon1(t), for about 10,000 t...) which do not fall on the actual grid points. I've tried matplotlib.mlab.griddata, but that takes far too long (it's not really designed for what I'm doing, after all). I've also tried scipy.interpolate.interp2d, but I get a MemoryError (my grids are about 400x400).

Is there any sort of slick, preferably fast way of doing this? I can't help but think the answer is something obvious... Thanks!!

-

Dave X over 7 yearsThe 'irregular grid' in the title threw me off a bit. You have a sample of points that happens to be distributed across space, but you do not have the structure of the grid as in matplotlib.org/examples/pylab_examples/tripcolor_demo.html Your data are scattered points across a field that you can assume is somewhat smooth. Interpolation over an irregular or unstructured grid or mesh that can respect discontinuities in the field can be done with matplotlib.tri matplotlib.org/api/tri_api.html .

-

-

Jon Cage almost 14 years+1: ..for the mention of BSP trees and generally putting my what I was getting at more elequently than I managed :-) You could form the BSP by centering each BSP node on one of the new data points and then simply drilling down to find all the neighbouring points.

-

user391045 almost 14 yearsHmm, that certainly looks nice, though a bit of work to reimplement! I'll be looking into it. Thanks!

-

user391045 almost 14 yearsRight idea with the grid, though I'm actually tracking the properties of a virtual particle as it travels through the mesh, so the blue dots should look more like a trail of breadcrumbs: !mesh Hopefully that picture works. The image rendering idea is interesting -- I do have the PIL available, so I may give it a go. Thanks!

-

user391045 almost 14 yearsNice! The consensus seems to be that I'm going to have to work at this a bit, but that's okay. I do like your suggestion of a BSP technique... Thanks a lot!

-

user391045 almost 14 yearsYou, my friend, are a genius. That KDTree class is brilliant! Exactly what I needed...

-

Michael Anderson almost 14 yearsI had some troubles with using vanilla inverse weighting. Found that it had some serious artifacting when the sample point was outside a cluster of points. I over came this by fitting a linear approximation (instead of a constant approximation) to the weighted data for the N-nearest neighbours. This produced pretty good results with the same amount of searching, just the overhead of solving a NxN linear system.

-

denis almost 14 years@Michael, is your data 2d, how scattered, what's Nnear ? Could you give an example of distances and values that misbehave ? E.g distances 1 1 1 1 1 10, values 1 1 1 1 1 10 => interpolate (6 / 5.1) = 1.18. Also, NxN ? In 2d, fitting a plane ax + by + c to N points (with weights say 1/dist) is either numpy.linalg .lstsq Nx3 or .solve 3x3 .

-

Michael Anderson almost 14 yearsMy data was 3D, but the problem occurs even in 1D. Take N=3 with linear data (1,1) (2,2),(3,3), sample at 2.5 and you get a result of about 2.3 (an under estimate by 10%). Things are worse if we estimate at 3.5, producing a value near 2.5 instead of the "real" 3.5 . Some will say we're now doing extrapolation rather than interpolation, but if our data points are at 1,2,3,10 then 1,2,3 are still the three closest points to 3.5 .. and we'd end up with the same result. This is what I mean by values outside of clusters. Fitting a line gives the "right" result - at least for my data

-

John about 11 yearsNo need to reimplement, just call. See QGIS with the SEXTANTE toolbox.

-

Dave X over 7 yearsOne part of case 3 might be that you have a data defined on an unstructured grid where a generated Delauney convex hull might not be appropriate. E.g. matplotlib.org/examples/pylab_examples/tripcolor_demo.html Then interpolating on the given triangular mesh might be good: matplotlib.org/api/tri_api.html