Is floating-point math consistent in C#? Can it be?

Solution 1

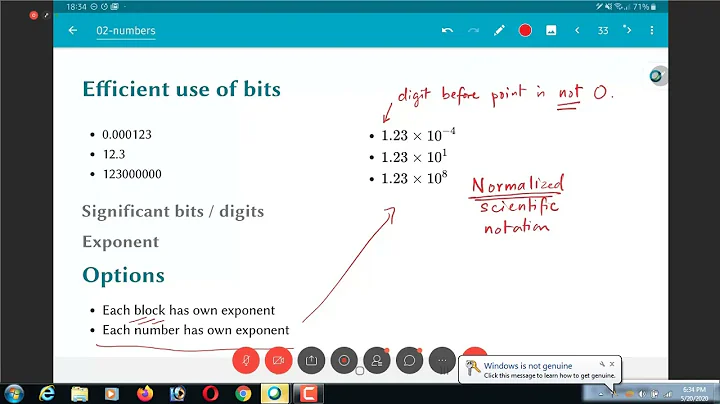

I know of no way to way to make normal floating points deterministic in .net. The JITter is allowed to create code that behaves differently on different platforms(or between different versions of .net). So using normal floats in deterministic .net code is not possible.

The workarounds I considered:

- Implement FixedPoint32 in C#. While this is not too hard(I have a half finished implementation) the very small range of values makes it annoying to use. You have to be careful at all times so you neither overflow, nor lose too much precision. In the end I found this not easier than using integers directly.

- Implement FixedPoint64 in C#. I found this rather hard to do. For some operations intermediate integers of 128bit would be useful. But .net doesn't offer such a type.

- Implement a custom 32 bit floatingpoint. The lack of a BitScanReverse intrinsic causes a few annoyances when implementing this. But currently I think this is the most promising path.

- Use native code for the math operations. Incurs the overhead of a delegate call on every math operation.

I've just started a software implementation of 32 bit floating point math. It can do about 70million additions/multiplications per second on my 2.66GHz i3. https://github.com/CodesInChaos/SoftFloat . Obviously it's still very incomplete and buggy.

Solution 2

The C# specification (§4.1.6 Floating point types) specifically allows floating point computations to be done using precision higher than that of the result. So, no, I don't think you can make those calculations deterministic directly in .Net. Others suggested various workarounds, so you could try them.

Solution 3

The following page may be useful in the case where you need absolute portability of such operations. It discusses software for testing implementations of the IEEE 754 standard, including software for emulating floating point operations. Most information is probably specific to C or C++, however.

http://www.math.utah.edu/~beebe/software/ieee/

A note on fixed point

Binary fixed point numbers can also work well as a substitute for floating point, as is evident from the four basic arithmetic operations:

- Addition and subtraction are trivial. They work the same way as integers. Just add or subtract!

- To multiply two fixed point numbers, multiply the two numbers then shift right the defined number of fractional bits.

- To divide two fixed point numbers, shift the dividend left the defined number of fractional bits, then divide by the divisor.

- Chapter four of Hattangady (2007) has additional guidance on implementing binary fixed point numbers (S.K. Hattangady, "Development of a Block Floating Point Interval ALU for DSP and Control Applications", Master's thesis, North Carolina State University, 2007).

Binary fixed point numbers can be implemented on any integer data type such as int, long, and BigInteger, and the non-CLS-compliant types uint and ulong.

As suggested in another answer, you can use lookup tables, where each element in the table is a binary fixed point number, to help implement complex functions such as sine, cosine, square root, and so on. If the lookup table is less granular than the fixed point number, it is suggested to round the input by adding one half of the granularity of the lookup table to the input:

// Assume each number has a 12 bit fractional part. (1/4096)

// Each entry in the lookup table corresponds to a fixed point number

// with an 8-bit fractional part (1/256)

input+=(1<<3); // Add 2^3 for rounding purposes

input>>=4; // Shift right by 4 (to get 8-bit fractional part)

// --- clamp or restrict input here --

// Look up value.

return lookupTable[input];

Solution 4

Is this a problem for C#?

Yes. Different architectures are the least of your worries, different framerates etc. can lead to deviations due to inaccuracies in float representations - even if they are the same inaccuracies (e.g. same architecture, except a slower GPU on one machine).

Can I use System.Decimal?

There is no reason you can't, however it's dog slow.

Is there a way to force my program to run in double precision?

Yes. Host the CLR runtime yourself; and compile in all the nessecary calls/flags (that change the behaviour of floating point arithmetic) into the C++ application before calling CorBindToRuntimeEx.

Are there any libraries that would help keep floating point calculations consistent?

Not that I know of.

Is there another way to solve this?

I have tackled this problem before, the idea is to use QNumbers. They are a form of reals that are fixed-point; but not fixed point in base-10 (decimal) - rather base-2 (binary); because of this the mathematical primitives on them (add, sub, mul, div) are much faster than the naive base-10 fixed points; especially if n is the same for both values (which in your case it would be). Furthermore because they are integral they have well-defined results on every platform.

Keep in mind that framerate can still affect these, but it is not as bad and is easily rectified using syncronisation points.

Can I use more mathematical functions with QNumbers?

Yes, round-trip a decimal to do this. Furthermore, you should really be using lookup tables for the trig (sin, cos) functions; as those can really give different results on different platforms - and if you code them correctly they can use QNumbers directly.

Solution 5

According to this slightly old MSDN blog entry the JIT will not use SSE/SSE2 for floating point, it's all x87. Because of that, as you mentioned you have to worry about modes and flags, and in C# that's not possible to control. So using normal floating point operations will not guarantee the exact same result on every machine for your program.

To get precise reproducibility of double precision you are going to have to do software floating point (or fixed point) emulation. I don't know of C# libraries to do this.

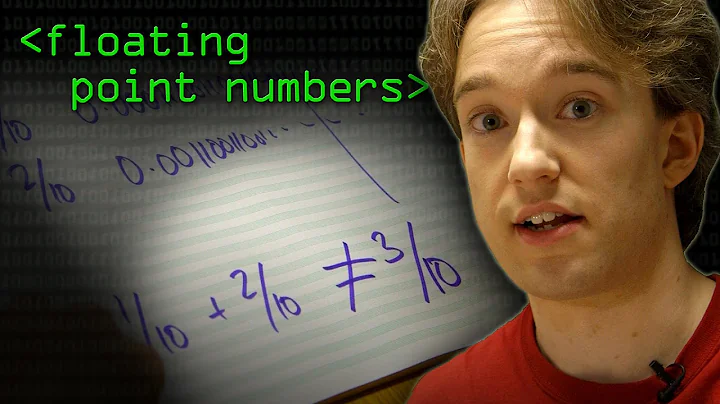

Depending on the operations you need, you might be able to get away with single precision. Here's the idea:

- store all values you care about in single precision

- to perform an operation:

- expand inputs to double precision

- do operation in double precision

- convert result back to single precision

The big issue with x87 is that calculations might be done in 53-bit or 64-bit accuracy depending on the precision flag and whether the register spilled to memory. But for many operations, performing the operation in high precision and rounding back to lower precision will guarantee the correct answer, which implies that the answer will be guaranteed to be the same on all systems. Whether you get the extra precision won't matter, since you have enough precision to guarantee the right answer in either case.

Operations that should work in this scheme: addition, subtraction, multiplication, division, sqrt. Things like sin, exp, etc. won't work (results will usually match but there is no guarantee). "When is double rounding innocuous?" ACM Reference (paid reg. req.)

Hope this helps!

Related videos on Youtube

BlueRaja - Danny Pflughoeft

Ringotan - Learn Japanese Kanji A blog, sort of

Updated on December 05, 2021Comments

-

BlueRaja - Danny Pflughoeft over 2 years

BlueRaja - Danny Pflughoeft over 2 yearsNo, this is not another "Why is (1/3.0)*3 != 1" question.

I've been reading about floating-points a lot lately; specifically, how the same calculation might give different results on different architectures or optimization settings.

This is a problem for video games which store replays, or are peer-to-peer networked (as opposed to server-client), which rely on all clients generating exactly the same results every time they run the program - a small discrepancy in one floating-point calculation can lead to a drastically different game-state on different machines (or even on the same machine!)

This happens even amongst processors that "follow" IEEE-754, primarily because some processors (namely x86) use double extended precision. That is, they use 80-bit registers to do all the calculations, then truncate to 64- or 32-bits, leading to different rounding results than machines which use 64- or 32- bits for the calculations.

I've seen several solutions to this problem online, but all for C++, not C#:

- Disable double extended-precision mode (so that all

doublecalculations use IEEE-754 64-bits) using_controlfp_s(Windows),_FPU_SETCW(Linux?), orfpsetprec(BSD). - Always run the same compiler with the same optimization settings, and require all users to have the same CPU architecture (no cross-platform play). Because my "compiler" is actually the JIT, which may optimize differently every time the program is run, I don't think this is possible.

- Use fixed-point arithmetic, and avoid

floatanddoublealtogether.decimalwould work for this purpose, but would be much slower, and none of theSystem.Mathlibrary functions support it.

So, is this even a problem in C#? What if I only intend to support Windows (not Mono)?

If it is, is there any way to force my program to run at normal double-precision?

If not, are there any libraries that would help keep floating-point calculations consistent?

-

BlueRaja - Danny Pflughoeft almost 13 yearsI've seen this question, but every answer either repeats the problem with no solution, or says "ignore it," which is not an option. I asked a similar question on gamedev, but (because of the audience) most of the answers seem to be geared towards C++.

BlueRaja - Danny Pflughoeft almost 13 yearsI've seen this question, but every answer either repeats the problem with no solution, or says "ignore it," which is not an option. I asked a similar question on gamedev, but (because of the audience) most of the answers seem to be geared towards C++. -

driushkin almost 13 yearsnot an answer, but I'm sure you in most domains you could design your system in such a way that all shared state is deterministic, and there is no significant performance degradation because of that

-

Peter O. almost 13 yearsAre you aware of floating point emulation? If absolute portability of floating point operations is a concern, maybe a library dealing with floating point emulation could help. I'm not aware of any, though, and some performance may have to be sacrificed in the process.

-

CodesInChaos almost 13 years@Peter do you know of any fast floating point emulation for .net?

-

habakuk almost 13 yearsHere are some informations about System.Decimal - maybe it is interesting: csharpindepth.com/Articles/General/Decimal.aspx

habakuk almost 13 yearsHere are some informations about System.Decimal - maybe it is interesting: csharpindepth.com/Articles/General/Decimal.aspx -

Peter O. almost 13 yearsWhat floating point operations and math functions do you intend to use for your project? I'm currently working on a 64-bit floating point library (see my answer) and would like to know what features you would like to see in my library.

-

Gravity almost 13 yearsIsn't

decimalessentially a type of floating-point emulation? -

CodesInChaos almost 13 years@Gravity Yes it is. But it's not a good fit for games.

-

Josh over 12 yearsDoes Java suffer from this problem?

-

porges over 12 years@Josh: Java has the

strictfpkeyword, which forces all calculations to be done in the stated size (floatordouble) rather than an extended size. However, Java still has many problems with IEE-754 support. Very (very, very) few programming languages support IEE-754 well. -

Peter Cordes almost 6 yearsRelated: x86 assembly language gives you deterministic FP (except maybe for

Peter Cordes almost 6 yearsRelated: x86 assembly language gives you deterministic FP (except maybe forrsqrtps), but the trick is getting the same or similar sources to always compile the same. It's problematic even in C/C++ if you allow different compilers. Related: Does any floating point-intensive code produce bit-exact results in any x86-based architecture?. But at least with an ahead-of-time compiled language, you can usually get determinism if you avoid any FP library functions that are allowed to be different on different platforms.

- Disable double extended-precision mode (so that all

-

svick almost 13 yearsImagine a P2P shooter. You shoot at a guy, you hit him and he dies, but it's very close, you almost missed. On the other guy's PC uses slightly different calculations and it computes that you miss. Do you see the problem now? In this case, increasing the size of registers will not help (at least not completely). Using the exact same calculation on each computer will.

-

CodesInChaos almost 13 yearsIn this scenario one usually doesn't care about how close the result is to the actual result(as long as it's reasonable), but what matters is that it's exactly the same for all users.

-

AxFab almost 13 yearsYou right, I didn't though about this kind of scenario. However I'm agree with @CodeInChaos on this one. I didn't found that really smart to take an important decision twice. This is more a software architecture issue. One program, the shooter's application for exemple, should made the calculation and send result to the others. You will never have errors in this way. You have a hit or not, but only one take the descision. Like say @driushkin

-

BlueRaja - Danny Pflughoeft almost 13 years@Aesgar: Yes, that is how most shooters work; that "authority" is called the server, and we call the overall architecture a "client/server" architecture. However, there is another kind of architecture: peer-to-peer. In P2P, there is no server; rather, all clients must verify all actions with each other before anything happens. This increases the lag, making it not acceptable for shooters, but vastly decreases the network traffic, making it perfect for games where a small lag (~250ms) is acceptable, but syncing the entire game state is not. Namely, RTS games like C&C and Starcraft use P2P.

BlueRaja - Danny Pflughoeft almost 13 years@Aesgar: Yes, that is how most shooters work; that "authority" is called the server, and we call the overall architecture a "client/server" architecture. However, there is another kind of architecture: peer-to-peer. In P2P, there is no server; rather, all clients must verify all actions with each other before anything happens. This increases the lag, making it not acceptable for shooters, but vastly decreases the network traffic, making it perfect for games where a small lag (~250ms) is acceptable, but syncing the entire game state is not. Namely, RTS games like C&C and Starcraft use P2P. -

AxFab almost 13 yearsThat'll be a weird game if only the server can shoot :D. P2P is a communication protocole but it'll not stop the message: 'This one take my bullet.' If only one program test it, you could even have time to improve more sophisticate physics. And more important it'll ignore your bug due to hardware limitation !

-

CodesInChaos almost 13 yearsThis kind of network code relies on the code behaving in exactly the same way on all participating computers if the input is exactly the same. It then only sends the input. The nice thing about this is that the traffic is very low even when you have very many objects. Thus it is used in almost all RTS games I've seen. An old article on this: gamasutra.com/view/feature/3094/…

-

causa prima almost 13 yearsIn a p2p game you don't have a trusted machine to rely on. If you allow one station to decide whether his bullet hit or not you open up the possibility of a client cheating. Furthermore, the links can't even handle the amount of data that sometimes results--the games work by sending the orders rather than the results. I play RTS games and many times I've seen so much junk flying around there's no way it could be sent over normal household uplinks.

-

svick almost 13 yearsNote that

BigIntegeris only available in .Net 4.0. -

BlueRaja - Danny Pflughoeft almost 13 yearsYou should upload this to an open-source code project site, like sourceforge or github. This makes it easier to find, easier to contribute to, easier to put on your resume etc. Also, a few source-code tips (feel free to ignore): Use

BlueRaja - Danny Pflughoeft almost 13 yearsYou should upload this to an open-source code project site, like sourceforge or github. This makes it easier to find, easier to contribute to, easier to put on your resume etc. Also, a few source-code tips (feel free to ignore): Useconstinstead ofstaticfor constants, so the compiler can optimize them; prefer member functions to static functions (so we can call, ex.myDouble.LeadingZeros()instead ofIntDouble.LeadingZeros(myDouble)); try to avoid single-letter variable names (MultiplyAnyLength, for example, has 9, making it very hard to follow) -

BlueRaja - Danny Pflughoeft almost 13 yearsBe careful using

BlueRaja - Danny Pflughoeft almost 13 yearsBe careful usinguncheckedand non-CLS-compliant types likeulong,uint, etc. for speed purposes - because they are so rarely used, the JIT does not optimize them as aggressively, so using them can actually be slower than using normal types likelongandint. Also, C# has operator overloading, which this project would benefit greatly from. Finally, are there any associated unit-tests? Besides those small things, amazing job Peter, this is ridiculously impressive! -

Peter O. almost 13 yearsThank you for the comments. I do perform unit tests on the code. They are rather extensive, though, much too extensive to release for now. I even write unit-testing helper routines to make writing multiple tests easier. I don't use overloaded operators for now because I have plans of translating the code to Java when I'm done.

-

CodesInChaos almost 13 yearsThe funny thing is when I posted on your blog I didn't notice that blog was yours. I had just decided to try google+ and in its C# spark it suggested that blog entry. So I thought "What a remarkable coincidence for us two to start writing such a thing at the same time". But of course we had the same trigger :)

-

CodesInChaos almost 13 yearsMy guess is that the performance hit of

BigIntegerexceeds even the performance hit by Decimal. -

CodesInChaos almost 13 years"compile to a 32-bit .dll and then use ... AnyCpu" I think this will only work when running on a 32 bit system. On a 64bit system only a program targetting

x86will be able to load the 32 bit dll. -

Barry Kaye over 12 yearsA couple of times in the answers here there is reference to the performance hit of using

Decimal(@Jonathan Dickinson - 'dog slow') orBigInteger(@CodeInChaos comment above) - can someone please provide a little explanation on these performance hits and as to whether/why they are really show-stoppers to providing a solution. -

Barry Kaye over 12 years@Yahia - thank you for the edit - interesting reading, however, could you please just also give a ball-park guesstimate as to the performance hit of not-using 'float' are we talking 10% slower or 10 times slower - I just want to get a feel for the order of magnitude implied.

-

Yahia over 12 yearsit more likeley in the area of 1:5 than "only 10%"

-

BlueRaja - Danny Pflughoeft over 12 yearsNot sure what you're talking about with framerates being issue. Clearly you'd want to have a fixed update-rate (see for example here) - whether or not that's the same as the display-framerate is irrelevant. As long as the inaccuracies are the same on all machines, we're good. I don't understand your third answer at all.

BlueRaja - Danny Pflughoeft over 12 yearsNot sure what you're talking about with framerates being issue. Clearly you'd want to have a fixed update-rate (see for example here) - whether or not that's the same as the display-framerate is irrelevant. As long as the inaccuracies are the same on all machines, we're good. I don't understand your third answer at all. -

BlueRaja - Danny Pflughoeft over 12 yearsalways truncating every operation to 30 or 31 bits - this is exactly what the

BlueRaja - Danny Pflughoeft over 12 yearsalways truncating every operation to 30 or 31 bits - this is exactly what thefloatdatatype does on x86 machines - however this will cause slightly different results from machines which do all their calculations using only 32-bits, and these small changes will propagate over time. Hence, the question. -

Peter O. over 12 years@BlueRaja: The answer "Is there a way to force my program to run in double precision?" would either amount to reimplementing the entire Common Language Runtime, which would be extremely complicated, or using native calls to a C++ DLL from the C# application, as hinted in user shelleybutterfly's answer. Think of "QNumbers" merely as binary fixed point numbers, as hinted in my answer (I had not until now seen binary fixed point numbers being called "QNumbers".)

-

CodesInChaos over 12 yearsI just realized that the C# specification doesn't really matter if one distributes compiled assemblies. It only matters if one wants source compatibility. What really matters is the CLR specification. But I'm pretty sure it's guarantees are just as weak as the C# guarantees.

-

Witness Protection ID 44583292 over 12 yearsIf "N bits of precision" means any calculation is accurate to that many bits, and machine A is accurate to 32 bits while machine B is accurate to 48 bits, then the first 32 bits of any calc by both machines should be identical. Wouldn't truncating to 32 bits or less after every operation keep both machines exactly in sync? If not, what's an example?

-

Jonathan Dickinson over 12 years@Pieter O. You do not need to reimplement the runtime. The server I work on at my company hosts the CLR runtime as a native C++ application (so does SQL Server). I suggest you google CorBindToRuntimeEx.

-

Jonathan Dickinson over 12 years@BlueRaja it depends on the game in question. Applying fixed framerate steps to all games is not a viable option - because the AOE algorithm introduces artificial latency; which is unnacceptable in e.g. a FPS.

-

BlueRaja - Danny Pflughoeft over 12 years@Jonathan: This is only an issue in peer-to-peer games which send the input only - for these, you have to have a fixed update-rate. Most FPS's do not work like this, but the few that do necessarily have a fixed update-rate. See this question.

BlueRaja - Danny Pflughoeft over 12 years@Jonathan: This is only an issue in peer-to-peer games which send the input only - for these, you have to have a fixed update-rate. Most FPS's do not work like this, but the few that do necessarily have a fixed update-rate. See this question. -

Jonathan Dickinson over 12 years@BlueRaja thanks for that. Quite informative - I still think fixed point can be important (but less so) in fixed time step scenarios, because after all you are at the mercy of the OS scheduler (but again, it's not that bad).

-

Eric J. about 12 yearsIt's also a problem that .NET 5, or 6, or 42, might not use the x87 calculation mode anymore. There's nothing in the standard that requires it to.

Eric J. about 12 yearsIt's also a problem that .NET 5, or 6, or 42, might not use the x87 calculation mode anymore. There's nothing in the standard that requires it to. -

Rune FS over 11 yearsthere's an "unlimited" sized integer available BigInteger though not as fast as native int or long it is there so .NET does offer such a type (created for F# I believe but can be used in C#)

Rune FS over 11 yearsthere's an "unlimited" sized integer available BigInteger though not as fast as native int or long it is there so .NET does offer such a type (created for F# I believe but can be used in C#) -

Antimony about 11 yearsWhy bother porting this to Java? Java already has guaranteed deterministic floating point math via

strictfp. -

Cole Tobin about 9 yearsAnother option is GNU MP wrapper for .NET. It's a wrapper around The GNU Multiple Precision Library which supports "inifinite" precision integers, rationals (fractions), and floating point numbers.

-

Roman Starkov about 9 yearsIf you're going to do any of these, you might as well try

Roman Starkov about 9 yearsIf you're going to do any of these, you might as well trydecimalfirst, as it's much simpler to do. Only if it's too slow for the task at hand would other approaches be worth thinking about. -

IS4 about 8 yearsWouldn't casting to

doubleeach time after an operation strip away the unwanted bits, yielding consistent results? -

svick about 8 years@IllidanS4 I don't think that would guarantee consistent results.

-

zigzag over 7 yearsI have learned about one special case where floating points are deterministic. Explanation I got is: For multiplication/division, if one of the FP numbers is power of two number (2^x), significant/mantissa won't change during calculation. Only exponent will change (point will move). So rounding will never happen. Result will be deterministic.

-

zigzag over 7 yearsExample: A number like 2^32 is represented as (exponent: 32, mantissa: 1). If we multiply this with another float (exp, man), the result is (exp + 32, man * 1). For division, the result is (expo - 32, man * 1). Multiplying the mantissa by 1 doesn't change the mantissa, so it doesn't matter how many bits it has.

-

KriptSkitty almost 6 yearsApologies for the downvote. I mis-clicked (if that is a word) on my phone, and now I can’t change it.

KriptSkitty almost 6 yearsApologies for the downvote. I mis-clicked (if that is a word) on my phone, and now I can’t change it.