Is it possible to change the sector size of a partition for a ZFS raidz pool in Linux?

I found the necessary option! The pool is currently resilvering the new partition after I issued the following command:

zpool replace -o ashift=9 zfs_raid <virtual device> /dev/sdd1

Although this is possible, it's not advisable, because forcing a 4K-sector drive to be written as if it had 512-byte sectors gives terrible performance. I have learned the hard way that one should add

-o ashift=12

when creating the pool to avoid having to recreate it later, as it's currently not possible to 'migrate' an existing pool to the 4K sector size.
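For reference, ashift is the base-2 logarithm of the sector size ZFS assumes for a device, which is why 9 corresponds to 512-byte sectors and 12 to 4K sectors. A minimal shell sketch of that mapping follows; the helper name ashift_for_sector_size is made up for illustration and is not part of ZFS:

```shell
# Hypothetical helper (not a ZFS command): print the ashift value,
# i.e. log2 of the sector size in bytes, for a power-of-two sector size.
ashift_for_sector_size() (
    size=$1
    shift_bits=0
    # Count how many times 1 must be doubled to reach the sector size.
    while [ $((1 << shift_bits)) -lt "$size" ]; do
        shift_bits=$((shift_bits + 1))
    done
    echo "$shift_bits"
)

ashift_for_sector_size 512    # prints 9
ashift_for_sector_size 4096   # prints 12
```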

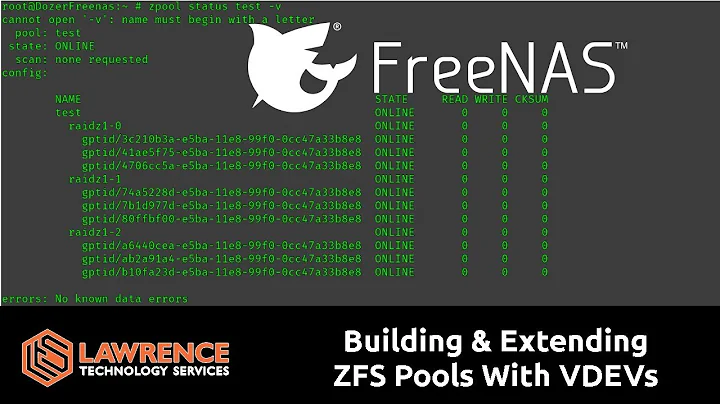


barrymac

Updated on September 18, 2022

Comments

-

barrymac almost 2 years

I've been migrating a ZFS raidz pool on Linux to new discs via virtual devices, which were sparse files. Because the discs are different sizes, I've used a 1.9T partition on each disc rather than the whole disc. The last disc to add is a 4T disc, and I partitioned it like the others, with a 1.9T partition to add to the pool. It uses a GPT partition table. When I try to replace the last file with the 1.9T partition on the 4T disc, I get the following:

zpool replace -f zfs_raid /zfs_jbod/zfs_raid/zfs.2 /dev/sdd1

cannot replace /zfs_jbod/zfs_raid/zfs.2 with /dev/sdd1: devices have different sector alignment

How can I change the partition sector size to 512 like the others or, failing that, is it possible to change the other pool devices to 4096? Apparently the logical sector sizes are all 512.

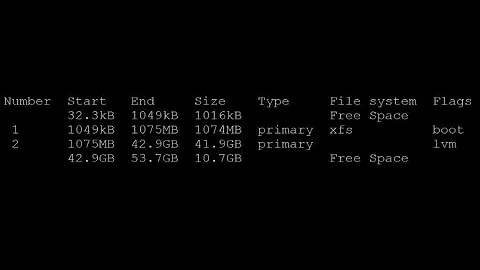

cat /sys/block/sdd/queue/hw_sector_size

Disk /dev/sdd: 4000.8 GB, 4000787030016 bytes, 7814037168 sectors
Units = sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 4096 bytes
I/O size (minimum/optimal): 4096 bytes / 4096 bytes

I had repartitioned the disc that contained the original fourth file-based device, the one I'm trying to replace, but the replace didn't work, so I recreated the device file and the pool is currently resilvering that.
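The logical and physical sector sizes that fdisk reports can also be read separately from the logical_block_size and physical_block_size files under /sys/block/<device>/queue. A small sketch, assuming a Linux sysfs layout; the function name sector_sizes and the SYSFS_ROOT variable are hypothetical and exist only to keep the example self-contained:

```shell
# Assumption: SYSFS_ROOT normally stays at /sys; it is overridable only
# so the function can be tried against a fake directory tree.
SYSFS_ROOT=${SYSFS_ROOT:-/sys}

# Hypothetical helper: print the logical and physical sector sizes
# (in bytes) that the kernel reports for a whole disc such as sdd.
sector_sizes() {
    queue="$SYSFS_ROOT/block/$1/queue"
    echo "logical=$(cat "$queue/logical_block_size") physical=$(cat "$queue/physical_block_size")"
}

# Usage (on a machine that has the disc): sector_sizes sdd
```

For a disc like the one described here, with 512-byte logical and 4096-byte physical sectors, the two values would be expected to differ, which is the situation that ashift=12 is meant for.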

zpool status output:

NAME                            STATE     READ WRITE CKSUM
zfs_raid                        DEGRADED     0     0     0
  raidz1-0                      DEGRADED     0     0     0
    sda3                        ONLINE       0     0     0
    sdc2                        ONLINE       0     0     0
    sdb1                        ONLINE       0     0     0
    replacing-3                 OFFLINE      0     0     0
      /zfs_jbod/zfs_raid/zfs.2  OFFLINE      0     0     0
      /mnt/butter2/zfs.4        ONLINE       0     0     0  (resilvering)

-

BitsOfNix almost 11 years

Can't you instead do zpool attach zfs_raid <file> <device> and, after the sync, zpool detach zfs_raid <file>?

-

barrymac almost 11 years

Wouldn't attaching a 5th device expand the array irreversibly? Or maybe you mean something like adding a spare?

-

BitsOfNix almost 11 years

Not adding a spare or a new disk: zpool attach pool old_device new_device mirrors old_device onto new_device, and you then detach old_device from the mirror after the resilver. See docs.oracle.com/cd/E26502_01/html/E29007/gayrd.html#scrolltoc for information about attach/detach and the differences between add and attach.

-

barrymac almost 11 years

That did look promising, but unfortunately it returned "cannot attach /dev/sdd1 to /zfs_jbod/zfs_raid/zfs.2: can only attach to mirrors and top-level disks".

-

BitsOfNix almost 11 years

Could you post your current zpool status zfs_raid output, so we can see the raid layout?

-

barrymac almost 11 years

I had repartitioned the disc that contained the original fourth file-based device, the one I'm trying to replace, but the replace didn't work, so I recreated the device file and the pool is currently resilvering that.

-

BitsOfNix almost 11 years

I'm running out of ideas here, but could your partition be unaligned? You can check with fdisk /dev/sdd. The start sector should be a multiple of 8. If not, I'm afraid you will need to back up, destroy the pool, create a new one (specifying ashift=12 to force it would probably be a good idea) and restore!
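The "multiple of 8" rule comes from 8 × 512 bytes = 4096 bytes: with 512-byte logical sectors, a partition starts on a 4K boundary only if its start sector is divisible by 8. A rough sketch of that check, where the function name is_4k_aligned and the sample start sectors 2048 and 63 are illustrative and not taken from this disc:

```shell
# Hypothetical helper: succeed if a partition's start offset is a
# multiple of 4096 bytes.
# Arguments: start sector, logical sector size in bytes (default 512).
is_4k_aligned() {
    start_sector=$1
    sector_bytes=${2:-512}
    [ $(( (start_sector * sector_bytes) % 4096 )) -eq 0 ]
}

# Illustrative start sectors only; take the real one from fdisk's output.
is_4k_aligned 2048 && echo "sector 2048: aligned"
is_4k_aligned 63 || echo "sector 63: not aligned"
```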

-

barrymac almost 11 years

Unfortunately it is looking more and more like a recreate is necessary! I tried aligning it with cgdisk, but it didn't make a difference. As it's a home NAS, I don't have the storage available to do a local backup. :-(
