Is it possible to create UI elements with the NDK? - lack of specs in Android docs

Solution 1

Sure you can. Read up on calling Java from C(++), and call the respective Java functions - either construct UI elements (layouts, buttons, etc.) one by one, or load an XML layout. There's no C-specific interface for that, but the Java one is there to call.

Unless it's a game and you intend to do your own drawing via OpenGL ES. I'm not sure if you can mix and match.

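In a NativeActivity, you can still get a reference to the Java Activity object and call its methods - it's the clazz member of the ANativeActivity structure, which reaches your android_main through the activity member of the android_app parameter. Take that reference, get a JNIEnv* for the calling thread (the env member of the same structure is only valid on the activity's main thread; from the android_main thread, attach through its vm member instead), and assign a layout.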

How this will interoperate with OpenGL drawing, I'm not sure.

EDIT: about putting together your own input processing. The key callback is onInputEvent(struct android_app* app, AInputEvent* event) within the android_app structure. Place your callback there, and Android will call it whenever appropriate. Use AInputEvent_getType(event) to retrieve the event type; touch events have type AINPUT_EVENT_TYPE_MOTION.

EDIT2: here's a minimum native app that grabs the touch events:

    #include <jni.h>
    #include <android_native_app_glue.h>
    #include <android/log.h>

    // Input callback: the glue calls this for every input event.
    static int32_t OnInput(struct android_app* app, AInputEvent* event)
    {
        __android_log_write(ANDROID_LOG_ERROR, "MyNativeProject", "Hello input event!");
        return 0; // 0 = not handled, the event gets default processing
    }

    extern "C" void android_main(struct android_app* App)
    {
        app_dummy(); // keeps the linker from stripping the glue (needed by older NDKs)
        App->onInputEvent = OnInput;

        for (;;)
        {
            struct android_poll_source* source;
            int ident;
            int events;

            // Block until an event arrives, then let the glue dispatch it.
            while ((ident = ALooper_pollAll(-1, NULL, &events, (void**)&source)) >= 0)
            {
                if (source != NULL)
                    source->process(App, source);

                if (App->destroyRequested != 0)
                    return;
            }
        }
    }

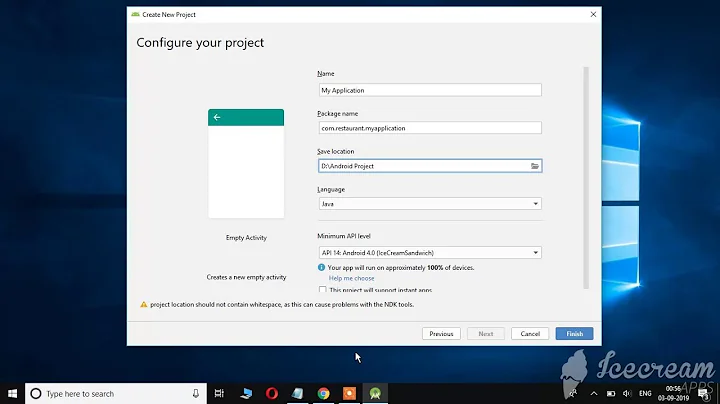

You need, naturally, to add a project around it, with a manifest, Android.mk and everything. Android.mk will need the following as the last line:

$(call import-module,android/native_app_glue)

The native_app_glue is a static library that provides some C bridging for the APIs that are normally consumed via Java.
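For orientation, a complete Android.mk for such a project might look roughly like the sketch below. The module name `native-activity` and the source file name `main.cpp` are assumptions for illustration; use whatever your manifest and project actually reference.

```make
LOCAL_PATH := $(call my-dir)

include $(CLEAR_VARS)

# Assumed names: adjust the module and source file to your project.
LOCAL_MODULE           := native-activity
LOCAL_SRC_FILES        := main.cpp
# liblog for __android_log_write, libandroid for the looper and input APIs.
LOCAL_LDLIBS           := -llog -landroid
LOCAL_STATIC_LIBRARIES := android_native_app_glue

include $(BUILD_SHARED_LIBRARY)

$(call import-module,android/native_app_glue)
```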

You can do it without a glue library as well. But then you'll need to provide your own function ANativeActivity_onCreate, and a bunch of other callbacks. The android_main/android_app combo is an interface defined by the glue library.

EDIT: For touch coordinates, use AMotionEvent_getX/Y(), passing the event object as the first parameter and index of the pointer as the second. Use AMotionEvent_getPointerCount() to retrieve the number of pointers (touch points). That's your native processing of multitouch events.
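As a rough sketch of the above, the part inside `#ifdef __ANDROID__` copies every pointer of a motion event into a plain vector. `TouchPoint`, `collectTouchPoints` and `findTouch` are hypothetical names made up for this example, not NDK API. It also reads `AMotionEvent_getPointerId()`, which is not mentioned above: the index of a pointer can change between events, while its id stays the same for as long as the finger is down.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical plain struct for one touch point; not part of the NDK.
struct TouchPoint {
    int32_t id;  // pointer id, stable while the finger stays down
    float x;
    float y;
};

#ifdef __ANDROID__
#include <android/input.h>

// Copies all pointers of a motion event into a vector. Meant to be called
// from onInputEvent after checking that
// AInputEvent_getType(event) == AINPUT_EVENT_TYPE_MOTION.
static std::vector<TouchPoint> collectTouchPoints(const AInputEvent* event)
{
    assert(event != nullptr);
    std::vector<TouchPoint> points;
    const size_t count = AMotionEvent_getPointerCount(event);
    for (size_t i = 0; i < count; ++i) {
        points.push_back({AMotionEvent_getPointerId(event, i),
                          AMotionEvent_getX(event, i),
                          AMotionEvent_getY(event, i)});
    }
    return points;
}
#endif

// Hypothetical helper: returns the touch point with the given id,
// or nullptr if that finger is not part of this event.
inline const TouchPoint* findTouch(const std::vector<TouchPoint>& points, int32_t id)
{
    for (const TouchPoint& p : points)
        if (p.id == id) return &p;
    return nullptr;
}
```

With two fingers down you get two entries, so one can drive a joystick while the other presses a button; remembering the id that first landed on each control lets you keep following the right finger.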

> I'm supposed to detect the [x,y] position everytime, compare it to the location of my joystick, store the previous position, compare the previous position and the next one to get the direction ?

In short, yes, you are. There's no builtin platform support for virtual joysticks; you deal with touches and coordinates, and you translate that into your app's UI metaphor. That's pretty much the essence of programming.

Not "everytime" though - only when it's changing. Android is an event-driven system.
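To give an idea of how little code that translation takes, here is a minimal sketch, assuming a circular joystick with a small dead zone in the middle and screen coordinates where y grows downward. `VirtualJoystick` and `Direction` are hypothetical names invented for the example. It derives the direction from the offset to the joystick center rather than from the previous position; comparing against the previous position works along the same lines.

```cpp
#include <cassert>
#include <cmath>

enum class Direction { None, Up, Down, Left, Right };

// Hypothetical on-screen joystick: a circle with a dead zone in the middle.
struct VirtualJoystick {
    float centerX;
    float centerY;
    float radius;    // touches outside this circle are ignored
    float deadZone;  // touches closer to the center than this are neutral

    bool contains(float x, float y) const
    {
        const float dx = x - centerX;
        const float dy = y - centerY;
        return dx * dx + dy * dy <= radius * radius;
    }

    // Maps a touch position to one of four directions.
    Direction directionFor(float x, float y) const
    {
        assert(radius > 0.0f && deadZone >= 0.0f);
        if (!contains(x, y)) return Direction::None;

        const float dx = x - centerX;
        const float dy = y - centerY;
        if (std::sqrt(dx * dx + dy * dy) < deadZone) return Direction::None;

        // The dominant axis decides; screen y grows downward.
        if (std::fabs(dx) > std::fabs(dy))
            return dx > 0 ? Direction::Right : Direction::Left;
        return dy > 0 ? Direction::Down : Direction::Up;
    }
};
```

Call `directionFor` from the input callback with the coordinates of the pointer that landed on the joystick, keep the last result around, and react only when the result differs from it.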

Now, about your "I want it on the OS level" sentiment. It's WRONG on many levels. First, the OS does not owe you anything. The OS is what it is, take it or leave it. Second, unwillingness to expend effort (AKA being lazy) is generally frowned upon in the software community. Third, the OS code is still code. Moving something into the OS might gain you some efficiency, but why do you think it will make a user-perceptible difference? It's touch processing we're talking about - not a particularly CPU-intensive task. Did you actually build an app, profile it and find its performance lacking? Until you do, don't ever guess where the bottleneck would be. The term for that is "premature optimization", and it's something that everyone and their uncle's cat would warn you against.

Solution 2

Rawdrawandroid shows that this is possible. Using it, you can write the UI and handle UI events entirely in C/C++.

Note: no Java, classes.dex or bytecode is used.

Sample App: https://play.google.com/store/apps/details?id=org.cnlohr.colorchord

Please read how the rawdraw developer addresses common situations:

With this framework you get:

- To make a window with OpenGL ES support

- Accelerometer/gyro input, multi-touch

- An android keyboard for key input

- Ability to store asset files in your APK and read them with AAssetManager

- Permissions support for using things like sound. Example in https://github.com/cnlohr/cnfa

- Directly access USB devices. Example in https://github.com/cnlohr/androidusbtest

So it is possible to write an NDK UI in something other than Java.

A little bit of this also has to do to stick it to all those Luddites on the internet who post "that's impossible" or "you're doing it wrong" to Stack Overflow questions... Requesting permissions in the JNI "oh you have to do that in Java" or other dumb stuff like that. I am completely uninterested in your opinions of what is or is not possible. This is computer science. There aren't restrictions. I can do anything I want. It's just bits. You don't own me.

You can access the Android API from C, just as Java can:

P.S. If you want a bunch of examples of how to do a ton of things in C on Android that you "need" java for, scroll to the bottom of this file: https://github.com/cntools/rawdraw/blob/master/CNFGEGLDriver.c - it shows how to use the JNI to marshall a ton of stuff to/from the Android API without needing to jump back into Java/Kotlin land.


Question

user1717079, updated on July 03, 2022

After reading the related docs, I don't get whether I can create things like buttons or other UI elements used to get user input using only C++/C code compiled with the NDK.

There are no problems when I want to handle a "window" or activity that needs to stay in focus, but I don't get how to build a UI with elements for callbacks and user input.

It's strange that there is a windowing framework in place but without any trace of callbacks for UI elements.

Can I build touch buttons or a virtual gamepad with the NDK?

I appreciate the effort and the fact that we are getting closer to my point, but apparently I wasn't explaining myself well enough.

I found this image here

Now my problem and the focus of this question is:

Supposing that I can place and draw this virtual joystick, how can I detect only the movements and have a callback like Joystick.onUp or Joystick.onDown with Android and using only the NDK?

If there are no callbacks of this kind available from the NDK, am I supposed to detect the [x,y] position every time, compare it to the location of my joystick, store the previous position, and compare the previous position and the next one to get the direction?

Since the sensor throws events at a really fast rate, I think that building this on my own, considering only the raw X,Y pair, will end up as a really inefficient control system, because it will not be optimized at the OS level with the appropriate sensor calls.

According to the NativeActivity example, it's also unclear how to handle multiple touch points; for example, how can I handle 2 touch events at the same time?

Just consider the image above and think about having only the x,y coordinates for 1 touch point, and how I can solve this in an efficient way that is supported by the NDK.

Thanks.

Comments

user1717079 (over 11 years): This is the usual approach; I was asking about a 100% native activity. So I need to use the JNI and I can't avoid this? Supposing that I have the code base for my engine in C/C++, your hint is about calling Java functions with the JNI? In this case, what is the entry point of my application: Java or C/C++? I suppose that in this scenario I have to build the entire UI, window included, with Java, so my entry point is the Java code, but what about connecting the EGL native buffer with Java? I get your hint, but the implementation is not clear to me, especially for the EGL buffer part.

Seva Alekseyev (over 11 years): There's a difference between a 100% native activity that's still a Java class (with all its methods being native), and a NativeActivity. The former can and should use Android layouts, the latter cannot. Decide which one you want. Is it a game? If not, then probably the former. Yes, to call back into Java you need JNI. Also, SO is not a discussion forum - if you have additional questions, ask them as new questions.

user1717079 (over 11 years): I was always talking about a NativeActivity. I would like to deal only with my codebase in C/C++, only with the NDK; can you confirm or adapt your answer? Both the NDK language and the NDK example use the term NativeActivity, so by native I mean 100% C/C++. Can you clarify this, and I will ask about EGL in another question? Again, is there a chance to build UI callbacks only with the NDK? And yes, it's about a game or something similar with OpenGL-related technologies.

Seva Alekseyev (over 11 years): For the record, NativeActivity is NOT the same as a purely C++ activity. NativeActivity is one mode of wrapping native code, but not the only one.

user1717079 (over 11 years): <ndk root>/samples/native-activity: can I build a UI with code like this?

Seva Alekseyev (over 11 years): Saw the example - it sets itself up for purely GLES drawing. It depends on what you are calling "UI". If you mean "UI with builtin controls" - then probably no. If you mean "some means of interacting with the user" - then yes. As long as you do your own drawing and touch processing. You know, creating a button-like control by hand is possible and not even that hard.
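As an illustration of that last remark, the touch-handling half of a hand-made button could look like this sketch. `TouchButton` and its methods are hypothetical names; drawing the rectangle (with GLES or otherwise) is left out, and the coordinates are assumed to be the same screen coordinates the touch events report.

```cpp
#include <cassert>
#include <functional>
#include <utility>

// Hypothetical hand-made button: a rectangle that fires a callback when a
// touch both starts and ends inside it.
class TouchButton {
public:
    TouchButton(float left, float top, float width, float height,
                std::function<void()> onClick)
        : left_(left), top_(top), width_(width), height_(height),
          onClick_(std::move(onClick)), pressed_(false)
    {
        assert(width > 0.0f && height > 0.0f);
    }

    bool contains(float x, float y) const
    {
        return x >= left_ && x < left_ + width_ &&
               y >= top_ && y < top_ + height_;
    }

    // Feed this from the "finger down" event.
    void touchDown(float x, float y) { pressed_ = contains(x, y); }

    // Feed this from the "finger up" event.
    void touchUp(float x, float y)
    {
        const bool clicked = pressed_ && contains(x, y);
        pressed_ = false;
        if (clicked && onClick_) onClick_();
    }

    // Lets the drawing code render a pressed look.
    bool pressed() const { return pressed_; }

private:
    float left_, top_, width_, height_;
    std::function<void()> onClick_;
    bool pressed_;
};
```

Requiring both the press and the release to land inside the rectangle lets the user cancel by sliding the finger off before lifting it.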

user1717079 (over 11 years): Can you please give me a hint or a solution for building my own UI system with callbacks? The basic idea is really simple: I need to detect touch movements and touch events, kind of like a virtual joystick and buttons, and I don't know how to deal with this in an efficient way. Please help, thanks.

Seva Alekseyev (over 11 years): Edited. There's a callback in the NativeActivity glue that receives touch events.

user1717079 (over 11 years): I have looked at this example before. I have added more details to my original post; I hope it is clearer now, thanks.

user1717079 (over 11 years): It's not really a problem, but a callback like "Joystick.onUp" could keep my code much closer to an "idiomatic approach" and avoid all the state-machine-related code. Having OS-optimized code could also have a positive effect on the looper and event queue management. It's just that most of the things required by the NDK are not really about being native; they look more like a really raw approach, and I think that a few more optimized callbacks could do the trick. By the way, thanks, some things are clearer now.

Seva Alekseyev (over 11 years): Just split your app into logical layers (at least in your head). The low level touch processing layer would generate callbacks to the upper layer, which would be nice and idiomatic. For the record, there's no support for virtual joysticks in the Java world either.
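A minimal sketch of that layering, under a few assumptions: it handles the vertical axis only, uses screen coordinates where y grows downward, and every name in it (`JoystickCallbacks`, `JoystickLayer`, `touchMoved`, `touchReleased`) is hypothetical. The lower layer keeps the state machine to itself and calls the upper layer only when the state changes, which gives roughly the Joystick.onUp / Joystick.onDown shape asked for in the question.

```cpp
#include <cassert>
#include <functional>
#include <utility>

// Upper layer: what the game logic wants to hear about.
struct JoystickCallbacks {
    std::function<void()> onUp;
    std::function<void()> onDown;
    std::function<void()> onNeutral;
};

// Lower layer: turns raw touch coordinates into state changes.
class JoystickLayer {
public:
    JoystickLayer(float centerY, float deadZone, JoystickCallbacks callbacks)
        : centerY_(centerY), deadZone_(deadZone), callbacks_(std::move(callbacks))
    {
        assert(deadZone >= 0.0f);
    }

    // Feed these from the native input callback.
    void touchMoved(float y) { setState(classify(y)); }
    void touchReleased() { setState(State::Neutral); }

private:
    enum class State { Neutral, Up, Down };

    State classify(float y) const
    {
        const float dy = y - centerY_;
        if (dy < -deadZone_) return State::Up;  // above the center
        if (dy > deadZone_) return State::Down;
        return State::Neutral;
    }

    void setState(State next)
    {
        if (next == state_) return;  // report changes only
        state_ = next;
        const std::function<void()>& callback =
            next == State::Up   ? callbacks_.onUp :
            next == State::Down ? callbacks_.onDown : callbacks_.onNeutral;
        if (callback) callback();
    }

    float centerY_;
    float deadZone_;
    JoystickCallbacks callbacks_;
    State state_ = State::Neutral;
};
```

However many move events the touch screen delivers while the finger stays in the same zone, the upper layer hears nothing until the state actually changes.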
