Is it possible to detach and reattach a ZFS disk without requiring a full resilver?

Solution 1

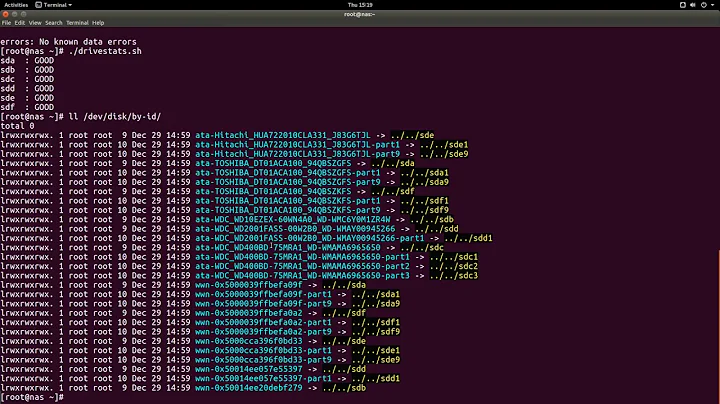

After further experimentation I've found a fair solution, although it comes with a significant trade-off. Disks which have been offline'd but not detached can later be brought back online with only an incremental resilvering operation ("When a device is brought online, any data that has been written to the pool is resynchronized with the newly available device."). In my tests this brings resilvering time for a 3-disk mirror down from 28 hours to a little over 30 minutes, with about 40 GB of data-delta.

The trade-off is that any pool with an offline disk will be flagged as degraded. Provided there are still at least two online disks (in a mirrored pool) this is effectively a warning--integrity and redundancy remain intact.

As others mentioned this overall approach is far from ideal--sending snapshots to a remote pool would be far more suitable, but in my case is not feasible.

To summarize, if you need to remove a disk from a pool and later add it back without requiring a full resilvering then the approach I'd recommend is:

- offline the disk in the pool:

zpool offline pool disk

- spin down the drive (if it is to be physically pulled):

hdparm -Y /dev/thedisk

- leave the pool in a degraded state with the drive offlined

- to add the disk back to the pool:

zpool online pool disk
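The steps above can be sketched as a small shell helper. This is only a sketch under stated assumptions: the pool name `tank` and the device name `sdc` are hypothetical placeholders, and with `DRY_RUN=1` (the default here) the commands are printed instead of executed.

```shell
#!/bin/sh
# Sketch of the offline -> pull -> online cycle.
# POOL and DISK are hypothetical placeholders; adjust them to your system.
POOL="${POOL:-tank}"
DISK="${DISK:-sdc}"
DRY_RUN="${DRY_RUN:-1}"   # 1 = only print the commands, 0 = really run them

# Print the command in dry-run mode, otherwise execute it.
run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# Take the disk out of service; the pool stays DEGRADED until it returns.
remove_disk() {
    run zpool offline "$POOL" "$DISK"
    run hdparm -Y "/dev/$DISK"      # spin the drive down before pulling it
}

# Bring the disk back; ZFS then resilvers only what changed while it was offline.
return_disk() {
    run zpool online "$POOL" "$DISK"
    run zpool status "$POOL"        # shows resilver progress
}
```

Calling `remove_disk` before pulling the drive and `return_disk` after reseating it mirrors the list above; set `DRY_RUN=0` only after checking the printed commands.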

And, since this is as-yet untested, there is the risk that the delta resilvering operation is not accurate. The "live" pool and/or the offline disks may experience issues. I'll update if that happens to me, but for now will experiment with this approach.

Solution 2

Don't go down the road of breaking the ZFS array to "rotate" disks offsite. As you've seen, the rebuild time is high and the resilvering process will read/verify the used size of the dataset.

If you have the ability, snapshots and sending data to a remote system is a clean, non-intrusive approach. I suppose you could go through the process of having a dedicated single-disk pool, copy to it, and zpool export/import... but it's not very elegant.
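For the dedicated single-disk pool variant, a rough sketch of the snapshot, send and export/import cycle might look like the following. All names here are assumptions for illustration: `tank` is the live pool, `offsite` is the single-disk backup pool, and `PREV`/`NEW` are snapshot names. The function only prints the commands so they can be reviewed first; it also assumes the names contain no spaces.

```shell
#!/bin/sh
# Sketch: print (not run) the commands that refresh a single-disk backup pool.
# SRC, DST, PREV and NEW are hypothetical names chosen for this example.
SRC="${SRC:-tank}"          # live pool
DST="${DST:-offsite}"       # single-disk backup pool
PREV="${PREV:-}"            # last snapshot already on the backup pool (empty = first run)
NEW="${NEW:-backup-new}"    # snapshot to create for this run

backup_plan() {
    echo "zpool import $DST"
    echo "zfs snapshot -r $SRC@$NEW"
    if [ -n "$PREV" ]; then
        # Incremental stream: only changes between PREV and NEW are sent.
        echo "zfs send -R -i $SRC@$PREV $SRC@$NEW | zfs receive -F $DST/$SRC"
    else
        # First run: send the full replication stream.
        echo "zfs send -R $SRC@$NEW | zfs receive -F $DST/$SRC"
    fi
    # Export cleanly before the disk is unplugged.
    echo "zpool export $DST"
}
```

Once the printed plan looks right, it could be executed with `backup_plan | sh`. Unlike the mirror-breaking approach, the live pool never goes degraded here.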

Solution 3

Update on 2015 Oct 15: Today I discovered the zpool split command, which splits a new pool (with a new name) off of an existing pool. split is much cleaner than offline and detach, as both pools can then exist (and be scrubbed separately) on the same system. The new pool can also be cleanly (and properly) export[ed] prior to being unplugged from the system.
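A minimal sketch of the split workflow follows. The pool names `tank` and `offsite` and the device `sdd` are hypothetical, and the commands are only printed while `DRY_RUN=1`. The sketch assumes the split-off pool is not imported automatically, which is my understanding of the default behaviour; drop the import step if your system already shows the new pool as imported.

```shell
#!/bin/sh
# Sketch of rotating a backup disk with zpool split.
# POOL, NEWPOOL and DISK are hypothetical names for this example.
POOL="${POOL:-tank}"
NEWPOOL="${NEWPOOL:-offsite}"
DISK="${DISK:-sdd}"
DRY_RUN="${DRY_RUN:-1}"   # 1 = only print the commands, 0 = really run them

run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# Split DISK off the mirror into a new, independent pool called NEWPOOL.
split_backup() {
    run zpool split "$POOL" "$NEWPOOL" "$DISK"
}

# Assumption: the new pool is left un-imported after the split.
verify_backup() {
    run zpool import "$NEWPOOL"
    run zpool scrub "$NEWPOOL"
    run zpool status "$NEWPOOL"
}

# Run this once the scrub has finished, before unplugging the disk.
release_backup() {
    run zpool export "$NEWPOOL"
}
```

As far as I know, putting the disk back into the original mirror afterwards means attaching it again and accepting a full resilver, so this fits the "complete rewrite every month" style of rotation rather than the incremental one.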

(My original post follows below.)

Warning! Various comments on this page imply that it is (or might be) possible to zpool detach a drive, and then somehow reattach the drive and access the data it contains.

However, according to this thread (and my own experimentation), zpool detach removes the "pool information" from the detached drive. In other words, a detach is like a quick reformatting of the drive. After a detach lots of data may still be on the drive, but it will be practically impossible to remount the drive and view the data as a usable filesystem.

Consequently, it appears to me that detach is more destructive than destroy, as I believe zpool import can recover destroyed pools!

A detach is not a umount, nor a zpool export, nor a zpool offline.

In my experimentation, if I first zpool offline a device and then zpool detach the same device, the rest of the pool forgets the device ever existed. However, because the device itself was offline[d] before it was detach[ed], the device itself is never notified of the detach. Therefore, the device itself still has its pool information, and can be moved to another system and then import[ed] (in a degraded state).

For added protection against detach you can even physically unplug the device after the offline command, yet prior to issuing the detach command.

I hope to use this offline, then detach, then import process to back up my pool. Like the original poster, I plan on using four drives, two in a constant mirror, and two for monthly, rotating, off-site (and off-line) backups. I will verify each backup by importing and scrubbing it on a separate system, prior to transporting it off-site. Unlike the original poster, I do not mind rewriting the entire backup drive every month. In fact, I prefer complete rewrites so as to have fresh bits.
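The offline, then detach, then import process described above could be sketched roughly as follows. The names `tank` and `sdd` are hypothetical, nothing is executed while `DRY_RUN=1`, and the `-f` on the import is an assumption on my part: it may be needed because the pool on the pulled disk was never exported.

```shell
#!/bin/sh
# Sketch of the offline -> unplug -> detach -> import-elsewhere process.
# POOL and DISK are hypothetical names for this example.
POOL="${POOL:-tank}"
DISK="${DISK:-sdd}"
DRY_RUN="${DRY_RUN:-1}"   # 1 = only print the commands, 0 = really run them

run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# Step 1 (main system): offline the disk, then physically unplug it.
offline_disk() {
    run zpool offline "$POOL" "$DISK"
}

# Step 2 (main system, disk already unplugged): make the pool forget the disk.
forget_disk() {
    run zpool detach "$POOL" "$DISK"
}

# Step 3 (separate system): import the pulled disk as a degraded pool and scrub it.
verify_on_other_host() {
    run zpool import                # lists pools available for import
    run zpool import -f "$POOL"     # -f is an assumption, see the note above
    run zpool scrub "$POOL"
    run zpool status "$POOL"
}
```

Keeping the unplug between `offline_disk` and `forget_disk` is what protects the pool information on the backup disk.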

STW

Updated on September 18, 2022

Comments

-

STW almost 2 years

I have a ZFS mirrored pool with four total drives. Two of the drives are intended to be used for rotating offsite backups. My expectation was that after the initial resilvering I could detach and later attach a disk and have it only do an incremental resilver--however in testing it appears to perform a full resilver regardless of whether or not the disk being attached already contains nearly all of the pool contents.

Would using an offline/online approach give me the desired result of only updating the disk--rather than fully rebuilding it? Or to have this work as expected will I need to do something entirely different--such as using each backup disk as a 1-disk pool and sending the newest snapshots to it whenever it needs to be brought up to date?

-

Philip over 9 years

-1 Don't detach/attach drives for backup, use the send/receive commands as the ZFS designers intended.

-

STW over 9 years

@ChrisS instead of a -1, how about writing an answer with some citations? It sounds like you're saying the only option for backups is an online pool somewhere else--which would be great to know if it's true, but I suspect isn't the case.

-

Philip over 9 years

Sorry, I don't intend to be an arrogant jerk, but Server Fault is supposed to be for Professional System Administrators (et al) only. The breaking-mirrors method of backups is so wholly unmanageable, error-prone, and unprofessional that it shouldn't be considered a viable backup method. What I am suggesting is that you format the two backup drives with whatever file system you want, and use the zfs send command to take full or incremental backup streams saved to the backup disks, or use zfs recv to make a duplicate disk. I would highly recommend using some kind of software to manage this process.

-

STW over 9 years

I think your points are valid; I'd upvote it as an answer. I'm considering rewriting my question to focus less on my specific scenario (which arises from a shoestring budget for a non-critical, yet important, in-house server) and more on the core "can I reattach a drive without requiring a full resilvering?"

-

-

STW over 9 years

Unfortunately I can't use a snapshot->send approach since I don't have the hardware or bandwidth to run a second ZFS server offsite. However it appears that using offline/online will work, with the tradeoff that the status reports as degraded. I'll see how it goes for the next week or so.

-

ewwhite over 9 years

Understood. But pulling running disks out of a system as a form of backup is not a solid solution. Your risk increases drastically when you do this.

-

STW over 9 years

Good point. My plan is to offline them, suspend them, unseat their hot-swap tray, and then give it a minute to ensure a full stop before fully pulling it.

-

Dan Is Fiddling By Firelight over 9 years

Can you operate a second server onsite (or even a 2nd ZFS array in the same server)? Put your hotswap bays in it, sync between it and the main one, and then rotate the entire backup ZFS array in/out of the server as a unit.

-

the-wabbit over 9 years

If the resilver is going to introduce data errors, these are going to heal automatically over time or upon a zpool scrub.

-

STW over 9 years

I've realized the value of a scrub; I wait until after a successful scrub to offline and remove the backup disk.

-

STW over 8 years

Just a quick update: over the past year this approach has worked well enough. Monthly restore tests of the offsite backup have been successful and consistent. Rotating an array (rather than a single disk) would be better to provide a level of redundancy in the offsite copy, and I would recommend doing that if possible. Overall this is still a hackish approach and does introduce some risk, but has provided a reasonably safe and inexpensive offsite backup of our data.

-

Costin Gușă over 5 years

I would argue against rotating all drives in the array, as the transport can slowly damage all of them. I would not do the rotation even if the drives remained on-site.