Jacobian and Hessian inputs in `scipy.optimize.minimize`

Solution 1

That error is coming from the calls to Jacobian and Hessian, not in minimize. Replacing Jacobian(fun) with Jacobian(lambda x: fun(x, a)) and similarly for Hessian should do the trick (since now the function being differentiated only has a single vector argument).

One other thing: (a) is just a, if you want it to be a tuple use (a,).

import numpy as np

from scipy.optimize import minimize

from numdifftools import Jacobian, Hessian

def fun(x, a):

return (x[0] - 1) **2 + (x[1] - a) **2

def fun_der(x, a):

return Jacobian(lambda x: fun(x, a))(x).ravel()

def fun_hess(x, a):

return Hessian(lambda x: fun(x, a))(x)

x0 = np.array([2, 0]) # initial guess

a = 2.5

res = minimize(fun, x0, args=(a,), method='dogleg', jac=fun_der, hess=fun_hess)

print(res)

Solution 2

I get that this is a toy example, but I would like to point out that using a tool like Jacobian or Hessian to calculate the derivatives instead of deriving the function itself is fairly costly. For example with your method:

x0 = np.array([2, 0])

a = 2.5

%timeit minimize(fun, x0, args=(a,), method='dogleg', jac=fun_der, hess=fun_hess)

100 loops, best of 3: 13.6 ms per loop

But you could calculate the derivative functions as such:

def fun_der(x, a):

dx = 2 * (x[0] - 1)

dy = 2 * (x[1] - a)

return np.array([dx, dy]

def fun_hess(x, a):

dx = 2

dy = 2

return np.diag([dx, dy])

%timeit minimize(fun, x0, args=(a,), method='dogleg', jac=fun_der, hess=fun_hess)

1000 loops, best of 3: 279 µs per loop

As you can see that is almost 50x faster. It really starts to add up with complex functions. As such I always try to derive the functions explicitly myself, regardless of how difficult that may be. One fun example is the kernel based implementation of Inductive Matrix Completion.

argmin --> sum((A - gamma_u(X) Z gamma_v(Y))**2 - lambda * ||Z||**2)

where gamma_u = (1/sqrt(m_x)) * [cos(UX), sin(UX)] and

gamma_v = (1/sqrt(m_y)) * [cos(VY), sin(VY)]

X.shape = n_x, p; Y.shape = n_y, q; U.shape = m_x, p; V.shape = m_y, q; Z.shape = 2m_x, 2m_y

Calculating the gradient and hessian from this equation is extremely unreasonable in comparison to explicitly deriving and utilizing those functions. So as @bnaul pointed out, if your function does have closed form derivates you really do want to calculate and use them.

Related videos on Youtube

Medulla Oblongata

If you want to build a ship, don't drum up people to collect wood and don't assign them tasks and work, but rather teach them to long for the endless immensity of the sea.

Updated on September 14, 2022Comments

-

Medulla Oblongata over 1 year

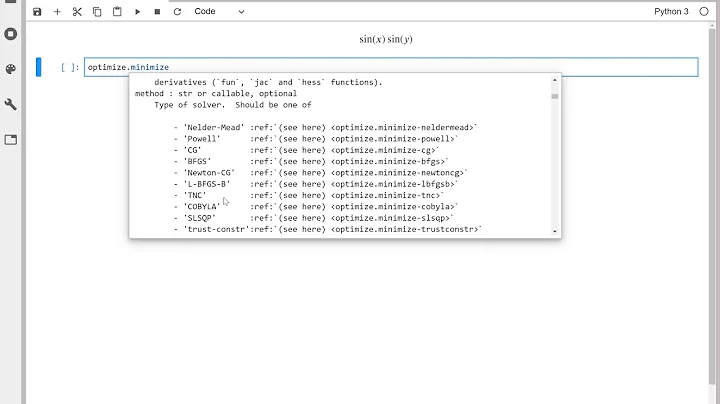

Medulla Oblongata over 1 yearI am trying to understand how the "dogleg" method works in Python's

scipy.optimize.minimizefunction. I am adapting the example at the bottom of the help page.The dogleg method requires a Jacobian and Hessian argument according to the notes. For this I use the

numdifftoolspackage:import numpy as np from scipy.optimize import minimize from numdifftools import Jacobian, Hessian def fun(x,a): return (x[0] - 1)**2 + (x[1] - a)**2 x0 = np.array([2,0]) # initial guess a = 2.5 res = minimize(fun, x0, args=(a), method='dogleg', jac=Jacobian(fun)([2,0]), hess=Hessian(fun)([2,0])) print(res)Edit:

If I make a change as suggested by a post below,

res = minimize(fun, x0, args=a, method='dogleg', jac=Jacobian(lambda x: fun(x,a)), hess=Hessian(lambda x: fun(x,a)))I get an error

TypeError: <lambda>() takes 1 positional argument but 2 were given. What am I doing wrong?Also is it correct to calculate the Jacobian and Hessian at the initial guess

x0? -

Medulla Oblongata over 7 yearsThanks. If I make those changes to

Medulla Oblongata over 7 yearsThanks. If I make those changes toJacobianandHessianI get an errorValueError: The truth value of an array with more than one element is ambiguous. Use a.any() or a.all(). Also if I want to specify more than 1 argument, would I then need to use something likeargs=(a,b,c)? -

bnaul over 7 yearsIn this particular case you actually want to pass in a callable Jacobian function so you should leave off the

([2, 0])(I edited my response to reflect this). -

Medulla Oblongata over 7 yearsOK... If I do that, I get

Medulla Oblongata over 7 yearsOK... If I do that, I getTypeError: <lambda>() takes 1 positional argument but 2 were given. Then if I try usinglambda x,a: fun(x, a)as theJacobianandHessianinputs I getValueError: incompatible dimensions. -

bnaul over 7 yearsThinking a bit more about this: there's really no reason you would ever want to do this anyway. If you don't have a closed-form derivative you should be using a method that doesn't require one; numerically computing the derivatives like this is extremely inefficient.

-

Medulla Oblongata over 7 yearsFair point, but I specifically need to solve my problem using the "dogleg" algorithm in Python (which requires the Jacobian and Hessian). I'm not concerned with efficiency of the code yet, I just want to know how the

Medulla Oblongata over 7 yearsFair point, but I specifically need to solve my problem using the "dogleg" algorithm in Python (which requires the Jacobian and Hessian). I'm not concerned with efficiency of the code yet, I just want to know how thescipy.optimize.minimize(method='dogleg')function works. -

denis over 5 yearsdon't know

numdifftools, but couldn't you calculatejacf = Jacobian(lambda x: fun(x, 2.5))just once, thenminimize( ... jac=jacf, hess=hessf )? -

Grr over 5 years@denis I think you meant to direct that towards bnaul I don't use numdifftools or these methods. As I mention, I like to derive the functions directly if possible to avoid the overhead of repeatedly calculating them at each iteration.