Java BufferedReader back to the top of a text file?

Solution 1

What's the disadvantage of just creating a new BufferedReader to read from the top? I'd expect the operating system to cache the file if it's small enough.
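A minimal sketch of the "just open a new reader" approach, assuming a UTF-8 text file. The class name ReopenPerPass and the helper forEachLine are hypothetical. Each pass opens its own BufferedReader through Files.newBufferedReader, so there is no position to rewind.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.List;
import java.util.function.Consumer;

class ReopenPerPass {
    // Runs one pass over the file. Each call opens a fresh BufferedReader,
    // so every pass starts at the top; try-with-resources closes it afterwards.
    static void forEachLine(Path path, Consumer<String> action) throws IOException {
        try (BufferedReader reader = Files.newBufferedReader(path, StandardCharsets.UTF_8)) {
            String line;
            while ((line = reader.readLine()) != null) {
                action.accept(line);
            }
        }
    }

    public static void main(String[] args) throws IOException {
        Path path = Files.createTempFile("passes", ".txt");
        Files.write(path, List.of("alpha", "beta", "gamma"), StandardCharsets.UTF_8);

        // Pass 1: find the length of the longest line.
        int[] longest = {0};
        forEachLine(path, line -> longest[0] = Math.max(longest[0], line.length()));

        // Pass 2: print only the lines that have that length.
        forEachLine(path, line -> {
            if (line.length() == longest[0]) {
                System.out.println(line);
            }
        });

        Files.delete(path);
    }
}
```

If the file is small, the operating system will usually serve the second open from its cache, which is the point made above.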

If you're concerned about performance, have you proved it to be a bottleneck? I'd just do the simplest thing and not worry about it until you have a specific reason to. I mean, you could just read the whole thing into memory and then do the two passes on the result, but again that's going to be more complicated than just reading from the start again with a new reader.
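If the file does fit comfortably in memory, the read-it-once alternative might look like this sketch. InMemoryPasses and countLongerThanAverage are made-up names, and the "average line length" task is only a stand-in for whatever two-pass computation you actually need.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.List;

class InMemoryPasses {
    // Loads every line at once; only sensible when the file comfortably fits in memory.
    static List<String> load(Path path) throws IOException {
        return Files.readAllLines(path, StandardCharsets.UTF_8);
    }

    // Two passes over the in-memory list: the first computes the average line
    // length, the second counts the lines longer than that average.
    static long countLongerThanAverage(List<String> lines) {
        double average = lines.stream().mapToInt(String::length).average().orElse(0);
        return lines.stream().filter(line -> line.length() > average).count();
    }

    public static void main(String[] args) throws IOException {
        Path path = Files.createTempFile("inmemory", ".txt");
        Files.write(path, List.of("a", "bb", "cccccc"), StandardCharsets.UTF_8);
        List<String> lines = load(path);
        System.out.println(countLongerThanAverage(lines)); // 1
        Files.delete(path);
    }
}
```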

Solution 2

BufferedReader is meant to read a file sequentially. What you are looking for is java.io.RandomAccessFile; its seek() method takes you to any position in the file.

A RandomAccessFile can be used like so:

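import java.io.File;
import java.io.IOException;
import java.io.RandomAccessFile;

public class RafExample {
    public static void main(String[] args) {
        String fileName = "c:/myraffile.txt";
        File file = new File(fileName);

        // "rw" opens the file for reading and writing (creating it if it does not exist);
        // "r" is enough if you only need to read.
        // try-with-resources closes the file when the block ends.
        try (RandomAccessFile raf = new RandomAccessFile(file, "rw")) {
            // First pass. readLine() reads up to the next line terminator,
            // treating each byte as one character (no charset decoding).
            String line;
            while ((line = raf.readLine()) != null) {
                System.out.println(line);
            }

            // Jump back to the top of the file for another pass.
            raf.seek(0);
            System.out.println("First line again: " + raf.readLine());
        } catch (IOException e) {
            // Also covers FileNotFoundException, which is a subclass of IOException.
            e.printStackTrace();
        }
    }
}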

The "rw" argument is the access mode. The RandomAccessFile constructor documentation lists the valid modes: "r", "rw", "rws" and "rwd".

Sequential readers are set up this way so that they can maintain their buffers and so that nothing can change beneath their feet. For example, the FileReader passed to a BufferedReader should be operated on only by that BufferedReader. If some other code could also affect it, you could get inconsistent behavior: one reader would advance the position in the underlying FileReader while the other expected it to stay put, so the next time you used the other reader it would be at an undetermined location.
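The hazard described above is easy to reproduce. In this hypothetical sketch (SharedReaderPitfall is a made-up name), two BufferedReaders wrap the same StringReader. Because the input is much smaller than the default buffer, the first readLine() call pulls all of it into the first reader's buffer, and the second reader finds the shared source already exhausted. With larger inputs the exact split depends on buffer sizes, so treat this as an illustration of the problem rather than a precise model of it.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.Reader;
import java.io.StringReader;

class SharedReaderPitfall {
    // Returns what the second BufferedReader sees after the first one reads a single line.
    static String secondReaderAfterFirstReadsOneLine(String text) throws IOException {
        Reader shared = new StringReader(text);              // one underlying reader...
        BufferedReader first = new BufferedReader(shared);   // ...wrapped twice
        BufferedReader second = new BufferedReader(shared);
        first.readLine();          // fills first's buffer, draining the small shared input
        return second.readLine();  // the shared reader is already at its end
    }

    public static void main(String[] args) throws IOException {
        System.out.println(secondReaderAfterFirstReadsOneLine("line1\nline2\nline3\n")); // null
    }
}
```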

Solution 3

The best way to proceed is to change your algorithm so that you do NOT need the second pass. I have used this approach a couple of times when I had to deal with huge (but not enormous, i.e. a few GB) files that didn't fit in the available memory.

It might be hard, but the performance gain is usually worth the effort.
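As one illustration of removing a second pass (not taken from the original answer): computing the variance of a column of numbers naively takes two passes, one for the mean and one for the squared deviations. Welford's online algorithm updates both in a single pass. The input format (one number per line) and the names OnePassStats and meanAndVariance are assumptions for this sketch.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.StringReader;

class OnePassStats {
    // Running mean and population variance, updated one value at a time (Welford's algorithm).
    // Returns {mean, variance}; variance is NaN if no values were read.
    static double[] meanAndVariance(BufferedReader reader) throws IOException {
        long n = 0;
        double mean = 0.0;
        double m2 = 0.0; // sum of squared deviations from the running mean
        String line;
        while ((line = reader.readLine()) != null) {
            if (line.isBlank()) {
                continue; // skip empty lines
            }
            double x = Double.parseDouble(line.trim());
            n++;
            double delta = x - mean;
            mean += delta / n;
            m2 += delta * (x - mean); // uses the updated mean
        }
        double variance = n > 0 ? m2 / n : Double.NaN;
        return new double[] {mean, variance};
    }

    public static void main(String[] args) throws IOException {
        BufferedReader in = new BufferedReader(new StringReader("2\n4\n4\n4\n5\n5\n7\n9\n"));
        double[] stats = meanAndVariance(in);
        System.out.println("mean=" + stats[0] + " variance=" + stats[1]);
    }
}
```

Floating-point rounding can differ slightly from the two-pass formula, so compare results with a tolerance rather than exact equality.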

Solution 4

About mark/reset:

The mark method in BufferedReader takes a readAheadLimit parameter, which limits how far you can read after a mark before reset becomes impossible. Resetting doesn't actually mean a file system seek(0); it just moves back within the buffer. To quote the Javadoc:

readAheadLimit - Limit on the number of characters that may be read while still preserving the mark. After reading this many characters, attempting to reset the stream may fail. A limit value larger than the size of the input buffer will cause a new buffer to be allocated whose size is no smaller than limit. Therefore large values should be used with care.
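A sketch of the mark/reset route, under the assumption that the whole input fits in the buffer. MarkResetPasses and countTwice are hypothetical names. readAheadLimit is set one character larger than the input: in OpenJDK's BufferedReader, the refill attempted at end of input invalidates the mark once the number of characters read since mark() reaches the limit, so the extra character is headroom (treat this detail as implementation-specific). For a real UTF-8 file you could derive the limit from Files.size(path) + 1 when that fits in an int, since the byte count is an upper bound on the char count, but as the Javadoc warns, that allocates a buffer as large as the file.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.StringReader;

class MarkResetPasses {
    // Marks the start, counts all lines, resets to the mark, and counts them again.
    // readAheadLimit must cover every char read between mark() and reset().
    static int[] countTwice(BufferedReader reader, int readAheadLimit) throws IOException {
        reader.mark(readAheadLimit);
        int first = 0;
        while (reader.readLine() != null) {
            first++;
        }
        reader.reset(); // back to the mark, served from the buffer rather than a file seek
        int second = 0;
        while (reader.readLine() != null) {
            second++;
        }
        return new int[] {first, second};
    }

    public static void main(String[] args) throws IOException {
        String text = "alpha\nbeta\ngamma\n";
        BufferedReader reader = new BufferedReader(new StringReader(text));
        // One char of headroom beyond the input length (see the note above).
        int[] counts = countTwice(reader, text.length() + 1);
        System.out.println(counts[0] + " " + counts[1]); // 3 3
    }
}
```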

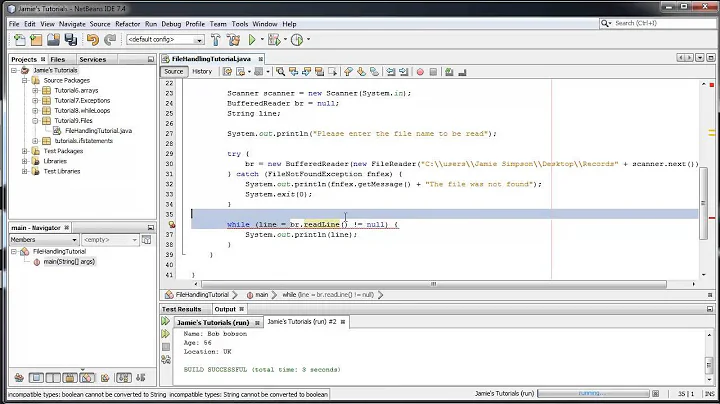

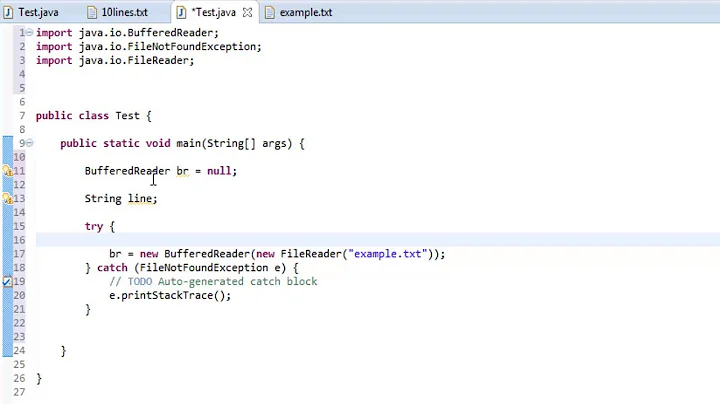

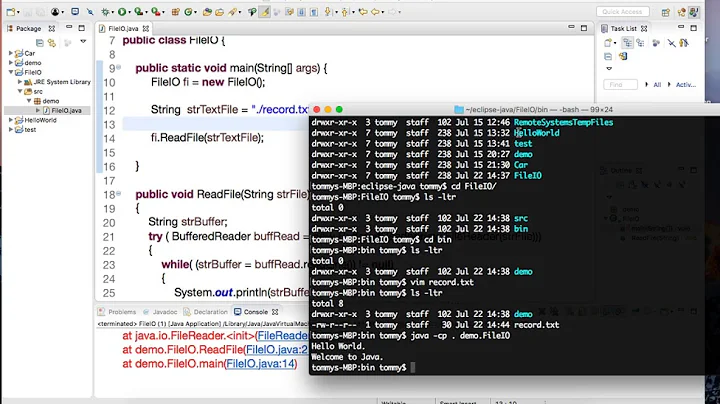

Related videos on Youtube

Jaroslaw Pawlak

I did an MSci in Computer Science at King's College London and graduated in 2013. I have been programming since 2009, mostly in Java and JavaScript, but I have also worked with a few other languages such as Scala, Prolog, C++ and Python. I strongly believe in agile methodologies and test-driven development.

Updated on July 09, 2022

Comments

-


Jaroslaw Pawlak almost 2 years

I currently have 2 BufferedReaders initialized on the same text file. When I'm done reading the text file with the first BufferedReader, I use the second one to make another pass through the file from the top. Multiple passes through the same file are necessary.

I know about reset(), but it needs to be preceded by a call to mark(), and mark() needs to know the size of the file, something I don't think I should have to bother with.

Ideas? Packages? Libs? Code?

Thanks TJ

-

over_optimistic over 11 years

Could you elaborate? I have a 30MB file that I cannot load entirely into memory. I have sorted the data and now want to do a binary search directly on the file, for which I need to seek randomly.

-

Davide over 11 years

Nowadays I assume you mean 30GB, unless you are using really small embedded hardware (but then it would be diskless). Anyway, random seeks on disks often completely ruin the logarithmic performance of binary search. A couple of alternatives are 1) sequential access (yes, on disk a sequential search may be faster than a binary search) or 2) a mixed approach such as a B-tree (en.wikipedia.org/wiki/B-tree). If these hints aren't enough, you might want to post your question as a separate one instead of a comment (please post a comment here with a link to the question to ping me).