Java web app in Tomcat periodically freezes up

From experience, you may want to look at your database connection pool implementation. It could be that your database has plenty of capacity, but the connection pool in your application is limited to a small number of connections. I can't remember the details, but I seem to recall having a similar problem; it was one of the reasons I switched to BoneCP, which I've found to be very fast and reliable under load testing.

After trying the debugging suggested below, try increasing the number of connections available in the pool and see if that has any impact.

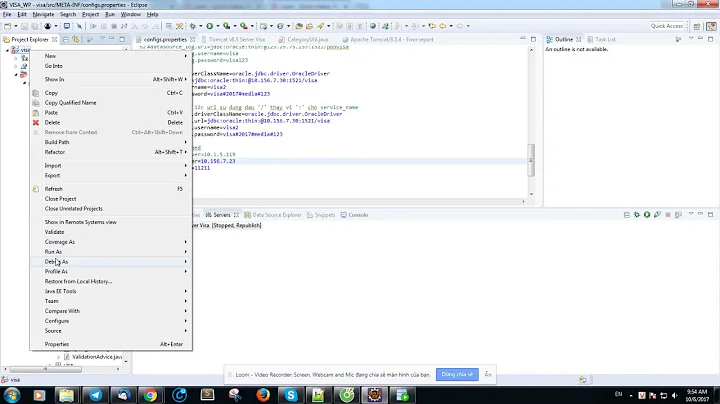

It depends what you mean by thread-safe. It sounds to me as though your application is causing threads to deadlock. You might want to run your production environment with the JVM configured to accept connections from monitoring tools (for example, with remote JMX enabled), and then use JVisualVM, JConsole or another profiling tool (YourKit is excellent IMO) to have a peek at what threads you've got and what they're waiting on.
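One narrow question those tools answer is whether any threads are actually deadlocked. As a minimal sketch, assuming you can run code inside the affected JVM, the standard `java.lang.management.ThreadMXBean` can report lock cycles directly. `DeadlockCheck`, `findDeadlocks`, `awaitDeadlocks` and the demo in `startDeadlockedPair` are hypothetical names for illustration only.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.ThreadInfo;
import java.lang.management.ThreadMXBean;
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.CountDownLatch;

public class DeadlockCheck {

    /** One line per deadlocked thread: its name, the lock it wants, and who holds that lock. */
    static List<String> findDeadlocks() {
        ThreadMXBean mx = ManagementFactory.getThreadMXBean();
        long[] ids = mx.findDeadlockedThreads(); // null when no thread is in a lock cycle
        List<String> report = new ArrayList<>();
        if (ids == null) {
            return report;
        }
        for (ThreadInfo info : mx.getThreadInfo(ids)) {
            if (info != null) { // null if the thread exited after the check
                report.add(info.getThreadName() + " waits on " + info.getLockName()
                        + ", held by " + info.getLockOwnerName());
            }
        }
        return report;
    }

    /** Polls findDeadlocks() until it reports something or the timeout expires. */
    static List<String> awaitDeadlocks(long timeoutMillis) {
        long deadline = System.currentTimeMillis() + timeoutMillis;
        List<String> report = findDeadlocks();
        while (report.isEmpty() && System.currentTimeMillis() < deadline) {
            try {
                Thread.sleep(50);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                break;
            }
            report = findDeadlocks();
        }
        return report;
    }

    /** Demo only: starts two daemon threads that take the same two monitors in opposite order. */
    static void startDeadlockedPair() {
        Object a = new Object();
        Object b = new Object();
        CountDownLatch bothHoldFirstLock = new CountDownLatch(2);
        Thread t1 = new Thread(() -> lockBoth(a, b, bothHoldFirstLock), "worker-1");
        Thread t2 = new Thread(() -> lockBoth(b, a, bothHoldFirstLock), "worker-2");
        t1.setDaemon(true); // daemon threads let the JVM exit despite the deadlock
        t2.setDaemon(true);
        t1.start();
        t2.start();
    }

    private static void lockBoth(Object first, Object second, CountDownLatch latch) {
        synchronized (first) {
            latch.countDown();
            try {
                latch.await(); // proceed only once the other thread holds its first lock
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                return;
            }
            synchronized (second) {
                // never reached: the other thread holds 'second' and is waiting for 'first'
            }
        }
    }

    public static void main(String[] args) {
        System.out.println("before: " + findDeadlocks());
        startDeadlockedPair();
        awaitDeadlocks(5_000).forEach(System.out::println);
    }
}
```

Note that `findDeadlockedThreads()` only reports threads caught in a cycle of monitors or ownable synchronizers. Threads parked waiting for a resource that is never handed back (for example, a connection pool with no free connections) are not in a cycle, so they will not show up here; a full thread dump is still the way to see them.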

tangent

Updated on July 13, 2020

Comments

-

tangent almost 4 years

My Java web app running Tomcat (7.0.28) periodically becomes unresponsive. I'm hoping for some suggestions of possible culprits (synchronization?), as well as some recommended tools for gathering more information about what's occurring during a crash. Some facts that I have accumulated:

When the web app freezes up, Tomcat continues to feed request threads into the app, but the app does not release them. The thread pool fills up to the maximum (currently 250), and then subsequent requests immediately fail. During normal operation, there are never more than 2 or 3 active threads.

There are no errors or exceptions of any kind logged to any of our Tomcat or web app logs when the problem occurs.

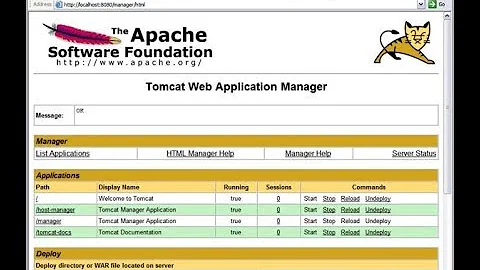

Doing a "Stop" and then a "Start" on our application via the Tomcat management web app immediately fixes this problem (until today).

Lately the frequency has been two or three times a day, though today was much worse: probably 20 times, and sometimes the app did not come back to life immediately.

The problem occurs only during business hours.

The problem does not occur on our staging system.

When the problem occurs, processor and memory usage on the server remains flat (and fairly low). Tomcat reports plenty of free memory.

Tomcat continues to be responsive when the problem occurs. The management web app works perfectly well, and Tomcat continues sending requests into our app until all threads in the pool are occupied.

Our database server remains responsive when the problem occurs. We use Spring framework for data access and injection.

The problem generally occurs when usage is high, but there is never an unusually high spike in usage.

Problem history: something similar occurred about a year and a half ago. After many server config and code changes, the problem disappeared until about a month ago. Within the past few weeks it has occurred much more frequently, an average of 2 or 3 times a day, sometimes several times in a row.

I identified some server code today that may not have been thread-safe, and I put in a fix for that, but the problem is still happening (though less frequently). Is this the sort of problem that non-thread-safe code can cause?

UPDATE: With several posts suggesting database connection pool exhaustion, I did some searching in that direction and found another Stack Overflow question that explains almost all of the problems I'm experiencing.

Apparently, the default values for maxActive and maxIdle in Apache Commons DBCP's BasicDataSource are both 8. Also, maxWait defaults to -1, so when the pool is exhausted and a new request for a connection comes in, the requesting thread waits forever without logging any sort of exception. I'm still going to wait for this problem to happen again and take a jstack dump of the JVM so that I can analyze that information, but it's looking like this is the problem. The only thing it doesn't explain is why the app sometimes doesn't recover from this problem. I suppose the requests just pile up sometimes, and once the app gets behind it can never catch up.
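To see why a maxWait of -1 produces exactly this symptom (threads piling up with nothing logged), here is a toy pool built on `java.util.concurrent.Semaphore`. It is not DBCP's code: `ToyPool`, `borrow`, `release` and `available` are hypothetical names, and only the maxActive/maxWait semantics described above are mirrored.

```java
import java.util.concurrent.Semaphore;
import java.util.concurrent.TimeUnit;

/**
 * Toy model of a bounded connection pool, NOT commons-dbcp itself: at most maxActive
 * "connections" can be out at once, and maxWait < 0 means a borrower blocks forever.
 */
public class ToyPool {
    private final Semaphore permits;
    private final long maxWaitMillis;

    ToyPool(int maxActive, long maxWaitMillis) {
        this.permits = new Semaphore(maxActive, true); // fair: waiting borrowers are served in FIFO order
        this.maxWaitMillis = maxWaitMillis;
    }

    /** Returns true if a "connection" was obtained, false on timeout or interrupt. */
    boolean borrow() {
        try {
            if (maxWaitMillis < 0) {
                permits.acquire(); // no timeout: silently parks until someone calls release()
                return true;
            }
            return permits.tryAcquire(maxWaitMillis, TimeUnit.MILLISECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt(); // keep the interrupt visible to the caller
            return false;
        }
    }

    /** Hands a "connection" back to the pool. */
    void release() {
        permits.release();
    }

    /** Number of "connections" currently available. */
    int available() {
        return permits.availablePermits();
    }

    public static void main(String[] args) throws InterruptedException {
        // maxWait = -1: once the single connection is out, the next borrower hangs with no error.
        ToyPool unbounded = new ToyPool(1, -1);
        unbounded.borrow();
        Thread worker = new Thread(unbounded::borrow, "http-worker");
        worker.start();
        Thread.sleep(200);
        System.out.println("worker state: " + worker.getState());
        worker.interrupt(); // the only way out, since nobody releases the connection
        worker.join();

        // maxWait = 3000 ms: the borrower gives up and the caller can log the failure.
        ToyPool bounded = new ToyPool(1, 3_000);
        bounded.borrow();
        System.out.println("second borrow succeeded? " + bounded.borrow()); // prints false after roughly 3 s
    }
}
```

Raising `maxActive` only widens the pool; a finite `maxWait` is what turns a silent hang into a failure the application can log.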

UPDATE II: I ran a jstack during a crash and found about 250 (max threads) of the following:

    "http-nio-443-exec-294" daemon prio=10 tid=0x00002aaabd4ed800 nid=0x5a5d in Object.wait() [0x00000000579e2000]
       java.lang.Thread.State: WAITING (on object monitor)
        at java.lang.Object.wait(Native Method)
        at java.lang.Object.wait(Object.java:485)
        at org.apache.commons.pool.impl.GenericObjectPool.borrowObject(GenericObjectPool.java:1118)
        - locked <0x0000000743116b30> (a org.apache.commons.pool.impl.GenericObjectPool$Latch)
        at org.apache.commons.dbcp.PoolingDataSource.getConnection(PoolingDataSource.java:106)
        at org.apache.commons.dbcp.BasicDataSource.getConnection(BasicDataSource.java:1044)
        at org.springframework.jdbc.datasource.DataSourceUtils.doGetConnection(DataSourceUtils.java:111)
        at org.springframework.jdbc.datasource.DataSourceUtils.getConnection(DataSourceUtils.java:77)
        at org.springframework.jdbc.core.JdbcTemplate.execute(JdbcTemplate.java:573)
        at org.springframework.jdbc.core.JdbcTemplate.query(JdbcTemplate.java:637)
        at org.springframework.jdbc.core.JdbcTemplate.query(JdbcTemplate.java:666)
        at org.springframework.jdbc.core.JdbcTemplate.query(JdbcTemplate.java:674)
        at org.springframework.jdbc.core.JdbcTemplate.query(JdbcTemplate.java:718)

To my untrained eye, this looks fairly conclusive. It looks like the database connection pool has hit its cap. I configured a maxWait of three seconds without modifying maxActive and maxIdle, just to ensure that we begin to see exceptions logged when the pool fills up. Then I'll set those values to something appropriate and monitor.
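With around 250 near-identical stacks, a quick tally is easier to read than the raw dump. The sketch below assumes the usual jstack text layout (each thread starts with a line beginning with a quoted thread name, followed by a `java.lang.Thread.State:` line) and simply counts threads per state and threads whose stack mentions `GenericObjectPool.borrowObject`. `DumpTally` and its methods are hypothetical; a dedicated analyzer does this kind of grouping far more thoroughly.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

public class DumpTally {

    private static final String STATE_PREFIX = "java.lang.Thread.State: ";

    /** Splits a jstack-style dump into per-thread blocks; each block starts with a line beginning with a quote. */
    static List<String> threadBlocks(String dump) {
        List<String> blocks = new ArrayList<>();
        StringBuilder current = null;
        for (String line : dump.split("\\R")) {
            if (line.startsWith("\"")) {
                if (current != null) {
                    blocks.add(current.toString());
                }
                current = new StringBuilder();
            }
            if (current != null) { // skips header lines before the first thread
                current.append(line).append('\n');
            }
        }
        if (current != null) {
            blocks.add(current.toString());
        }
        return blocks;
    }

    /** Counts threads whose stack mentions the given frame, e.g. "GenericObjectPool.borrowObject". */
    static long countThreadsIn(String dump, String frame) {
        return threadBlocks(dump).stream().filter(block -> block.contains(frame)).count();
    }

    /** Tallies thread states (WAITING, RUNNABLE, ...) from the "java.lang.Thread.State:" lines. */
    static Map<String, Integer> stateTally(String dump) {
        Map<String, Integer> tally = new TreeMap<>();
        for (String block : threadBlocks(dump)) {
            for (String line : block.split("\n")) {
                String trimmed = line.trim();
                if (trimmed.startsWith(STATE_PREFIX)) {
                    String state = trimmed.substring(STATE_PREFIX.length()).split(" ")[0];
                    tally.merge(state, 1, Integer::sum);
                }
            }
        }
        return tally;
    }

    public static void main(String[] args) throws IOException {
        // Usage: java DumpTally jstack-output.txt
        String dump = new String(Files.readAllBytes(Paths.get(args[0])), StandardCharsets.UTF_8);
        System.out.println("states: " + stateTally(dump));
        System.out.println("waiting for a pooled connection: "
                + countThreadsIn(dump, "GenericObjectPool.borrowObject"));
    }
}
```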

UPDATE III: After configuring maxWait, I began to see these in my logs, as expected:

    org.apache.commons.dbcp.SQLNestedException: Cannot get a connection, pool error Timeout waiting for idle object
        at org.apache.commons.dbcp.PoolingDataSource.getConnection(PoolingDataSource.java:114)
        at org.apache.commons.dbcp.BasicDataSource.getConnection(BasicDataSource.java:1044)
        at org.springframework.jdbc.datasource.DataSourceUtils.doGetConnection(DataSourceUtils.java:111)
        at org.springframework.jdbc.datasource.DataSourceUtils.getConnection(DataSourceUtils.java:77)

I've set maxActive to -1 (infinite) and maxIdle to 10. I will monitor for a while, but my guess is that this is the end of the problem.

-

mindas almost 12 years

`kill -3 <pid>` is your friend. Run this and look at the thread dump. You might want to have a look at the Thread Dump Analyzer to group the information (java.net/projects/tda).
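`kill -3` makes the HotSpot JVM print a thread dump to its standard output. When signalling the production process is awkward, a comparable dump can be built from inside the application with `java.lang.management`. This is a minimal sketch: `InProcessDump` is a hypothetical class, its output only resembles jstack's layout, and how you would expose it (say, behind an admin-only URL) is left open.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.ThreadInfo;

public class InProcessDump {

    /** Builds a jstack-like text dump of every live thread, including its full stack. */
    static String dump() {
        StringBuilder out = new StringBuilder();
        // dumpAllThreads(lockedMonitors, lockedSynchronizers): false/false skips lock-ownership details
        for (ThreadInfo info : ManagementFactory.getThreadMXBean().dumpAllThreads(false, false)) {
            out.append('"').append(info.getThreadName()).append("\" ").append(info.getThreadState());
            if (info.getLockName() != null) {
                out.append(" on ").append(info.getLockName()); // the object it is blocked or waiting on
            }
            out.append('\n');
            for (StackTraceElement frame : info.getStackTrace()) {
                out.append("\tat ").append(frame).append('\n');
            }
            out.append('\n');
        }
        return out.toString();
    }

    public static void main(String[] args) {
        System.out.print(dump());
    }
}
```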

Christopher Schultz almost 12 years

Wild guess without any other information (need thread dumps!): DB connection pool exhaustion.

-

Lauro182 about 9 years

This is exactly what was happening to me: the web application grew too big, max connections was too low and the wait time was undefined, so threads kept piling up every now and then and the server would freeze. I increased max connections and set a specific time for max wait; now I'm just monitoring, but the server is running fine. Thanks for this mini tutorial.

-

redochka over 11 years

Yes, BoneCP rocks. commons-dbcp sucks. Tomcat 7 ships with its own connection pool (tomcat.apache.org/tomcat-7.0-doc/jdbc-pool.html), but to use it the driver has to be accessible from the same classloader as tomcat-jdbc.jar. I don't want to bother putting the driver jar in Tomcat's lib directory each time I install a new Tomcat.

-

DeejUK over 11 years

Redsonic: That is not true. If your `DataSource` is defined within a context, and not as a JNDI resource shared between contexts, the driver can be on the context's classpath (i.e. in the .war). This is how I've used it in production systems.

redochka over 11 years

Ah, OK. I didn't test it myself; I reported what was in the Tomcat 7 docs. OK then, in this case it is an option too :)
