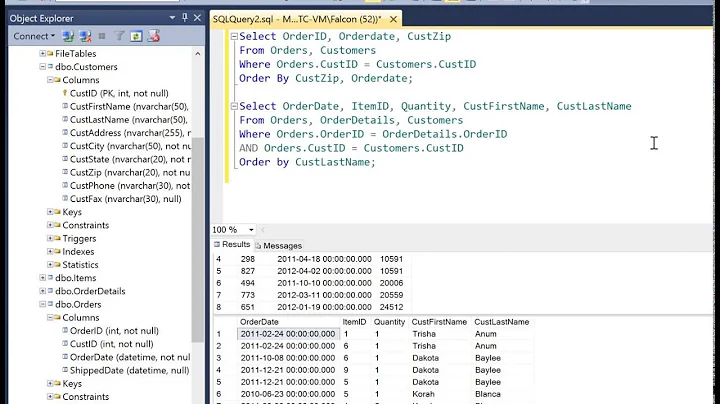

JOIN queries vs multiple queries

Solution 1

This is way too vague to give you an answer relevant to your specific case. It depends on a lot of things. Jeff Atwood (founder of this site) actually wrote about this. For the most part, though, if you have the right indexes and you write your JOINs properly, one round trip is usually going to be faster than several.

Solution 2

For inner joins, a single query makes sense, since you only get matching rows. For left joins, multiple queries is much better... look at the following benchmark I did:

Single query with 5 joins:

- query time: 8.074508 seconds

- result size: 2268000

5 queries in a row:

- combined query time: 0.00262 seconds

- result size: 165 (6 + 50 + 7 + 12 + 90)

Note that we get the same results in both cases (6 x 50 x 7 x 12 x 90 = 2268000).

Left joins across several one-to-many tables multiply the row counts together, so memory use balloons with redundant data.

The memory cost might not be as bad if you only join two tables, but with three or more it generally becomes worth using separate queries.
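The arithmetic above can be reproduced on a small scale. The following is only a sketch in Python with an in-memory SQLite database, not the original MySQL benchmark: the table names (`parent`, `child_a`, `child_b`, `child_c`) and the row counts 2, 3 and 4 are made up for illustration and stand in for the 6, 50, 7, 12 and 90 rows mentioned above.

```python
import sqlite3
from math import prod

# Hypothetical schema: one parent row with three independent child tables.
child_counts = {"child_a": 2, "child_b": 3, "child_c": 4}

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE parent (id INTEGER PRIMARY KEY)")
conn.execute("INSERT INTO parent (id) VALUES (1)")
for table, count in child_counts.items():
    conn.execute(
        f"CREATE TABLE {table} (id INTEGER PRIMARY KEY, parent_id INTEGER)"
    )
    conn.executemany(
        f"INSERT INTO {table} (parent_id) VALUES (?)", [(1,)] * count
    )

# One query: every child row is paired with every row of the other children.
joins = " ".join(
    f"LEFT JOIN {t} ON {t}.parent_id = parent.id" for t in child_counts
)
joined_rows = conn.execute(f"SELECT * FROM parent {joins}").fetchall()

# Separate queries: each child table is read exactly once.
separate_rows = sum(
    len(conn.execute(f"SELECT * FROM {t} WHERE parent_id = 1").fetchall())
    for t in child_counts
)

print(len(joined_rows), prod(child_counts.values()))  # join size vs product
print(separate_rows, sum(child_counts.values()))      # separate size vs sum
```

The joined result has as many rows as the product of the child counts, while the separate queries return the sum, which is the same pattern as 2268000 versus 165.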

As a side note, my MySQL server is right beside my application server, so connection time is negligible. If your connection time is in the seconds, then maybe there is a benefit to a single query.

Frank

Solution 3

This question is old, but is missing some benchmarks. I benchmarked JOIN against its 2 competitors:

- N+1 queries

- 2 queries, the second one using a WHERE IN(...) or equivalent

The result is clear: on MySQL, JOIN is much faster. N+1 queries can drop the performance of an application drastically:

That is, unless you select a lot of records that point to a very small number of distinct, foreign records. Here is a benchmark for the extreme case:

This is very unlikely to happen in a typical application, unless you're joining a *-to-many relationship, in which case the foreign key is on the other table, and you're duplicating the main table data many times.

Takeaway:

- For *-to-one relationships, always use JOIN

- For *-to-many relationships, a second query might be faster
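To make the three strategies concrete, here is a minimal sketch in Python using an in-memory SQLite database. It is not the benchmark from this answer and it measures nothing; the `author` and `book` tables and the function names are hypothetical, chosen only to show that a JOIN, N+1 queries, and two queries with WHERE IN return the same data for a *-to-one relationship.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.row_factory = sqlite3.Row
conn.executescript("""
    CREATE TABLE author (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE book (
        id INTEGER PRIMARY KEY,
        title TEXT,
        author_id INTEGER REFERENCES author(id)
    );
    INSERT INTO author VALUES (1, 'Ann'), (2, 'Bob');
    INSERT INTO book VALUES (1, 'A1', 1), (2, 'A2', 1), (3, 'B1', 2);
""")


def with_join():
    # One query: the database pairs each book with its author.
    rows = conn.execute(
        "SELECT book.title AS title, author.name AS name FROM book "
        "JOIN author ON author.id = book.author_id ORDER BY book.id"
    )
    return [(r["title"], r["name"]) for r in rows]


def n_plus_one():
    # One query for the books, then one extra query per book.
    books = conn.execute(
        "SELECT title, author_id FROM book ORDER BY id"
    ).fetchall()
    result = []
    for b in books:
        author = conn.execute(
            "SELECT name FROM author WHERE id = ?", (b["author_id"],)
        ).fetchone()
        result.append((b["title"], author["name"]))
    return result


def where_in():
    # Two queries: the books, then all referenced authors at once.
    books = conn.execute(
        "SELECT title, author_id FROM book ORDER BY id"
    ).fetchall()
    ids = sorted({b["author_id"] for b in books})
    placeholders = ",".join("?" for _ in ids)
    authors = {
        r["id"]: r["name"]
        for r in conn.execute(
            f"SELECT id, name FROM author WHERE id IN ({placeholders})", ids
        )
    }
    return [(b["title"], authors[b["author_id"]]) for b in books]


print(with_join() == n_plus_one() == where_in())
```

With three books the N+1 variant issues four queries, the WHERE IN variant two, and the JOIN one; the gap in query count grows with the number of rows in the first result.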

See my article on Medium for more information.

Solution 4

I actually came to this question looking for an answer myself. After reading the given answers, I can only agree that the best way to compare DB query performance is to get real-world numbers, because there are just too many variables to take into account. But I also think that comparing the numbers with each other does no good in almost all cases. What I mean is that the numbers should always be compared with an acceptable number, and definitely not with each other.

I can understand if one way of querying takes, say, 0.02 seconds and the other one takes 20 seconds; that's an enormous difference. But what if one way of querying takes 0.0000000002 seconds, and the other one takes 0.0000002 seconds? In both cases one way is a whopping 1000 times faster than the other one, but is it really still "whopping" in the second case?
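As a small illustration of comparing against an acceptable number instead of against each other, here is a sketch in Python. The helper `fast_enough` and the 0.1 second budget are assumptions made up for this example; only the four timings come from the paragraph above.

```python
def fast_enough(elapsed_seconds: float, budget_seconds: float) -> bool:
    """Return True when a measured query time fits within the acceptable budget."""
    return elapsed_seconds <= budget_seconds


# Hypothetical budget: the longest this query may take before it matters.
BUDGET_SECONDS = 0.1

# The two pairs of timings from the text; each pair differs by about 1000x.
pairs = [(0.02, 20.0), (0.0000000002, 0.0000002)]

for fast, slow in pairs:
    print(fast_enough(fast, BUDGET_SECONDS), fast_enough(slow, BUDGET_SECONDS))
```

In the first pair only one option fits the budget, so the difference matters. In the second pair both fit, so the 1000x ratio alone is no reason to pick the harder solution.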

Bottom line as I personally see it: if it performs well, go for the easy solution.

Solution 5

The real question is: Do these records have a one-to-one relationship or a one-to-many relationship?

TLDR Answer:

If one-to-one, use a JOIN statement.

If one-to-many, use one (or many) SELECT statements with server-side code optimization.

Why and How To Use SELECT for Optimization

SELECT'ing (with multiple queries instead of joins) on a large group of records based on a one-to-many relationship is more efficient, because a JOIN's result set, and the memory it needs, grows multiplicatively with each joined table. Grab all of the data, then use a server-side scripting language to sort it out:

SELECT * FROM Address WHERE Personid IN(1,2,3);

Results:

Address.id : 1 // First person and their address
Address.Personid : 1
Address.City : "Boston"

Address.id : 2 // First person's second address
Address.Personid : 1
Address.City : "New York"

Address.id : 3 // Second person's address
Address.Personid : 2
Address.City : "Barcelona"

Here, I am getting all of the records, in one select statement. This is better than JOIN, which would be getting a small group of these records, one at a time, as a sub-component of another query. Then I parse it with server-side code that looks something like...

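<?php
// Attach each fetched address to the person it belongs to.
// Assumes $persons is an array of objects keyed by person id.
foreach ($addresses as $address) {
    $persons[$address['Personid']]->Address[] = $address;
}
?>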
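For readers who want to run the whole flow, here is a rough equivalent of the query and the grouping loop, sketched in Python with an in-memory SQLite database instead of PHP and MySQL. The `Address` rows are the three from the results above; the variable names are hypothetical.

```python
import sqlite3
from collections import defaultdict

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE Address (id INTEGER PRIMARY KEY, Personid INTEGER, City TEXT);
    INSERT INTO Address VALUES
        (1, 1, 'Boston'), (2, 1, 'New York'), (3, 2, 'Barcelona');
""")

# One SELECT fetches the addresses of every person of interest.
person_ids = [1, 2, 3]
placeholders = ",".join("?" for _ in person_ids)
rows = conn.execute(
    f"SELECT id, Personid, City FROM Address "
    f"WHERE Personid IN ({placeholders}) ORDER BY id",
    person_ids,
).fetchall()

# Group the flat rows by person in application code.
addresses_by_person = defaultdict(list)
for address_id, person_id, city in rows:
    addresses_by_person[person_id].append(city)

print(dict(addresses_by_person))
```

Person 3 has no address, so that id simply never appears as a key; the application has to handle that case itself, whereas a LEFT JOIN would have returned a row with NULLs.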

When Not To Use JOIN for Optimization

JOIN'ing a large group of records based on a one-to-one relationship, where each record matches exactly one other record, is more efficient than running multiple SELECT statements one after the other, each of which simply gets the next record type.

But JOIN is inefficient when getting records with a one-to-many relationship.

Example: The database Blogs has 3 tables of interest: BlogPost, Tag, and Comment.

SELECT * FROM BlogPost
LEFT JOIN Tag ON Tag.BlogPostid = BlogPost.id
LEFT JOIN Comment ON Comment.BlogPostid = BlogPost.id;

If there is 1 blogpost, 2 tags, and 2 comments, you will get results like:

Row1: tag1, comment1
Row2: tag1, comment2
Row3: tag2, comment1
Row4: tag2, comment2

Notice how each record is duplicated. Okay, so, 2 comments and 2 tags is 4 rows. What if we have 4 comments and 4 tags? You don't get 8 rows -- you get 16 rows:

Row1: tag1, comment1
Row2: tag1, comment2
Row3: tag1, comment3
Row4: tag1, comment4
Row5: tag2, comment1
Row6: tag2, comment2
Row7: tag2, comment3
Row8: tag2, comment4
Row9: tag3, comment1
Row10: tag3, comment2
Row11: tag3, comment3
Row12: tag3, comment4
Row13: tag4, comment1
Row14: tag4, comment2
Row15: tag4, comment3
Row16: tag4, comment4

Add more tables, more records, etc., and the problem will quickly inflate to hundreds of rows that are all full of mostly redundant data.

What do these duplicates cost you? Memory (in the SQL server and the code that tries to remove the duplicates) and networking resources (between SQL server and your code server).
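The row counts in this example can be checked with a short script. This is a sketch in Python against an in-memory SQLite database rather than MySQL; the helper name `row_counts` is made up, and the tables hold only the id columns needed to run the query from the example.

```python
import sqlite3


def row_counts(n_tags: int, n_comments: int) -> tuple[int, int]:
    """Return (rows from the double LEFT JOIN, rows from two separate SELECTs)."""
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        CREATE TABLE BlogPost (id INTEGER PRIMARY KEY);
        CREATE TABLE Tag (id INTEGER PRIMARY KEY, BlogPostid INTEGER);
        CREATE TABLE Comment (id INTEGER PRIMARY KEY, BlogPostid INTEGER);
        INSERT INTO BlogPost VALUES (1);
    """)
    conn.executemany("INSERT INTO Tag (BlogPostid) VALUES (?)", [(1,)] * n_tags)
    conn.executemany(
        "INSERT INTO Comment (BlogPostid) VALUES (?)", [(1,)] * n_comments
    )

    # The query from the example: tags and comments are unrelated to each
    # other, so every tag is paired with every comment.
    joined = conn.execute("""
        SELECT * FROM BlogPost
        LEFT JOIN Tag ON Tag.BlogPostid = BlogPost.id
        LEFT JOIN Comment ON Comment.BlogPostid = BlogPost.id
    """).fetchall()

    # The alternative: one SELECT per child table.
    tags = conn.execute("SELECT * FROM Tag WHERE BlogPostid = 1").fetchall()
    comments = conn.execute(
        "SELECT * FROM Comment WHERE BlogPostid = 1"
    ).fetchall()
    conn.close()
    return len(joined), len(tags) + len(comments)


print(row_counts(2, 2))
print(row_counts(4, 4))
```

With 2 tags and 2 comments both approaches return 4 rows; with 4 and 4 the join returns 16 rows while the separate queries return 8.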

Source: https://dev.mysql.com/doc/refman/8.0/en/nested-join-optimization.html ; https://dev.mysql.com/doc/workbench/en/wb-relationship-tools.html


Andreas Bonini

Updated on March 29, 2020

Comments

-

Andreas Bonini about 4 years

Are JOIN queries faster than several queries? (You run your main query, and then you run many other SELECTs based on the results from your main query.)

I'm asking because JOINing them would complicate the design of my application A LOT.

If they are faster, can anyone approximate very roughly by how much? If it's 1.5x I don't care, but if it's 10x I guess I do.

-

user3167101 almost 15 years
I assume they would be faster. I know that one INSERT compared to, say, 10 individual INSERT queries is much faster.

-

Ciro Santilli OurBigBook.com almost 8 years

-

Radio Controlled over 2 years
I have a problem where the join is much slower than the sum of the time required for the individual queries, despite a primary key. I am guessing that SQLite is trying to save time by going through the rows and checking for the queried values instead of performing the query multiple times. But this does not work well in many cases if you have a fast index on that particular column.

-

cHao almost 13 years
If we toss aside the annoying little fact that nobody in their right mind does a cross join between 5 tables (for that very reason, along with that in most cases it just doesn't make sense), your "benchmark" might have some merit. But left or inner joins are the norm, usually by key (making retrieval much faster), and the duplication of data is usually much, much less than you're making it out to be.

-

dudewad almost 11 years
That, of course, depends on whether or not you're planning on scaling. Cuz when Facebook started out I'm sure they had those kinds of queries, but had scaling in mind and went for the more efficient albeit possibly more complex solution.

-

Valentin Flachsel almost 11 years
@dudewad Makes sense. It all depends on what you need, in the end.

-

dudewad almost 11 years
Haha yeah... because at Google 1 nanosecond lost is literally equal to something like 10 billion trillion dollars... but that's just a rumor.

-

Natalie Adams over 9 years
@cHao says who? I just looked up SMF and phpBB and saw JOINs between 3 tables - if you add plugins or modifications they could easily add to that. Any sort of large application has the potential for many JOINs. Arguably a poorly written/misused ORM could JOIN tables that it doesn't actually need (perhaps even every table).

-

cHao over 9 years
@NathanAdams: Left and inner joins aren't bad at all. (In fact, if you're not joining tables here and there, you're doing SQL wrong.) What I was talking about is cross joins, which are almost always undesirable even between two tables, let alone 5 -- and which would be about the only way to get the otherwise-totally-bogus "2268000" results mentioned above.

-

user151975 over 9 years
If you are joining 3 or more tables on different keys, databases (e.g. MySQL) can often only use one index per table, meaning maybe one of the joins will be fast (and use an index) whereas the others will be extremely slow. For multiple queries, you can optimize the indexes to use for each query.

-

mindplay.dk almost 9 years
I think this depends on your definition of "faster"... for example, 3 PK inner joins may turn around faster than 4 round-trips, because of network overhead, and because you need to stop and prepare and send each query after the previous query completes. If you were to benchmark a server under load, however, in most cases joins will take more CPU time than PK queries, and often cause more network overhead as well.

-

HoldOffHunger about 8 years
Look at the results, though. "result size: 2268000" versus "result size: 165". I think your slowdown with JOINs is because your records have a one-to-many relationship with each other, whereas if they had a one-to-one relationship, the JOIN would absolutely be much faster and it certainly wouldn't have a result size bigger than the SELECT.

-

JustAMartin over 6 years
Maybe the difference would turn out otherwise if you select a page of rows (like 20 or 50), as for a typical web view grid, and compare a single LEFT JOIN to two queries: selecting 2 or 3 identifiers with some WHERE criteria and then running the other SELECT query with IN().

-

JustAMartin over 6 years
Yes, we should account not only for the queries themselves but also for data processing inside the application. If fetching data with outer joins, there is some redundancy (sometimes it can get really huge) which has to be sorted out by the app (usually in some ORM library), so in total the single SELECT with JOIN might consume more CPU and time than two simple SELECTs.

-

vitoriodachef almost 6 years
@cHao Obviously you had not met Magento at the time of your first comment.

-

cHao almost 6 years
@vitoriodachef: Still haven't, actually. But I stand by the comment. A cross join implies that every possible pairing of rows makes sense -- which is rare enough between two tables that I've never wanted to do it. There is a way of writing queries so that they look like a cross join when they're actually inner joins, but the DB knows better as long as you have unique indexes or primary keys. (And if Magento has survived this long, then its DB schema is such that you will never have reason to do a cross join between five tables.)

-

cHao almost 6 years
You miss the point. It's not about one-to-(one|many). It's about whether the sets of rows make sense being paired together. You're asking for two only tangentially related sets of data. If you were asking for comments and, say, their authors' contact info, that makes more sense as a join, even though people can presumably write more than one comment.

-

HoldOffHunger almost 6 years
@cHao: Thanks for your comment. My answer above is a summary of the MySQL Documentation found here: dev.mysql.com/doc/workbench/en/wb-relationship-tools.html

-

cHao almost 6 years
That's not MySQL documentation. It's documentation for a particular GUI tool for working with MySQL databases. And it doesn't offer any guidance on when joins are (or are not) appropriate.

-

HoldOffHunger almost 6 years
@cHao: Sorry, I meant the MySQL (R) documentation for MySQL WorkBench (TM), not MySQL Server (TM).

-

cHao almost 6 years
Pedantry aside, the relevance isn't clear. Both mention one-to-one and one-to-many relationships, but that's where the commonality ends. Either way, the issue is about the relationship between the sets of data. Join two unrelated sets, you're going to get every combination of the two. Break related data up into multiple selects, and now you've done multiple queries for dubious benefit, and started doing MySQL's job for it.

-

HoldOffHunger almost 6 years
@cHao: I didn't even really add anything much in my last comment (I imagine we agree on this), but you still seem to have a lot more to say. Maybe you should post your own answer? Comments have a character limit for good reason.

-

dallin over 5 years
@dudewad Actually, when Facebook started out, I guarantee they went with the simpler solution. Zuckerberg said he programmed the first version in only 2 weeks. Startups need to move fast to compete, and the ones that survive usually don't worry about scaling until they actually need it. Then they refactor stuff after they have millions of investment dollars and can hire rockstar programmers that specialize in performance. To your point, I would expect Facebook often goes for the more complex solution for minute performance gains now, but then most of us aren't programming Facebook.

-

Aarish Ramesh over 5 years
Are the columns id and other_id indexed?

-

Emiswelt over 5 years
This answer is not specific enough and probably generalizes some corner case: Were indices used? What was the cardinality of the data?

-

Adrian Baker over 4 years
"It's about whether the sets of rows make sense being paired together": no, the question is about performance.

-

Adrian Baker over 4 years
If you retrieve a lot of user columns in the second scenario (and the same users comment more than once), this still leaves open the question as to whether they are best retrieved in a separate query.

-

cHao over 4 years
@AdrianBaker: Like I said, lots of smart people putting lots of hard work in. If I were going to optimize my SQL server, my very first idea would be to use compression, which would eliminate a huge amount of redundancy without changing the code much at all. Next-level optimizations would include reorganizing the result into tables and sending those along with tuples of row ids, which the client library could then easily assemble on its side as needed.

-

cHao over 4 years
Both of those optimizations could work wonders with a join to reduce or even eliminate the redundancy, but there's not much that can help with the inherently serial queries you'd have to do to fetch related records.

-

JamesHoux over 3 years
I want to point out that the problem is even mathematically MORE significant than this answer indicates. @HoldOffHunger points out you're getting 16 rows instead of 8. That's one way of looking at it. But really, if you look at the data redundancy, you're getting 32 data points instead of 8. It's already 4x the data for just 2 joins! If you add just one more join to make 3, it will get absolutely ridiculous!

-

JamesHoux over 3 years
If you join a 3rd table that returned 4 additional records for each of the pairs already demonstrated by @HoldOffHunger, you would technically only have 12 meaningful data points, BUT you would have 64 rows and 192 data points.

-

JamesHoux over 3 years
One more thing worth pointing out: More memory = slower performance. Memory is enormously slow compared to processor cycles on cached data. Anything that makes an application churn more memory will also make it process more slowly.

-

Admin almost 2 years
Why would you have to do more than 1 round-trip? I think it should also be possible to run multiple queries and fetch the resulting sets at once.