Large Block Size in HDFS! How is the unused space accounted for?

Solution 1

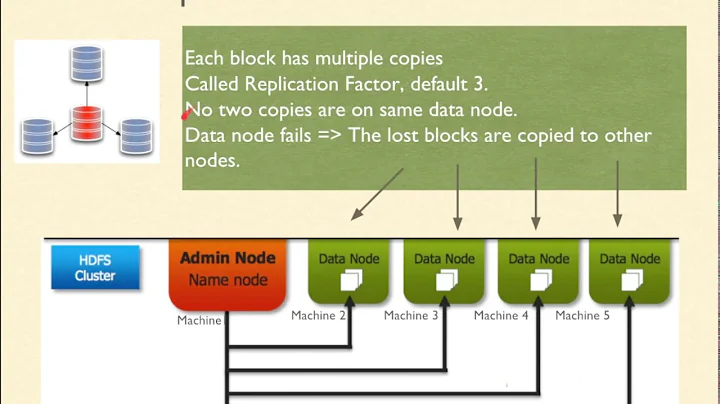

The block division in HDFS is just logically built over the physical blocks of underlying file system (e.g. ext3/fat). The file system is not physically divided into blocks( say of 64MB or 128MB or whatever may be the block size). It's just an abstraction to store the metadata in the NameNode. Since the NameNode has to load the entire metadata in memory therefore there is a limit to number of metadata entries thus explaining the need for a large block size.

Therefore, three 8MB files stored on HDFS logically occupies 3 blocks (3 metadata entries in NameNode) but physically occupies 8*3=24MB space in the underlying file system.

The large block size is to account for proper usage of storage space while considering the limit on the memory of NameNode.

Solution 2

According to the Hadoop - The Definitive Guide

Unlike a filesystem for a single disk, a file in HDFS that is smaller than a single block does not occupy a full block’s worth of underlying storage. When unqualified, the term “block” in this book refers to a block in HDFS.

Each block in HDFS is stored as a file in the Data Node on the underlying OS file system (ext3, ext4 etc) and the corresponding details are stored in the Name Node. Let's assume the file size is 200MB and the block size is 64MB. In this scenario, there will be 4 blocks for the file which will correspond to 4 files in Data Node of 64MB, 64MB, 64MB and 8MB size (assuming with a replication of 1).

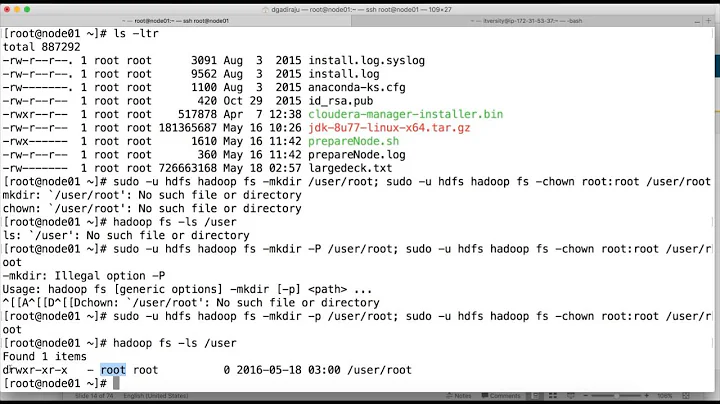

An ls -ltr on the Data Node will show the block details

-rw-rw-r-- 1 training training 11 Oct 21 15:27 blk_-7636754311343966967_1002.meta

-rw-rw-r-- 1 training training 4 Oct 21 15:27 blk_-7636754311343966967

-rw-rw-r-- 1 training training 99 Oct 21 15:29 blk_-2464541116551769838_1003.meta

-rw-rw-r-- 1 training training 11403 Oct 21 15:29 blk_-2464541116551769838

-rw-rw-r-- 1 training training 99 Oct 21 15:29 blk_-2951058074740783562_1004.meta

-rw-rw-r-- 1 training training 11544 Oct 21 15:29 blk_-2951058074740783562

Solution 3

In normal file system if we create a blank file, then also it holds the 4k size, as it is stored on the block. In HDFS it won't happen, for 1GB file only 1GB memory is used, not 4 GB. To be more clear.

IN OS : file size 1KB, Block size : 4KB, Mem Used : 4KB, Wastage : 3 KB. IN HDFS : File size 1GB, Block Size: 4GB, Mem Used : 1GB, Wastage : 0GB, Remaining 3 GB are free to be used by other blocks.

*Don't take numbers seriously, they are cooked up numbers to make point clear.

If you have 2 different file of 1GB then there will be 2 blocks of 1 GB each. In file system if you storing 2 files of 1 KB each, then you will be having 2 different files of 4KB + 4KB = 8KB with 6KB wastage.

SO this make HDFS much better than file system. But irony is HDFS uses local file system and in the end it ends up with the same issue.

Related videos on Youtube

Abhishek Jain

Updated on July 11, 2022Comments

-

Abhishek Jain almost 2 years

We all know that the block size in HDFS is pretty large (64M or 128M) as compared to the block size in traditional file systems. This is done in order to reduce the percentage of seek time compared to the transfer time (Improvements in transfer rate have been on a much larger scale than improvements on the disk seek time therefore, the goal while designing a file system is always to reduce the number of seeks in comparison to the amount of data to be transferred). But this comes with an additional disadvantage of internal fragmentation (which is why traditional file system block sizes are not so high and are only of the order of a few KBs - generally 4K or 8K).

I was going through the book - Hadoop, the Definitive Guide and found this written somewhere that a file smaller than the block size of HDFS does not occupy the full block and does not account for the full block's space but couldn't understand how? Can somebody please throw some light on this.

-

Abhishek Jain over 11 yearsThanks for your answer but let me clarify my question a bit more. Suppose there are 3 8MB files. What will happen in such a case? Would they occupy 3 different blocks on HDFS or the same block which has 64M of capacity be able to accomodate these individual files?

-

Praveen Sripati over 11 yearsIf there are three 8MB files in HDFS, then there will three 8MB files in the underlying file system (ext3, ext4 etc). The blocks for different files are not merged by default. Hadoop Archiving can be used to merge the files into the same same block if required. It's similar to tar.

Praveen Sripati over 11 yearsIf there are three 8MB files in HDFS, then there will three 8MB files in the underlying file system (ext3, ext4 etc). The blocks for different files are not merged by default. Hadoop Archiving can be used to merge the files into the same same block if required. It's similar to tar. -

Abhishek Jain over 11 yearsThen in that case, how is it different from traditional filesystems. The remaining 56 MB of space in each of the blocks is getting wasted in this case i.e. internal fragmentation is happening. Or is there a way which justifies where such a scenario will be rare while using HDFS?

-

Praveen Sripati over 11 yearsThere is no wastage of 56MB since the underlying file size is 8MB and not 64MB.

Praveen Sripati over 11 yearsThere is no wastage of 56MB since the underlying file size is 8MB and not 64MB. -

Abhishek Jain over 11 yearsI still do not get it completely. How are the blocks laid out in this case i.e. I mean how is internal fragmentation of memory taken care of in this case? If the file can be of varied sizes, then how are the blocks laid out i.e. I mean how is data going to be written after this file has been written. As far as I understand, data is written block by block, then how is the remaining 56 MB quota of the previous block utilized. I hope you get my question (Just to clarify I am talking on similar terms of why the block size is just a few KBs in traditional file system).

-

Abhishek Jain over 11 yearsThanks for clarifying. This is exactly what I was looking for.

-

brain storm almost 10 years@Satbir: I need a clarification here: three 8MB files should logically occupy only one block correct (since HDFS block size is 64MB)?

-

Satbir over 9 years@brainstorm : Three 8 MB files can occupy 3 blocks, and each of the block will be of size 8 MB.

Satbir over 9 years@brainstorm : Three 8 MB files can occupy 3 blocks, and each of the block will be of size 8 MB. -

ernesto over 9 yearsSo HDFS block size is irrelevant to reducing disk seek time...etc?

-

mrsrinivas over 8 years@Praveen: Will that 56MB of unused block can be used to another small file with an entry in namenode ?

-

Praveen Sripati over 8 years@MRSrinivas - a block will use only the required space. A file of 100MB will use 64MB block and 36MB block. This is with the block size of 64MB.

Praveen Sripati over 8 years@MRSrinivas - a block will use only the required space. A file of 100MB will use 64MB block and 36MB block. This is with the block size of 64MB. -

Suvarna Pattayil about 8 years@Satbir Wanted to clarify. I assume by 3 metadata entries you mean the 3 replicas right ? ( original + 2 replica which is the default config ).