Latency of CPU instructions on x86 and x64 processors

Solution 1

In general, each of these operations takes a single clock cycle to execute, provided its arguments are already in registers at the relevant stages of the pipeline.

What do you mean by latency? How many cycles an operation spends in the ALU?

You might find this table useful: http://www.agner.org/optimize/instruction_tables.pdf

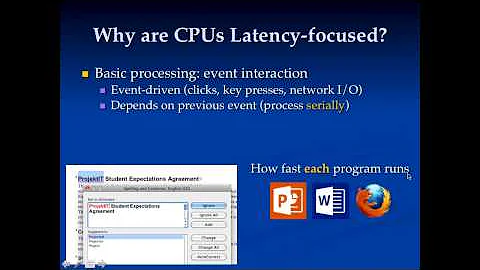

Since modern processors are superscalar and can execute out of order, the total number of instructions per cycle can often exceed 1. The operands of the instruction matter most, but the operation itself also matters, since a divide takes much longer than an XOR (at most 1 cycle of latency).

Many x86 instructions can take multiple cycles to complete some stages if they are complex (REP-prefixed instructions or, worse, MWAIT, for example).

Solution 2

Calculating the efficiency of assembly code by hand is not the best way to go in these days of out-of-order, superscalar pipelines. It'll vary by processor type. It'll vary with the instructions both before and after (sometimes you can add extra code and have it run faster!). Some operations (division, notably) can have a range of execution times even on older, more predictable chips. Actually timing lots of iterations is the only way to go.
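As a rough illustration of "timing lots of iterations", here is a minimal C sketch that times a loop-carried chain of integer multiplies. The function names `mul_chain` and `ns_per_iter` and the multiplier constant are hypothetical choices made for this example, not anything from the answer. It uses the standard `clock()` function, which has coarse resolution, so the iteration count should be large.

```c
#include <assert.h>
#include <stdint.h>
#include <time.h>

/* Hypothetical helper: a chain of dependent multiplies. Each iteration
   needs the previous result, so the loop is bound by multiply latency
   rather than throughput. The odd constant is arbitrary. */
static uint64_t mul_chain(uint64_t seed, uint64_t iters) {
    uint64_t x = seed | 1;                 /* force an odd starting value */
    for (uint64_t i = 0; i < iters; i++) {
        x *= 0x9E3779B97F4A7C15ull;        /* depends on the previous x */
    }
    return x;
}

/* Hypothetical helper: average CPU time per iteration, in nanoseconds.
   The result is stored through a volatile pointer so the compiler
   cannot discard the loop as dead code. */
static double ns_per_iter(uint64_t iters, volatile uint64_t *sink) {
    assert(iters > 0);
    clock_t t0 = clock();
    *sink = mul_chain((uint64_t)t0, iters); /* seed is not a compile-time constant */
    clock_t t1 = clock();
    return (double)(t1 - t0) * 1e9 / CLOCKS_PER_SEC / (double)iters;
}
```

Calling `ns_per_iter(100000000, &sink)` from `main` and multiplying the result by the core clock in GHz gives an approximate cycle count per multiply. Frequency scaling and loop overhead make this an estimate only, and it is worth checking the generated assembly to confirm the compiler kept the loop as written.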

Solution 3

You can find information on Intel CPUs in the Intel software developer manuals. For instance, the latency is 1 cycle for an integer addition and 3 cycles for an integer multiplication.

I don't know about multiplication, but I expect addition to always take one cycle.
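To show how such latency figures might be used, here is a small C sketch that adds up the latencies along a chain of dependent instructions. It assumes the 1-cycle add and 3-cycle multiply quoted in this answer, which vary by CPU. The names `LAT_ADD`, `LAT_IMUL` and `chain_latency` are hypothetical, and the model ignores everything except the dependency chain itself.

```c
#include <assert.h>
#include <stddef.h>

/* Assumed latencies in cycles, taken from the figures quoted in the
   answer. Real values depend on the specific CPU. */
enum { LAT_ADD = 1, LAT_IMUL = 3 };

/* Hypothetical helper: latency of a chain in which every instruction
   waits for the result of the one before it. */
static unsigned chain_latency(const unsigned *lat, size_t n) {
    assert(lat != NULL || n == 0);
    unsigned total = 0;
    for (size_t i = 0; i < n; i++) {
        total += lat[i];
    }
    return total;
}
```

For `a * b + c`, the add must wait for the multiply, so passing `{ LAT_IMUL, LAT_ADD }` gives 3 + 1 = 4 cycles under these assumptions. Independent instructions can overlap, so this is an estimate of latency along one chain, not of total run time.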

Comments

ST3 over 1 year

I'm looking for a table or something similar that could help me calculate the efficiency of assembly code.

As far as I know, bit shifting takes 1 CPU clock, but I'm really looking for how long addition takes (subtraction should take the same), how long multiplication takes, and how to estimate division time if I know the values being divided.

I really need info about integer values, but float execution times are welcome too.

Ciro Santilli Путлер Капут 六四事 about 9 years

Possible same on SO: stackoverflow.com/questions/692718/…

ST3 over 10 years

I know that, but I need it not for a real project but for a kind of fun programming project.

Brian Knoblauch over 10 years

Whether you need it for real or for fun doesn't change the answer for this processor line. Have you considered switching to a more deterministic processor, such as a Propeller chip, instead?

Paul A. Clayton over 10 years

Even with a scalar, in-order implementation, branch mispredictions and cache misses can cause variation in run time.

Brian Knoblauch over 10 years

One cycle, except when it's "free" (in parallel when pipelines line up correctly) or takes longer due to a cache miss. :-)

Peter Cordes almost 7 years

Integer multiply is at least 3c latency on all recent x86 CPUs (and higher on some older CPUs). On many CPUs it's fully pipelined, so throughput is 1 per clock, but you can only achieve that if you have three independent multiplies in flight. (FP multiply on Haswell is 5c latency, 0.5c throughput, so you need 10 in flight to saturate throughput.) Division (`div` and `idiv`) is even worse: it's microcoded, much higher latency than `add` or `shr`, and not even fully pipelined on any CPU. All of this is straight from Agner Fog's instruction tables, so it's a good thing you linked that.
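The in-flight counts in this comment follow from dividing latency by reciprocal throughput (cycles per instruction). A minimal C sketch of that arithmetic, using only the figures quoted in the comment, is below. The function name `ops_in_flight` is hypothetical, and the formula assumes a fully pipelined execution unit.

```c
#include <assert.h>

/* Hypothetical helper: how many independent operations must be in
   flight to keep a fully pipelined unit busy. Both arguments are in
   cycles; the second is the reciprocal throughput. */
static double ops_in_flight(double latency_cycles, double recip_throughput_cycles) {
    assert(recip_throughput_cycles > 0.0);
    return latency_cycles / recip_throughput_cycles;
}
```

With the comment's numbers, `ops_in_flight(3.0, 1.0)` gives 3 for integer multiply and `ops_in_flight(5.0, 0.5)` gives 10 for Haswell FP multiply. Division is not fully pipelined, so this simple ratio does not apply to it.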

Peter Cordes almost 7 years

See also "Why is this C++ code faster than my hand-written assembly for testing the Collatz conjecture?" for more about optimizing asm.

Peter Cordes almost 7 years

For purely CPU-bound stuff (no cache misses, no branch mispredicts), CPU behaviour is understood in enough detail that static analysis can often predict almost exactly how many cycles per iteration a loop will take on a specific CPU (e.g. Intel Haswell). For example, see this SO answer, where looking at the compiler-generated asm let me explain why the branchy version ran almost exactly 1.5x faster than the CMOV version on the OP's Sandybridge CPU, but much closer on my Skylake.

Peter Cordes almost 7 years

If you're writing asm by hand for performance reasons, then it is actually useful to look for latency and throughput bottlenecks on Intel and AMD CPUs. It's hard, though, and sometimes what's optimal for AMD isn't what's optimal for Intel.

stefanct over 6 years

Currently (2018) this information is available in Appendix C, "Instruction Latency and Throughput", of document 248966, "Intel® 64 and IA-32 Architectures Optimization Reference Manual", also available on the page linked in the answer.