Limiting bandwidth on Ubuntu Linux

Using tc (because it's the most current, and I'm the most familiar with it), you should be able to slow traffic down without problems.

I have a server acting as the firewall (called 'firewall', very creative) and a second server behind it (called 'mil102'). Without any tc commands, scp'ing a file from mil102 to firewall moves at full speed:

root@firewall:/data# scp mil102:/root/test.tgz test.tgz

test.tgz 100% 712MB 71.2MB/s 00:10

I then ran the following script on mil102 (shaping traffic on the sending side is easier than shaping it on the receiving side):

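#!/bin/sh

DEV=eth0

# Remove any existing root qdisc; ignore the error if none is set.
tc qdisc del dev $DEV root 2>/dev/null

# Root HTB qdisc; unclassified traffic goes to class 1:11.
tc qdisc add dev $DEV handle 1: root htb default 11

# In tc, "Mbit" is megabits per second; "Mbps" would be megabytes per second.
tc class add dev $DEV parent 1: classid 1:1 htb rate 4Mbit

tc class add dev $DEV parent 1:1 classid 1:11 htb rate 4Mbit

# SFQ shares the class fairly between flows.
tc qdisc add dev $DEV parent 1:11 handle 11: sfq perturb 10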

Now the same command slows down to about 4Mbit/s:

root@firewall:/data# scp mil102:/root/test.tgz test.tgz

test.tgz 0% 6064KB 467.0KB/s 25:48 ETA

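I stopped the transfer because it would have taken far too long. scp reports bytes per second while tc rates are in bits per second, so the comparison needs a factor of 8, not 9: 467KB/s * 8 is roughly 3.8Mbit/s of file data (scp counts 1KB as 1024 bytes). That is a little under the 4Mbit limit, which tc reads as 4,000,000 bits per second rather than 4096Kb. There is no parity bit involved; the gap is protocol overhead, because the packet headers and SSH framing around the file data also count against the shaped rate.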

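I tried changing to 10Mbit and my scp showed I was moving data at 1.1MB per second (1.1 * 8 = 8.8, or roughly 9Mbit/s once scp's 1024-based megabytes are converted), again just under the configured rate.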
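As a rough check of the numbers above, here is a small sketch of the unit conversion. It assumes scp counts 1KB as 1024 bytes and tc counts 1kbit as 1000 bits; the function names are made up for this example.

```shell
#!/bin/sh
# Convert a rate reported by scp (KB/s, assuming 1 KB = 1024 bytes)
# into kbit/s as tc counts them (assuming 1 kbit = 1000 bits).
scp_kb_to_kbit() {
    # $1: measured rate in KB/s (integer)
    echo $(( $1 * 1024 * 8 / 1000 ))
}

# Share of the configured tc rate that shows up as file data.
# $1: measured KB/s, $2: configured rate in kbit/s
payload_percent() {
    echo $(( $(scp_kb_to_kbit "$1") * 100 / $2 ))
}

scp_kb_to_kbit 467         # prints 3825
payload_percent 467 4000   # prints 95
```

So the 467KB/s transfer carries about 95% of the 4Mbit limit as file data, with the rest going to headers.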

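The last line, with the 'sfq perturb 10' directive, was added to even out traffic flow on a loaded connection. SFQ (Stochastic Fairness Queueing) hashes each conversation into one of a set of queues and then takes packets from those queues in round-robin order, so no single flow can monopolise the link.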

You can test with and without it: an ssh session to a loaded machine proceeds in bursts without SFQ and is much smoother with it (very important for VoIP). The 'perturb 10' option tells SFQ to change the hash every 10 seconds, so two flows that happen to land in the same queue do not stay stuck together.
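One way to run that comparison is to remove and re-add the leaf qdisc under class 1:11. The sketch below assumes the HTB setup from the script above is already in place. The function names and the RUN switch are hypothetical; RUN=echo only prints the commands, so nothing changes until you set RUN to empty and run it as root.

```shell
#!/bin/sh
DEV=eth0
RUN=echo   # dry run: print the tc commands instead of executing them

# Without SFQ: drop the leaf qdisc so the class falls back to its default FIFO.
sfq_off() {
    $RUN tc qdisc del dev "$DEV" parent 1:11
}

# With SFQ: attach it again, as in the original script.
sfq_on() {
    $RUN tc qdisc add dev "$DEV" parent 1:11 handle 11: sfq perturb 10
}

# Show per-qdisc counters (sent bytes, drops, backlog).
show_stats() {
    $RUN tc -s qdisc show dev "$DEV"
}

sfq_off
show_stats
sfq_on
show_stats
```

Start a large transfer, open an interactive ssh session alongside it, and switch between sfq_off and sfq_on to see the difference in responsiveness.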


Kasper Vesth

Updated on September 18, 2022

Comments

-

Kasper Vesth over 1 year

I need to simulate a P2P environment (for my master's thesis in computer science). To do that I am using Docker with Ubuntu to create a bunch of virtual machines that are going to be connected in a BitTorrent network. I then need to be able to set the upload and download rate of each peer, and doing it in the client is not an option (the client uses sleeping to simulate lower bandwidth, which results in spikes in the rate).

Therefore I am trying to do it for each container. To be honest I don't really care how this is accomplished as long as it works, but I have tried different things with no luck. These are the things I have tried so far:

- Trickle: Trickle seems to be doing the trick, but for some reason, when I start more than 5 Docker containers, Trickle makes a lot of them exit without telling me why. I have tried different settings, but there are not many knobs to turn when it comes to configuration, so I don't think Trickle will be an option in this scenario.

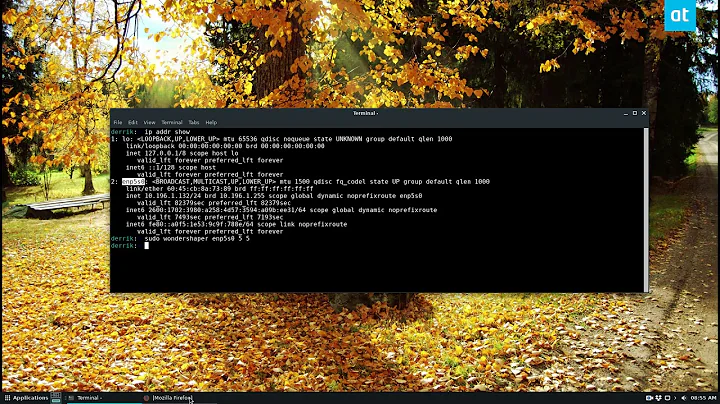

- Wondershaper: Using Wondershaper seems to work, or at least it limits the bandwidth. The only problem is that there is no understandable correlation between the value set in the options and the actual bandwidth. When I set a download limit of 2048 (which should be kbit/s), the actual download rate ranges between 550KB/s and 900KB/s, which seems really weird.

- tc: Using tc, like many people have suggested for similar questions, does indeed limit the bandwidth, but no matter what value I set it always gives me the same bandwidth (around 15-20KB/s).

I have tried following tons of guides and examples, but every single one has either not worked or is described above. I am somewhat at a loss here, so if anyone knows of any reason the above examples should work or have another solution it would be awesome.

What I am looking for is a way to limit a single Linux instance, and then I should be able to make that work for multiple Docker containers.

--------------- EDIT----------------

I have tried a couple of different tc commands, but one of them is like this:

DEV=eth0

tc qdisc del dev $DEV root

tc qdisc add dev $DEV root handle 1: cbq avpkt 1000 bandwidth 100mbit

tc class add dev $DEV parent 1: classid 1:1 cbq rate 256kbit allot 1500 prio 5 bounded isolated

tc filter add dev $DEV parent 1: protocol ip prio 16 u32 match ip src 0.0.0.0/0 flowid 1:1

tc qdisc add dev $DEV parent 1:1 sfq perturb 10

No matter what rate I set, it always gives me a download of around 12 KB/s (the default download with no limit set is around 4MB/s).

------------EDIT 2 (what I ended up doing)------------

It turns out that you can't reliably set the bandwidth for Docker containers from inside the containers. However, whenever you create a new container, a virtual interface called vethsomething is created on the host machine. If you use, for instance, Wondershaper on those virtual interfaces, the limit behaves correctly :)
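EDIT 2 used Wondershaper on the host-side veth interfaces. The same idea can be sketched with plain tc, assuming an HTB setup like the one in the answer; this swaps in tc for Wondershaper and is not what was actually run. The limit_iface function, the 2Mbit rate, and the RUN=echo dry-run switch are made up for illustration. Note that traffic leaving the host through a veth interface is traffic arriving in the container, so this caps the container's download side.

```shell
#!/bin/sh
RUN=echo   # dry run: print the tc commands instead of executing them

# Cap traffic leaving the host through one interface.
# $1: interface name, $2: rate in tc units, e.g. 2Mbit
limit_iface() {
    # Clear any previous root qdisc; the error is ignored if there is none.
    $RUN tc qdisc del dev "$1" root 2>/dev/null
    $RUN tc qdisc add dev "$1" root handle 1: htb default 11
    $RUN tc class add dev "$1" parent 1: classid 1:11 htb rate "$2"
}

# Apply the same limit to every veth interface currently on the host.
for path in /sys/class/net/veth*; do
    [ -e "$path" ] || continue   # no veth interfaces present
    limit_iface "$(basename "$path")" 2Mbit
done
```

To apply the limits for real, set RUN to empty and run the script as root on the Docker host after the containers have started.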

-

Ed King almost 9 years

What tc commands are you using, and are you sure you're measuring traffic in the same unit you're specifying in the tc commands?

-

Kasper Vesth almost 9 years

I have edited the question with an example of the tc commands. I am using both curl and wget to check the download speed, and the rate never changes no matter what I set it to.

-

Ed King almost 9 years

I'm posting what I've tested on a stock Ubuntu machine with one interface. I'd put it in comments but don't have formatting here. Note that it limits outbound traffic only on the interface. If you try this, does your result match mine?

-

Kasper Vesth almost 9 years

It seems to be working, but I don't understand the limits I am getting. When I am running with 10Kbps and 10Kbit the transfer goes at around 110KB/s, and if I set it to 50 instead it is 1.3MB/s, 10 times more. Do you have any idea why that is? I can't seem to find any consistency.

-

Ed King almost 9 years

Couple of questions: how big is the file you're transferring for the test (ideally it should take some time for the speed to settle down), and are you running the tests in a virtual machine (is it different on dedicated hardware)?

-

Kasper Vesth almost 9 years

The file should be large enough, and I have let the transfers run a bit to let them settle. No doubt it works; I was just curious as to why there was such a difference. The tests are running on two virtual machines on the same network. I will consider your answer correct as far as my question goes. I ended up doing something a bit different that worked well with Docker, though, and I will update my question with what I did :) Thank you for the help.

-

Houman over 6 years

@EdKing Great answer. I finally got my head around it. The Ubuntu man page says the advised value for perturb is 60, but I see a lot of sites set it to 10 like you. manpages.ubuntu.com/manpages/xenial/man8/tc-sfq.8.html Any advice?

-

Ed King over 6 years

The hash function for a queue determines which flow to pick data from (like round-robin, the hash function rotates through the queues). There is a possibility that this function will be unfair (give higher priority to certain queues). What perturb does is alter the hash function (kick it out of a rut) at a specified time interval. The idea is to keep things as fair as possible. I chose 10 for 10 seconds; you could set it to 60 and it would give the hash function a kick every minute. See linux-ip.net/articles/Traffic-Control-HOWTO/…
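To make the effect of perturb concrete, here is a toy model of bucket selection. It is not the kernel's hash function: it uses cksum purely for illustration, and the flow strings, seed values, and bucket count are invented. The point is only that changing the seed reshuffles which flows share a queue.

```shell
#!/bin/sh
# Toy model only: hash "seed|flow" with cksum and reduce it to a bucket.
BUCKETS=8

bucket_for() {
    # $1: seed (stands in for the value perturb changes), $2: flow description
    crc=$(printf '%s|%s' "$1" "$2" | cksum | cut -d' ' -f1)
    echo $(( crc % BUCKETS ))
}

# Print the bucket of each flow under two different seeds.
for seed in 1 2; do
    for flow in "10.0.0.1:22" "10.0.0.2:5060" "10.0.0.3:80"; do
        echo "seed=$seed flow=$flow bucket=$(bucket_for "$seed" "$flow")"
    done
done
```

If two flows collide under one seed, they will most likely be separated under the next one, which is why a shorter perturb interval limits how long an unlucky collision can last.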