Load Balancing Best Practices for Persistence

Solution 1

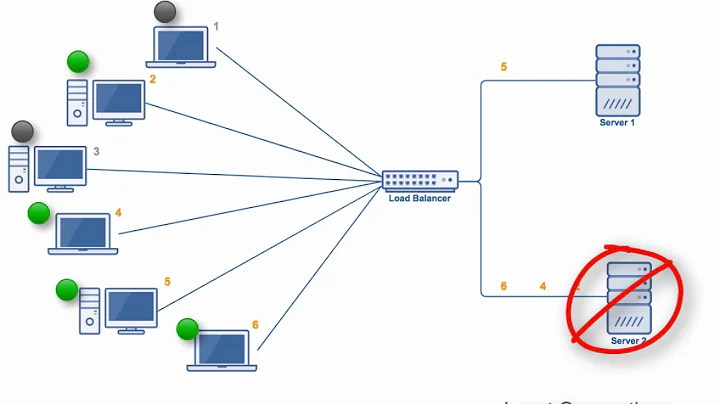

The canonical solution to this is to not rely on end user IP address, but instead use a Layer 7 (HTTP/HTTPS) load balancer with "Sticky Sessions" via a cookie.

Sticky sessions means the load balancer will always direct a given client to the same backend server. Via cookie means the load balancer (which is itself a fully capable HTTP device) inserts a cookie (which the load balancer creates and manages automagically) to remember which backend server a given HTTP connection should use.

The main downside to sticky sessions is that beckend server load can become somewhat un-even. The load balancer can only distribute load fairly when new connections are made, but given that existing connections may be long-lived in your scenario, then in some time periods load will not be distributed entirely fairly.

Just about every Layer 7 load balancer should be able to do this. On Unix/Linux, some common examples are nginx, HAProxy, Apsis Pound, Apache 2.2 with mod_proxy, and many more. On Windows 2008+ there is Microsoft Application Request Routing. As appliances, Coyote Point, loadbalancer.org, Kemp and Barracuda are common in the low-end space; and F5, Citrix NetScaler and others in high-end.

Willy Tarreau, the author of HAProxy, has a nice overview of load balancing techniques here.

About the DNS Round Robin:

Our intent was for the Round Robin DNS TTL value for our api.company.com (which we've set at 1 hour) to be honored by the downstream caching nameservers, OS caching layers, and client application layers.

It will not be. And DNS Round Robin isn't a good fit for load balancing. And if nothing else convinces you, keep in mind that modern clients may prefer one host over all others due to longest prefix match pinning, so if the mobile client changes IP address, it may choose to switch to another RR host.

Basically, it's okay to use DNS round robin as a coarse-grained load distribution, by pointing 2 or more RR records to highly available IP addresses, handled by real load balancers in active/passive or active/active HA. And if that's what you're doing, then you might as well serve those DNS RR records with long Time To Live values, since the associated IP addresses are highly available already.

Solution 2

To answer your question about alternatives: You can get solid layer-7 load balancing through HAProxy.

As far as fixing the LVS affinity issues, I'm a bit dry on solid ideas. It could be as simple as a timeout or overflow. Some mobile clients will switch IP addresses while they're connected to the network; perhaps this may be the source of your woes? I would suggest, at the very least, that you spread the affinity granularity out to at least a class C.

Related videos on Youtube

Comments

-

dmourati almost 2 years

We run a web application serving up web APIs for an increasing number of clients. To start, the clients were generally home, office, or other wireless networks submitting chunked http uploads to our API. We've now branched out into handling more mobile clients. The files ranging from a few k to several gigs, broken down into smaller chunks and reassembled on our API.

Our current load balancing is performed at two layers, first we use round robin DNS to advertise multiple A records for our api.company.com address. At each IP, we host a Linux LVS: http://www.linuxvirtualserver.org/, load-balancer that looks at the source IP address of a request to determine which API server to hand the connection to. This LVS boxes are configured with heartbeatd to take-over external VIPs and internal gateway IPs from one another.

Lately, we've seen two new error conditions.

The first error is where clients are oscillating or migrating from one LVS to another, mid-upload. This in turn causes our load balancers to lose track of the persistent connection and send the traffic to a new API server, thereby breaking the chunked upload across two or more servers. Our intent was for the Round Robin DNS TTL value for our api.company.com (which we've set at 1 hour) to be honored by the downstream caching nameservers, OS caching layers, and client application layers. This error occurs for approximately 15% of our uploads.

The second error we've seen much less commonly. A client will initiate traffic to an LVS box and be routed to realserver A behind it. Thereafter, the client will come in via a new source IP address, which the LVS box does not recognize, thereby routing ongoing traffic to realserver B also behind that LVS.

Given our architecture as described in part above, I'd like to know what are people's experiences with a better approach that will allow us to handle each of the error cases above more gracefully?

Edit 5/3/2010:

This looks like what we need. Weighted GSLB hashing on the source IP address.

-

Frank about 13 yearsYour question isn't really specific for mobile right now. Perhaps you would consider revising and simplifying it?

-

-

dmourati about 13 yearsHAProxy was definitely in my sights. I read a pretty interesting article on L4 v L7 load balancing. blog.loadbalancer.org/why-layer-7-sucks My take: I'd like to leave this in the hands of the application. Any extra "smarts" I add to the LB layer will just have to be patched/readdressed as we change our application. Solving the problem in the application itself means we can optimize and fine-tune things at the LB while remaining confident that even if there is a LB misstep we'll still get the data.

-

Frank about 13 years@dmourati: Sorry, but that blog post is full of inaccurate assumptions. Don't blindly follow it. It's absolutely true that a "shared nothing" architecture for the web application servers is 'best'. In that case, you should use Round Robin or Random load balancing. But, as long as you have multi-GB HTTP uploads you have long-lived HTTP conversations, and a HTTP load balancer is just better positioned to understand this long HTTP exchange and act correctly. Using an HTTP balancer does not preclude making your backend app code 'smarter', you're still free to do so any time.

-

dmourati about 13 yearsThanks. We're in Active/Active mode with LVS. The IPs are highly available and we have lots of control over the clients as we write them ourselves and they rely on our API server which is not entirely stateless as described above. I tested the OS level caching issue on my Linux box at work (it has no caching turned on) as well as my Mac OSX laptop at home (it caches at the OS layer, which "pins" the IP to one result or the other).

-

dmourati about 13 yearsI ended up writing my own custom DNS server to fix the round robin issue. It looks at the source IP address and uses a hash to reply with a consistent a record. Seems to be working and reduced our "pop switch" problem by a factor of 10.