Load balancing Wordpress on Amazon Web Services: Managing changes

Stateless web apps are hard.

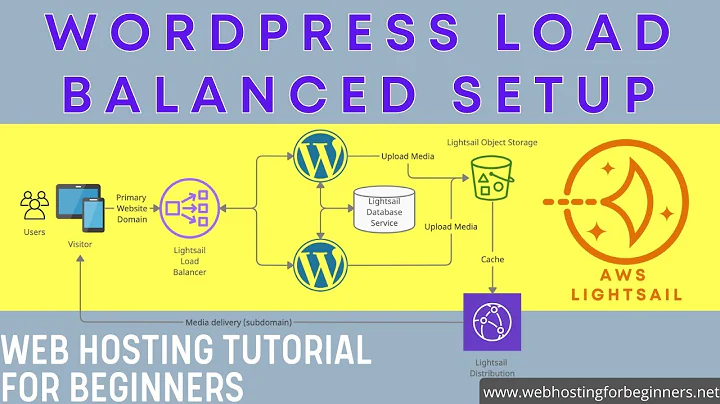

As you know, WordPress relies pretty heavily on things being written to disk. Here is the proposed infrastructure:

- Elastic Load balancer

- Autoscaling group comprised of small ec2 instances

- Foreman/Dev micro ec2 instance

- Micro/Small RDS for CMS data

- Elasticache cluster for session storage

- S3 bucket for media uploads

Now the hard part.

Let's forget about updates to the codebase for a second and look at how to make the whole thing stateless. You should do the following to make it horizontally scalable:

- Start with your micro instance. It will act as the deployment mechanism as well as a template.

- Set PHP to use memcached for session management and point it at your ElastiCache cluster: http://www.dotdeb.org/2008/08/25/storing-your-php-sessions-using-memcached/

- Install git on the micro instance

- Install a WordPress plugin that puts all file uploads in S3 (optional, but it saves you from redeploying each time you upload a media file to the CMS). Try the W3 Total Cache plugin.
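
The session step above comes down to two php.ini settings. Here is a minimal sketch in Python that renders them for a given cache endpoint. It assumes the PECL `memcached` extension is installed (the older `memcache` extension uses different values), and the function name is just an illustration.

```python
def php_session_ini(host: str, port: int = 11211) -> str:
    """Render php.ini lines that store PHP sessions in memcached.

    Assumes the PECL "memcached" extension. The older "memcache"
    extension uses save_handler = memcache and a tcp:// prefix instead.
    """
    return (
        "session.save_handler = memcached\n"
        f'session.save_path = "{host}:{port}"\n'
    )
```

Call it with your cluster's endpoint hostname and append the output to php.ini on the micro instance before you build the AMI.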

This takes care of the setup.

How to deploy new changes

You will use your micro instance for all future changes to the WordPress install. This includes things like updating WordPress, updating your theme files and pretty much anything else that is stored on disk.

You will need to create two scripts:

The first one will be used to deploy the changes to the autoscaling group. It should do the following:

- Commit the changes made to its git repo

- Ping all the production instances and tell them to download the new codebase from the micro instance. You will need to use one of the AWS SDKs to get the list of instances in the auto-scaling group and trigger their receive scripts. I personally do this via an HTTP endpoint that I created.
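
A rough sketch of what that deploy script could look like in Python. This is not the exact script I use. It assumes boto3 (third-party) is installed with credentials configured, and the group name `wordpress-asg`, the repo path, port 8080 and the `/deploy` path are all made-up placeholders.

```python
import subprocess
import urllib.request


def commit_changes(repo_dir, message):
    """Stage and commit everything in the WordPress checkout."""
    subprocess.run(["git", "add", "-A"], cwd=repo_dir, check=True)
    # "git commit" exits non-zero when there is nothing to commit,
    # so its exit status is deliberately not checked.
    subprocess.run(["git", "commit", "-m", message], cwd=repo_dir)


def in_service_instance_ids(autoscaling, group_name):
    """Return IDs of InService instances in one Auto Scaling group."""
    resp = autoscaling.describe_auto_scaling_groups(
        AutoScalingGroupNames=[group_name]
    )
    return [
        inst["InstanceId"]
        for group in resp["AutoScalingGroups"]
        for inst in group["Instances"]
        if inst["LifecycleState"] == "InService"
    ]


def private_ips(ec2, instance_ids):
    """Look up the private IP address of each instance."""
    if not instance_ids:
        return []
    resp = ec2.describe_instances(InstanceIds=instance_ids)
    return [
        inst["PrivateIpAddress"]
        for reservation in resp["Reservations"]
        for inst in reservation["Instances"]
        if "PrivateIpAddress" in inst
    ]


def notify(ips, port=8080, path="/deploy"):
    """Ask each instance to pull; port and path are hypothetical."""
    for ip in ips:
        req = urllib.request.Request(
            f"http://{ip}:{port}{path}", data=b"", method="POST"
        )
        with urllib.request.urlopen(req, timeout=10) as resp:
            print(ip, resp.status)


def main():
    import boto3  # third-party: pip install boto3

    commit_changes("/var/www/wordpress", "Deploy from micro instance")
    ids = in_service_instance_ids(boto3.client("autoscaling"), "wordpress-asg")
    notify(private_ips(boto3.client("ec2"), ids))
```

Run `main()` on the micro instance after making changes. The AWS clients are passed in as arguments so the lookup functions can be tried out with stand-in objects before pointing them at a real account.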

The second script will live on the auto-scaling group's instances and be triggered by the first script as well as run when the instance is initialized for the first time. It should do the following:

- Connect to the git repository on the micro instance

- Fetch the latest changes and check them out in a detached HEAD state
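
The receive script could be sketched like this in Python. It assumes git is installed on the production instances, that the repository's default remote is called `origin`, and that you deploy from a branch named `master`. The clone step covers the first boot, when there is no checkout yet.

```python
import os
import subprocess


def receive(repo_url, target_dir, branch="master", run=subprocess.run):
    """Clone on first boot, then fetch and check out the latest commit detached."""
    if not os.path.isdir(os.path.join(target_dir, ".git")):
        # First run on a fresh instance: no checkout exists yet.
        run(["git", "clone", repo_url, target_dir], check=True)
    run(["git", "fetch", "origin"], cwd=target_dir, check=True)
    # Checking out the remote-tracking ref leaves HEAD detached.
    run(
        ["git", "checkout", "--detach", f"origin/{branch}"],
        cwd=target_dir,
        check=True,
    )
```

`repo_url` would be whatever git URL reaches the micro instance from inside your VPC, and `target_dir` is your web root.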

Each time you make any system file changes, run the deploy script above. This will then propagate the changes to all the production instances.
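
For the HTTP trigger on the production instances, something along these lines would work. It is only a sketch using Python's built-in `http.server`. The `/deploy` path, port 8080 and the script location are hypothetical, and there is no authentication, so this assumes the port is only reachable from the micro instance (for example via a security group rule).

```python
import subprocess
from http.server import BaseHTTPRequestHandler, HTTPServer


def make_handler(on_deploy):
    """Build a handler class that calls on_deploy() for POST /deploy."""

    class DeployHandler(BaseHTTPRequestHandler):
        def do_POST(self):
            if self.path != "/deploy":
                self.send_error(404)
                return
            on_deploy()
            self.send_response(200)
            self.end_headers()
            self.wfile.write(b"ok\n")

        def log_message(self, *args):
            pass  # keep the sketch quiet

    return DeployHandler


def run_receive_script():
    # Hypothetical path to the receive script on the instance.
    subprocess.run(["/usr/local/bin/receive.sh"], check=True)


def serve(port=8080):
    """Listen forever and run the receive script on each deploy request."""
    HTTPServer(("0.0.0.0", port), make_handler(run_receive_script)).serve_forever()
```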

Now create a base AMI for the production instances. It should be very similar to the micro instance, except that WordPress should not actually be installed. You will use user data passed to the EC2 instances on launch to run the second script above, which downloads the latest version of the codebase from the micro instance.
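
The user data itself can be tiny. A sketch, assuming the receive script is baked into the AMI at a path like `/usr/local/bin/receive.sh` (a made-up location) and that the AMI runs cloud-init, which executes user data starting with `#!` on first boot:

```python
def user_data(receive_script="/usr/local/bin/receive.sh"):
    """Return a user-data shell script that runs the receive script at first boot."""
    return (
        "#!/bin/bash\n"
        "set -e\n"  # stop at the first failing command
        f"{receive_script}\n"
    )
```

Pass the returned string as the user data of the launch configuration used by the auto-scaling group.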

One last thing: if you are running any form of e-commerce, you are going to need an SSL certificate installed on the load balancer. Have a look at the guide here: http://www.nczonline.net/blog/2012/08/15/setting-up-ssl-on-an-amazon-elastic-load-balancer/

Lloyd Rees

Updated on September 18, 2022

Comments

Lloyd Rees (over 1 year ago): I am relatively new to Amazon Web Services and I am trying to get my head around how Elastic Load Balancing will work in the context of my WordPress setup. In addition, I would like some advice on my proposed infrastructure.

My initial proposed infrastructure is as follows:

- 1x EC2 m1.small - Ubuntu 12.04.3 LTS 64bit (with 1 EBS volume)

- 1x EC2 t1.micro - Ubuntu 12.04.3 LTS 64bit (with or without EBS volume?)

- 1x Micro RDS Instance - MySQL 5.6.13

EC2: My current EC2 instance (t1.micro) is running a LAMP stack and is configured to run WordPress.

I would like to load balance this with an m1.small instance, running a clone of the t1.micro instance.

The current unknowns for me are as follows:

- How will a load balanced setup manage changes made on the wordpress CMS across instances? Will I have to keep updating the AMIs every time a change is made in wordpress?

- My website is an ecommerce website. Is there any impact of this in a load balanced setup? I.e., is there a possibility for orders to exist on one instance and not another?

It might be a pretty stupid question, but I am presuming some issues won't be relevant because the infrastructure is referencing one database.

Lastly, is there a better way I should be setting up the infrastructure for load balancing? For example, should I consider using Amazon S3 to store all of my files and CloudFront as a CDN to ensure efficient operation and resolve any EBS file replication issues?

Any help greatly appreciated.

Lloyd

Admin (about 10 years ago): You almost never want to run micros in production. Their CPUs get capped severely under load, so your RDS will grind to a halt with any reasonable amount of traffic.

Jeremy (over 9 years ago): @keeganiden thanks a lot, your answer gives me some direction. As another possible alternative, other CDN services such as MaxCDN can be used to deliver content. It's easier because most web apps like WordPress/Presta have an add-on so that all static files (i.e. JS, CSS, images) are delivered to their CDN automatically.

nu everest (over 7 years ago): Since you're on Amazon, you could use DynamoDB as a session store in place of memcached: aws.amazon.com/blogs/aws/…

Immutable Brick (over 7 years ago): Very good answer. The most difficult part would be to add to git and let the user update the files through the user interface. Correct me if I am wrong, but as soon as you set up permissions for those files to be changed, it kind of stops being stateless, since now you have to synchronise those changes to multiple servers.