Locally run all of the spiders in Scrapy

Solution 1

Here is an example that doesn't run inside a custom command; instead it runs the Twisted reactor manually and creates a new Crawler for each spider:

# Pre-1.0 Scrapy API (see Solution 3 for Scrapy 1.0+)
from twisted.internet import reactor
from scrapy.crawler import Crawler
# The scrapy.conf.settings singleton is deprecated; use get_project_settings()
from scrapy.utils.project import get_project_settings
from scrapy import log


def setup_crawler(spider_name):
    # One Crawler per spider: an old-style Crawler can only open one spider
    crawler = Crawler(settings)
    crawler.configure()
    spider = crawler.spiders.create(spider_name)
    crawler.crawl(spider)
    crawler.start()


log.start()
settings = get_project_settings()

# A throwaway Crawler, used only to list the project's spiders
crawler = Crawler(settings)
crawler.configure()

for spider_name in crawler.spiders.list():
    setup_crawler(spider_name)

reactor.run()

You will have to design some signal system to stop the reactor once all spiders have finished; otherwise reactor.run() keeps the script running after the last spider closes.
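One way to build that signal system is to count the spiders you start and stop the reactor when the last one reports that it has closed. The sketch below is a hypothetical helper (ReactorStopper is not part of Scrapy) that only does the bookkeeping, so it can be tried without Scrapy installed. The wiring in its docstring is an assumption based on the pre-1.0 script above: register each spider in setup_crawler and connect on_spider_closed to that crawler's spider_closed signal, passing reactor.stop as the stop callback.

```python
class ReactorStopper:
    """Hypothetical helper: call `stop` once every registered spider has closed.

    Assumed wiring with the pre-1.0 script (not tested here):
        from scrapy import signals
        stopper = ReactorStopper(reactor.stop)
        # inside setup_crawler():
        stopper.register(spider_name)
        crawler.signals.connect(stopper.on_spider_closed,
                                signal=signals.spider_closed)
    """

    def __init__(self, stop):
        self._stop = stop        # e.g. reactor.stop
        self._running = set()    # names of spiders that have not closed yet
        self.stopped = False

    def register(self, spider_name):
        """Record a spider before the reactor starts."""
        self._running.add(spider_name)

    def on_spider_closed(self, spider, reason=None):
        """Handler for the spider_closed signal (receives spider and reason)."""
        self._running.discard(spider.name)
        if not self._running and not self.stopped:
            self.stopped = True  # guard against calling stop twice
            self._stop()
```

On Scrapy 1.0+ this bookkeeping is built in: CrawlerProcess.start() stops the reactor by itself once every scheduled crawl has finished, which is what Solutions 3 and 4 rely on.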

EDIT: And here is how you can run multiple spiders in a custom command:

# Pre-1.0 Scrapy API (see Solution 3 for Scrapy 1.0+)
from scrapy.command import ScrapyCommand
from scrapy.utils.project import get_project_settings
from scrapy.crawler import Crawler


class Command(ScrapyCommand):

    requires_project = True

    def syntax(self):
        return '[options]'

    def short_desc(self):
        return 'Runs all of the spiders'

    def run(self, args, opts):
        settings = get_project_settings()

        for spider_name in self.crawler.spiders.list():
            # A fresh Crawler per spider avoids the "No free spider slots" error
            crawler = Crawler(settings)
            crawler.configure()
            spider = crawler.spiders.create(spider_name)
            crawler.crawl(spider)
            crawler.start()

        self.crawler.start()

Solution 2

Why not just use something like this?

scrapy list | xargs -n 1 scrapy crawl

It runs scrapy crawl once per spider name, each spider in its own process, one after another.
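The same approach can be written in Python, as a sketch: it shells out to scrapy list and then runs scrapy crawl <name> once per spider, each in its own process, so the one-spider-per-crawler limit from the question never comes into play. The function names are hypothetical, and it assumes it is called from the project directory (where scrapy.cfg lives) with the scrapy executable on PATH. Passing parallel=True roughly mimics xargs -P 0.

```python
import subprocess
from concurrent.futures import ThreadPoolExecutor


def parse_spider_list(output):
    """Turn the stdout of `scrapy list` (one spider name per line) into a list."""
    return [line.strip() for line in output.splitlines() if line.strip()]


def crawl_command(spider_name, scrapy_cmd="scrapy"):
    """argv for running one spider, equivalent to `scrapy crawl <name>`."""
    return [scrapy_cmd, "crawl", spider_name]


def run_all_spiders(parallel=False, scrapy_cmd="scrapy"):
    """Run every spider in its own process, like `scrapy list | xargs -n 1 scrapy crawl`.

    Returns a dict mapping spider name -> process exit code.
    """
    listing = subprocess.run([scrapy_cmd, "list"],
                             capture_output=True, text=True, check=True)
    names = parse_spider_list(listing.stdout)

    def run_one(name):
        return name, subprocess.run(crawl_command(name, scrapy_cmd)).returncode

    if parallel:
        # Rough equivalent of `xargs -P 0`: one concurrent process per spider
        with ThreadPoolExecutor(max_workers=max(len(names), 1)) as pool:
            return dict(pool.map(run_one, names))
    # Default: one spider after another, like plain `xargs -n 1`
    return dict(run_one(name) for name in names)
```

A non-zero exit code in the returned dict marks a crawl that failed, which is easier to check than scanning the combined xargs output.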

Solution 3

The answer by @Steven Almeroth fails in Scrapy 1.0; you should edit the script like this:

# Scrapy 1.0+ API
from scrapy.commands import ScrapyCommand
from scrapy.utils.project import get_project_settings
from scrapy.crawler import CrawlerProcess


class Command(ScrapyCommand):

    requires_project = True
    # Names of spiders that this command should skip
    excludes = ['spider1']

    def syntax(self):
        return '[options]'

    def short_desc(self):
        return 'Runs all of the spiders'

    def run(self, args, opts):
        settings = get_project_settings()
        crawler_process = CrawlerProcess(settings)

        for spider_name in crawler_process.spider_loader.list():
            if spider_name in self.excludes:
                continue
            spider_cls = crawler_process.spider_loader.load(spider_name)
            crawler_process.crawl(spider_cls)

        # Runs all queued spiders concurrently and blocks until they finish
        crawler_process.start()
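For either custom command to be picked up, Scrapy has to know where it lives. A sketch, assuming the layout from the question's traceback (store_crawler/commands/crawlall.py, with an __init__.py in the commands directory): point the COMMANDS_MODULE setting at that package in settings.py. The module name becomes the command name, so the command above would run as scrapy crawlall.

```python
# settings.py of the Scrapy project (package name taken from the question's traceback)
# Scrapy looks for ScrapyCommand subclasses in the modules of this package and
# names each command after its module, e.g. commands/crawlall.py -> `scrapy crawlall`.
COMMANDS_MODULE = "store_crawler.commands"
```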

Solution 4

This code works on my Scrapy version, 1.3.3 (save it in the same directory as scrapy.cfg):

from scrapy.utils.project import get_project_settings
from scrapy.crawler import CrawlerProcess

setting = get_project_settings()
process = CrawlerProcess(setting)

for spider_name in process.spiders.list():
    print("Running spider %s" % spider_name)
    # query="dvh" is a custom argument passed to each spider; change or drop it
    process.crawl(spider_name, query="dvh")

process.start()

For Scrapy 1.5.x (so you don't get the deprecation warning), use spider_loader instead of spiders:

from scrapy.utils.project import get_project_settings
from scrapy.crawler import CrawlerProcess

setting = get_project_settings()
process = CrawlerProcess(setting)

for spider_name in process.spider_loader.list():
    print("Running spider %s" % spider_name)
    # query="dvh" is a custom argument passed to each spider; change or drop it
    process.crawl(spider_name, query="dvh")

process.start()
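Solutions 3 and 4 differ only in small details: one skips the spiders listed in excludes, the other passes the custom argument query="dvh" to every spider. Below is a sketch combining both, with optional per-spider overrides as an added assumption (schedule_all_spiders and its parameters are hypothetical names, not Scrapy API). It relies only on the two calls the answers already use, spider_loader.list() and crawl(name, **kwargs); Scrapy hands such keyword arguments on to the spider's constructor.

```python
def schedule_all_spiders(process, excludes=(), common_kwargs=None, per_spider_kwargs=None):
    """Queue every project spider on `process`, skipping names in `excludes`.

    `process` is expected to behave like scrapy.crawler.CrawlerProcess:
    it needs `spider_loader.list()` and `crawl(name, **kwargs)`.
    Returns the list of spider names that were scheduled.
    """
    common_kwargs = common_kwargs or {}
    per_spider_kwargs = per_spider_kwargs or {}
    scheduled = []
    for spider_name in process.spider_loader.list():
        if spider_name in excludes:
            continue
        # Per-spider values override the shared ones (e.g. a different query)
        kwargs = {**common_kwargs, **per_spider_kwargs.get(spider_name, {})}
        print("Running spider %s" % spider_name)
        process.crawl(spider_name, **kwargs)
        scheduled.append(spider_name)
    return scheduled
```

With a real project you would create process = CrawlerProcess(get_project_settings()), call schedule_all_spiders(process, excludes=["spider1"], common_kwargs={"query": "dvh"}), and then process.start().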

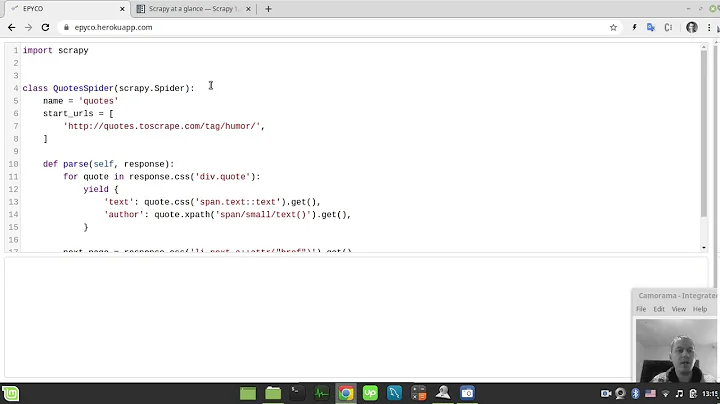


Blender


Updated on September 16, 2022

Comments

-

Blender over 1 year

Is there a way to run all of the spiders in a Scrapy project without using the Scrapy daemon? There used to be a way to run multiple spiders with scrapy crawl, but that syntax was removed and Scrapy's code changed quite a bit.

I tried creating my own command:

from scrapy.command import ScrapyCommand
from scrapy.utils.misc import load_object
from scrapy.conf import settings


class Command(ScrapyCommand):
    requires_project = True

    def syntax(self):
        return '[options]'

    def short_desc(self):
        return 'Runs all of the spiders'

    def run(self, args, opts):
        spman_cls = load_object(settings['SPIDER_MANAGER_CLASS'])
        spiders = spman_cls.from_settings(settings)

        for spider_name in spiders.list():
            spider = self.crawler.spiders.create(spider_name)
            self.crawler.crawl(spider)

        self.crawler.start()

But once a spider is registered with self.crawler.crawl(), I get assertion errors for all of the other spiders:

Traceback (most recent call last):
  File "/usr/lib/python2.7/site-packages/scrapy/cmdline.py", line 138, in _run_command
    cmd.run(args, opts)
  File "/home/blender/Projects/scrapers/store_crawler/store_crawler/commands/crawlall.py", line 22, in run
    self.crawler.crawl(spider)
  File "/usr/lib/python2.7/site-packages/scrapy/crawler.py", line 47, in crawl
    return self.engine.open_spider(spider, requests)
  File "/usr/lib/python2.7/site-packages/twisted/internet/defer.py", line 1214, in unwindGenerator
    return _inlineCallbacks(None, gen, Deferred())
--- <exception caught here> ---
  File "/usr/lib/python2.7/site-packages/twisted/internet/defer.py", line 1071, in _inlineCallbacks
    result = g.send(result)
  File "/usr/lib/python2.7/site-packages/scrapy/core/engine.py", line 215, in open_spider
    spider.name
exceptions.AssertionError: No free spider slots when opening 'spidername'

Is there any way to do this? I'd rather not start subclassing core Scrapy components just to run all of my spiders like this.

-

Blender about 11 years

Thank you, this is exactly what I was trying to do.

-

user1787687 over 10 years

How do I start the program?

-

Steven Almeroth over 10 years

Put the code in a text editor and save it as mycoolcrawler.py. On Linux you can probably run python mycoolcrawler.py from the command line in the directory you saved it in. On Windows you may be able to just double-click it in the file manager.

-


yangmillstheory over 9 years

Is there any reason to use a Crawler per spider as here, versus using a single crawler for many spiders?

-


rgtk almost 9 years

Use the -P 0 option for xargs to run all spiders in parallel.

-


float13 about 5 years

I also used the answer given by Yuda Prawira above, which still works in Scrapy 1.5.2, but I got this warning:

ScrapyDeprecationWarning: CrawlerRunner.spiders attribute is renamed to CrawlerRunner.spider_loader.

All you have to do is change the attribute name in the for loop:

for spider in process.spider_loader.list(): ...

Otherwise it still works!

-


xaander1 over 2 years

How do I dynamically output JSON named after each spider?