logical drives on HP Smart Array P800 not recognized after rebooting

No, I only remember that there were two discs with failure warnings. That is why we decided to reboot the enclosure.

That was the wrong action to take. RAID5 and two failed disks is a dangerous combination. RAID5 can only sustain a single disk failure.
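Why RAID5 tolerates only one failure can be seen from how its parity works. The following is a minimal sketch, assuming a hypothetical 4-disk set with made-up block contents; the `xor_blocks` helper is illustrative and is not how the Smart Array firmware is actually implemented.

```python
from functools import reduce


def xor_blocks(blocks):
    """XOR equal-length byte blocks together, byte by byte."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


# One stripe on a hypothetical 4-disk RAID5 set: three data blocks plus parity.
data = [b"AAAA", b"BBBB", b"CCCC"]
parity = xor_blocks(data)

# One disk lost (the second): XOR of the survivors and the parity restores it.
recovered = xor_blocks([data[0], data[2], parity])
assert recovered == data[1]

# Two disks lost (second and third): the survivors only yield the XOR of the
# two missing blocks, which is not enough to recover either one.
combined = xor_blocks([data[0], parity])
assert combined == xor_blocks([data[1], data[2]])
assert combined != data[1] and combined != data[2]
```

With one block missing there is one unknown and one parity equation, so the block can be rebuilt. With two missing there are two unknowns and still only one equation.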

Your external enclosure is likely an HP StorageWorks MSA60, rather than the D2600 unit you linked. The main point is the same, though. You should have corrected the disk failures rather than reboot the enclosure/system. Having a logical drive in failed status means that the array has failed and the data is possibly gone.

My recommendation to you is to power the server down and also physically remove power to the MSA60 enclosure (remove the cables, don't just press the power button). Leave the systems off for 5-10 minutes.

Power the enclosure back on. Give it 60-90 seconds to spin up the disks. Follow by powering on the server.

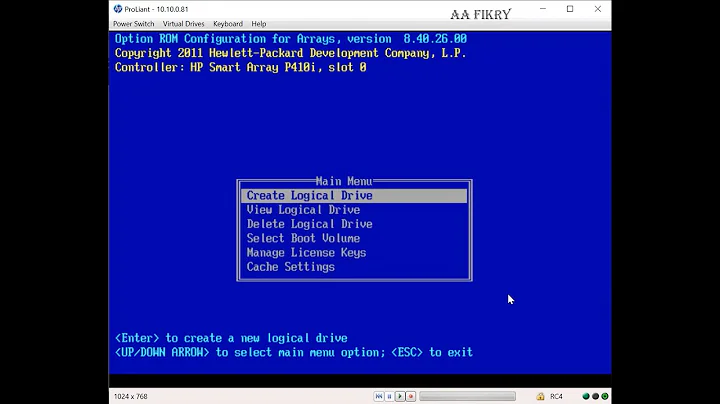

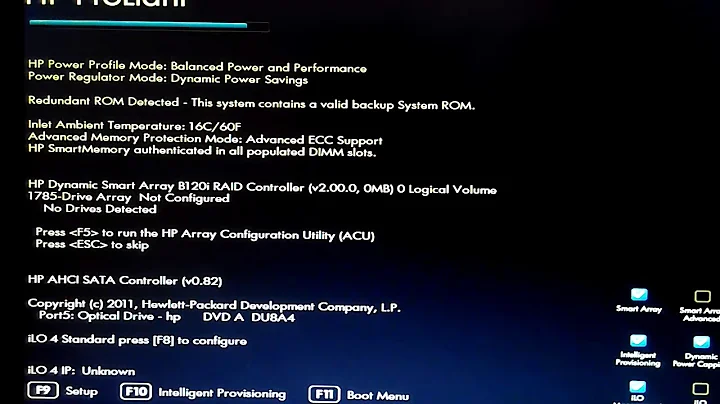

Pay close attention to the POST messages, specifically the initialization of the Smart Array P800 controller. You MAY be prompted to enable a previously failed logical drive.

Logical drive(s) disabled due to possible data loss.

Select "F1" to continue with logical drive(s) disabled

Select "F2" to accept data loss and to re-enable logical drive(s)

RESUME = "F1" OR "F2" KEY

If prompted with this, you will want to press F2 to re-enable the logical drive.

See what happens once the system has completed booting. If this is successful, you'll want to make sure the disks are healthy, then possibly update firmware on the server, controllers, disks and MSA units, since there have been bugs that trigger false drive failures and other undesirable behavior on this equipment.
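To check health after the system boots, the controller status can be scanned for anything that is not OK. The following is a rough sketch, not an HP tool: the `find_problems` helper is hypothetical, and the sample text reuses the `hpacucli ctrl all show status` values reported in this thread, with the line layout assumed.

```python
import re


def find_problems(status_text):
    """Return (controller, field, value) for every status that is not 'OK'."""
    problems = []
    controller = None
    for raw in status_text.splitlines():
        line = raw.strip()
        if not line:
            continue
        if line.startswith("Smart Array"):
            controller = line  # remember which controller the fields belong to
            continue
        match = re.match(r"(.+ Status):\s*(.+)", line)
        if match and match.group(2) != "OK":
            problems.append((controller, match.group(1), match.group(2)))
    return problems


# Values taken from the thread; the exact line layout is an assumption.
sample = """\
Smart Array P400 in Slot 1
   Controller Status: OK
   Cache Status: OK

Smart Array P800 in Slot 8
   Controller Status: OK
   Cache Status: Not Configured
   Battery/Capacitor Status: Failed (Replace Batteries/Capacitors)
"""

for controller, field, value in find_problems(sample):
    print(f"{controller}: {field} = {value}")
```

On the server itself, the text could come from `subprocess.run(["hpacucli", "ctrl", "all", "show", "status"], capture_output=True, text=True).stdout` instead of the sample string.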


Bn_am

Updated on September 18, 2022

-

Bn_am over 1 year

We had a Smart Array P800 configuration working fine (RAID5). Physically, we have a disk enclosure (like this) connected with a Linux Server. Here is what happened:

- This server was accidentally rebooted.

- Immediately after the reboot, the hpacucli command-line utility on the server reported some strange disk failures, and the logical drive was in a "failed" status.

- We rebooted the disk enclosure and then the server again. During the reboot we got this message:

Drive positions appear to have changed. Run Array Diagnostics Utility (ADU) if previous positions are unknown.

hpacucli now sees all the disks as OK, but it lists them as "unassigned".

How can we re-create the logical drive without any loss of data? Is there some utility or command we can run to sort this out?

-

ewwhite about 10 years ago: Do you have the details of what you saw in hpacucli?

-

Bn_am about 10 years ago: Yes, this is the result of hpacucli ctrl all show status:

Smart Array P400 in Slot 1
Controller Status: OK
Cache Status: OK

Smart Array P800 in Slot 8
Controller Status: OK
Cache Status: Not Configured
Battery/Capacitor Status: Failed (Replace Batteries/Capacitors)

-

Bn_am about 10 years ago: If you mean point 2 of my description: No, I only remember that there were two discs with failure warnings. That is why we decided to reboot the enclosure.

-

Bn_am about 10 years ago: If I try to mount from the location where the logical drive was before, with mount /dev/cciss/c0d0p2 /mnt/tmp/, I get this: mount: you must specify the filesystem type

-

Bn_am about 10 years ago: Hi, thanks for your answer. The POST message is the following: "An invalid drive movement was reported during POST. Modifications to the array configuration will result in loss of old configuration information and contents of the original logical drives." Do you know if it is possible to re-create the logical drive?

-

ewwhite about 10 years ago: @Bn_am Did you physically move any of the drives? You also have a failed RAID battery. Lots of bad news here.

-

Bn_am about 10 years ago: No, we did not move any of the drives. Do you think that a failed battery can cause the controller to report an "invalid drive movement"?

-

Bn_am about 10 years ago: We think that some of the disks that are OK now had failed and then came back, and that this is why the controller reports that we moved something. Do you think this is possible?

-

ewwhite about 10 years ago: @Bn_am If you allowed the server to reboot and bypassed the RAID controller prompts at boot that asked about the array, then yes, you may have lost your data. In either case, rebooting the enclosure with two failed drives in a RAID5 array was a dangerous and bad action. Right now, are all of the drives showing healthy?

-

Bn_am about 10 years ago: The situation has changed, and we now have a logical drive disabled, as in your example. We have to select either F1 or F2, and we don't know which. When you say "You should have corrected the disk failures rather than reboot the enclosure/system", what do you mean?

-

ewwhite about 10 years ago: @Bn_am I mean that you should not have rebooted the system because you had failed disks. If you are being presented with the F1 or F2 option, please choose F2.

-

ewwhite about 10 years ago: @Bn_am Yes. Choose F2.

-

Bn_am about 10 years ago: That seems to work. The data are there. Thank you very much.

-

ewwhite about 10 years ago: @Bn_am I'm glad you were able to recover your data. You may want to fix the bad battery and update system firmware when you have an opportunity.