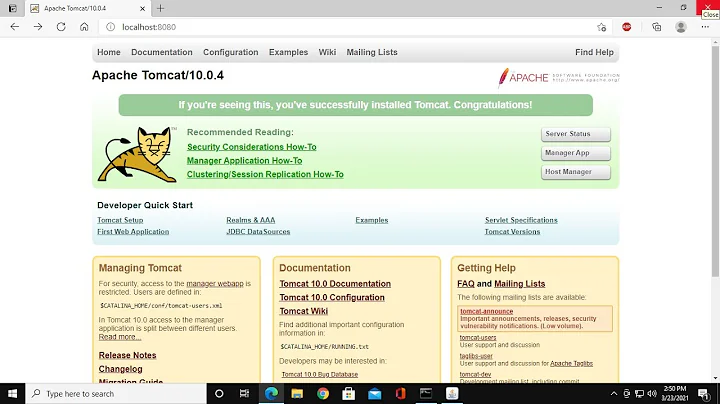

Measuring the number of queued requests for tomcat

Solution 1

This thread on the mailing list, and the reply from Charles, suggest that no such JMX attribute exists.

Quote from Chuck: "Note that the accept queue is not visible to Tomcat, since it's maintained by the comm stack of the OS."

Quote from David: "Unfortunately, since Tomcat knows nothing about the requests in the accept queue,...."

Is there no way to get this information (how many requests are in the accept queue) out?

No, the accept queue is completely invisible. Only the comm stack knows anything about it, and there are no APIs I'm aware of to query the contents, because the content hasn't been received yet, only the connection request.

Depending on what your real problem is (i.e. measuring requests in the accept queue which Tomcat has not yet begun processing), if you're looking for a "throttling solution", see this follow-up on the same thread.

Solution 2

The accept queue cannot be monitored, but you can get the number of requests queued inside Tomcat by using an Executor.

<Executor name="tomcatThreadPool" namePrefix="catalina-exec-" maxThreads="20" minSpareThreads="10" maxQueueSize="30" />

<Connector port="8080" protocol="HTTP/1.1" executor="tomcatThreadPool" connectionTimeout="20000" redirectPort="8443" maxConnections="50" />

The setting maxThreads="20" means the thread pool has at most 20 workers, so it can process 20 requests simultaneously.

maxQueueSize="30" means that the thread pool can queue at most 30 unprocessed requests. You can therefore monitor the queueSize attribute via JMX to get the number of queued requests.

By default, however, the thread pool queue never holds any requests, because the default value of maxConnections is the value of maxThreads (this is the default for the blocking BIO connector; the NIO connector defaults to 10000). When all workers are busy, new requests therefore wait in the accept queue instead.

By setting maxConnections="50", Tomcat can accept more requests than maxThreads (20). In the example above, the Executor thread pool handles 20 requests at a time, the next 30 requests are held in the thread pool queue, and any further requests wait in the accept queue.
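The arithmetic in that example can be written out as a small model. This is only a sketch of the description above, not Tomcat's actual logic: the class `QueueModel`, its `place` method, and the `Placement` holder are hypothetical names, and the model assumes every accepted connection carries exactly one request.

```java
// Simplified model of where concurrent requests end up, following the
// description in the answer. All names here are illustrative only.
class QueueModel {

    /** Result holder: how many requests sit at each stage. */
    static final class Placement {
        final int processing;     // being handled by worker threads
        final int executorQueue;  // accepted, waiting in the thread pool queue
        final int acceptQueue;    // not yet accepted, waiting in the OS accept queue
        final int refused;        // beyond acceptCount

        Placement(int processing, int executorQueue, int acceptQueue, int refused) {
            this.processing = processing;
            this.executorQueue = executorQueue;
            this.acceptQueue = acceptQueue;
            this.refused = refused;
        }

        @Override
        public String toString() {
            return "processing=" + processing + ", executorQueue=" + executorQueue
                    + ", acceptQueue=" + acceptQueue + ", refused=" + refused;
        }
    }

    static Placement place(int requests, int maxThreads, int maxQueueSize,
                           int maxConnections, int acceptCount) {
        // Tomcat accepts at most maxConnections connections at once.
        int accepted = Math.min(requests, maxConnections);
        // Up to maxThreads of the accepted requests are processed by workers.
        int processing = Math.min(accepted, maxThreads);
        // The remaining accepted requests wait in the executor queue.
        // Assumption: maxConnections - maxThreads <= maxQueueSize, as in the example.
        int executorQueue = Math.min(accepted - processing, maxQueueSize);
        // Everything else waits in the OS accept queue, up to acceptCount.
        int notAccepted = requests - processing - executorQueue;
        int acceptQueue = Math.min(notAccepted, acceptCount);
        int refused = notAccepted - acceptQueue;
        return new Placement(processing, executorQueue, acceptQueue, refused);
    }

    public static void main(String[] args) {
        // 60 concurrent requests against the configuration shown above,
        // with acceptCount left at 100.
        System.out.println(place(60, 20, 30, 50, 100));
    }
}
```

With 60 concurrent requests the model reports 20 processing, 30 in the executor queue (the part visible through queueSize) and 10 in the accept queue (the part that stays invisible).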

You can now monitor the number of requests queued in the thread pool using the MBean 'Catalina:type=Executor,name=tomcatThreadPool' and its attribute 'queueSize'.
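A minimal sketch of reading that attribute from a separate JVM over remote JMX might look like the following. It assumes Tomcat was started with remote JMX enabled and that the engine uses the default `Catalina` JMX domain; the class name `ExecutorQueueProbe` and its helper methods are hypothetical, and the JMX service URL has to be supplied by you.

```java
import javax.management.MBeanServerConnection;
import javax.management.MalformedObjectNameException;
import javax.management.ObjectName;
import javax.management.remote.JMXConnector;
import javax.management.remote.JMXConnectorFactory;
import javax.management.remote.JMXServiceURL;

// Illustrative JMX client; names are hypothetical, not part of Tomcat.
class ExecutorQueueProbe {

    /** Builds the MBean name quoted in the answer for a given executor name. */
    static ObjectName executorName(String executor) {
        try {
            return new ObjectName("Catalina:type=Executor,name=" + executor);
        } catch (MalformedObjectNameException e) {
            throw new IllegalArgumentException("Bad executor name: " + executor, e);
        }
    }

    /** Reads the current number of tasks waiting in the executor queue. */
    static int readQueueSize(MBeanServerConnection conn, String executor) throws Exception {
        Object value = conn.getAttribute(executorName(executor), "queueSize");
        return ((Number) value).intValue();
    }

    public static void main(String[] args) throws Exception {
        if (args.length == 0) {
            System.out.println("Usage: java ExecutorQueueProbe <jmx-service-url>");
            return;
        }
        // JMXConnector is Closeable, so try-with-resources closes the connection.
        try (JMXConnector connector = JMXConnectorFactory.connect(new JMXServiceURL(args[0]))) {
            int queued = readQueueSize(connector.getMBeanServerConnection(), "tomcatThreadPool");
            System.out.println("queueSize=" + queued);
        }
    }
}
```

A typical service URL has the form `service:jmx:rmi:///jndi/rmi://localhost:9010/jmxrmi`, where host and port 9010 are placeholders for whatever your JMX settings use. An embedded Tomcat may register its MBeans under a different domain than `Catalina`, so check the name in a JMX console first.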


Comments

-

Michael Neale almost 2 years

So with tomcat you can set the acceptCount value (default is 100) which means when all the worker threads are busy - new connections are placed in a queue (until it is full, after which they are rejected).

What I would like is to monitor the number of items in this queue, but I can't work out whether there is a way to get at this via JMX (i.e. not the maximum queue size, which is just config, but the current number of items in the queue).

Any ideas appreciated.

Config for tomcat: http://tomcat.apache.org/tomcat-6.0-doc/config/http.html (search for "acceptCount")

-

Michael Neale about 13 years

Interesting, I suspected that. I know with Jetty you can have it queue requests up (which sounds like one of the solutions someone was proposing on the ML), but that seems a bit different from what Tomcat does currently.

-

JoseK about 13 years

@Michael: I'm interested in your use case. Do you want to filter out certain URL patterns or prioritize any servlets, i.e. why would one want to monitor pending requests?

-

Michael Neale about 13 years

Actually I am more interested in a metric of overall "load" of the server, i.e. to trigger monitor events.

-

Michael Neale about 13 years

IIRC Jetty can queue up requests (i.e. in process, in a measurable way), which is what sparked this for me: to see if there was the same for Tomcat (I have no idea if it is a good idea or not, just curious!)

-

rustyx about 11 years

Think of this differently: the accept queue is by itself a backup solution for situations when no more worker threads are available. It is far more useful to monitor the available worker threads instead and generate alerts when 0 workers are available for a prolonged amount of time. This would mean the server isn't able to handle the load.
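The alerting idea in the last comment could be sketched roughly as follows. The class `WorkerSaturationMonitor` is hypothetical, and where the busy-thread samples come from is left to you (for instance an `activeCount` or `currentThreadsBusy` attribute read via JMX, depending on what your Tomcat version exposes). The sketch only covers the "0 workers available for a prolonged time" logic.

```java
import java.time.Duration;

// Illustrative helper: raises an alert once all workers have been busy
// continuously for at least the configured threshold.
class WorkerSaturationMonitor {
    private final Duration threshold;
    private long saturatedSinceMillis = -1; // -1 means "not currently saturated"

    WorkerSaturationMonitor(Duration threshold) {
        this.threshold = threshold;
    }

    /**
     * Feeds one sample and returns true when no worker has been available
     * for at least the threshold duration.
     */
    boolean sample(int busyThreads, int maxThreads, long nowMillis) {
        if (busyThreads < maxThreads) {
            // At least one worker is free again, so reset the timer.
            saturatedSinceMillis = -1;
            return false;
        }
        if (saturatedSinceMillis < 0) {
            // First sample of a new saturation period.
            saturatedSinceMillis = nowMillis;
        }
        return nowMillis - saturatedSinceMillis >= threshold.toMillis();
    }

    public static void main(String[] args) {
        WorkerSaturationMonitor monitor = new WorkerSaturationMonitor(Duration.ofSeconds(30));
        System.out.println(monitor.sample(20, 20, 0));      // false: saturation just started
        System.out.println(monitor.sample(20, 20, 30_000)); // true: saturated for 30 s
        System.out.println(monitor.sample(12, 20, 31_000)); // false: workers are free again
    }
}
```

Passing the timestamp in explicitly keeps the logic independent of the polling mechanism, so it can be driven by any scheduler.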
