Monte Carlo Tree Search: Implementation for Tic-Tac-Toe

Solution 1

Ok, I solved the problem by adding the code:

//If this move is terminal and the opponent wins, this means we have

//previously made a move where the opponent can always find a move to win.. not good

if (game.GetWinner() == Opponent(startPlayer))

{

current.parent.value = int.MinValue;

return 0;

}

I think the problem was that the search space was too small. This ensures that even if selection does select a move that is actually terminal, this move is never chosen and resource are used to explore other moves instead :).

Now the AI vs AI always plays tie and the Ai is impossible to beat as human :-)

Solution 2

I think your answer shouldn't be marked as accepted. For Tic-Tac-Toe the search space is relatively small and optimal action should be found within a reasonable number of iterations.

It looks like your update function (backpropagation) adds the same amount of reward to nodes at different tree levels. This is not correct, since states current players are different at different tree levels.

I suggest you take a look at backpropagation in the UCT method from this example: http://mcts.ai/code/python.html

You should update node's total reward based on the reward calculated by previous player at specific level (node.playerJustMoved in the example).

Solution 3

My very first guess is, that the way your algorithm works, chooses the step which leads most likely to win the match (has most wins in endnodes).

Your example which shows the AI 'failing', is therefore not a 'bug', if I am correct. This way of valueing moves proceeds from enemy random moves. This logic fails, because it's obvious for the player which 1-step is to take to win the match.

Therefore you should erase all nodes which contain a next node with win for the player.

Maybe I am wrong, was just a first guess...

Solution 4

So it is possible in any random based heuristic that you simply do not search a representative sample of the game space. E.g. its theoretically possible that you randomly sample exactly the same sequence 100 times, ignoring completely the neighbouring branch which loses. This sets it apart from more typical search algorithms which attempt to find every move.

However, much more likely this is a failed implementation. The game tree of tick tack to is not very large, being about 9! at move one, and shrinking rapidly, so its improbable that the tree search doesn't search every move for a reasonable number of iterations, and hence should find an optimal move.

Without your code, I cannot really provide further comment.

If i was going to guess, i would say that perhaps you are choosing moves based on the largest number of victories, rather than the largest fraction of victories, and hence generally biasing selection towards the moves that were searched most times.

Related videos on Youtube

MortenGR

Updated on October 06, 2022Comments

-

MortenGR over 1 year

Edit: Uploded the full source code if you want to see if you can get the AI to perform better: https://www.dropbox.com/s/ous72hidygbnqv6/MCTS_TTT.rar

Edit: The search space is searched and moves resulting in losses are found. But moves resulting in losses are not visited very often due to the UCT algorithm.

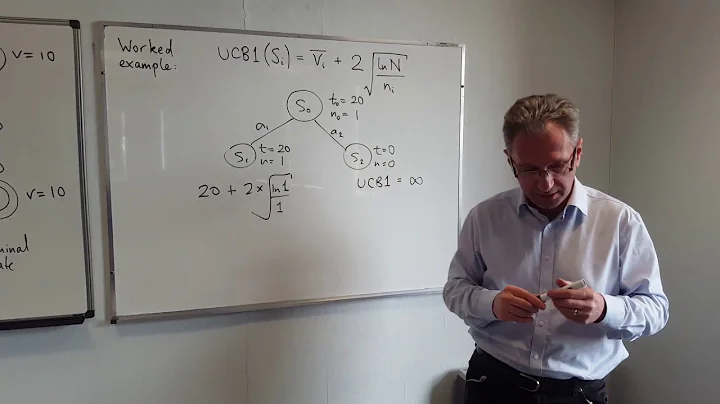

To learn about MCTS (Monte Carlo Tree Search) I've used the algorithm to make an AI for the classic game of tic-tac-toe. I have implemented the algorithm using the following design:

The tree policy is based on UCT and the default policy is to perform random moves until the game ends. What I have observed with my implementation is that the computer sometimes makes errorneous moves because it fails to "see" that a particular move will result in a loss directly.

The tree policy is based on UCT and the default policy is to perform random moves until the game ends. What I have observed with my implementation is that the computer sometimes makes errorneous moves because it fails to "see" that a particular move will result in a loss directly.For instance:

Notice how the action 6 (red square) is valued slightly higher than the blue square and therefore the computer marks this spot. I think this is because the game policy is based on random moves and therefore a good chance exist that the human will not put a "2" in the blue box. And if the player does not put a 2 in the blue box, the computer is gaurenteed a win.

Notice how the action 6 (red square) is valued slightly higher than the blue square and therefore the computer marks this spot. I think this is because the game policy is based on random moves and therefore a good chance exist that the human will not put a "2" in the blue box. And if the player does not put a 2 in the blue box, the computer is gaurenteed a win.My Questions

1) Is this a known issue with MCTS or is it a result of a failed implementation?

2) What could be possible solutions? I'm thinking about confining the moves in the selection phase but I'm not sure :-)

The code for the core MCTS:

//THE EXECUTING FUNCTION public unsafe byte GetBestMove(Game game, int player, TreeView tv) { //Setup root and initial variables Node root = new Node(null, 0, Opponent(player)); int startPlayer = player; helper.CopyBytes(root.state, game.board); //four phases: descent, roll-out, update and growth done iteratively X times //----------------------------------------------------------------------------------------------------- for (int iteration = 0; iteration < 1000; iteration++) { Node current = Selection(root, game); int value = Rollout(current, game, startPlayer); Update(current, value); } //Restore game state and return move with highest value helper.CopyBytes(game.board, root.state); //Draw tree DrawTree(tv, root); //return root.children.Aggregate((i1, i2) => i1.visits > i2.visits ? i1 : i2).action; return BestChildUCB(root, 0).action; } //#1. Select a node if 1: we have more valid feasible moves or 2: it is terminal public Node Selection(Node current, Game game) { while (!game.IsTerminal(current.state)) { List<byte> validMoves = game.GetValidMoves(current.state); if (validMoves.Count > current.children.Count) return Expand(current, game); else current = BestChildUCB(current, 1.44); } return current; } //#1. Helper public Node BestChildUCB(Node current, double C) { Node bestChild = null; double best = double.NegativeInfinity; foreach (Node child in current.children) { double UCB1 = ((double)child.value / (double)child.visits) + C * Math.Sqrt((2.0 * Math.Log((double)current.visits)) / (double)child.visits); if (UCB1 > best) { bestChild = child; best = UCB1; } } return bestChild; } //#2. Expand a node by creating a new move and returning the node public Node Expand(Node current, Game game) { //Copy current state to the game helper.CopyBytes(game.board, current.state); List<byte> validMoves = game.GetValidMoves(current.state); for (int i = 0; i < validMoves.Count; i++) { //We already have evaluated this move if (current.children.Exists(a => a.action == validMoves[i])) continue; int playerActing = Opponent(current.PlayerTookAction); Node node = new Node(current, validMoves[i], playerActing); current.children.Add(node); //Do the move in the game and save it to the child node game.Mark(playerActing, validMoves[i]); helper.CopyBytes(node.state, game.board); //Return to the previous game state helper.CopyBytes(game.board, current.state); return node; } throw new Exception("Error"); } //#3. Roll-out. Simulate a game with a given policy and return the value public int Rollout(Node current, Game game, int startPlayer) { Random r = new Random(1337); helper.CopyBytes(game.board, current.state); int player = Opponent(current.PlayerTookAction); //Do the policy until a winner is found for the first (change?) node added while (game.GetWinner() == 0) { //Random List<byte> moves = game.GetValidMoves(); byte move = moves[r.Next(0, moves.Count)]; game.Mark(player, move); player = Opponent(player); } if (game.GetWinner() == startPlayer) return 1; return 0; } //#4. Update public unsafe void Update(Node current, int value) { do { current.visits++; current.value += value; current = current.parent; } while (current != null); }-

MortenGR almost 10 yearsGroo: If I understand it correctly, Monte Carlo Tree Search does not use heutistics (it can be used in games such as go where domain knowledge is hard to specify). In the roll-out phase, a specific policy is used to simulate the game, and this is often (again, if I understand the algorithm correctly), random moves

-

-

MortenGR almost 10 yearsThanks for the reply. So if I understand it correctly, your solution is to erase all moves that could result in a loss (for the player) on the next turn. I've thought about this also, but I would like something with a little more finesse :-)

-

Martin Tausch almost 10 yearsI am usually not the guy speaking too theoretically, but I will think about it :) It's a very interesting question!

Martin Tausch almost 10 yearsI am usually not the guy speaking too theoretically, but I will think about it :) It's a very interesting question! -

MortenGR almost 10 yearsThanks for the reply. I have added the code to the post if you would like to see it. The search space (and thereby moves that could result in loss) are identified in the tree, but they are not visited often because of the UCT algorithm for selection. Using the previous example see this expanded tree: dropbox.com/s/muwew62f7edaszw/ttt2.png. Performing action 3 CAN lead to the human choosing action 2 resulting in 0 value. But it can also lead to action 5,6 or 8 resulting in a lot more value. Notice how action 2 is only visited 10 times.

-

dotNET over 7 yearsThe link at the top of this page is dead. Can you upload entire project somewhere and share the new link? I'm planning on learning your example and then expanding it to create AI for a cards game.

dotNET over 7 yearsThe link at the top of this page is dead. Can you upload entire project somewhere and share the new link? I'm planning on learning your example and then expanding it to create AI for a cards game. -

MortenGR over 7 yearsYou can download it here: drive.google.com/file/d/0B6Fm7aj1SzBlWGI4bXRzZXBJNTA/… (switched from DropBox to Google Drive a while ago) Even if I got the AI to work, I'm not sure I ever got it to work fully according to the MCTS. If you make progress on the AI, I would be grateful if you could share it (or point out my mistakes :-))

-

dotNET over 7 yearsAI doesn't seem to be doing well. Consider the following board: 1-0-2 2-0-0 1-0-0

dotNET over 7 yearsAI doesn't seem to be doing well. Consider the following board: 1-0-2 2-0-0 1-0-0Compute P1should now result in a1in the bottom-right cell, but instead it suggests it for middle-right. Something you already know? Or is this a result of not correctly implementing MCTS as you suggested? -

MortenGR over 7 yearsI haven't touched the code since 2014. I'm afraid I can't remember and don't have time to look at it. But if you do create a better AI, let me know and i'll update this post.