Most efficient sorting algorithm for a large set of numbers

Solution 1

First, you will need a map of word -> count. 50,000 words is not much - it will easily fit in memory, so there's nothing to worry. In C++ you can use the standard STL std::map.

Then, once you have the map, you can copy all the map keys to a vector.

Then, sort this vector using a custom comparison operator: instead of comparing the words, compare the counts from the map. (Don't worry about the specific sorting algorithm - your array is not that large, so any standard library sort will work for you.)

Solution 2

I'd start with a quicksort and go from there.

Check out the wiki page on sorting algorithms, though, to learn the differences.

Solution 3

You should try an MSD radix sort. It will sort your entries in lexicographical order. Here is a google code project you might be interested in.

Solution 4

Have a look at the link. A Pictorial representation on how different algorithm works. This will give you an hint!

Solution 5

In almost every case I've ever tested, Quicksort worked the best for me. However, I did have two cases where Combsort was the best. Could have been that combsort was better in those cases because the code was so small, or due to some quirk in how ordered the data was.

Any time sorting shows up in my profile, I try the major sorts. I've never had anything that topped both Quicksort and Combsort.

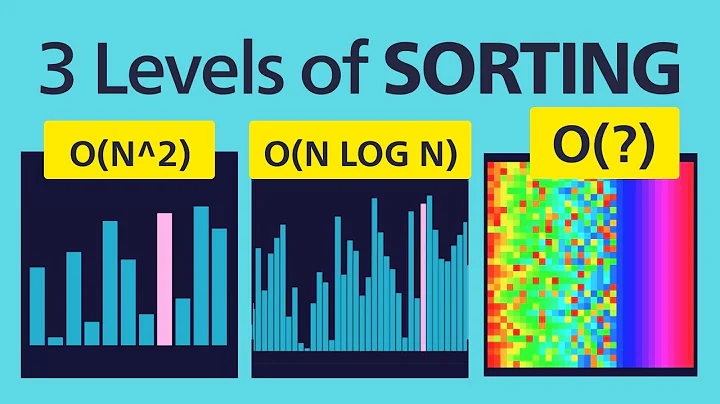

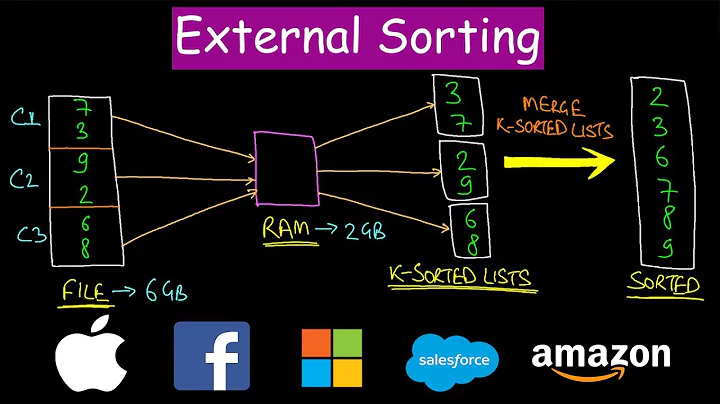

Related videos on Youtube

aterimperator

Updated on January 28, 2020Comments

-

aterimperator over 4 years

I'm working on a large project, I won't bother to summarize it here, but this section of the project is to take a very large document of text (minimum of around 50,000 words (not unique)), and output each unique word in order of most used to least used (probably top three will be "a" "an" and "the").

My question is of course, what would be the best sorting algorithm to use? I was reading of counting sort, and I like it, but my concern is that the range of values will be too large compared to the number of unique words.

Any suggestions?

-

EricWhat language are you using? Some languages have built in handlers for some of these things (like LINQ).

-

-

Matthew Vines almost 15 years+1 for the link. All programmers need at least a basic understanding in sorting algorithms.

-

Tom Leys almost 15 yearsI like this one better vision.bc.edu/~dmartin/teaching/sorting/anim-html/all.html

-

Tom Leys almost 15 yearsFirst step O(n*m) where n is number of words in input, m is number of unique words. Second step uses O(m) memory and does so with a random access pattern - awful for cache. If the third step was used to feed into another peice of code it would need to have o(n) memory allocated. - All this means your code would have poor memory performance which will dominate any apparent code improvements.

-

Tom Leys almost 15 yearsIf you used a really efficient hash the first step might only be O(m), if you are very lucky and there are no hash collisions.

-

aterimperator almost 15 yearsBoth of those seem to suggest shell is best.

-

Olfan about 11 yearsAs of 2013-03-18, both the link in the answer and the link in Tom Leys' comment are dead.

-

arunmoezhi about 11 yearsThis might be a late reply. But I totally agree with you. Combsort is really fast. What is surprising is that combsort is a slight variation of bubblesort which is damn slow. I was not able to find any references which talks about the complexity analysis of combsort. Wiki says the average complexity is

arunmoezhi about 11 yearsThis might be a late reply. But I totally agree with you. Combsort is really fast. What is surprising is that combsort is a slight variation of bubblesort which is damn slow. I was not able to find any references which talks about the complexity analysis of combsort. Wiki says the average complexity isn^2/2^p. But there are no details on how that is achieved.