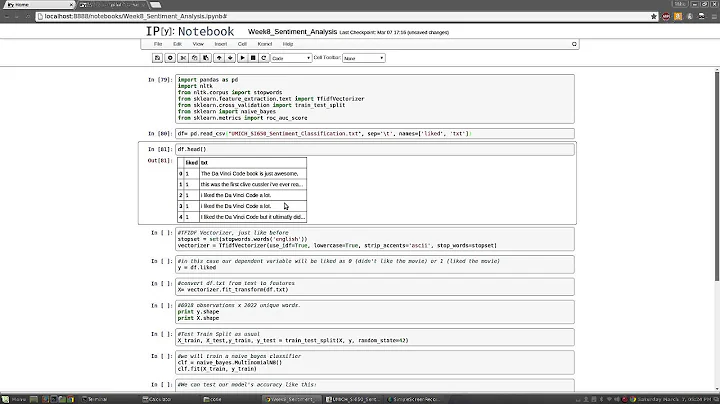

nltk NaiveBayesClassifier training for sentiment analysis

Solution 1

You need to change your data structure. Here is your train list as it currently stands:

>>> train = [('I love this sandwich.', 'pos'),
...          ('This is an amazing place!', 'pos'),
...          ('I feel very good about these beers.', 'pos'),
...          ('This is my best work.', 'pos'),
...          ("What an awesome view", 'pos'),
...          ('I do not like this restaurant', 'neg'),
...          ('I am tired of this stuff.', 'neg'),
...          ("I can't deal with this", 'neg'),
...          ('He is my sworn enemy!', 'neg'),
...          ('My boss is horrible.', 'neg')]

The problem, though, is that NaiveBayesClassifier.train expects the first element of each tuple to be a dictionary of features, not a raw string. So I will change your list into a data structure that the classifier can work with:

>>> from nltk.tokenize import word_tokenize # or use some other tokenizer

>>> all_words = set(word.lower() for passage in train for word in word_tokenize(passage[0]))

>>> t = [({word: (word in word_tokenize(x[0])) for word in all_words}, x[1]) for x in train]

Your data should now be structured like this:

>>> t

[({'this': True, 'love': True, 'deal': False, 'tired': False, 'feel': False, 'is': False, 'am': False, 'an': False, 'sandwich': True, 'ca': False, 'best': False, '!': False, 'what': False, '.': True, 'amazing': False, 'horrible': False, 'sworn': False, 'awesome': False, 'do': False, 'good': False, 'very': False, 'boss': False, 'beers': False, 'not': False, 'with': False, 'he': False, 'enemy': False, 'about': False, 'like': False, 'restaurant': False, 'these': False, 'of': False, 'work': False, "n't": False, 'i': False, 'stuff': False, 'place': False, 'my': False, 'view': False}, 'pos'), . . .]
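The transformation above can be wrapped in a small reusable function. This is a sketch, not the answer's code: it uses a hypothetical regex `tokenize` as a stand-in for `word_tokenize` (so it will not split "can't" into 'ca' and "n't" the way NLTK's Treebank tokenizer does), lowercases each sentence the same way `all_words` is lowercased, and tokenizes each sentence once instead of once per vocabulary word.

```python
import re


def tokenize(text):
    # Hypothetical stand-in for nltk.word_tokenize: lowercased runs of
    # word characters, or single punctuation marks.
    return re.findall(r"\w+|[^\w\s]", text.lower())


def build_vocabulary(labeled_sentences):
    # Every lowercased token that occurs anywhere in the training data.
    return {word for sentence, _ in labeled_sentences for word in tokenize(sentence)}


def featurize(sentence, vocabulary):
    # One boolean feature per vocabulary word: is it present in this sentence?
    tokens = set(tokenize(sentence))  # tokenize once; set gives fast lookups
    return {word: (word in tokens) for word in vocabulary}


train = [('I love this sandwich.', 'pos'),
         ('I do not like this restaurant', 'neg')]
all_words = build_vocabulary(train)
t = [(featurize(sentence, all_words), label) for sentence, label in train]
```

Because the sentence and the vocabulary are lowercased the same way, "I" now yields `'i': True` for the first sentence.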

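Note that the first element of each tuple is now a dictionary of features. One caveat: all_words is lowercased, but the sentences tokenized inside t are not, so capitalized tokens such as "I" and "This" are recorded as False (hence 'i': False above). Lowercasing the sentence with word_tokenize(x[0].lower()), as the test step below does, avoids this; the training output shown here reflects the original, un-lowercased version. With the data in place, you can train the classifier like so: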

>>> import nltk

>>> classifier = nltk.NaiveBayesClassifier.train(t)

>>> classifier.show_most_informative_features()

Most Informative Features
    this = True     neg : pos = 2.3 : 1.0
    this = False    pos : neg = 1.8 : 1.0
      an = False    neg : pos = 1.6 : 1.0
       . = True     pos : neg = 1.4 : 1.0
       . = False    neg : pos = 1.4 : 1.0
 awesome = False    neg : pos = 1.2 : 1.0
      of = False    pos : neg = 1.2 : 1.0
    feel = False    neg : pos = 1.2 : 1.0
   place = False    neg : pos = 1.2 : 1.0
horrible = False    pos : neg = 1.2 : 1.0
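Where do these ratios come from? A sketch, assuming NLTK's default estimator for NaiveBayesClassifier.train, ELEProbDist (expected likelihood estimation, i.e. add 0.5 to every count): each likelihood is (count + 0.5) / (N + 0.5 * B), where N is the number of sentences with that label and B is the number of distinct values the feature takes (2 here: True and False). The ratio printed is, as we understand it, the largest label likelihood divided by the smallest. The counts below are read off the training data as featurized in t, where 'this' is only matched in lowercase, so it is True in 1 of 5 positive and 3 of 5 negative sentences.

```python
# Number of sentences per label in which each feature is True (as in `t`).
true_counts = {
    'this': {'pos': 1, 'neg': 3},
    'an':   {'pos': 2, 'neg': 0},
    '.':    {'pos': 3, 'neg': 2},
}
N = {'pos': 5, 'neg': 5}  # training sentences per label


def ele(count, total, bins=2):
    # Expected likelihood estimate: add 0.5 to the count of each of `bins` values.
    return (count + 0.5) / (total + 0.5 * bins)


def likelihood(word, value, label):
    # P(word = value | label); False counts are the complement of True counts.
    count = true_counts[word][label]
    if not value:
        count = N[label] - count
    return ele(count, N[label])


def ratio(word, value):
    # Larger label likelihood divided by the smaller one.
    probs = [likelihood(word, value, label) for label in ('pos', 'neg')]
    return max(probs) / min(probs)


for word, value in [('this', True), ('this', False), ('an', False), ('.', True)]:
    print(word, value, round(ratio(word, value), 1))
```

The printed values round to 2.3, 1.8, 1.6 and 1.4, matching the first rows of the table above.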

If you want to use the classifier, you can do it like this. First, you begin with a test sentence:

>>> test_sentence = "This is the best band I've ever heard!"

Then, you tokenize the sentence and figure out which words the sentence shares with all_words. These constitute the sentence's features.

>>> test_sent_features = {word: (word in word_tokenize(test_sentence.lower())) for word in all_words}

Your features will now look like this:

>>> test_sent_features

{'love': False, 'deal': False, 'tired': False, 'feel': False, 'is': True, 'am': False, 'an': False, 'sandwich': False, 'ca': False, 'best': True, '!': True, 'what': False, 'i': True, '.': False, 'amazing': False, 'horrible': False, 'sworn': False, 'awesome': False, 'do': False, 'good': False, 'very': False, 'boss': False, 'beers': False, 'not': False, 'with': False, 'he': False, 'enemy': False, 'about': False, 'like': False, 'restaurant': False, 'this': True, 'of': False, 'work': False, "n't": False, 'these': False, 'stuff': False, 'place': False, 'my': False, 'view': False}

Then you simply classify those features:

>>> classifier.classify(test_sent_features)

'pos' # note 'best' == True in the sentence features above

This test sentence appears to be positive.
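Under the hood, classify picks the label with the highest posterior probability. The sketch below is a from-scratch re-implementation for illustration, not NLTK's code: it assumes ELE smoothing (add 0.5) for both the label prior and the feature likelihoods, which is NLTK's default as far as we know, adds up log-probabilities over all features, skips feature names never seen in training, and normalizes the scores into probabilities (NLTK exposes the equivalent through `classifier.prob_classify(features)`). It also assumes every training featureset has the same keys, as in this answer. One consequence, relevant to a question in the comments: if every feature is False, the posterior is generally not 0.5/0.5, because "word absent" is itself evidence.

```python
import math
from collections import Counter, defaultdict


def ele(count, total, bins):
    # Expected likelihood estimate: add 0.5 to the count of every possible value.
    return (count + 0.5) / (total + 0.5 * bins)


def train(labeled_featuresets):
    label_counts = Counter(label for _, label in labeled_featuresets)
    value_counts = defaultdict(Counter)  # (label, feature name) -> value counts
    seen_values = defaultdict(set)       # feature name -> values seen in training
    for features, label in labeled_featuresets:
        for name, value in features.items():
            value_counts[label, name][value] += 1
            seen_values[name].add(value)
    return label_counts, value_counts, seen_values


def prob_classify(model, features):
    label_counts, value_counts, seen_values = model
    total = sum(label_counts.values())
    log_scores = {}
    for label, count in label_counts.items():
        score = math.log(ele(count, total, len(label_counts)))  # log prior
        for name, value in features.items():
            if name not in seen_values:
                continue  # feature never seen in training: ignored
            counts = value_counts[label, name]
            score += math.log(ele(counts[value], sum(counts.values()),
                                  len(seen_values[name])))
        log_scores[label] = score
    # Turn log scores into probabilities that sum to 1 (log-sum-exp trick).
    best = max(log_scores.values())
    weights = {label: math.exp(s - best) for label, s in log_scores.items()}
    norm = sum(weights.values())
    return {label: w / norm for label, w in weights.items()}


def classify(model, features):
    probs = prob_classify(model, features)
    return max(probs, key=probs.get)
```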

Solution 2

@275365's tutorial on the data structure for NLTK's Bayesian classifier is great. At a higher level, we can look at it as follows.

We have input sentences with sentiment tags:

training_data = [('I love this sandwich.', 'pos'),
                 ('This is an amazing place!', 'pos'),
                 ('I feel very good about these beers.', 'pos'),
                 ('This is my best work.', 'pos'),
                 ("What an awesome view", 'pos'),
                 ('I do not like this restaurant', 'neg'),
                 ('I am tired of this stuff.', 'neg'),
                 ("I can't deal with this", 'neg'),
                 ('He is my sworn enemy!', 'neg'),
                 ('My boss is horrible.', 'neg')]

Let's take our features to be individual words, so we extract the set of all possible words from the training data (let's call it vocabulary):

from nltk.tokenize import word_tokenize

from itertools import chain

vocabulary = set(chain(*[word_tokenize(i[0].lower()) for i in training_data]))
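`chain(*lists)` unpacks the list of token lists into separate arguments; `chain.from_iterable` produces the same tokens but consumes the outer iterable lazily, so no intermediate list is needed. A small sketch comparing the two, assuming a plain `str.split` tokenizer in place of `word_tokenize`:

```python
from itertools import chain

sentences = ['I love this sandwich', 'This is an amazing place']
token_lists = [s.lower().split() for s in sentences]

# chain(*lists) passes each inner list as a separate argument.
vocab_star = set(chain(*token_lists))

# chain.from_iterable accepts any iterable of iterables, e.g. a generator.
vocab_lazy = set(chain.from_iterable(s.lower().split() for s in sentences))
```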

Essentially, vocabulary here is the same as @275365's all_words:

>>> all_words = set(word.lower() for passage in training_data for word in word_tokenize(passage[0]))

>>> vocabulary = set(chain(*[word_tokenize(i[0].lower()) for i in training_data]))

>>> print(vocabulary == all_words)

True

For each data point (i.e. each sentence and its pos/neg tag), we want to say whether a feature (i.e. a word from the vocabulary) exists or not.

>>> sentence = word_tokenize('I love this sandwich.'.lower())

>>> print({i: True for i in vocabulary if i in sentence})

{'this': True, 'i': True, 'sandwich': True, 'love': True, '.': True}

But we also want to tell the classifier which words are in the vocabulary but not in the sentence, so for each data point we list every word in the vocabulary and say whether it exists in the sentence or not:

>>> sentence = word_tokenize('I love this sandwich.'.lower())

>>> x = {i:True for i in vocabulary if i in sentence}

>>> y = {i:False for i in vocabulary if i not in sentence}

>>> x.update(y)

>>> print(x)

{'love': True, 'deal': False, 'tired': False, 'feel': False, 'is': False, 'am': False, 'an': False, 'good': False, 'best': False, '!': False, 'these': False, 'what': False, '.': True, 'amazing': False, 'horrible': False, 'sworn': False, 'ca': False, 'do': False, 'sandwich': True, 'very': False, 'boss': False, 'beers': False, 'not': False, 'with': False, 'he': False, 'enemy': False, 'about': False, 'like': False, 'restaurant': False, 'this': True, 'of': False, 'work': False, "n't": False, 'i': True, 'stuff': False, 'place': False, 'my': False, 'awesome': False, 'view': False}

But since this loops through the vocabulary twice, it's more efficient to do this:

>>> sentence = word_tokenize('I love this sandwich.'.lower())

>>> x = {i: (i in sentence) for i in vocabulary}

>>> print(x)

{'love': True, 'deal': False, 'tired': False, 'feel': False, 'is': False, 'am': False, 'an': False, 'good': False, 'best': False, '!': False, 'these': False, 'what': False, '.': True, 'amazing': False, 'horrible': False, 'sworn': False, 'ca': False, 'do': False, 'sandwich': True, 'very': False, 'boss': False, 'beers': False, 'not': False, 'with': False, 'he': False, 'enemy': False, 'about': False, 'like': False, 'restaurant': False, 'this': True, 'of': False, 'work': False, "n't": False, 'i': True, 'stuff': False, 'place': False, 'my': False, 'awesome': False, 'view': False}
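The one-pass version still tests membership against the token list for every vocabulary word; converting the tokens to a set first makes each test constant time on average. A sketch, assuming a whitespace tokenizer and a small made-up vocabulary, that checks the two-dictionary version and the one-pass set version produce identical features:

```python
vocabulary = {'i', 'love', 'this', 'sandwich', 'deal', 'tired'}
sentence = 'i love this sandwich'.split()

# Two passes: words present in the sentence, then words absent from it.
x = {i: True for i in vocabulary if i in sentence}
x.update({i: False for i in vocabulary if i not in sentence})

# One pass over the vocabulary, with membership tested against a set.
tokens = set(sentence)
x_fast = {i: (i in tokens) for i in vocabulary}
```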

So for each sentence, we want to tell the classifier which words exist and which don't, and also give it the pos/neg tag. We can call the result a feature_set: a list of tuples, each made up of an x (as shown above) and its tag.

>>> feature_set = [({i:(i in word_tokenize(sentence.lower())) for i in vocabulary},tag) for sentence, tag in training_data]

>>> feature_set

[({'this': True, 'love': True, 'deal': False, 'tired': False, 'feel': False, 'is': False, 'am': False, 'an': False, 'sandwich': True, 'ca': False, 'best': False, '!': False, 'what': False, '.': True, 'amazing': False, 'horrible': False, 'sworn': False, 'awesome': False, 'do': False, 'good': False, 'very': False, 'boss': False, 'beers': False, 'not': False, 'with': False, 'he': False, 'enemy': False, 'about': False, 'like': False, 'restaurant': False, 'these': False, 'of': False, 'work': False, "n't": False, 'i': True, 'stuff': False, 'place': False, 'my': False, 'view': False}, 'pos'), ...]

Then we feed these features and tags in the feature_set into the classifier to train it:

from nltk import NaiveBayesClassifier as nbc

classifier = nbc.train(feature_set)

Now you have a trained classifier. When you want to tag a new sentence, you have to "featurize" it to see which words in the new sentence are in the vocabulary that the classifier was trained on:

>>> test_sentence = "This is the best band I've ever heard! foobar"

>>> featurized_test_sentence = {i:(i in word_tokenize(test_sentence.lower())) for i in vocabulary}

NOTE: As you can see from the step above, this setup cannot make use of out-of-vocabulary words: the foobar token simply disappears once you featurize the sentence, because the features are defined only over the training vocabulary.
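To see the out-of-vocabulary effect concretely, here is a sketch with a tiny made-up vocabulary and a whitespace tokenizer (both assumptions): the feature dictionary only has keys for vocabulary words, so an unknown token never reaches the classifier, and a sentence made up entirely of unknown words gets the same all-False features as an empty one.

```python
vocabulary = {'this', 'is', 'the', 'best', 'band'}


def featurize(text):
    # Features exist only for vocabulary words; other tokens are dropped.
    tokens = set(text.lower().split())
    return {w: (w in tokens) for w in vocabulary}


with_oov = featurize('This is the best band foobar')
without_oov = featurize('This is the best band')
```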

Then you feed the featurized test sentence into the classifier and ask it to classify:

>>> classifier.classify(featurized_test_sentence)

'pos'

Hopefully this gives a clearer picture of how to feed data into NLTK's naive Bayes classifier for sentiment analysis. Here's the full code without the comments and the walkthrough:

from nltk import NaiveBayesClassifier as nbc
from nltk.tokenize import word_tokenize
from itertools import chain

training_data = [('I love this sandwich.', 'pos'),
                 ('This is an amazing place!', 'pos'),
                 ('I feel very good about these beers.', 'pos'),
                 ('This is my best work.', 'pos'),
                 ("What an awesome view", 'pos'),
                 ('I do not like this restaurant', 'neg'),
                 ('I am tired of this stuff.', 'neg'),
                 ("I can't deal with this", 'neg'),
                 ('He is my sworn enemy!', 'neg'),
                 ('My boss is horrible.', 'neg')]

vocabulary = set(chain(*[word_tokenize(i[0].lower()) for i in training_data]))

feature_set = [({i: (i in word_tokenize(sentence.lower())) for i in vocabulary}, tag)
               for sentence, tag in training_data]

classifier = nbc.train(feature_set)

test_sentence = "This is the best band I've ever heard!"
featurized_test_sentence = {i: (i in word_tokenize(test_sentence.lower())) for i in vocabulary}

print("test_sent: " + test_sentence)
print("tag: " + classifier.classify(featurized_test_sentence))

Solution 3

It appears that you are trying to use TextBlob but are training the NLTK NaiveBayesClassifier, which, as pointed out in other answers, must be passed a dictionary of features.

TextBlob has a default feature extractor that indicates which words in the training set are included in the document (as demonstrated in the other answers). Therefore, TextBlob allows you to pass in your data as is.

from textblob.classifiers import NaiveBayesClassifier

train = [('This is an amazing place!', 'pos'),
         ('I feel very good about these beers.', 'pos'),
         ('This is my best work.', 'pos'),
         ("What an awesome view", 'pos'),
         ('I do not like this restaurant', 'neg'),
         ('I am tired of this stuff.', 'neg'),
         ("I can't deal with this", 'neg'),
         ('He is my sworn enemy!', 'neg'),
         ('My boss is horrible.', 'neg')]

test = [('The beer was good.', 'pos'),
        ('I do not enjoy my job', 'neg'),
        ("I ain't feeling dandy today.", 'neg'),
        ("I feel amazing!", 'pos'),
        ('Gary is a friend of mine.', 'pos'),
        ("I can't believe I'm doing this.", 'neg')]

classifier = NaiveBayesClassifier(train)  # Pass in data as is

# When classifying text, features are extracted automatically
classifier.classify("This is an amazing library!")  # => 'pos'

Of course, the simple default extractor is not appropriate for all problems. If you would like to change how features are extracted, you can write a function that takes a string of text as input and returns a dictionary of features, then pass it to the classifier:

classifier = NaiveBayesClassifier(train, feature_extractor=my_extractor_func)
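As an illustration, my_extractor_func could look like the hypothetical sketch below: it takes a string and returns a dictionary of features. The feature names (`contains(...)`, `has_negation`) and the `NEGATIONS` word list are made up for this example and are not part of TextBlob.

```python
import re

NEGATIONS = {'not', 'no', 'never'}  # hypothetical, illustrative list


def my_extractor_func(document):
    # Lowercased word tokens, keeping apostrophes so "can't" stays one token.
    tokens = re.findall(r"[a-z']+", document.lower())
    features = {'contains({})'.format(t): True for t in tokens}
    # One extra feature flagging negation, e.g. "not" or "can't".
    features['has_negation'] = any(t in NEGATIONS or t.endswith("n't") for t in tokens)
    return features
```

Unlike the default extractor described above, which records True or False for every training word, this one only emits features for words present in the text, plus the negation flag.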

I encourage you to check out the short TextBlob classifier tutorial here: http://textblob.readthedocs.org/en/latest/classifiers.html


student001

Updated on July 09, 2022

student001 almost 2 years

I am training the NaiveBayesClassifier in Python using sentences, and it gives me the error below. I do not understand what the error might be, and any help would be good. I have tried many other input formats, but the error remains. The code is given below:

from text.classifiers import NaiveBayesClassifier
from text.blob import TextBlob

train = [('I love this sandwich.', 'pos'),
         ('This is an amazing place!', 'pos'),
         ('I feel very good about these beers.', 'pos'),
         ('This is my best work.', 'pos'),
         ("What an awesome view", 'pos'),
         ('I do not like this restaurant', 'neg'),
         ('I am tired of this stuff.', 'neg'),
         ("I can't deal with this", 'neg'),
         ('He is my sworn enemy!', 'neg'),
         ('My boss is horrible.', 'neg')]

test = [('The beer was good.', 'pos'),
        ('I do not enjoy my job', 'neg'),
        ("I ain't feeling dandy today.", 'neg'),
        ("I feel amazing!", 'pos'),
        ('Gary is a friend of mine.', 'pos'),
        ("I can't believe I'm doing this.", 'neg')]

classifier = nltk.NaiveBayesClassifier.train(train)

I am including the traceback below.

Traceback (most recent call last):
  File "C:\Users\5460\Desktop\train01.py", line 15, in <module>
    all_words = set(word.lower() for passage in train for word in word_tokenize(passage[0]))
  File "C:\Users\5460\Desktop\train01.py", line 15, in <genexpr>
    all_words = set(word.lower() for passage in train for word in word_tokenize(passage[0]))
  File "C:\Python27\lib\site-packages\nltk\tokenize\__init__.py", line 87, in word_tokenize
    return _word_tokenize(text)
  File "C:\Python27\lib\site-packages\nltk\tokenize\treebank.py", line 67, in tokenize
    text = re.sub(r'^\"', r'``', text)
  File "C:\Python27\lib\re.py", line 151, in sub
    return _compile(pattern, flags).sub(repl, string, count)
TypeError: expected string or buffer

student001 over 10 years

Hello. Thanks for your advice! I have a few questions, being new to this. What is keys? I also tried your method, but I get the following error: ' Traceback (most recent call last): File "C:\Users\5460\Desktop\train01.py", line 16, in <module> all_words = set(word.lower() for passage in train for word in passage[0].keys()) File "C:\Users\5460\Desktop\train01.py", line 16, in <genexpr> all_words = set(word.lower() for passage in train for word in passage[0].keys()) AttributeError: 'set' object has no attribute 'keys'' Any help will be valued! Thanks!

Justin O Barber over 10 years

@student001 Whoops. Sorry about that. I left out a line when I originally wrote this answer. I have fixed the answer now. The main change was this: all_words = set(word.lower() for passage in train for word in word_tokenize(passage[0])) You don't need to worry about keys now.

student001 over 10 years

Hello. Thanks for your time! This still gives me an error though. Error: expected string or buffer. Any ideas on this?

Justin O Barber over 10 years

@student001 If you are still having trouble, I have included my code from top to bottom in the edited answer above, beginning with your train list. If you run these statements one after the other, you should see the same results that I have shown here. I assume you are working with Python 2.x.

student001 over 10 years

Hello. I tried the same thing, but I get an error (expected string or buffer) if I try to apply the set constructor in all_words as suggested. Any ideas what might be going wrong? Thank you so much for your help!

Justin O Barber over 10 years

Are you using Python 2.7? Or are you using an earlier version?

student001 over 10 years

Yes, I am using Python 2.7.

Justin O Barber over 10 years

@student001 Are you sure you are getting the [0] at the end of passage? (If you don't have the [0], you will get a TypeError: expected string or buffer.) word_tokenize(passage) => TypeError, but word_tokenize(passage[0]) => no error. Also, does your train list still look like my train list above?

student001 over 10 years

Yep, I am doing exactly that. However, if I remove the {} brackets from the training data, it gives no error. But I am unable to use the NaiveBayesClassifier that way.

Justin O Barber over 10 years

@student001 Can you include the full error message you are receiving? (All the red text [if you are using IDLE], beginning with 'Traceback (most recent call last):'.)

Justin O Barber over 10 years

@student001 Try this: for passage in train: print passage Then post your results (or at least the first result) here, if you can. Thanks.

student001 over 10 years

It gives individual strings as sets, but the same error, sadly. So, every sentence is an individual set, is that right?

Justin O Barber over 10 years

@student001 And if you print train[1], you also get the same thing? (Make sure you print train[1] before trying to assign a value to all_words.) And no, no sentence should be a set at this point. The first element of train[1] should be 'This is an amazing place!', and the second element of train[1] should be 'pos'. The two elements are in a tuple together (not a set).

student001 over 10 years

The result is still a set for train[1].

Justin O Barber over 10 years

@student001 What does the set look like? It would really help to see all or part of the set.

student001 over 10 years

The output I get is: (set(['This is an amazing place!']), 'pos') For printing passage, it was the same, but with multiple sentences, one sentence per line.

Justin O Barber over 10 years

@student001, Ah, thank you for sharing the output. That is the problem. Notice that my train[1] is ('This is an amazing place!', 'pos'), which is a tuple. And note that the first element of that tuple is a string, not a set. If you copy my train list (which was your original train list) and assign its value to train again, the rest of the code I have written above should work without any trouble.

student001 over 10 years

Assign where exactly?

Justin O Barber over 10 years

@student001 Assign it either at the top of your .py file (if you are running this code as a module) or in your GUI/command line. Exactly as I have it in the first code snippet: train = [('I love this sandwich.', 'pos'), etc.]

student001 over 10 years

Oh thanks! Yes, that was the issue. However, will you be able to give me a testing sentence for this? Do I have to put it in a particular way as well?

student001 over 10 years

@273565 Thanks a lot for your time and help!

Shivam Agrawal about 8 years

@JustinBarber A slightly out-of-context question. Let's assume all the features in my test_sent_features are False, meaning none of the features in my data was seen before. What would be the ideal outcome? Is it a posterior probability of 0.5 for both pos and neg?

Chedi Bechikh about 7 years

Thank you for your answer. I tried importing data from a CSV file, but the problem is that for print(cl.classify("thermal spray")) I get this NameError: name 'cl' is not defined

DSM over 6 years

Strings are hashable, and dictionaries aren't. This answer gets that exactly backwards. Just try hash('abc') and hash({1:2}) at the console. The final structure may work, but the reasons given for why it works don't make any sense.

Justin O Barber over 6 years

@DSM Thanks for catching this error. I totally agree with you. It's hard to keep track of these older answers when I don't participate much in stackoverflow anymore. Cheers.

KCK almost 6 years

Can you tell me about the time taken by the above data set to train the Naive Bayes classifier? Also, an estimate for training with a corpus of 1 lakh (100,000) sentences? I am new to this and want an estimate before trying it out...

alvas almost 6 years

Nope. Not going to tell you how long it trains because (i) you should be able to run this on any modern (4-5 years ago) laptop, (ii) if not, you can use a Kaggle kernel: simply copy and paste the code. You don't need to estimate the time unless you find that it hangs on your machine; if so, use a Kaggle kernel. I promise it won't take a lot of time.

alvas almost 6 years

Try before asking. Even better, time it and tell others how long it took ;P

Marc Maxmeister almost 6 years

This DOES hang on my machine if I replace your 10 sample training sentences with 50,000, or even as few as 5000. It works with 1000 sentences, but that's too pitiful to be useful. nltk has its own classifier that doesn't break with large data sets.

alvas almost 6 years

Awesome! @MarcMaxson, you've tried it. Yes, it takes very long and this is due to the en.wikipedia.org/wiki/Curse_of_dimensionality =) And I only managed to complete the training because my machine has enough RAM to hold all the features for each document in memory.

Marc Maxmeister almost 6 years

I've described a workaround here: stackoverflow.com/questions/4576077/… @alvas

alvas almost 6 years

@MarcMaxson, I think you posted the wrong question ;P