NodeJS JSON.stringify() bottleneck

Solution 1

You've got two options:

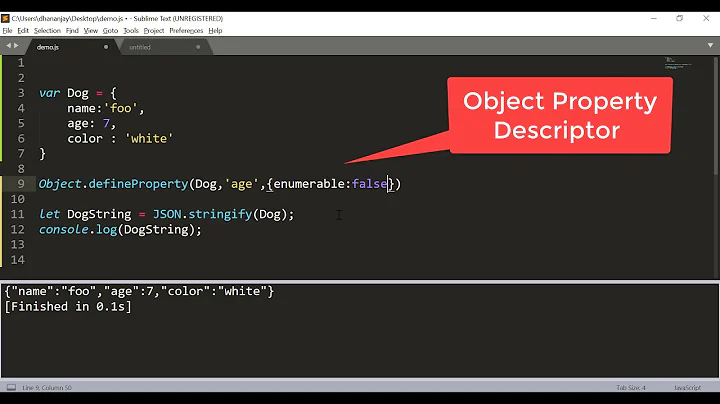

1) Find a JSON module that lets you stream the stringify operation and process it in chunks. I don't know if such a module is out there; if it's not, you'd have to build it. EDIT: Thanks to Reinard Mavronicolas for pointing out JSONStream in the comments. I've actually had it on my back burner to look for something like this, for a different use case.

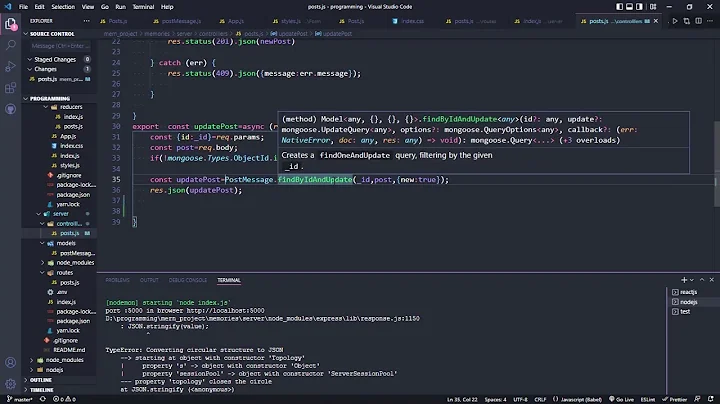

2) The async module does not use threads. You'd need to use cluster (which forks separate processes) or some other actual threading module to move the processing off the main thread. The caveat is that you're still processing a large amount of data: you gain capacity by spreading the work out, but depending on your traffic you may still hit a limit.
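To illustrate option 1 without depending on any particular module, here is a minimal sketch of chunked serialization using only Node's built-in stream module. It assumes the payload is a top-level array of records; the names `stringifyArrayInChunks` and `toJsonStream` are made up for this example and are not part of JSONStream or any other library.

```javascript
const { Readable } = require('stream');

// Yields the JSON text of an array one element at a time, so that no single
// JSON.stringify() call has to serialize the whole payload.
// (Hypothetical helper, written for this example.)
function* stringifyArrayInChunks(items) {
  yield '[';
  let first = true;
  for (const item of items) {
    // JSON.stringify returns undefined for values such as undefined or
    // functions; inside an array those are serialized as null.
    const text = JSON.stringify(item) ?? 'null';
    yield (first ? '' : ',') + text;
    first = false;
  }
  yield ']';
}

// Wraps the generator in a Readable so it can be piped to an HTTP response,
// for example: toJsonStream(rows).pipe(res)
function toJsonStream(items) {
  return Readable.from(stringifyArrayInChunks(items));
}
```

The total CPU work is about the same as a single `JSON.stringify()` call, but the response starts flowing earlier and the full 60MB string never has to exist in memory at once.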

Solution 2

Some years later, there is a new answer to the first question: the yieldable-json library. As described in this talk by Gireesh Punathil (IBM India), it can process 60MB of JSON without blocking the Node.js event loop, so the server can keep accepting new requests and throughput improves.
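The general idea of yielding to the event loop during serialization can be sketched with plain Node.js. This is not yieldable-json's implementation or API, only an illustration under the assumption that the payload is a top-level array; `stringifyArrayYielding` and its `batchSize` parameter are hypothetical names.

```javascript
// Serializes an array in batches, returning control to the event loop
// between batches so other requests can be handled in the meantime.
// (Hypothetical helper, written for this example.)
function stringifyArrayYielding(items, batchSize = 1000) {
  return new Promise((resolve, reject) => {
    const parts = [];
    let index = 0;

    function processBatch() {
      try {
        const end = Math.min(index + batchSize, items.length);
        for (; index < end; index++) {
          // Values that JSON.stringify cannot represent become null,
          // matching what it does for array elements.
          parts.push(JSON.stringify(items[index]) ?? 'null');
        }
      } catch (err) {
        reject(err);
        return;
      }
      if (index < items.length) {
        setImmediate(processBatch); // let pending I/O callbacks run first
      } else {
        resolve('[' + parts.join(',') + ']');
      }
    }

    processBatch();
  });
}
```

A single request will take somewhat longer this way, but no one call holds the event loop for the full 700ms, which is the trade-off the answer describes.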

For the second question, you can use worker threads, which are experimental in Node.js 11, to increase your web server's throughput.
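A minimal sketch of moving the stringify call into a worker thread might look like the following. The worker source is inlined as a string only to keep the example in one file, and `stringifyInWorker` is a hypothetical name. Note that passing the object as `workerData` copies it with the structured clone algorithm on the calling thread, so the actual gain depends on the data; it tends to work best when the worker builds or fetches the data itself.

```javascript
const { Worker } = require('worker_threads');

// Worker code, inlined to keep the sketch self-contained. In a real service
// this would normally live in its own file.
const workerSource = `
  const { parentPort, workerData } = require('worker_threads');
  parentPort.postMessage(JSON.stringify(workerData));
`;

// Runs JSON.stringify() on a separate thread and resolves with the string.
// (Hypothetical helper, written for this example.)
function stringifyInWorker(value) {
  return new Promise((resolve, reject) => {
    const worker = new Worker(workerSource, { eval: true, workerData: value });
    worker.once('message', resolve);
    worker.once('error', reject);
    worker.once('exit', (code) => {
      if (code !== 0) reject(new Error(`worker exited with code ${code}`));
    });
  });
}
```

Starting a worker per request has its own cost, so a small pool of long-lived workers is the usual refinement.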


Comments


gop over 1 year

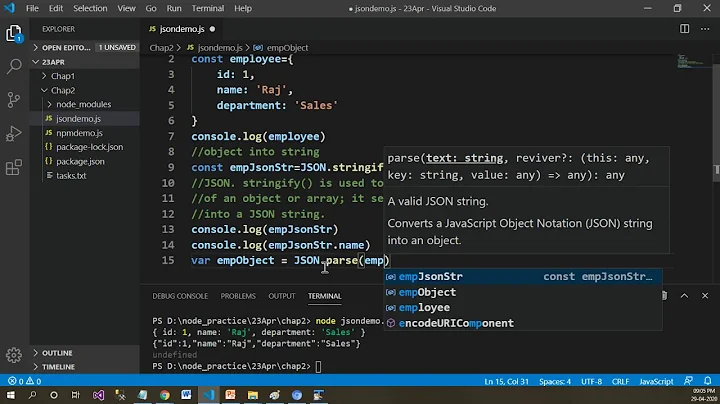

My service returns responses of very large JSON objects - around 60MB. After some profiling I have found that it spends almost all of the time doing the JSON.stringify() call which is used to convert to string and send it as a response. I have tried custom implementations of stringify and they are even slower.

This is quite a bottleneck for my service. I want to be able to handle as many requests per second as possible - currently 1 request takes 700ms.

My questions are:

1) Can I optimize the sending of response part? Is there a more effective way than stringify-ing the object and sending the response?

2) Will using the async module and performing the JSON.stringify() in a separate thread improve the overall number of requests/second (given that over 90% of the time is spent at that call)?

Rei Mavronicolas about 10 years

How are you consuming this service, i.e. do you have to serialize it as JSON? Maybe try serializing and sending as BSON instead? I would imagine you'd see a performance improvement encoding/decoding it; and the output should be smaller.

gop about 10 years

Thanks! I have another idea: to gzip the content and make my service return gzipped content instead. I found this library, github.com/sapienlab/jsonpack, which provides a function that takes the JSON object and returns the gzipped string, and it seems to me that it doesn't internally call JSON.stringify(). What do you think?

Jason about 10 years

As long as it does streamed/evented/chunked processing, that's probably fine. Dealing with that much data all in one operation without breaking it up into pieces is going to take a long time no matter what you do with it.

Rei Mavronicolas about 10 years

@Jason, you can add github.com/dominictarr/JSONStream to your answer under point number 1. Also, there seems to be another SO question similar to this one: stackoverflow.com/questions/13503844/…

hasen almost 7 years

Streaming to msgpack would probably be even better.

hrdwdmrbl about 5 years

Did you implement either of those? I am experimenting with yieldable-json, worker threads and even a native API extension like github.com/lemire/simdjson


Manuel Spigolon about 5 years

Yes, I used yieldable-json but I didn't try other approaches.