Normalization of input data in Keras

Solution 1

Add BatchNormalization as the first layer. It gives a similar effect, though it does not behave exactly like the OP's example. You can see the detailed explanation here.

Both the OP's example and batch normalization use a learned mean and standard deviation of the input data during inference. But the OP's example uses a simple mean that gives every training sample equal weight, while the BatchNormalization layer uses a moving average that gives recently-seen samples more weight than older samples.
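
To make the weighting difference concrete, here is a minimal NumPy sketch (an illustration, not Keras code). It assumes the moving-average update documented for Keras' BatchNormalization, `moving_mean = moving_mean * momentum + mean(batch) * (1 - momentum)`, with the default `momentum=0.99`; the drifting batch means are made up.

```python
import numpy as np


def simple_mean(batch_means):
    # Every batch gets equal weight, like averaging over the whole training set
    # (assuming equally sized batches).
    return float(np.mean(batch_means))


def moving_average(batch_means, momentum=0.99, init=0.0):
    # Exponential moving average with the update rule quoted above;
    # init=0.0 matches Keras' default initial moving mean.
    moving = init
    for batch_mean in batch_means:
        moving = moving * momentum + batch_mean * (1.0 - momentum)
    return float(moving)


# Made-up data: the mean of each training batch drifts from 0.0 to 1.0.
batch_means = np.linspace(0.0, 1.0, 1000)

equal_weight = simple_mean(batch_means)        # about 0.5
recent_weighted = moving_average(batch_means)  # roughly 0.9: late batches dominate
print(equal_weight, recent_weighted)
```

With a momentum of 0.99, the moving average effectively "remembers" on the order of the last hundred batches, so it tracks where the data ended up rather than where it was on average.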

Importantly, batch normalization works differently from the OP's example during training. During training, the layer normalizes its output using the mean and standard deviation of the current batch of inputs.
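
The training/inference split can be sketched in NumPy as follows. This is a simplified illustration, not the Keras source: it leaves out the learned scale and offset, and it assumes Keras' defaults of `epsilon=0.001` and moving statistics initialized to mean 0 and variance 1.

```python
import numpy as np

EPSILON = 1e-3  # Keras' default epsilon for BatchNormalization


def bn_normalize(x, training, moving_mean, moving_var, eps=EPSILON):
    # Normalization step only (no learned gamma/beta).
    if training:
        # Training: statistics of the current batch, per feature.
        mean, var = x.mean(axis=0), x.var(axis=0)
    else:
        # Inference: the stored moving statistics.
        mean, var = moving_mean, moving_var
    return (x - mean) / np.sqrt(var + eps)


rng = np.random.default_rng(0)
batch = rng.normal(loc=5.0, scale=2.0, size=(256, 3))  # made-up inputs

# Moving statistics as Keras initializes them (mean 0, variance 1),
# i.e. before any training step has updated them.
moving_mean, moving_var = np.zeros(3), np.ones(3)

train_out = bn_normalize(batch, True, moving_mean, moving_var)
infer_out = bn_normalize(batch, False, moving_mean, moving_var)

print(train_out.mean(axis=0), train_out.std(axis=0))  # about 0 and 1 per feature
print(infer_out.mean(axis=0))                         # about 5 per feature
```

The same batch comes out standardized in training mode but essentially unchanged in inference mode, because the moving statistics have not caught up with the data yet.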

A second distinction is that the OP's code produces an output with a mean of zero and a standard deviation of one. Batch Normalization instead learns a mean and standard deviation for the output that improves the entire network's loss. To get the behavior of the OP's example, Batch Normalization should be initialized with the parameters scale=False and center=False.
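
The second distinction is easiest to see in the formula y = gamma * (x - mean) / sqrt(var + epsilon) + beta. The function below is a hypothetical NumPy sketch, not the Keras implementation; calling it without gamma and beta corresponds to `keras.layers.BatchNormalization(center=False, scale=False)`, where gamma stays at 1 and beta at 0. The gamma and beta values used are invented stand-ins for learned ones.

```python
import numpy as np


def batch_norm_train(x, gamma=None, beta=None, eps=1e-3):
    # Training-mode batch norm: y = gamma * (x - mean) / sqrt(var + eps) + beta,
    # using the current batch's per-feature mean and variance.
    x_hat = (x - x.mean(axis=0)) / np.sqrt(x.var(axis=0) + eps)
    if gamma is not None:  # gamma=None behaves like scale=False (gamma fixed at 1)
        x_hat = x_hat * gamma
    if beta is not None:   # beta=None behaves like center=False (beta fixed at 0)
        x_hat = x_hat + beta
    return x_hat


rng = np.random.default_rng(1)
x = rng.normal(loc=-3.0, scale=4.0, size=(512, 2))  # made-up inputs

# Like center=False, scale=False: standardized output (mean ~0, std ~1).
standardized = batch_norm_train(x)

# Invented gamma/beta standing in for learned values: the output now has
# mean ~beta and std ~gamma, i.e. it is no longer standardized.
rescaled = batch_norm_train(x, gamma=np.array([2.0, 0.5]), beta=np.array([1.0, -1.0]))

print(standardized.mean(axis=0), standardized.std(axis=0))
print(rescaled.mean(axis=0), rescaled.std(axis=0))
```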

Solution 2

There's now a Keras layer for this purpose, Normalization. At the time of writing it is in the experimental module, keras.layers.experimental.preprocessing.

https://keras.io/api/layers/preprocessing_layers/core_preprocessing_layers/normalization/

Before you use it, you call the layer's adapt method with the data X you want to derive the scale from (i.e. mean and standard deviation). Once you do this, the scale is fixed (it does not change during training). The scale is then applied to the inputs whenever the model is used (during training and prediction).

```python
import keras
from keras.layers.experimental.preprocessing import Normalization

norm_layer = Normalization()
norm_layer.adapt(X)  # X: your training data; mean and variance are computed here, once

model = keras.Sequential()
model.add(norm_layer)
# ... Continue as usual.
```
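
For intuition about what `adapt` does, here is a hypothetical NumPy class that mimics the adapt-then-freeze behaviour. It is not the Keras implementation, and the small epsilon floor on the standard deviation is an assumption. (In later TensorFlow releases the layer is also exposed as `tf.keras.layers.Normalization`, outside the experimental module.)

```python
import numpy as np


class FrozenStandardizer:
    # Hypothetical NumPy stand-in for the adapt-then-apply behaviour of
    # Keras' Normalization layer; not its actual implementation.

    def __init__(self, eps=1e-7):
        self.eps = eps  # assumed floor for the standard deviation
        self.mean = None
        self.std = None

    def adapt(self, data):
        # Statistics are computed once, from the data given to adapt().
        data = np.asarray(data, dtype=np.float64)
        self.mean = data.mean(axis=0)
        self.std = np.maximum(data.std(axis=0), self.eps)

    def __call__(self, inputs):
        # Applying the layer only reads the stored statistics; it never updates them.
        if self.mean is None:
            raise RuntimeError("call adapt() before using the layer")
        return (np.asarray(inputs, dtype=np.float64) - self.mean) / self.std


rng = np.random.default_rng(2)
X_train = rng.uniform(0.0, 100.0, size=(1000, 4))  # made-up data
X_test = rng.uniform(50.0, 150.0, size=(200, 4))   # shifted, to show nothing re-adapts

norm = FrozenStandardizer()
norm.adapt(X_train)
mean_after_adapt = norm.mean.copy()

train_scaled = norm(X_train)  # mean ~0, std ~1 on the adapted data
test_scaled = norm(X_test)    # same fixed transform applied to new data
```

Because the statistics live inside the object (in Keras, inside the model), there is nothing extra to carry around at test time, which is exactly what the question asks for.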

Solution 3

Maybe you can use sklearn.preprocessing.StandardScaler to scale your data.

This object lets you save the scaling parameters (the fitted mean and standard deviation), so the same scaling can be applied to new data.

Then you can feed mixed-type inputs into your model and keep the scalers alongside it, let's say:

- Your_model

- [param1_scaler, param2_scaler]

Here are some links: https://www.pyimagesearch.com/2019/02/04/keras-multiple-inputs-and-mixed-data/

https://keras.io/getting-started/functional-api-guide/
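
If you go the scikit-learn route, the key point is that the fitted parameters travel with the model. The sketch below uses plain NumPy to reproduce what StandardScaler stores (its `mean_` and `scale_` attributes) and serializes them to JSON so they can be saved next to the model. The helper names and the file name are hypothetical; with scikit-learn itself you would pickle the fitted scaler instead.

```python
import json

import numpy as np


def fit_scaler_params(X):
    # Hypothetical helper: per-feature mean and standard deviation, i.e. what
    # StandardScaler keeps as mean_ and scale_ after fit().
    mean = X.mean(axis=0)
    scale = X.std(axis=0)
    scale[scale == 0.0] = 1.0  # constant features are left unscaled, as StandardScaler does
    return {"mean": mean.tolist(), "scale": scale.tolist()}


def apply_scaler_params(params, X):
    # Hypothetical helper: the same transform as StandardScaler.transform().
    return (X - np.asarray(params["mean"])) / np.asarray(params["scale"])


# Training time: fit on the training data only, then save with the model.
X_train = np.array([[1.0, 10.0], [2.0, 10.0], [3.0, 10.0]])
saved = json.dumps(fit_scaler_params(X_train))  # e.g. write to a "scaler.json" next to the model

# Inference time: reload the parameters and apply them to new data.
restored = json.loads(saved)
X_new = np.array([[2.0, 10.0], [4.0, 10.0]])
X_new_scaled = apply_scaler_params(restored, X_new)
print(X_new_scaled)  # first column: 0 and about 2.449; second column: 0
```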

Björn Lindqvist

Updated on June 04, 2022

Comments

Björn Lindqvist about 2 years

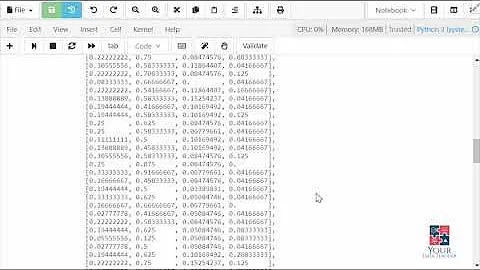

One common task in DL is that you normalize input samples to zero mean and unit variance. One can "manually" perform the normalization using code like this:

```python
import numpy as np

mean = np.mean(X, axis=0)
std = np.std(X, axis=0)
X = (X - mean) / std  # broadcasting normalizes every sample at once
```

However, then one must keep the mean and std values around to normalize the testing data, in addition to the Keras model being trained. Since the mean and std are learnable parameters, perhaps Keras can learn them? Something like this:

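```python
from keras.models import Sequential
from keras.layers import Conv2D, Dense

m = Sequential()
m.add(SomeKerasLayerForNormalizing(...))  # hypothetical: the layer being asked for
m.add(Conv2D(20, (5, 5), input_shape=(21, 100, 3), padding='valid'))
# ... rest of network
m.add(Dense(1, activation='sigmoid'))
```

I hope you understand what I'm getting at.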

Josiah Yoder about 4 years: BatchNormalization does not behave exactly like the OP's example. The OP's code learns a mean and standard deviation; batch normalization layers have no learned parameters. Instead, they compute the mean and standard deviation on each mini-batch, or a running average over recent samples that have passed through the network. This distinction is important: it is one of the primary improvements in StyleGAN2 over StyleGAN1, for instance.

Josiah Yoder about 4 years: Technically, batch normalization "learns" during feedforward rather than feedback, and continues to "learn" during inference. "Updates to the weights (moving statistics) are based on the forward pass of a model rather than the result of gradient computations."

Denziloe over 3 years: @JosiahYoder You are correct that batch norm is different from OP's question, but your statement that "batch norm layers have no learned parameters" is wrong; batch norm layers learn a scale (mean beta and standard deviation gamma) for their output data. In fact, that's one of the main reasons that batch norm is significantly different from what OP describes.

Denziloe over 3 years: Batch norm layers don't standardise the features (i.e. to mean = 0, standard deviation = 1); they linearly transform the features to an arbitrary scale, which is learned. This makes it significantly different from what the question asks.

Johannes Bauer over 3 years: From the documentation: "Batch normalization applies a transformation that maintains the mean output close to 0 and the output standard deviation close to 1." It doesn't do per-sample normalization, but as I understand the question, that's not what is asked for.

Denziloe over 3 years: Continue reading that doc page. You're quoting the first step. There's a subsequent step involving scale factor gamma and offset beta. This needs to be explicitly deactivated with scale=False, center=False.

Josiah Yoder over 3 years: @Denziloe I see what you mean. I must have been using a network that set scale=False and center=False.

Josiah Yoder over 3 years: Denziloe's comment could be kinder, but he has good advice on how to improve your answer.

Josiah Yoder over 3 years: @Wenuka Can you update your answer to reflect our comments? Then please let me know by @ mentioning me.

Josiah Yoder over 3 years: This layer is at this point in VERY early draft format. And I'm not sure I like adding an adapt method before fit. But nevertheless, it seems to do exactly what the OP is requesting.

Josiah Yoder over 3 years: @Wenuka I have made some edits. Can you accept them or revise them? Thank you!

Josiah Yoder over 3 years: According to en.wikipedia.org/wiki/Batch_normalization, batch normalization was motivated by the needs of layers within deep neural networks, though exactly why it works so well for them is poorly understood.

Josiah Yoder over 3 years: At this point, I recommend deleting this answer, as Wenuka's answer says everything this one says and more.
