NumPy: how to quickly normalize many vectors?

Solution 1

Well, unless I missed something, this does work:

vectors / norms

The problem in your suggestion is the broadcasting rules.

vectors # shape 2, 10

norms # shape 10

The shape do not have the same length! So the rule is to first extend the small shape by one on the left:

norms # shape 1,10

You can do that manually by calling:

vectors / norms.reshape(1,-1) # same as vectors/norms

If you wanted to compute vectors.T/norms, you would have to do the reshaping manually, as follows:

vectors.T / norms.reshape(-1,1) # this works

Solution 2

Computing the magnitude

I came across this question and became curious about your method for normalizing. I use a different method to compute the magnitudes. Note: I also typically compute norms across the last index (rows in this case, not columns).

magnitudes = np.sqrt((vectors ** 2).sum(-1))[..., np.newaxis]

Typically, however, I just normalize like so:

vectors /= np.sqrt((vectors ** 2).sum(-1))[..., np.newaxis]

A time comparison

I ran a test to compare the times, and found that my method is faster by quite a bit, but Freddie Witherdon's suggestion is even faster.

import numpy as np

vectors = np.random.rand(100, 25)

# OP's

%timeit np.apply_along_axis(np.linalg.norm, 1, vectors)

# Output: 100 loops, best of 3: 2.39 ms per loop

# Mine

%timeit np.sqrt((vectors ** 2).sum(-1))[..., np.newaxis]

# Output: 10000 loops, best of 3: 13.8 us per loop

# Freddie's (from comment below)

%timeit np.sqrt(np.einsum('...i,...i', vectors, vectors))

# Output: 10000 loops, best of 3: 6.45 us per loop

Beware though, as this StackOverflow answer notes, there are some safety checks not happening with einsum, so you should be sure that the dtype of vectors is sufficient to store the square of the magnitudes accurately enough.

Solution 3

Alright: NumPy's array shape broadcast adds dimensions to the left of the array shape, not to its right. NumPy can however be instructed to add a dimension to the right of the norms array:

print vectors.T / norms[:, newaxis]

does work!

Solution 4

there is already a function in scikit learn:

import sklearn.preprocessing as preprocessing

norm =preprocessing.normalize(m, norm='l2')*

More info at:

http://scikit-learn.org/stable/modules/preprocessing.html

Solution 5

My preferred way to normalize vectors is by using numpy's inner1d to calculate their magnitudes. Here's what's been suggested so far compared to inner1d

import numpy as np

from numpy.core.umath_tests import inner1d

COUNT = 10**6 # 1 million points

points = np.random.random_sample((COUNT,3,))

A = np.sqrt(np.einsum('...i,...i', points, points))

B = np.apply_along_axis(np.linalg.norm, 1, points)

C = np.sqrt((points ** 2).sum(-1))

D = np.sqrt((points*points).sum(axis=1))

E = np.sqrt(inner1d(points,points))

print [np.allclose(E,x) for x in [A,B,C,D]] # [True, True, True, True]

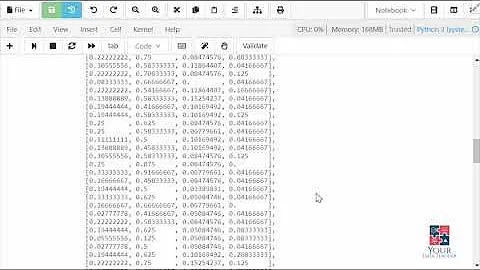

Testing performance with cProfile:

import cProfile

cProfile.run("np.sqrt(np.einsum('...i,...i', points, points))**0.5") # 3 function calls in 0.013 seconds

cProfile.run('np.apply_along_axis(np.linalg.norm, 1, points)') # 9000018 function calls in 10.977 seconds

cProfile.run('np.sqrt((points ** 2).sum(-1))') # 5 function calls in 0.028 seconds

cProfile.run('np.sqrt((points*points).sum(axis=1))') # 5 function calls in 0.027 seconds

cProfile.run('np.sqrt(inner1d(points,points))') # 2 function calls in 0.009 seconds

inner1d computed the magnitudes a hair faster than einsum. So using inner1d to normalize:

n = points/np.sqrt(inner1d(points,points))[:,None]

cProfile.run('points/np.sqrt(inner1d(points,points))[:,None]') # 2 function calls in 0.026 seconds

Testing against scikit:

import sklearn.preprocessing as preprocessing

n_ = preprocessing.normalize(points, norm='l2')

cProfile.run("preprocessing.normalize(points, norm='l2')") # 47 function calls in 0.047 seconds

np.allclose(n,n_) # True

Conclusion: using inner1d seems to be the best option

Related videos on Youtube

Bruno Feroleto

Director, Head of Data Sourcing in an asset management firm. Open-source programmer. Piano music composer. Board game enthusiast. Former Science Advisor in an asset management firm. Former Chief Data Scientist of a large multinational. Former head of Data Science for a startup. Former physicist (quantum mechanics, gravitational waves). Former Science & IT consultant. See LinkedIn profile. Author of the error propagation program "uncertainties", of the real time annotation program realtime_annotate and of the Markdown conversion program md_to_bgg. Have been teaching Python to graduate, doctoral and post-doctoral students, engineers and researchers since 2009. Have been loving science since 1980, and practicing it since 1998 (PhD in quantum physics). Good code design is a major priority for me. Started programming in 1983. Worked with a dozen programming languages (imperative [Python, Fortran, Perl, Pascal, BASIC, C, C++,…], functional [Caml], mathematical (Mathematica, Maple, IDL), stack-based [Postscript, HP RPL], constraint-based [Prolog], flow-based [LabView], and assembly [Motorola 68000, HP Saturn]). Have been working with Unix since 1994. Started programming in Python in 2006, and still loving it!

Updated on July 09, 2022Comments

-

Bruno Feroleto almost 2 years

How can a list of vectors be elegantly normalized, in NumPy?

Here is an example that does not work:

from numpy import * vectors = array([arange(10), arange(10)]) # All x's, then all y's norms = apply_along_axis(linalg.norm, 0, vectors) # Now, what I was expecting would work: print vectors.T / norms # vectors.T has 10 elements, as does norms, but this does not workThe last operation yields "shape mismatch: objects cannot be broadcast to a single shape".

How can the normalization of the 2D vectors in

vectorsbe elegantly done, with NumPy?Edit: Why does the above not work while adding a dimension to

normsdoes work (as per my answer below)?-

Geoff over 10 yearsFYI, a commenter may have a faster method, I edited my answer with more detail.

-

-

Justin Peel about 14 yearswhy not just do (vectors/norms).T if the OP wants this transposed. It seems both simple and elegant to me.

-

Bruno Feroleto about 14 yearsAh, ah! so the dimension extension is done on the left: this indeed explains the observed behavior. Thanks!

-

Bruno Feroleto over 11 yearsInteresting timing results (I obtain respectively 0.8 s and 1.4 s, with the more robust %timeit function of IPython), thanks!

-

Freddie Witherden over 10 yearsI have found

np.sqrt(np.einsum('...i,...i', vectors, vectors))to be ~4 times faster than Method 1 as given above. -

Geoff over 10 years@FreddieWitherden - Thanks for the comment, I didn't know about

einsum. There's in interesting related SO question here: stackoverflow.com/questions/18365073/… It will typically be faster, but may not be safe (depending on thedtypeof the vector). -

Geoff over 10 years@FreddieWitherden, your method gives different (but

np.allclose) values to mine. -

Geoff over 10 years@EOL - Thanks, I switched to ipython, and updated my answer. It turns out my HUGE array was giving some serious overhead. With a smaller one (in my new answer) the speed difference is much more noticeable.

-

Geoff over 10 yearsJust a note, I use

norms[..., np.newaxis]in case the matrix is not just 2D. It would work with a 3D (or more) tensor as well. -

Bruno Feroleto over 10 years@Geoff: The methods called "OP's" and "Mine" do not calculate the same thing (25 norms vs 100 norms). Thus, the order in which the elements are accessed differ, which can result in different speeds. The comparison should obviously be made on calculations that produce identical results. :)

-

Bruno Feroleto over 10 yearsInteresting information, but the question is explicitly about NumPy. It would be better put it in a comment to the original question.

-

Bruno Feroleto about 8 yearsFor reference, the question actually calls for calculating the norm along the first dimension, not the second (see the caveat added to Geoff's answer). How would this change the results? There might be an impact, because of the way memory is accessed, especially if you have a larger second dimension (instead of 3 in your example).