On Unix systems, why do we have to explicitly `open()` and `close()` files to be able to `read()` or `write()` them?

Solution 1

Dennis Ritchie mentions in «The Evolution of the Unix Time-sharing System» that open and close along with read, write and creat were present in the system right from the start.

I guess a system without open and close isn't inconceivable, but I believe it would complicate the design.

You generally want to make multiple read and write calls, not just one, and that was probably especially true on the old computers with very limited RAM that UNIX originated on. Having a handle that maintains your current file position simplifies this. If read or write were to return the handle, they'd have to return a pair -- a handle and their own return status. The handle part of the pair would be useless for all other calls, which would make that arrangement awkward. Leaving the cursor state to the kernel also lets it improve efficiency, and not only through buffering. There's also some cost associated with path lookup -- having a handle allows you to pay it only once. Furthermore, some files in the UNIX worldview don't even have a filesystem path (or didn't -- now they do with things like /proc/self/fd).
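A minimal C sketch of the cursor point, assuming a POSIX system (the function name `offset_after_reads` is made up for illustration): the path is resolved once by open(), the read() calls name neither the file nor an offset, and lseek(fd, 0, SEEK_CUR) then reports where the kernel has left the file position.

```c
#define _XOPEN_SOURCE 700
#include <fcntl.h>
#include <sys/types.h>
#include <unistd.h>

/* Hypothetical demo: open `path` once, then call read() `nreads` times,
 * `chunk` bytes at a time, on the same descriptor. No read() call names
 * the file or an offset; the kernel advances the position it keeps for
 * this open file. Returns that position afterwards, or -1 on error. */
off_t offset_after_reads(const char *path, int nreads, size_t chunk)
{
    char buf[64];
    if (chunk > sizeof buf)
        return -1;

    int fd = open(path, O_RDONLY);      /* path lookup + permission check: once */
    if (fd < 0)
        return -1;

    for (int i = 0; i < nreads; i++) {
        if (read(fd, buf, chunk) < 0) { /* returning 0 at end of file is fine */
            close(fd);
            return -1;
        }
    }

    off_t pos = lseek(fd, 0, SEEK_CUR); /* ask the kernel where we are now */
    close(fd);
    return pos;
}
```

With a 10-byte file, `offset_after_reads(path, 2, 4)` should return 8; reads stop at end of file, so asking for five 4-byte reads leaves the position at 10.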

Solution 2

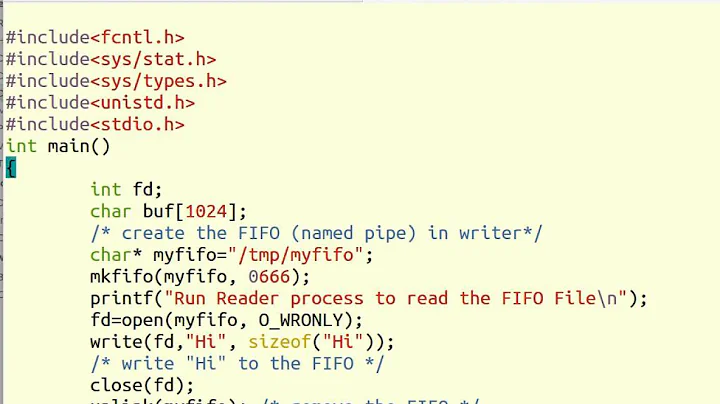

Without open and close, all of the read and write calls would have to pass this information on each operation:

- the name of the file

- the permissions of the file

- whether the caller is appending or creating

- whether the caller is done working with the file (to discard unused read-buffers and ensure write-buffers really finished writing)
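To make the list concrete, here is a sketch of a hypothetical stateless read, `stateless_read()`, which is not a real Unix call. It is emulated with POSIX open(), pread() and close(), so each call repeats the path lookup and permission check, and the caller rather than the kernel has to carry the offset from one call to the next.

```c
#define _XOPEN_SOURCE 700
#include <fcntl.h>
#include <sys/types.h>
#include <unistd.h>

/* HYPOTHETICAL stateless read: every call names the file and the offset,
 * because nothing is remembered between calls. Emulated with POSIX calls.
 * Returns bytes read (0 at end of file) or -1 on error. */
ssize_t stateless_read(const char *path, off_t offset, void *buf, size_t n)
{
    int fd = open(path, O_RDONLY);           /* lookup + permission check, every time */
    if (fd < 0)
        return -1;
    ssize_t got = pread(fd, buf, n, offset); /* read at an explicit offset */
    close(fd);                               /* nothing survives to the next call */
    return got;
}

/* Reading a whole file this way: the caller must carry the offset itself.
 * Returns the number of bytes seen, or -1 on error. */
long stateless_file_size(const char *path)
{
    char buf[4];
    off_t off = 0;
    ssize_t got;
    while ((got = stateless_read(path, off, buf, sizeof buf)) > 0)
        off += got;
    return got < 0 ? -1 : (long)off;
}
```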

Whether you consider the independent calls open, read, write and close to be simpler than a single-purpose I/O message depends on your design philosophy. The Unix developers chose to use simple operations and programs which can be combined in many ways, rather than a single operation (or program) which does everything.

Solution 3

The concept of the file handle is important because of UNIX's design choice that "everything is a file", including things that aren't part of the filesystem, such as tape drives, the keyboard and screen (or teletype!), punched card/tape readers, serial connections, network connections, and (the key UNIX invention) direct connections to other programs called "pipes".

If you look at many of the simple standard UNIX utilities like grep, especially in their original versions, you'll notice that they don't include calls to open() and close() but just read and write. The file handles are set up outside the program by the shell and passed in when it is started. So the program doesn't have to care whether it's writing to a file or to another program.
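A sketch of such a filter in C, assuming POSIX; `copy_fd` is a hypothetical helper, roughly what a minimal `cat` does. It only ever calls read() and write() on descriptors it was handed, so it cannot tell (and does not care) whether they are files, terminals or pipes.

```c
#define _XOPEN_SOURCE 700
#include <errno.h>
#include <sys/types.h>
#include <unistd.h>

/* Copy everything from descriptor `in` to descriptor `out` using only
 * read() and write(). No open() or close() here: whoever started us
 * decided what the descriptors are. Returns bytes copied, or -1. */
long copy_fd(int in, int out)
{
    char buf[4096];
    long total = 0;
    for (;;) {
        ssize_t n = read(in, buf, sizeof buf);
        if (n == 0)
            return total;                  /* end of input */
        if (n < 0) {
            if (errno == EINTR)
                continue;                  /* interrupted by a signal: retry */
            return -1;
        }
        ssize_t done = 0;
        while (done < n) {                 /* write() may write only part */
            ssize_t w = write(out, buf + done, (size_t)(n - done));
            if (w < 0) {
                if (errno == EINTR)
                    continue;
                return -1;
            }
            done += w;
        }
        total += n;
    }
}
```

The program's entry point would then be little more than `return copy_fd(STDIN_FILENO, STDOUT_FILENO) < 0;`, with the shell deciding what descriptors 0 and 1 refer to.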

As well as open, the other ways of getting file descriptors are socket, accept, pipe, dup, and a very Heath Robinson mechanism for sending file descriptors over UNIX-domain sockets: https://stackoverflow.com/questions/28003921/sending-file-descriptor-by-linux-socket
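The Heath Robinson mechanism mentioned above can be sketched as follows, assuming Linux or a BSD with UNIX-domain sockets. The helper names `send_fd` and `recv_fd` are made up; the sendmsg()/recvmsg() calls with SCM_RIGHTS ancillary data are the usual way to do it. The receiver gets a new descriptor number that refers to the same open file as the sender's.

```c
#define _XOPEN_SOURCE 700
#include <string.h>
#include <sys/socket.h>
#include <sys/uio.h>

/* Control-message buffer big enough for one int, aligned for cmsghdr. */
union fd_ctrl {
    struct cmsghdr align;
    char buf[CMSG_SPACE(sizeof(int))];
};

/* Send descriptor `fd` across the UNIX-domain socket `sock` as SCM_RIGHTS
 * ancillary data. One ordinary byte goes with it, since ancillary data
 * must accompany some regular payload. Returns 0 on success, -1 on error. */
int send_fd(int sock, int fd)
{
    char byte = 'F';
    struct iovec iov = { .iov_base = &byte, .iov_len = 1 };
    union fd_ctrl ctrl;
    memset(&ctrl, 0, sizeof ctrl);

    struct msghdr msg;
    memset(&msg, 0, sizeof msg);
    msg.msg_iov = &iov;
    msg.msg_iovlen = 1;
    msg.msg_control = ctrl.buf;
    msg.msg_controllen = sizeof ctrl.buf;

    struct cmsghdr *c = CMSG_FIRSTHDR(&msg);
    c->cmsg_level = SOL_SOCKET;
    c->cmsg_type = SCM_RIGHTS;
    c->cmsg_len = CMSG_LEN(sizeof(int));
    memcpy(CMSG_DATA(c), &fd, sizeof(int));

    return sendmsg(sock, &msg, 0) == 1 ? 0 : -1;
}

/* Receive a descriptor sent by send_fd(). The kernel installs it under a
 * NEW number in the receiving process; it refers to the same open file.
 * Returns that descriptor, or -1 on error. */
int recv_fd(int sock)
{
    char byte;
    struct iovec iov = { .iov_base = &byte, .iov_len = 1 };
    union fd_ctrl ctrl;

    struct msghdr msg;
    memset(&msg, 0, sizeof msg);
    msg.msg_iov = &iov;
    msg.msg_iovlen = 1;
    msg.msg_control = ctrl.buf;
    msg.msg_controllen = sizeof ctrl.buf;

    if (recvmsg(sock, &msg, 0) != 1)
        return -1;

    struct cmsghdr *c = CMSG_FIRSTHDR(&msg);
    if (c == NULL || c->cmsg_level != SOL_SOCKET || c->cmsg_type != SCM_RIGHTS)
        return -1;

    int fd;
    memcpy(&fd, CMSG_DATA(c), sizeof(int));
    return fd;
}
```

Normally the two ends of the socket belong to different processes (for example after fork()), which is the point of the exercise; within a single process it still works, which is handy for testing.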

Edit: some lecture notes describing the layers of indirection and how this lets O_APPEND work sensibly. Note that keeping the inode data in memory means the system won't have to fetch it again for the next write operation.
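The layers of indirection can be observed directly. In this sketch (assuming POSIX; `interleaved_size` is a made-up name), each open() call creates its own open file description with its own offset. Without O_APPEND, the second description starts writing at offset 0 and overwrites the first byte; with O_APPEND, the kernel moves to the current end of file before every write, so nothing is lost.

```c
#define _XOPEN_SOURCE 700
#include <fcntl.h>
#include <sys/stat.h>
#include <sys/types.h>
#include <unistd.h>

/* Hypothetical demo: open the same file twice (two independent open file
 * descriptions, each with its own offset) and interleave the writes
 * "A", "B", "C". Returns the resulting file size, or -1 on error. */
off_t interleaved_size(const char *path, int use_append)
{
    int extra = use_append ? O_APPEND : 0;
    int a = open(path, O_WRONLY | O_CREAT | O_TRUNC | extra, 0644);
    if (a < 0)
        return -1;
    int b = open(path, O_WRONLY | extra);
    if (b < 0) {
        close(a);
        return -1;
    }

    int ok = write(a, "A", 1) == 1   /* a's offset goes 0 -> 1 */
          && write(b, "B", 1) == 1   /* b starts at 0: overwrites "A" unless O_APPEND */
          && write(a, "C", 1) == 1;  /* a writes at its own offset (or at EOF) */
    close(a);
    close(b);

    struct stat st;
    if (!ok || stat(path, &st) != 0)
        return -1;
    return st.st_size;   /* "ABC" (3) with O_APPEND, "BC" (2) without */
}
```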

Solution 4

The answer is no, because open() and close() create and destroy a handle, respectively. There are times (well, all of the time, really) when you may want to guarantee that you are the only caller with a particular access level, because another caller unexpectedly writing to a file that you are parsing could, for instance, leave an application in an unknown state or lead to a livelock or deadlock (e.g. the Dining Philosophers problem).

Even without that consideration, there are performance implications to consider; close() allows the filesystem to (if it is appropriate or if you called for it) flush the buffer you were occupying, an expensive operation. Several consecutive edits to an in-memory stream are much more efficient than several essentially unrelated read-modify-write cycles to a filesystem that, for all you know, exists half a world away, scattered over a datacenter's worth of high-latency bulk storage. Even with local storage, memory is typically many orders of magnitude faster than bulk storage.
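As a rough sketch of batching edits in memory (assuming POSIX; `save_lines` is a hypothetical helper), the edits below are assembled in a buffer and handed to the kernel with one write(). On POSIX systems a successful close() does not by itself guarantee that the data has reached storage; a program that needs that guarantee calls fsync() before closing.

```c
#define _XOPEN_SOURCE 700
#include <fcntl.h>
#include <string.h>
#include <sys/types.h>
#include <unistd.h>

/* Hypothetical helper: build the file contents in memory (one line per
 * string), pass them to the kernel with a single write(), request a flush
 * to storage with fsync(), then close(). Returns 0 on success, -1 on error. */
int save_lines(const char *path, const char *const lines[], int n)
{
    char buf[1024];
    size_t len = 0;
    for (int i = 0; i < n; i++) {            /* cheap in-memory "edits" */
        size_t l = strlen(lines[i]);
        if (len + l + 1 > sizeof buf)
            return -1;
        memcpy(buf + len, lines[i], l);
        len += l;
        buf[len++] = '\n';
    }

    int fd = open(path, O_WRONLY | O_CREAT | O_TRUNC, 0644);
    if (fd < 0)
        return -1;
    int ok = write(fd, buf, len) == (ssize_t)len  /* one system call for all edits */
          && fsync(fd) == 0;                      /* explicit flush to storage */
    if (close(fd) != 0)                           /* close() can report errors too */
        ok = 0;
    return ok ? 0 : -1;
}
```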

Solution 5

Open() offers a way to lock files while they are in use. If files were automatically opened, read/written and then closed again by the OS there would be nothing to stop other applications changing those files between operations.

While this can be manageable (many systems support non-exclusive file access), for simplicity most applications assume that files they have open don't change.
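A sketch of taking such a lock, assuming Linux or a BSD, where flock() is available (it is not part of POSIX, and `open_locked` is a made-up name). The lock hangs off the open file, so it needs the descriptor that open() returns and is released by close(). On Unix such locks are normally advisory: they only stop other programs that also ask for the lock.

```c
#define _XOPEN_SOURCE 700
#include <fcntl.h>
#include <sys/file.h>
#include <unistd.h>

/* Hypothetical helper: open `path` and try to take an exclusive advisory
 * lock on it without blocking. Returns the locked descriptor (keep it
 * open to keep the lock; close() releases it), or -1 if the file can't
 * be opened or a conflicting lock is already held. */
int open_locked(const char *path)
{
    int fd = open(path, O_RDWR | O_CREAT, 0644);
    if (fd < 0)
        return -1;
    if (flock(fd, LOCK_EX | LOCK_NB) != 0) {   /* fails if already locked */
        close(fd);
        return -1;
    }
    return fd;
}
```

Calling `open_locked()` a second time on the same path, even from the same process, should fail while the first descriptor is still open, because flock() locks belong to the open file description rather than to the process.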

user

Updated on September 18, 2022

Comments

user over 1 year: Why do `open()` and `close()` exist in the Unix filesystem design? Couldn't the OS just detect the first time `read()` or `write()` was called and do whatever `open()` would normally do?

Admin about 8 years: It's worth noting that this model is not part of the filesystem but rather of the Unix API. The filesystem is merely concerned with where on disk the bytes go and where to put the filename, etc. It would be perfectly possible to have the alternative model you describe on top of a Unix filesystem like UFS or ext4; it would be up to the kernel to translate those calls into the proper updates for the filesystem (just as it is now).

Admin about 8 years: As phrased, I think this is more about why `open()` exists. "Couldn't the OS just detect the first time read() or write() and do whatever open() would normally do?" Is there a corresponding suggestion for when closing would happen?

Admin about 8 years: There are programming models / APIs which implement `readfile` and `writefile` functions. The open - modify - close concept is not limited to Unix/Linux and file I/O.

Admin about 8 years: How would you tell `read()` or `write()` which file to access? Presumably by passing the path. What if the file's path changes while you're accessing it (between two `read()` or `write()` calls)?
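One answer to the rename question, as a POSIX sketch (`read_after_rename` is a hypothetical name): a descriptor refers to the file it was opened on, not to its name, so renaming the file and putting a different file under the old name does not change what the descriptor reads.

```c
#define _XOPEN_SOURCE 700
#include <fcntl.h>
#include <stdio.h>      /* rename() */
#include <sys/types.h>
#include <unistd.h>

/* Hypothetical demo: open `path`, then move the file to `newpath` and put
 * a different file (containing "X") under the old name. The original
 * descriptor still reads the original file. Returns the first byte read
 * through that descriptor, or -1 on error. */
int read_after_rename(const char *path, const char *newpath)
{
    int fd = open(path, O_RDONLY);
    if (fd < 0)
        return -1;

    int other = -1;
    if (rename(path, newpath) == 0)
        other = open(path, O_WRONLY | O_CREAT | O_TRUNC, 0644);
    if (other < 0 || write(other, "X", 1) != 1) {
        if (other >= 0)
            close(other);
        close(fd);
        return -1;
    }
    close(other);

    unsigned char c;
    ssize_t n = read(fd, &c, 1);   /* still the original file's data */
    close(fd);
    return n == 1 ? c : -1;
}
```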

Admin about 8 years: Also, you usually don't do access control on `read()` and `write()`, just on `open()`.

Admin about 8 years: @michael it's not just about when to write through, though; there's also the question of when to release the resource, release locks, etc. It's often good not to hold things open longer than need be, so that other things can use them, and to minimize the amount of time that a program crash can leave something in a weird state.

Admin about 8 years: @JoshuaTaylor - Presumably the object would be closed when it goes out of scope (possibly through the same kind of reference counters that garbage collectors use to know when they can deallocate an object). Presumably a language that implements this would have a mechanism to force a close earlier if the programmer desired, which the programmer may need to use to prevent resource starvation if it implicitly opens a lot of files without them going out of scope. I'm doubtful that such a mechanism would solve more problems than it creates, but I can see why it would be convenient in many cases.

Admin about 8 years: @Johnny in some languages, sure; that's similar to Java's try-with-resources block, or C++'s automatic cleanup. C doesn't have anything like that, though. And the resources that need to be cleaned up after open are things the OS has to take care of: releasing file descriptors, etc.

Admin about 8 years: @Johnny: You're perhaps forgetting just how limited the hardware was in those days. The PDP-7 on which Unix was first implemented had (per Google) a maximum 64K of RAM and a 0.333 MHz clock - rather less than a simple microcontroller these days. Doing such garbage collection, or using system code to monitor file access, would have brought the system to its knees.

Admin about 8 years: @jamesqf We did send people to the Moon with fairly similarly spec'd hardware. Granted, the AGC wasn't the only computer used for the missions, let alone the entire Apollo program...

Admin about 8 years: Yes, and the AGC didn't have a filesystem.

Admin about 8 years: @JoshuaTaylor What resources? And presumably locks should be released when they're released, or when the process that requested them terminates.

Admin about 8 years: @Michael Kjörling: Sure, but sending people to the moon is "just physics", and so a lot simpler to implement. (And the code was - had to be! - pretty well optimized, unlike today's bloatware.) Consider your LM being a few hundred feet from the lunar surface when your computer system decides it's time for garbage collection.

Admin about 8 years: @el.pescado: Indeed, with the /proc and /sys pseudo-filesystems, just about everything on a Linux system can be treated as a file.

Admin about 8 years: @jamesqf Well, not exactly a few hundred feet above the surface, but something very similar actually did happen on Apollo 11. The Eagle LM guidance computer restarted multiple times during late descent in response to processor overload conditions.

dave_thompson_085 about 8 years: Also `creat`, and `listen` doesn't create an fd, but when (and if) a request comes in while listening, `accept` creates and returns an fd for the new (connected) socket.

Peter Cordes about 8 years: The cost of path lookup and permission checking etc. etc. is very significant. If you wanted to make a system without `open`/`close`, you'd be sure to implement stuff like `/dev/stdout` to allow piping.

Bruno about 8 years: I think another aspect to this is that, as long as you keep the file open, you keep a handle to the same file across multiple reads. Otherwise, you could have cases where another process unlinks and re-creates a file with the same name, and reading a file in chunks could effectively be completely incoherent. (Some of this may depend on the filesystem too.)

Peter - Reinstate Monica about 8 years: This is THE correct answer. The famous (small) set of operations on file descriptors is a unifying API for all kinds of resources which produce or consume data. This concept is HUGELY successful. A string could conceivably have a syntax defining the resource type together with the actual location (URL anybody?), but to copy strings around which occupy several percent of the available RAM (what was it on the PDP 7? 16 kB?) seems excessive.

Joshua about 8 years: I designed one without close(); you pass the inode number and offset to read() and write(). I can't do without open() very easily because that's where name resolution lives.

supercat about 8 years: Callers would also in most cases have to specify the desired offset within a file. There are some situations (e.g. a UDP protocol that allows access to data) where having each request independently identify a file and offset may be helpful since it eliminates the need for a server to maintain state, but in general it's more convenient to have the server keep track of file position. Further, as noted elsewhere, code which is going to write files often needs to lock them beforehand and unlock them afterward; combining those operations with open/close is very convenient.

reinierpost about 8 years: The "file" may not have a name or permissions in the first place; `read` and `write` aren't restricted to files that live on a file system, and that is a fundamental design decision in Unix, as pjc50 explains.

R.. GitHub STOP HELPING ICE about 8 years: @Joshua: Such a system has fundamentally different semantics because unix file descriptors do not refer to files (inodes) but to open file descriptions, of which there may be many for a given file (inode).

Random832 about 8 years: Also where in the file to read/write it: the beginning, the end, or an arbitrary position (typically immediately after the end of the last read/write). The kernel keeps track of this for you, with a mode that directs all writes to the end of the file; otherwise files are opened with the position at the beginning, it advances with each read/write, and it can be moved with `lseek`.

Marius about 8 years: Perhaps it would be, if the low-level calls and the shell were developed at the same time. But `pipe` was introduced a few years after development on Unix started.

jamesqf about 8 years: @Thomas Dickey: Which merely shows how good the original design was, since it allowed the simple extension to pipes &c :-)

Giacomo Catenazzi about 8 years: Not to forget that open() and close() also keep the position in the file (for the next read or write). So in the end, read() and write() would need a struct to hold all the parameters, or an argument for each parameter. Creating a structure is equivalent (on the programmer's side) to an open, so if the OS also knows about open, we only gain advantages.

Marius about 8 years: But following that line of argument, this answer provides nothing new.

Patrick Collins about 8 years: And conversely, there are file descriptors that come from `open(2)` but can't be accessed with `read(2)` or `write(2)`, like file handles on a directory, which require `readdir`.
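A sketch of that case, assuming POSIX.1-2008 (`count_entries` is a made-up name): the descriptor comes from an ordinary open() with O_DIRECTORY, but its contents are read by wrapping it with fdopendir() and calling readdir(), not read().

```c
#define _XOPEN_SOURCE 700
#include <dirent.h>
#include <fcntl.h>
#include <string.h>
#include <unistd.h>

/* Hypothetical helper: count the entries of a directory, starting from a
 * descriptor that open() returned. Directory contents are not fetched
 * with read() (Linux, for one, refuses it with EISDIR); instead the
 * descriptor is wrapped with fdopendir() and walked with readdir().
 * "." and ".." are not counted. Returns -1 on error, including when the
 * path is not a directory. */
int count_entries(const char *dirpath)
{
    int fd = open(dirpath, O_RDONLY | O_DIRECTORY);
    if (fd < 0)
        return -1;

    DIR *d = fdopendir(fd);           /* on success, d owns fd */
    if (d == NULL) {
        close(fd);
        return -1;
    }

    int n = 0;
    struct dirent *e;
    while ((e = readdir(d)) != NULL)
        if (strcmp(e->d_name, ".") != 0 && strcmp(e->d_name, "..") != 0)
            n++;

    closedir(d);                      /* also closes fd */
    return n;
}
```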

vonbrand about 8 years: @Joshua, you just renamed `open()` to `get_inode()` and made the whole system more rigid (impossible to read/write the same file at several positions simultaneously).

vonbrand about 8 years: @ThomasDickey, it might not provide anything new, but it has the virtue of explaining it most clearly.

vonbrand about 8 years: @dave_thompson_085, note that networking came much, much later than even pipes to Unix, and this was there from the very beginning.

Joshua about 8 years: @vonbrand: Yes, you can read/write at several positions simultaneously. The absolute read/write address is passed to the read() and write() system calls. The system is very different from what you expect.

vonbrand about 8 years: @Joshua, then the "inode" being passed around is the file structure (or a handle to it), just like `open(2)` returns in Unix...