Optimise PostgreSQL for fast testing

Solution 1

First, always use the latest version of PostgreSQL. Performance improvements are always coming, so you're probably wasting your time if you're tuning an old version. For example, PostgreSQL 9.2 significantly improves the speed of TRUNCATE and of course adds index-only scans. Even minor releases should always be followed; see the version policy.

Don'ts

Do NOT put a tablespace on a RAMdisk or other non-durable storage.

If you lose a tablespace the whole database may be damaged and hard to use without significant work. There's very little advantage to this compared to just using UNLOGGED tables and having lots of RAM for cache anyway.

If you truly want a ramdisk based system, initdb a whole new cluster on the ramdisk by initdbing a new PostgreSQL instance on the ramdisk, so you have a completely disposable PostgreSQL instance.

PostgreSQL server configuration

When testing, you can configure your server for non-durable but faster operation.

This is one of the only acceptable uses for the fsync=off setting in PostgreSQL. This setting pretty much tells PostgreSQL not to bother with ordered writes or any of that other nasty data-integrity-protection and crash-safety stuff, giving it permission to totally trash your data if you lose power or have an OS crash.

Needless to say, you should never enable fsync=off in production unless you're using Pg as a temporary database for data you can re-generate from elsewhere. If and only if you're doing to turn fsync off can also turn full_page_writes off, as it no longer does any good then. Beware that fsync=off and full_page_writes apply at the cluster level, so they affect all databases in your PostgreSQL instance.

For production use you can possibly use synchronous_commit=off and set a commit_delay, as you'll get many of the same benefits as fsync=off without the giant data corruption risk. You do have a small window of loss of recent data if you enable async commit - but that's it.

If you have the option of slightly altering the DDL, you can also use UNLOGGED tables in Pg 9.1+ to completely avoid WAL logging and gain a real speed boost at the cost of the tables getting erased if the server crashes. There is no configuration option to make all tables unlogged, it must be set during CREATE TABLE. In addition to being good for testing this is handy if you have tables full of generated or unimportant data in a database that otherwise contains stuff you need to be safe.

Check your logs and see if you're getting warnings about too many checkpoints. If you are, you should increase your checkpoint_segments. You may also want to tune your checkpoint_completion_target to smooth writes out.

Tune shared_buffers to fit your workload. This is OS-dependent, depends on what else is going on with your machine, and requires some trial and error. The defaults are extremely conservative. You may need to increase the OS's maximum shared memory limit if you increase shared_buffers on PostgreSQL 9.2 and below; 9.3 and above changed how they use shared memory to avoid that.

If you're using a just a couple of connections that do lots of work, increase work_mem to give them more RAM to play with for sorts etc. Beware that too high a work_mem setting can cause out-of-memory problems because it's per-sort not per-connection so one query can have many nested sorts. You only really have to increase work_mem if you can see sorts spilling to disk in EXPLAIN or logged with the log_temp_files setting (recommended), but a higher value may also let Pg pick smarter plans.

As said by another poster here it's wise to put the xlog and the main tables/indexes on separate HDDs if possible. Separate partitions is pretty pointless, you really want separate drives. This separation has much less benefit if you're running with fsync=off and almost none if you're using UNLOGGED tables.

Finally, tune your queries. Make sure that your random_page_cost and seq_page_cost reflect your system's performance, ensure your effective_cache_size is correct, etc. Use EXPLAIN (BUFFERS, ANALYZE) to examine individual query plans, and turn the auto_explain module on to report all slow queries. You can often improve query performance dramatically just by creating an appropriate index or tweaking the cost parameters.

AFAIK there's no way to set an entire database or cluster as UNLOGGED. It'd be interesting to be able to do so. Consider asking on the PostgreSQL mailing list.

Host OS tuning

There's some tuning you can do at the operating system level, too. The main thing you might want to do is convince the operating system not to flush writes to disk aggressively, since you really don't care when/if they make it to disk.

In Linux you can control this with the virtual memory subsystem's dirty_* settings, like dirty_writeback_centisecs.

The only issue with tuning writeback settings to be too slack is that a flush by some other program may cause all PostgreSQL's accumulated buffers to be flushed too, causing big stalls while everything blocks on writes. You may be able to alleviate this by running PostgreSQL on a different file system, but some flushes may be device-level or whole-host-level not filesystem-level, so you can't rely on that.

This tuning really requires playing around with the settings to see what works best for your workload.

On newer kernels, you may wish to ensure that vm.zone_reclaim_mode is set to zero, as it can cause severe performance issues with NUMA systems (most systems these days) due to interactions with how PostgreSQL manages shared_buffers.

Query and workload tuning

These are things that DO require code changes; they may not suit you. Some are things you might be able to apply.

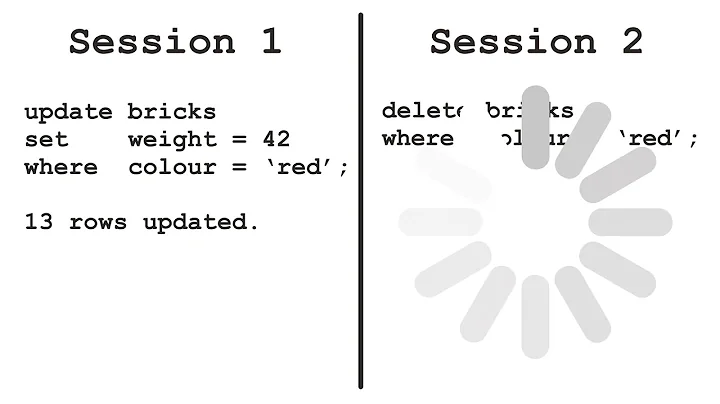

If you're not batching work into larger transactions, start. Lots of small transactions are expensive, so you should batch stuff whenever it's possible and practical to do so. If you're using async commit this is less important, but still highly recommended.

Whenever possible use temporary tables. They don't generate WAL traffic, so they're lots faster for inserts and updates. Sometimes it's worth slurping a bunch of data into a temp table, manipulating it however you need to, then doing an INSERT INTO ... SELECT ... to copy it to the final table. Note that temporary tables are per-session; if your session ends or you lose your connection then the temp table goes away, and no other connection can see the contents of a session's temp table(s).

If you're using PostgreSQL 9.1 or newer you can use UNLOGGED tables for data you can afford to lose, like session state. These are visible across different sessions and preserved between connections. They get truncated if the server shuts down uncleanly so they can't be used for anything you can't re-create, but they're great for caches, materialized views, state tables, etc.

In general, don't DELETE FROM blah;. Use TRUNCATE TABLE blah; instead; it's a lot quicker when you're dumping all rows in a table. Truncate many tables in one TRUNCATE call if you can. There's a caveat if you're doing lots of TRUNCATES of small tables over and over again, though; see: Postgresql Truncation speed

If you don't have indexes on foreign keys, DELETEs involving the primary keys referenced by those foreign keys will be horribly slow. Make sure to create such indexes if you ever expect to DELETE from the referenced table(s). Indexes are not required for TRUNCATE.

Don't create indexes you don't need. Each index has a maintenance cost. Try to use a minimal set of indexes and let bitmap index scans combine them rather than maintaining too many huge, expensive multi-column indexes. Where indexes are required, try to populate the table first, then create indexes at the end.

Hardware

Having enough RAM to hold the entire database is a huge win if you can manage it.

If you don't have enough RAM, the faster storage you can get the better. Even a cheap SSD makes a massive difference over spinning rust. Don't trust cheap SSDs for production though, they're often not crashsafe and might eat your data.

Learning

Greg Smith's book, PostgreSQL 9.0 High Performance remains relevant despite referring to a somewhat older version. It should be a useful reference.

Join the PostgreSQL general mailing list and follow it.

Reading:

Solution 2

Use different disk layout:

- different disk for $PGDATA

- different disk for $PGDATA/pg_xlog

- different disk for tem files (per database $PGDATA/base//pgsql_tmp) (see note about work_mem)

postgresql.conf tweaks:

- shared_memory: 30% of available RAM but not more than 6 to 8GB. It seems to be better to have less shared memory (2GB - 4GB) for write intensive workloads

- work_mem: mostly for select queries with sorts/aggregations. This is per connection setting and query can allocate that value multiple times. If data can't fit then disk is used (pgsql_tmp). Check "explain analyze" to see how much memory do you need

- fsync and synchronous_commit: Default values are safe but If you can tolerate data lost then you can turn then off

- random_page_cost: if you have SSD or fast RAID array you can lower this to 2.0 (RAID) or even lower (1.1) for SSD

- checkpoint_segments: you can go higher 32 or 64 and change checkpoint_completion_target to 0.9. Lower value allows faster after-crash recovery

Related videos on Youtube

Dmytrii Nagirniak

Passionate Software Engineer dreaming about perfect solutions and tools.

Updated on June 04, 2020Comments

-

Dmytrii Nagirniak almost 4 years

I am switching to PostgreSQL from SQLite for a typical Rails application.

The problem is that running specs became slow with PG.

On SQLite it took ~34 seconds, on PG it's ~76 seconds which is more than 2x slower.So now I want to apply some techniques to bring the performance of the specs on par with SQLite with no code modifications (ideally just by setting the connection options, which is probably not possible).

Couple of obvious things from top of my head are:

- RAM Disk (good setup with RSpec on OSX would be good to see)

- Unlogged tables (can it be applied on the whole database so I don't have change all the scripts?)

As you may have understood I don't care about reliability and the rest (the DB is just a throwaway thingy here).

I need to get the most out of the PG and make it as fast as it can possibly be.Best answer would ideally describe the tricks for doing just that, setup and the drawbacks of those tricks.

UPDATE:

fsync = off+full_page_writes = offonly decreased time to ~65 seconds (~-16 secs). Good start, but far from the target of 34.UPDATE 2: I tried to use RAM disk but the performance gain was within an error margin. So doesn't seem to be worth it.

UPDATE 3:* I found the biggest bottleneck and now my specs run as fast as the SQLite ones.

The issue was the database cleanup that did the truncation. Apparently SQLite is way too fast there.

To "fix" it I open a transaction before each test and roll it back at the end.

Some numbers for ~700 tests.

- Truncation: SQLite - 34s, PG - 76s.

- Transaction: SQLite - 17s, PG - 18s.

2x speed increase for SQLite. 4x speed increase for PG.

-

Craig Ringer about 12 yearsI really doubt you'll get it to go as fast as SQLite. SQLite with a single user is insanely fast. SQLite's design is very fast with low user counts and scales poorly; Pg's design scales well but isn't as fast for simple bulk work with just one user.

-

Dmytrii Nagirniak about 12 yearsI realise that, but there's a particular case that I hope to optimise PG for (test runs) so it is as fast as it can possibly be. I don't mind it to be slightly slower there, but 2.2x is a bit too slow. See what I mean?

-

tscho about 12 years+1 I'd be very interested in updates on the RAM disk approach if you've got any results concerning that.

-

Dmytrii Nagirniak about 12 years@tscho I'll definitely post it here. But need some time since I'm working on other stuff and "researching" the PG stuff in the "background".

-

a_horse_with_no_name about 12 yearsis inserting the data your problem or querying? It's not clear from your question.

a_horse_with_no_name about 12 yearsis inserting the data your problem or querying? It's not clear from your question. -

Dmytrii Nagirniak about 12 yearsIt's both. Some specs create records then delete those. Most insert, query, delete.

-

Daniel Vérité about 12 yearsIf having the tablespace in a RAM disk provides no gain, it probably means that it's the WAL files (pg_xlog directory) that should be moved to a ramdisk. Also turn off autovacuum if not done already.

-

Joe Van Dyk almost 12 yearsi wonder why truncation is so slow on postgresql.

-

Omar over 11 yearsDmytrii, how did you go about setting up the transactions exactly? I thought that's what this line was for in spec_helper:

config.use_transactional_fixtures = true. I'm having the same issue as you with postgres being more than twice as slow as sqlite and bit confused on how to fix it. -

Dmytrii Nagirniak over 11 years@Omar, yes the transactional features are enabled by default in Rails. But I was using DatabaseCleaner and had to set it back.

-

B Seven over 11 yearsAwesome. I had the exact same experience when switching to Postgres from SQLite. Relative speed was 1.9x slower without spork, 2.1 slower with spork. I'll try your fix.

-

malkia about 11 yearsI'm in similar situation. One thing that helped me quite a lot is using enums instead of text for cases where I can - we have about 40 tables for each game asset type, and the material type has 700 columns, a lot of them are texture quality settings (miplevels, filtering, etc). I've turned them all into pg enums, and this reduced the size of the database significantly (4 bytes for enum oid, rather than varying char there). Overall I'm happy :) but sqlite is a power in itself too - two very awesome projects

-

yankee almost 7 yearsIn my case

yankee almost 7 yearsIn my casefsync = offcut integration test time in less than half (including compiling).full_page_writes = offhad no measurable additional effect. A ramdisk (I just used tmpfs) brought varying results (sometimes worse than without for some reason). The ramdisk also get's the full speed gain without settingfsync = off.

-

tscho about 12 yearsI can also recommend PostgreSQL 9.0 High Performance by @GregSmith, it's really a great read. The book covers every aspect of performance tuning from disk layout to query tuning and gives you a very good understanding of the PG internals.

-

Dmytrii Nagirniak about 12 yearsThanks guys, will definitely put it on my reading queue. I suppose the fact the book is 2 years old isn't an issue since probably not much has changes in PG itself. Right?

-

Daniel Lyons about 12 yearsI love that book too. No, it's not an issue. The book covers up through 9.0 and PG is only up to 9.1.

-

intgr about 12 yearsNote that if you're already running with

fsync=off, putting pg_xlog on a separate disk doesn't improve much anymore. -

Daechir about 12 yearsI didn't release an update to the book for PostgreSQL 9.1, the only release since its publication, because there weren't enough performance related changes in 9.1 to warrant it.

-

Abdul Saqib over 11 yearsWhile I totally agree the newer version always have the best, they may also contain the worst(some new issue\bug). Is it really sane to try and stay lock step with the Postgres release cycle? For sure not in production. I'm honestly just curious of your input on this not trying to poke a stick at the lion.

Abdul Saqib over 11 yearsWhile I totally agree the newer version always have the best, they may also contain the worst(some new issue\bug). Is it really sane to try and stay lock step with the Postgres release cycle? For sure not in production. I'm honestly just curious of your input on this not trying to poke a stick at the lion. -

Craig Ringer over 11 years@JustBob No, it isn't, but I find it wise to stay within a major version or two. I'm really aiming at those people still on 8.3 or older, which is just absurd. 8.4 is marginal, I'd want to be on 9.1 at the moment and using 9.2 in my testing in preparation for rolling it out in the next six months or so. You save so much time using newer versions because issues you're facing are already solved in the new version; witness the vismap and fsm, autovac improvements, etc etc.

-

Craig Ringer over 11 years@JustBob Also, after a few months after release the issues are generally largely sorted out in the new major release. There are a few signs there might be a couple of nasty planner regressions in 9.2, but the trouble is that if nobody tests it seriously with their apps then issues aren't found. I won't jump straight to a new major in production, but I'll start testing it very promptly.

-

Gunnlaugur Briem over 10 yearsGreat writeup. Just as a tiny update, “You may need to increase the OS's maximum shared memory limit if you increase shared_buffers” is no longer true (for most users) under PostgreSQL 9.3: postgresql.org/docs/9.3/static/release-9-3.html#AEN114343

-

Asclepius over 9 yearsThe value of 1.1 for SSD seems very unqualified. I acknowledge that it's what some professionals have blindly recommended. Even SSDs are significantly faster for sequential reads than random reads.

Asclepius over 9 yearsThe value of 1.1 for SSD seems very unqualified. I acknowledge that it's what some professionals have blindly recommended. Even SSDs are significantly faster for sequential reads than random reads. -

brauliobo almost 9 yearsunfortunetely

commit_delayonly allow a maximum of100000(100ms), so it is not useful... -

Craig Ringer almost 9 years@brauliobo Why isn't that useful? It's enough to group up concurrent sets of commits, and that's what it's for.

-

brauliobo almost 9 years@CraigRinger it is useful in production, but not for testing

-

Craig Ringer almost 9 years@brauliobo My tests often do many tx's at high TPS ... because I try to simulate production, including concurrency-heavy workloads. If you mean "single-connection, linear testing" then I'd agree with you.

-

brauliobo almost 9 years@CraigRinger yeah, it is automated testing on rake tasks (rails)

-

Jonathan Crosmer almost 8 yearsstackoverflow.com/questions/11419536/… DELETE may be faster than TRUNCATE for tables with few rows, which is likely to be the case in tests.

-

Jonathan Crosmer almost 8 yearsIn fact, DELETE was much faster than TRUNCATE for our case

-

maniek over 7 yearsIs fsync=off safe against unclean postgres restarts (as opposed to OS restarts)?

-

Craig Ringer over 7 years@maniek It should be, but I'd avoid relying on it for anything really important, just to be sure.

-

Sajeev over 7 yearsSee this also: tekadempiere.blogspot.ae/2014/09/…

-

John Smith almost 7 yearsCan you maybe help me with this question as well: stackoverflow.com/questions/44318816/…

-

Craig Ringer almost 7 years@A-B-B Yes, but you've also got OS buffer caching effects at work. All those params are a bit hand-wavey anyway...

-

DylanYoung over 5 years"For production use you can possibly use synchronous_commit=off and set a commit_delay" <-- commit_delay doesn't do anything when sync commit is off. Should that "and" be an "or"? ;)

-

Craig Ringer over 5 years@DylanYoung Er... yeah. That.

![Slow things down to make them go faster [#FOSDEM 2022] #postgres #postgresql](https://i.ytimg.com/vi/YvUTsl0isyc/hq720.jpg?sqp=-oaymwEcCNAFEJQDSFXyq4qpAw4IARUAAIhCGAFwAcABBg==&rs=AOn4CLAHVR8vLXD3rKW1x7IIfK2bgD7owA)