Optimizing away a "while(1);" in C++0x

Solution 1

Does someone have a good explanation of why this was necessary to allow?

Yes, Hans Boehm provides a rationale for this in N1528: Why undefined behavior for infinite loops?, although this is WG14 document the rationale applies to C++ as well and the document refers to both WG14 and WG21:

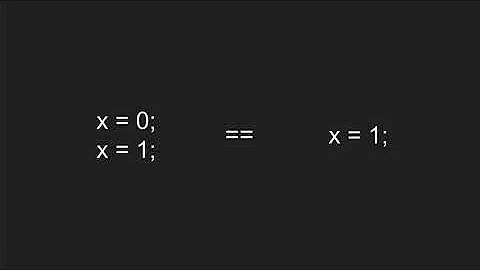

As N1509 correctly points out, the current draft essentially gives undefined behavior to infinite loops in 6.8.5p6. A major issue for doing so is that it allows code to move across a potentially non-terminating loop. For example, assume we have the following loops, where count and count2 are global variables (or have had their address taken), and p is a local variable, whose address has not been taken:

for (p = q; p != 0; p = p -> next) { ++count; } for (p = q; p != 0; p = p -> next) { ++count2; }Could these two loops be merged and replaced by the following loop?

for (p = q; p != 0; p = p -> next) { ++count; ++count2; }Without the special dispensation in 6.8.5p6 for infinite loops, this would be disallowed: If the first loop doesn't terminate because q points to a circular list, the original never writes to count2. Thus it could be run in parallel with another thread that accesses or updates count2. This is no longer safe with the transformed version which does access count2 in spite of the infinite loop. Thus the transformation potentially introduces a data race.

In cases like this, it is very unlikely that a compiler would be able to prove loop termination; it would have to understand that q points to an acyclic list, which I believe is beyond the ability of most mainstream compilers, and often impossible without whole program information.

The restrictions imposed by non-terminating loops are a restriction on the optimization of terminating loops for which the compiler cannot prove termination, as well as on the optimization of actually non-terminating loops. The former are much more common than the latter, and often more interesting to optimize.

There are clearly also for-loops with an integer loop variable in which it would be difficult for a compiler to prove termination, and it would thus be difficult for the compiler to restructure loops without 6.8.5p6. Even something like

for (i = 1; i != 15; i += 2)or

for (i = 1; i <= 10; i += j)seems nontrivial to handle. (In the former case, some basic number theory is required to prove termination, in the latter case, we need to know something about the possible values of j to do so. Wrap-around for unsigned integers may complicate some of this reasoning further.)

This issue seems to apply to almost all loop restructuring transformations, including compiler parallelization and cache-optimization transformations, both of which are likely to gain in importance, and are already often important for numerical code. This appears likely to turn into a substantial cost for the benefit of being able to write infinite loops in the most natural way possible, especially since most of us rarely write intentionally infinite loops.

The one major difference with C is that C11 provides an exception for controlling expressions that are constant expressions which differs from C++ and makes your specific example well-defined in C11.

Solution 2

To me, the relevant justification is:

This is intended to allow compiler transfor- mations, such as removal of empty loops, even when termination cannot be proven.

Presumably, this is because proving termination mechanically is difficult, and the inability to prove termination hampers compilers which could otherwise make useful transformations, such as moving nondependent operations from before the loop to after or vice versa, performing post-loop operations in one thread while the loop executes in another, and so on. Without these transformations, a loop might block all other threads while they wait for the one thread to finish said loop. (I use "thread" loosely to mean any form of parallel processing, including separate VLIW instruction streams.)

EDIT: Dumb example:

while (complicated_condition()) {

x = complicated_but_externally_invisible_operation(x);

}

complex_io_operation();

cout << "Results:" << endl;

cout << x << endl;

Here, it would be faster for one thread to do the complex_io_operation while the other is doing all the complex calculations in the loop. But without the clause you have quoted, the compiler has to prove two things before it can make the optimisation: 1) that complex_io_operation() doesn't depend on the results of the loop, and 2) that the loop will terminate. Proving 1) is pretty easy, proving 2) is the halting problem. With the clause, it may assume the loop terminates and get a parallelisation win.

I also imagine that the designers considered that the cases where infinite loops occur in production code are very rare and are usually things like event-driven loops which access I/O in some manner. As a result, they have pessimised the rare case (infinite loops) in favour of optimising the more common case (noninfinite, but difficult to mechanically prove noninfinite, loops).

It does, however, mean that infinite loops used in learning examples will suffer as a result, and will raise gotchas in beginner code. I can't say this is entirely a good thing.

EDIT: with respect to the insightful article you now link, I would say that "the compiler may assume X about the program" is logically equivalent to "if the program doesn't satisfy X, the behaviour is undefined". We can show this as follows: suppose there exists a program which does not satisfy property X. Where would the behaviour of this program be defined? The Standard only defines behaviour assuming property X is true. Although the Standard does not explicitly declare the behaviour undefined, it has declared it undefined by omission.

Consider a similar argument: "the compiler may assume a variable x is only assigned to at most once between sequence points" is equivalent to "assigning to x more than once between sequence points is undefined".

Solution 3

I think the correct interpretation is the one from your edit: empty infinite loops are undefined behavior.

I wouldn't say it's particularly intuitive behavior, but this interpretation makes more sense than the alternative one, that the compiler is arbitrarily allowed to ignore infinite loops without invoking UB.

If infinite loops are UB, it just means that non-terminating programs aren't considered meaningful: according to C++0x, they have no semantics.

That does make a certain amount of sense too. They are a special case, where a number of side effects just no longer occur (for example, nothing is ever returned from main), and a number of compiler optimizations are hampered by having to preserve infinite loops. For example, moving computations across the loop is perfectly valid if the loop has no side effects, because eventually, the computation will be performed in any case.

But if the loop never terminates, we can't safely rearrange code across it, because we might just be changing which operations actually get executed before the program hangs. Unless we treat a hanging program as UB, that is.

Solution 4

The relevant issue is that the compiler is allowed to reorder code whose side effects do not conflict. The surprising order of execution could occur even if the compiler produced non-terminating machine code for the infinite loop.

I believe this is the right approach. The language spec defines ways to enforce order of execution. If you want an infinite loop that cannot be reordered around, write this:

volatile int dummy_side_effect;

while (1) {

dummy_side_effect = 0;

}

printf("Never prints.\n");

Solution 5

I think this is along the lines of the this type of question, which references another thread. Optimization can occasionally remove empty loops.

Related videos on Youtube

Johannes Schaub - litb

I'm a C++ programmer, interested in linux, compilers and toolchains and generally the embedded software stack. Standardese answers: How does boost::is_base_of work? Injected class name and constructor lookup weirdness What happens when op[] and op T* are both there. FAQ answers: Where to put "template" and "typename" on dependent names (now also covers C++11) Undefined behavior and sequence points Favourite answers: Plain new, new[], delete and delete[] in a nutshell. Assertion failure on T(a) but allowing T t(a) - forbids (accidental) temporaries. Explicitly instantiating a typedef to a class type Doing RAII the lazy way. C for-each over arrays. inline and the ODR in C++, and inline in C99

Updated on January 28, 2020Comments

-

Johannes Schaub - litb over 4 years

Johannes Schaub - litb over 4 yearsUpdated, see below!

I have heard and read that C++0x allows an compiler to print "Hello" for the following snippet

#include <iostream> int main() { while(1) ; std::cout << "Hello" << std::endl; }It apparently has something to do with threads and optimization capabilities. It looks to me that this can surprise many people though.

Does someone have a good explanation of why this was necessary to allow? For reference, the most recent C++0x draft says at

6.5/5A loop that, outside of the for-init-statement in the case of a for statement,

- makes no calls to library I/O functions, and

- does not access or modify volatile objects, and

- performs no synchronization operations (1.10) or atomic operations (Clause 29)

may be assumed by the implementation to terminate. [ Note: This is intended to allow compiler transfor- mations, such as removal of empty loops, even when termination cannot be proven. — end note ]

Edit:

This insightful article says about that Standards text

Unfortunately, the words "undefined behavior" are not used. However, anytime the standard says "the compiler may assume P," it is implied that a program which has the property not-P has undefined semantics.

Is that correct, and is the compiler allowed to print "Bye" for the above program?

There is an even more insightful thread here, which is about an analogous change to C, started off by the Guy done the above linked article. Among other useful facts, they present a solution that seems to also apply to C++0x (Update: This won't work anymore with n3225 - see below!)

endless: goto endless;A compiler is not allowed to optimize that away, it seems, because it's not a loop, but a jump. Another guy summarizes the proposed change in C++0x and C201X

By writing a loop, the programmer is asserting either that the loop does something with visible behavior (performs I/O, accesses volatile objects, or performs synchronization or atomic operations), or that it eventually terminates. If I violate that assumption by writing an infinite loop with no side effects, I am lying to the compiler, and my program's behavior is undefined. (If I'm lucky, the compiler might warn me about it.) The language doesn't provide (no longer provides?) a way to express an infinite loop without visible behavior.

Update on 3.1.2011 with n3225: Committee moved the text to 1.10/24 and say

The implementation may assume that any thread will eventually do one of the following:

- terminate,

- make a call to a library I/O function,

- access or modify a volatile object, or

- perform a synchronization operation or an atomic operation.

The

gototrick will not work anymore!-

Potatoswatter almost 14 yearsAuto-parallelization maybe? I wish I knew more about that… but eliminating that loop is certainly equivalent to executing it on a parallel thread that never reports back. On the other hand, a thread that does generate information for the caller would be imbued with some kind of synchronization. And a thread with proper side effects couldn't be parallelized.

Potatoswatter almost 14 yearsAuto-parallelization maybe? I wish I knew more about that… but eliminating that loop is certainly equivalent to executing it on a parallel thread that never reports back. On the other hand, a thread that does generate information for the caller would be imbued with some kind of synchronization. And a thread with proper side effects couldn't be parallelized. -

Martin York almost 14 yearsWill in the general case that loop would be a programming error.

Martin York almost 14 yearsWill in the general case that loop would be a programming error. -

Daniel Earwicker almost 14 years

Daniel Earwicker almost 14 yearswhile(1) { MyMysteriousFunction(); }must be independently compilable without knowing the definition of that mysterious function, right? So how can we determine if it makes calls to any library I/O functions? In other words: surely that first bullet could be phrased makes no calls to functions. -

Potatoswatter almost 14 years@Daniel: If it has access to the function's definition, it can prove a lot of things. There is such a thing as interprocedural optimization.

Potatoswatter almost 14 years@Daniel: If it has access to the function's definition, it can prove a lot of things. There is such a thing as interprocedural optimization. -

Philip Potter almost 14 yearsThe section you have quoted gives an explanation for why it's allowed. Did you not understand it, disagree with it, or did it not satisfy you in some other way?

-

Potatoswatter almost 14 years@Philip: loops which do absolutely nothing, yet don't have an easy termination case, are uncommon and not a major issue for optimization.

Potatoswatter almost 14 years@Philip: loops which do absolutely nothing, yet don't have an easy termination case, are uncommon and not a major issue for optimization. -

Johannes Schaub - litb almost 14 years@Philip i do not understand it. What are those compiler transformations that this optimization makes easier? And why was it only for C++0x that this is allowed? Now I'm a big threading noob, so I've no clue about that.

Johannes Schaub - litb almost 14 years@Philip i do not understand it. What are those compiler transformations that this optimization makes easier? And why was it only for C++0x that this is allowed? Now I'm a big threading noob, so I've no clue about that. -

Dennis Zickefoose almost 14 yearsRight now, in C++03, is a compiler allowed to change

int x = 1; for(int i = 0; i < 10; ++i) do_something(&i); x++;intofor(int i = 0; i < 10; ++i) do_something(&i); int x = 2;? Or possibly the other way, withxbeing initialized to2before the loop. It can telldo_somethingdoesn't care about the value ofx, so its a perfectly safe optimization, ifdo_somethingdoesn't cause the value ofito change such that you end up in an infinite loop. -

Gabe almost 14 yearsSo does this mean that

main() { start_daemon_thread(); while(1) { sleep(1000); } }might just exit immediately instead of running my daemon in a background thread? -

MSalters almost 14 years"This insightful article" assumes that a specific behavior is Undefined Behavior merely because there is no explicit, defined behavior. That's an incorrect assumption. In general, when the standard leaves open a finite number of behaviors, an implemenation must choose any of those (Unspecified behavior). This need not be deterministic. Whether a do-nothing loop terminates is arguably a boolean choice; either it does or it does not. Doing something else isn't allowed.

-

KitsuneYMG almost 14 years@Gabe. I doubt it. What the optimization means is that anything that happens after your

while(1)loop could be moved to before the loop by the compiler. Thewhile(1)still executes, it just may execute before or after other instructions. The real question is what happens if you throwexit(EXIT_SUCCESS)after the loop. -

Johannes Schaub - litb almost 14 years@kts no it means the compiler can assume the loop terminates. If a loop that has no side effect terminates you cannot notice how many iterations it had, apart of some delay in execution which is entirely specific to how performant your computer is. Under the as-if rule, the compiler is then allowed to optimize away the loop entirely. So i think, in the above comment, if

Johannes Schaub - litb almost 14 years@kts no it means the compiler can assume the loop terminates. If a loop that has no side effect terminates you cannot notice how many iterations it had, apart of some delay in execution which is entirely specific to how performant your computer is. Under the as-if rule, the compiler is then allowed to optimize away the loop entirely. So i think, in the above comment, ifsleep's definition does any I/O calls or if the implementation defines "sleep" as an I/O function itself, the loop in @Gabe's comment cannot be assumed to terminate. -

supercat almost 14 years@kts: Code after the loop which neither affects nor is affected by the loop may be moved before it. Code after the loop which would affects or be affected by the loop must wait for the loop to terminate. So I would expect the throw to happen when the loop terminates, if it ever does.

-

doug65536 over 11 yearsThis is fascinating but is there a practical case of a compiler throwing away a loop that actually does something? Imagine filing a bug with a compiler team and getting a will-not-fix because the standard says it has to throw it away. Not likely, compilers will flow analyze and will treat unknowns as the bad case.

doug65536 over 11 yearsThis is fascinating but is there a practical case of a compiler throwing away a loop that actually does something? Imagine filing a bug with a compiler team and getting a will-not-fix because the standard says it has to throw it away. Not likely, compilers will flow analyze and will treat unknowns as the bad case. -

M.M over 9 yearsCan I suggest reorgnizing this post to just contain C++11 (or C++14) quotes; the historical progression of the rule through drafts is not crucial

-

vpalmu almost 6 years@Gabe: No. sleep() expands to a system call so it won't optimize it away.

-

h0r53 almost 3 yearsThis seems dangerous/problematic. I don't want the compiler "optimizing away" my loops if they were intentional, even if they perhaps included a logical bug. I should be responsible for identifying and resolving my bug, and not reliant on the compiler to make assumptions about the behavior of my algorithm.

h0r53 almost 3 yearsThis seems dangerous/problematic. I don't want the compiler "optimizing away" my loops if they were intentional, even if they perhaps included a logical bug. I should be responsible for identifying and resolving my bug, and not reliant on the compiler to make assumptions about the behavior of my algorithm. -

supercat over 2 years@h0r53: If e.g. a program defines a function like

unsigned normalize_lsb(unsigned x) { while(!(x & 1)) x>>=1; return x;}, and a compiler can ascertain that the return value from some particular call is never used, should a compiler be required to generate code that hangs ifxis zero? Being able to ignore the value ofxin cases where the return value isn't used (and use the fact thatxis ignored to in turn omit code whose sole purpose would be to compute it) is a useful optimization, on compilers whose behavior is limited to cleanly omitting or deferring such loops.

-

Johannes Schaub - litb almost 14 yearsNice question. Seems like that guy had exactly the problem that this paragraph allows that compiler to cause. In the linked discussion by one of the answers, it is written that "Unfortunately, the words 'undefined behavior' are not used. However, anytime the standard says 'the compiler may assume P,' it is implied that a program which has the property not-P has undefined semantics." . This surprises me. Does this mean my example program above has undefined behavior, and may just segfault out of nowhere?

Johannes Schaub - litb almost 14 yearsNice question. Seems like that guy had exactly the problem that this paragraph allows that compiler to cause. In the linked discussion by one of the answers, it is written that "Unfortunately, the words 'undefined behavior' are not used. However, anytime the standard says 'the compiler may assume P,' it is implied that a program which has the property not-P has undefined semantics." . This surprises me. Does this mean my example program above has undefined behavior, and may just segfault out of nowhere? -

Steve Jessop almost 14 years"Proving 1) is pretty easy" - in fact doesn't it follow immediately from the 3 conditions for the compiler to be allowed to assume loop termination under the clause Johannes is asking about? I think they amount to, "the loop has no observable effect, except perhaps spinning forever", and the clause ensures that "spinning forever" isn't guaranteed behaviour for such loops.

-

Steve Jessop almost 14 years@Johannes: the text "may be assumed" doesn't occur anywhere else in the draft I have to hand, and "may assume" only occurs a couple of times. Although I checked this with a search function which fails to match across line breaks, so I may have missed some. So I'm not sure the author's generalisation is warranted on the evidence but as a mathematician I have to concede the logic of the argument, that if the compiler assumes something false then in general it may deduce anything...

-

Steve Jessop almost 14 years...Permitting a contradiction in the compiler's reasoning about the program certainly hints very strongly at UB, since in particular it allows the compiler, for any X, to deduce that the program is equivalent to X. Surely permitting the compiler to deduce that is permitting it to do that. I agree with the author, too, that if UB is intended it should be explicitly stated, and if it's not intended then the spec text is wrong and should be fixed (perhaps by the spec-language equivalent of, "the compiler may replace the loop with code that has no effect", I'm not sure).

-

Philip Potter almost 14 years@Steve: it's easy if the loop doesn't terminate; but if the loop does terminate then it may have nontrivial behaviour which affects the processing of the

complex_io_operation. -

Steve Jessop almost 14 yearsOops, yes, I missed that it might modify non-volatile locals/aliases/whatever which are used in the IO op. So you're right: although it doesn't necessarily follow, there are many cases in which compilers can and do prove that no such modification occurs.

-

KitsuneYMG almost 14 years"It does, however, mean that infinite loops used in learning examples will suffer as a result, and will raise gotchas in beginner code. I can't say this is entirely a good thing." Just compile with optimizations off and it should still work

-

Donal Fellows almost 14 years“empty infinite loops are undefined behavior”? Alan Turing would beg to differ, but only when he gets over spinning in his grave.

-

josesuero almost 14 years@Donal: I never said anything about its semantics in a Turing machine. We're discussing the semantics of an infinite loop with no side effcts in C++. And as I read it, C++0x chooses to say that such loops are undefined.

-

supercat almost 14 years@Philip Potter: I don't think Undefined Behavior is indicated. I don't think the standard allows for a compiler to "assume" something it knows to be false, and a compiler which doesn't know a loop won't terminate must generate code whose ultimate behavior will end up being correct if the loop ever does.

-

supercat almost 14 yearsEmpty infinite loops would be silly, and there'd be no reason to have special rules for them. The rule is designed to deal with useful loops of unbounded (hopefully not infinite) duration, which calculate something that will be needed in future but not immediately.

-

Philip Potter almost 14 years@supercat: what then does it mean to allow the compiler to assume something if the compiler still has to check if it's actually true? To me the very definition of assumption is to take something as true without having to check.

-

Philip Potter almost 14 yearsI think that your progress bar case could not be separated, because displaying a progress bar is a library I/O call. Optimisations should not change visible behaviour in this way.

-

supercat almost 14 years@Philip Potter: Suppose a some code generates strings to see if it can find one which matches a particular 64-bit hash, and will run until it finds a match. A compiler looking at that code probably can't know whether it will ever terminate. The compiler's behavior must be such that if and when the code does find a match, the sequence of visible events prescribed by the code will end up occurring. The rule allows the visible events may occur before the match is found, even if they're coded to occur after, provided that the final sequence will be correct if/when a match is found.

-

supercat almost 14 years@Philip Potter: If the slow routine had side-effects, that would certainly be true. In my example before, it would be meaningless if it didn't, so I changed it. My interpretation of the spec is that the system is allowed to defer the execution of the slow code until such time as its effects (other than the time it takes to execute) would become visible, i.e. the show_result() call. If the progress-bar code made use of the running total, or at least pretended to do so, that would force it to sync up with the slow code.

-

Philip Potter almost 14 years@supercat: I'm not sure what your point is. I don't disagree with anything you say. If the loop does terminate, there is no UB.

-

supercat almost 14 years@Philip Potter: The compiler may only meaningfully "assume" the loop will terminate if it doesn't know. If the compiler doesn't know whether or not the loop will terminate, its code must be confined to those operations which would not prove to be erroneous if the loop does terminate. Even if the loop does not terminate, I see no permission for the compiler to do anything that would be erroneous if by some miracle the loop did. The rules grant to compiler some latitude, but not nearly as much as UB. UB means the compiler is allowed to do ANYTHING and still comply with the spec.

-

Philip Potter almost 14 years@supercat: i agree to the extent that this is what will happen in practice. However, if we assume the loop terminates, but it does not terminate, then we have a contradiction, and from a contradiction anything follows. xkcd.com/704

-

supercat almost 14 years@Philip Potter: I guess the question would be whether a compiler is allowed to "assume" something it "knows" to be false or, to put it another way, whether permission to "assume" something implies permission to deliberately act adversely if it's KNOWN to be false. Frankly, I think the standard would be clearer and more useful if it said that the compiler is required to maintain all visible effects and side-effects of a piece of code, but time required to execute it--even if infinite--shall not in and of itself be considered an effect the compiler is required to maintain.

-

Philip Potter almost 14 years@supercat: Of course the compiler can assume false things. That's what assuming is. It wouldn't be an assumption if the things were necessarily true. As for "knowing", the compiler's knowledge is irrelevent. The standard doesn't require the compiler to "know" anything about loop termination, and it would be really stupid if it did.

-

supercat almost 14 years@Philip Potter: Suppose a program is supposed to perform a lengthy computation, print "The answer is", then print the answer, then print some more stuff. If the loops is going to terminate, then if the program's printout can't start with anything other than "The answer is" and the correct answer. Is there any way in which a conforming compiler that doesn't know whether a loop will terminate or what it will compute could print out anything other than "The answer is", prior to the termination of the loop? Even if the loop doesn't terminate, the behavior of the program is highly constrained.

-

Philip Potter almost 14 years@supercat: What you describe is what will happen in practice, but it's not what the draft standard requires. We can't assume the compiler doesn't know whether a loop will terminate. If the compiler does know the loop won't terminate, it can do whatever it likes. The DS9K will create nasal demons for any infinite loop with no I/O etc. (Therefore, the DS9K solves the halting problem.)

-

phkahler over 13 years@supercat. If the programmer can not be certain weather a deliberate infinite loop will be removed, that's undefined behavior. One compiler may eliminate the loop, while another leaves it in - or worse, the same compiler on different optimization settings. While restricted to 2 options, the specific compiler behavior is undefined by the standard.

-

Philip Potter over 13 years@phkahler: undefined behaviour is totally unrestricted. Those 2 options are not the only valid options for a compiler. That's kind of what "undefined behaviour" means: behaviour that the standard doesn't define. If the implementation must choose from a set of known options, that's "unspecified" rather than undefined behaviour.

-

supercat over 13 years@Philip: I personally dislike language like "the compiler may assume", unless the context makes clear what specifically the compiler may do on the basis of that assumption. Frankly, I think the whole "endless loop" thing would have been more clearly dealt with by saying that the execution of any piece of code may be deferred until its first visible side-effect, and a program may be considered to have finished execution once all visible side-effects that are ever going to occur have done so.

-

supercat over 13 years@Philip: If the standard were written that way, there would be no confusion about "compiler assumptions". If a loop has no side-effects other than the time required to execute it, its "execution" may be deferred until the program has performed all required side-effect-inducing actions. At that point, even if there are still one or more side-effect-less endless loops that haven't yet executed, one could perfectly well pretend that those loops will execute in parallel with the execution of the next program to run.

-

BCoates over 12 yearsIf they're interacting with other threads, you shouldn't make them volatile, make them atomics or protect them with a lock.

-

supercat over 10 years@JohannesSchaub-litb: If a loop--endless or not--doesn't read or write any volatile variables during execution, and does not call any functions that might do so, a compiler is free to defer any portion of the loop until the first effort to access something computed therein. Given

unsigned int dummy; while(1){dummy++;} fprintf(stderror,"Hey\r\n"); fprintf(stderror,"Result was %u\r\n",dummy);, the firstfprintfcould execute, but the second could not (the compiler could move the computation ofdummybetween the twofprintf, but not past the one that prints its value). -

supercat over 10 years@SteveJessop: What would you think of simply saying that execution of any piece of code--including infinite loops--may be postponed until such time as something the piece of code did would affect an observable program behavior, and that for purposes of that rule, the time required to execute a piece of code--even if infinite--is not an "observable side-effect". If a compiler can demonstrate that a loop cannot exit without a variable holding a certain value, the variable may be deemed to hold that value, even it could also be shown that the loop could not exit with it holding that value.

-

Steve Jessop over 10 years@supercat: as you've stated that conclusion, I don't think it improves things. If the loop provably never exits then for any object

Xand bit-patternx, the compiler can demonstrate the loop does not exit withoutXholding bit-patternx. It's vacuously true. SoXcould be deemed to hold any bit pattern, and that's as bad as UB in the sense that for the wrongXandxit will swiftly cause some. So I believe you need to be more precise in your standardese. It's difficult to talk about what happens "at the end of" an infinite loop, and show it equivalent to some finite operation. -

supercat over 10 years@SteveJessop: E.g. after

unsigned int blah=0; while(blah != 42 || blah != 24601) blah++; printf("%d",blah);could legally print either 42 or 24601; afterunsigned int blah=1; while(blah != 0 && blah != 2) blah+=2; printf("%d",blah & mask);a compiler could legally print 0 if (mask & 2) was zero, but would otherwise have to wait for the loop to complete to find the value of bit 1 of mask. -

supercat over 10 years@SteveJessop: Perhaps things need to be better worded to say that a if a compiler deduces that a loop cannot end, it cannot reschedule it, but otherwise the compiler may legitimately make any combination of inferences. Thus, a compiler could in the second code example deem that bit 0 of

blahto be set (since that's a loop invariant) or clear (required by exit condition), but it could not deduce that some unrelated variable was equal to 42, since the only way to deduce that the loop couldn't end without that variable being 42 would be to deduce that it couldn't end at all. -

supercat over 10 years@SteveJessop: Do you think that rule would seem workable? I see no basis for allowing endless loops to trigger nasal demons (very few programs could be statically analyzed and shown not to cause nasal demons were that the case), and I think that allowing compilers to postpone loops while making any combination of deductions which would not require a deduction that a loop cannot terminate at all would accommodate all useful inferences about loops which might or might not terminate, without allowing nasal demons for loops which happen not to do so.

-

Steve Jessop over 10 years@supercat: I don't think it's helpful to say "if a compiler deduces X then it cannot do Y", unless the standard specifies what every compiler must do in order to attempt to deduce X. And I don't think the authors want to do that. Otherwise you're allowing bad optimizers to do something that good optimizers are forbidden, which doesn't make sense. Sounds like you could define certain kinds of loop invariants and exit conditions and allow the re-ordered code to rely on them. Since no compiler would be required to deduce these things I'd guess it wouldn't be an implementation burden...

-

Steve Jessop over 10 years... and the desired optimization in Philip Potter's answer would be permitted provided the compiler can prove that the "externally visible operation" really doesn't affect the observable behavior of the "complex io operation". Which it has to prove at the moment anyway before parallelizing them, just in case the loop does terminate. So yeah, give it a go and see what you come up with :-) I suspect it's academic for C++ though, since the standard has been published allowing UB and so narrowing the permitted behavior now would be an incompatible change from the implementer's POV.

-

supercat over 10 years@SteveJessop: I think the goal of the standards committee is (or at least should be) to allow compiler writers to make any and all useful inferences that would hold if the loop in fact terminates, without authorizing nasal demons if it doesn't; thus, there must be some limit on what compilers are or are not allowed to do with endless loops. How about, instead of saying "if the compiler deduces...", saying "The compiler may not reschedule a loop if it makes use of any inference that the loop cannot exit". Given

uint32_t x=0,y=0; while (foo(y) || (y != 42)) y++;... -

supercat over 10 years...a compiler that can't figure out anything about

foo(y)beyond its lack of side-effects could (but would not be required to) deduce that the loop cannot exit when y isn't 42, and thus deduce that y must be 42 when it exits. If the compiler determined thatfoocould never return zero when y is 42, that would imply that the loop cannot terminate when x isn't 57 (vacuously true, since it can't terminate at all), but couldn't make that inference without making use of the inference that the loop never terminates. Can you see any way a compiler could infer anything about the value of x... -

supercat over 10 years...when the loop terminates, other than through an inference that the loop doesn't terminate? The idea that endless loops should be unqualified UB seems massively overkill, and makes static verifiers essentially useless on any program outside of those which can be mathematically proven to be incapable of getting stuck in an endless loop.

-

vsz about 10 yearsDoes this mean that C++0x is not suited for embedded devices? Almost all embedded devices are non-terminating and do their job inside a big fat

while(1){...}. They even routinely usewhile(1);to invoke a watchdog-assisted reset. -

josesuero about 10 years@vsz: the first form is fine. Infinite loops are perfectly well defined, as long as they have some sort of observable behavior. The second form is trickier, but I can think of two very easy ways out: (1) a compiler targeting embedded devices could just choose to define stricter behavior in that case, or (2) you create a body which calls some dummy library function. As long as the compiler doesn't know what that function does, it has to assume that it may have some side effect, and so it can't mess with the loop.

-

vsz about 10 years@jalf : I asked it and got a pretty good answer for it: stackoverflow.com/questions/24298314/…

-

JCx almost 10 yearsExample of where you might want an infinite loop: an embedded system where you don't want to sleep for performance reasons and all the code is hanging off an interrupt or two?

-

Shafik Yaghmour over 9 yearsNote, I added a new answer to the second question you mention ... C11 provided an explicit exception for loops with controlling expressions that are constant expressions.

-

paulm almost 8 yearsThis explains all of those progress bars that go fast from 0 to 100 and then hang for ages ;)

-

David Schwartz almost 8 yearsThjs is terrible advice. Making them

volatileis neither necessary nor suffiicent, and it hurts performance dramatically. -

supercat over 5 yearsAre there any safe and useful optimizations that are facilitated by the present language that would not be facilitated just as well by saying "If the termination of a loop depends upon the state of any objects, the time required to execute the loop is not considered an observable side-effect, even if such time happens to be infinite". Given

do { x = slowFunctionWithNoSideEffects(x);} while(x != 23);Hoisting code after the loop which would not depend uponxwould seem safe and reasonable, but allowing a compiler to assumex==23in such code seems more dangerous than useful. -

M.M over 4 years@JCx in Standard C the interrupts should set a flag which the main loop checks , therefore the main loop would have observable behaviour in the case of the flags being set. Running substantial code in interrupts is non-portable .

-

supercat over 2 years@DavidSchwartz: The semantics of

volatileare "implementation-defined", and the sufficiency of that qualifier for various purposes will depend upon how an implementation chooses to define it. There are many freestanding implementations for whichvolatileis both necessary and sufficient to accomplish what programs will need to do. -

supercat over 2 yearsBTW, since responding to this question, I've discovered that in some cases where nothing in the code as written will rely upon any post-conditions that would be guaranteed by successful completion of a loop, clang will sometimes combine optimizations that rely upon such post-conditions with optimizations that remove the loop. The Standard doesn't forbid such nonsense, but it means that inputs that would cause code to get stuck in an infinite loop may cause memory corruption, in ways not bound by ordinary laws of causality.

-

supercat over 2 years@jalf: There are many situations where cleanly omitting the code for a loop that may or may not terminate, but will definitely have no side effects if it does, may be a useful optimization. If programmers must include dummy side effects in such loops to prevent completely arbitrary behavior in response to inputs that might cause them to hang, such useful optimizations would be precluded and the only effect of the rule would be to require that programmers expend needless effort to achieve performance which is no better than would have been achieved if compilers processed loops as written.

-

supercat over 2 years@PhilipPotter: Under the as-if rule, if an optimization would cause a compiler to produce code for a construct whose observable behavior would be inconsistent with sequentially processing the code as written, the only way to allow the optimization is to "undefine" program behavior in any cases where such inconsistencies might arise. Unfortunately, the Standard uses the same terminology to allow generally-harmless deviations from what would otherwise be defined sequential program behavior, as it uses to describe constructs that are genuinely meaningless.

-

David Schwartz over 2 years@supercat Can you really name a single implementation where

volatileis necessary? -

supercat over 2 years@DavidSchwartz: On many commercial compilers, consecutive accesses to static-duration objects will be consolidated unless either the objects are volatile, the accesses are separated by a volatile write, or one of the accesses is a write and they are separated by a volatile read. For platforms that use a consistent memory model (including single Except in cases where volatile reads trigger actions,

volatilewill often be necessary and sufficient. -

supercat over 2 years@M.M: On embedded systems with prioritized interrupts, it's not terribly uncommon to have systems where, after initialization, everything is done within some kind of interrupt. Many ARM-family controllers even have a mode explicitly for such designs which won't need an endless loop in main, because once a flag is set the system won't execute any more code that isn't in interrupts.

-

David Schwartz over 2 years@supercat Again, can you name a single platform where

volatileis necessary? Every one I know of includes better ways to do this. (Andvolatileis truly awful because it is not going to be clear what it is doing or why it is there while specific functions (such as an atomic fetch function used where the atomic fetch is needed) are self-documenting.) -

supercat over 2 years@DavidSchwartz: Most compilers I've used for embedded platforms worked as I described, and for many of them in the 1990s the two ways of achieving the proper semantics would be to use

volatilewith standard syntax, orasmwith non-standard syntax. Using avolatilequalifier on objects that are e.g. manipulated in ISR's and inspected in main-line code, or vice versa, seems cleaner than using a non-standardasmsyntax to force an "optimization barrier", and I'm not sure what alternative you think would be better on platforms without native compare-and-swap semantics. -

David Schwartz over 2 years@supercat What would be better is to code what you actually want. If you actually want an atomic read, then use the platform's atomic read function. Can you name a platform where

volatileis really the best way? Anything in the past 15 years? -

supercat over 2 years@DavidSchwartz: What kind of "atomic" read function does a Motorola 65HC11 or 68000 have? Or an Intel 8088? Or an ARM Cortex-M0, the latter of whic is still used in new designs?

-

David Schwartz over 2 years@supercat It depends on the compiler. GCC, for example, has built in atomic intrinsics that provide you all, and only, the semantics you require. They're much better than

volatilebecause they don't impose any costs you don't need and they're self-documenting whilevolatileis always a mystery. You need to check the compiler's documentation anyway to determine ifvolatileis sufficient since we agreed it was platform-specific, right? -

supercat over 2 years@DavidSchwartz: Commercial compiler vendors of the 1980s and 1990s processed

volatilein such a manner as to be sufficient, and there was never any reason for any compiler intended for use with user-code interrupt routines not to support such semantics, at least as an option. Volatile semantics will be adequate for any compiler that makes a bona fide effort to make them so, and the fact that a program to accomplish some task is incompatible with compilers that make no effort to support such a task is only a defect if no better compiler is available. -

supercat over 2 years@DavidSchwartz: If every compiler but one supports a construct using the same syntax, bjut the author of one oddball compiler requires a non-standard syntax, coe using the commonly-supported sequence should be viewed as portable, and the oddball compiler should be recognized as aberrant. The Standard doesn't require that all implementations be suitable for all tasks, and suitability for tasks involving interrupts is outside the Standard's jurisdiction. As a consequence, it has no authority to say that such suitability should not be viewed as requiring the common treatment of

volatile. -

supercat over 2 years@DavidSchwartz: If all compilers will process a program to accomplish a certain task in the same semantically-useful fashion with optimizations disabled, without requiring non-standard syntax, compilers that can efficiently accomplish such task without jumping through hoops or mandating vendor-specific syntax should be recognized as being more suitable for that task than those which can't, regardless of whether the Standard mandates such support.

-

David Schwartz over 2 yearsAlmost by definition, it's what the standard mandates that you can rely on being processed the same. Otherwise, you're just throwing your hands up in the air and hoping for the best. And there are the huge disadvantages I mentioned -- you pay for much more than you need and your code is not self-documenting. In any event, if there are other options, it's not necessary. If no standard or compiler-specific document says it's sufficient, you're just hoping it's sufficient.

![#4 [Lập Trình C]. Giải Thích Chi Tiết Cách Hoạt Động Vòng Lặp For Trong C | Break Và Continue](https://i.ytimg.com/vi/NYCRZWPs8_w/hq720.jpg?sqp=-oaymwEcCNAFEJQDSFXyq4qpAw4IARUAAIhCGAFwAcABBg==&rs=AOn4CLDbZQFWWzH3THqox6ERWwr4CZ142g)

![#5 [Lập trình C]. Nẵm Vững Cách Hoạt Động Của Vòng Lặp While Và Vòng Lặp Do While Trong C](https://i.ytimg.com/vi/dCveScuUE4U/hq720.jpg?sqp=-oaymwEcCNAFEJQDSFXyq4qpAw4IARUAAIhCGAFwAcABBg==&rs=AOn4CLCdbpKclTxD18azM9RDS3TruDK5xw)