Overhead to using std::vector?

Solution 1

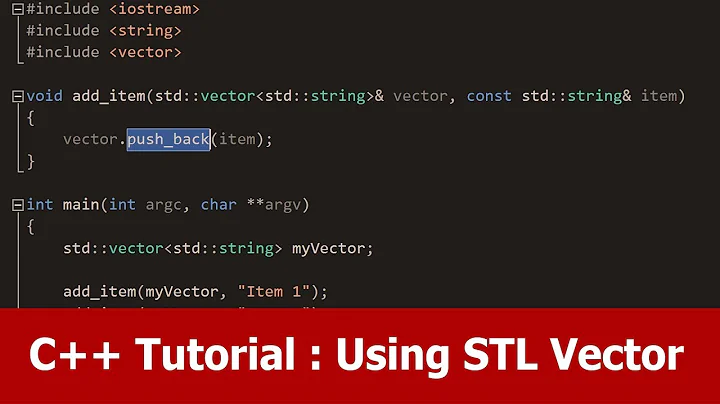

It is always better to use std::vector/std::array, at least until you can conclusively prove (through profiling) that the T* a = new T[100]; solution is considerably faster in your specific situation. This is unlikely to happen: vector/array is an extremely thin layer around a plain old array. There is some overhead to bounds checking with vector::at, but you can circumvent that by using operator[].
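A minimal sketch of the two access styles mentioned above, assuming a plain std::vector<int>. The helper names sum_unchecked and try_get are hypothetical. operator[] does no bounds checking, while at() throws std::out_of_range for an invalid index.

```cpp
#include <cassert>
#include <cstddef>
#include <stdexcept>
#include <vector>

// Hypothetical helper: unchecked access via operator[].
// The loop bound guarantees every index is valid, so no check is needed.
int sum_unchecked(const std::vector<int>& v) {
    int total = 0;
    for (std::size_t i = 0; i < v.size(); ++i) {
        total += v[i];  // no bounds check
    }
    return total;
}

// Hypothetical helper: checked access via at(), which throws
// std::out_of_range when i >= v.size().
bool try_get(const std::vector<int>& v, std::size_t i, int& out) {
    try {
        out = v.at(i);
        return true;
    } catch (const std::out_of_range&) {
        return false;
    }
}
```

In a hot loop where the index is already known to be valid, operator[] gives plain-array access; at() is the choice when the index comes from untrusted input.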

Solution 2

I can't think of any case where dynamically allocating a C style array makes sense. (I've been working in C++ for over 25 years, and I've yet to use new[].) Usually, if I know the size up front, I'll use something like:

std::vector<int> data( n );

to get an already sized vector, rather than using push_back.
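A small sketch contrasting the two approaches, assuming int elements. The function names squares_sized and squares_pushed are hypothetical. std::vector<int> data(n) allocates once and value-initializes n elements (zeros for int), whereas the push_back version may reallocate several times as it grows.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Sized up front: one allocation, n zero-initialized ints, then overwrite.
std::vector<int> squares_sized(std::size_t n) {
    std::vector<int> data(n);
    for (std::size_t i = 0; i < n; ++i) {
        data[i] = static_cast<int>(i * i);
    }
    return data;
}

// Grown with push_back: the vector may reallocate as it grows.
std::vector<int> squares_pushed(std::size_t n) {
    std::vector<int> data;
    for (std::size_t i = 0; i < n; ++i) {
        data.push_back(static_cast<int>(i * i));
    }
    return data;
}
```

Both produce the same contents; the sized version simply avoids the intermediate growth steps. As noted in the comments below, it requires the element type to be default-constructible.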

Of course, if n is very small and is known at compile time, I'll use std::array (if I have access to C++11), or even a C style array, and just create the object on the stack, with no dynamic allocation. (Such cases seem to be rare in the code I work on; small fixed size arrays tend to be members of classes, where I do occasionally use a C style array.)
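A minimal sketch of the small, compile-time-sized case, assuming a size of 8 (kN and the function names are illustrative). Both objects live on the stack, so there is no new[], no delete[], and no heap allocation.

```cpp
#include <array>
#include <cassert>
#include <cstddef>

// Illustrative compile-time size.
constexpr std::size_t kN = 8;

// C++11 std::array: fixed size is part of the type, stored on the stack.
int sum_of_indices_std_array() {
    std::array<int, kN> a{};  // zero-initialized
    for (std::size_t i = 0; i < a.size(); ++i) a[i] = static_cast<int>(i);
    int total = 0;
    for (int x : a) total += x;
    return total;
}

// Plain C style array: same storage, but no size() member.
int sum_of_indices_c_array() {
    int a[kN] = {};  // zero-initialized
    for (std::size_t i = 0; i < kN; ++i) a[i] = static_cast<int>(i);
    int total = 0;
    for (int x : a) total += x;
    return total;
}
```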

Solution 3

If you know the size in advance (especially at compile time), and don't need the dynamic re-sizing abilities of std::vector, then using something simpler is fine.

However, that something should preferably be std::array if you have C++11, or something like boost::scoped_array otherwise.

I doubt there'll be much efficiency gain unless it significantly reduces code size or something, but it's more expressive, which is worthwhile anyway.
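One way to see the expressiveness point, sketched with a hypothetical Readings type: when a fixed-size std::array is a class member, the element count is part of the type, and copying the object copies the elements. A raw pointer from new[] would instead need a separate size, a destructor, and care to avoid aliasing on copy.

```cpp
#include <array>
#include <cassert>
#include <cstddef>

// Hypothetical type: the element count (4) is fixed at compile time
// and carried by the member's type, so no separate size field is needed.
struct Readings {
    std::array<int, 4> values{};
};

// Taking Readings by value copies all four ints; there is no shared
// pointer to alias, no destructor to write, and no delete[] to forget.
Readings with_first_doubled(Readings r) {
    r.values[0] *= 2;
    return r;
}
```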

Solution 4

You should try to avoid C-style arrays in C++ whenever possible. The STL provides containers that cover almost every need. Just imagine reallocating an array or deleting elements from its middle. The container shields you from handling this; otherwise you would have to take care of it yourself, and unless you have done it a hundred times, it is quite error-prone.
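A sketch of the "deleting elements from the middle" case, assuming int elements and a non-empty sequence. erase_middle and erase_middle_raw are hypothetical helpers written only for comparison: with std::vector it is a single erase call, while with a new[]'d array you shift the tail by hand and track the logical size yourself.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// std::vector: erase shifts the tail down and updates size() for you.
// Precondition: v is not empty.
void erase_middle(std::vector<int>& v) {
    v.erase(v.begin() + static_cast<std::ptrdiff_t>(v.size() / 2));
}

// Raw array: shift the tail left by one and decrement the size manually.
// Precondition: n > 0. std::copy is safe here because the destination
// starts before the source range.
void erase_middle_raw(int* a, std::size_t& n) {
    std::size_t mid = n / 2;
    std::copy(a + mid + 1, a + n, a + mid);
    --n;
}
```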

An exception, of course, is when you are addressing low-level issues that might not be able to cope with STL containers.

There has already been some discussion about this topic. See here on SO.

Solution 5

Is it absolutely always a better idea to use a std::vector or could there be practical situations where manually allocating the dynamic memory would be a better idea, to increase efficiency?

Call me a simpleton, but 99.9999...% of the time I would just use a standard container. The default choice should be std::vector, but std::deque<> could also be a reasonable option sometimes. If the size is known at compile time, opt for std::array<>, which is a lightweight, safe wrapper around C-style arrays that introduces zero overhead.

Standard containers such as std::vector expose a member function (reserve()) to specify the initial reserved amount of memory, so you won't have trouble with reallocations, and you won't have to remember to delete[] your array. I honestly do not see why one should use manual memory management.
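A minimal sketch of reserve(), assuming int elements; fill_without_reallocation is a hypothetical name. The standard guarantees that no reallocation happens while size() stays within the capacity set by reserve(), so data() keeps pointing at the same buffer, and the vector's destructor frees it with no delete[] in sight.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Reserve room for n elements, append exactly n, and report whether the
// underlying buffer stayed in place (i.e. no reallocation occurred).
bool fill_without_reallocation(std::size_t n) {
    std::vector<int> v;
    v.reserve(n);
    const int* before = v.data();
    for (std::size_t i = 0; i < n; ++i) {
        v.push_back(static_cast<int>(i));
    }
    return v.data() == before && v.size() == n;
}  // v's destructor releases the memory here
```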

Efficiency shouldn't be an issue, since you have both throwing and non-throwing member functions to access the contained elements (at() and operator[] respectively), so you can choose whether to favor safety or performance.


Vivek Ghaisas

Updated on June 04, 2022

Comments

-

Vivek Ghaisas almost 2 years

I know that manual dynamic memory allocation is a bad idea in general, but is it sometimes a better solution than using, say, std::vector?

To give a crude example, if I had to store an array of n integers, where n <= 16, say, I could implement it using

int* data = new int[n]; // assuming n is set beforehand

or using a vector:

std::vector<int> data;

Is it absolutely always a better idea to use a std::vector, or could there be practical situations where manually allocating the dynamic memory would be a better idea, to increase efficiency?

-

us2012 about 11 years

+1 for the link at the end; that should destroy once and for all the myth that accessing vector elements is somehow slow.

-

James Kanze about 11 years

Unless you know that n is very, very small, you probably shouldn't declare local variables as std::array. Unless there is some very specific reason for doing otherwise, I'd just use std::vector; if I know the size, I'll initialize the vector with the correct size. (This also supposes that the type has a default constructor.)

-

James Kanze about 11 years

The usual reason for using C style arrays has nothing to do with speed; it's for static initialization, and for letting the compiler determine the size from the number of initializers. (Which, of course, never applies to dynamically allocated arrays.)
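A small sketch of both points, with illustrative names kDayNames and kDayCount: the array below has static storage, its initializers are constant expressions so it is initialized statically (before main() runs), and its size is never written out; the compiler deduces it from the initializer list.

```cpp
#include <cassert>
#include <cstddef>

// C style array with static storage duration; the size (4) is deduced
// from the number of initializers, and the data is constant-initialized.
static const char* const kDayNames[] = {"Mon", "Tue", "Wed", "Thu"};

// Element count recovered from the deduced array type.
constexpr std::size_t kDayCount = sizeof(kDayNames) / sizeof(kDayNames[0]);
```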

-

us2012 about 11 years

@James If I'm reading your comment correctly, you are objecting to the fact that I seem to be bashing C-style arrays without saying that I mean dynamically allocated ones? If so, I have edited my answer regarding this. (Also, +1 to your answer.)

-

Vivek Ghaisas about 11 years

That clears it up. I didn't know that vector/array is a thin layer. I kinda assumed that with all the functionality, it must have a significant overhead.

-

James Kanze about 11 years

You said "It is always...until...solution is considerably faster". I didn't read it as being restricted to dynamic allocation. (As I said in my answer, I have never used an array new. Before std::vector and std::string, the first thing one did was to write something equivalent.) But while I never use array new, there are cases where C style arrays are justified (some, but not all, of which can be replaced by std::array in C++11).
