Pacemaker Active/Active haproxy load balancing

I have active-active 2*virtIP cluster

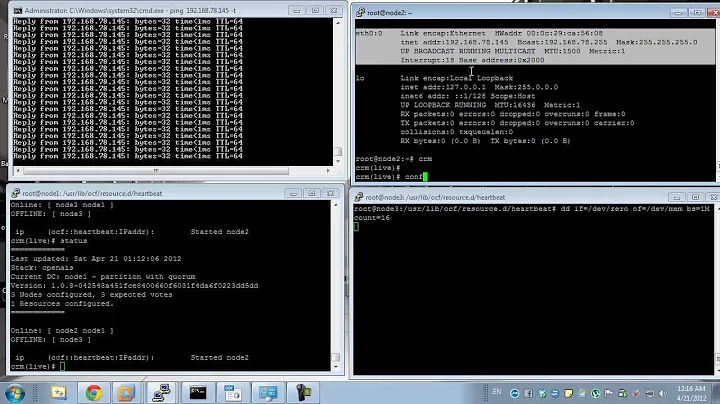

For CRM config:

I'm using two virtual IPs as primitive IPaddr2 services

and for the service which should run on both nodes:

- create primitive for it, you'll then use it's ID

- make "clone" from it like:

clone any_name_of_the_clone your_primitive_service_id \

meta clone-max="2" clone-node-max="1"

You may add order (to start virt IP after starting clone - NOT primitive, after you create clone you should not use it's child's ID)

It is working, failover works (assigning 2 IPs on one node when other fails).

However I have problem how to make colocation - I mean to have contrained services: I cannot have virtIP on node with subservice failed.

It is OK when the service is down - cluster brings it up but when starting fails (e.g. broken config for the service) - cluster notes error but brings IP up.

Anyone knows what's the cause?

- Is it a matter of bad monitoring/start/stop control or it is a matter of configuring constraints?

EDIT:

I have added to Primitive option to 'op start': on-fail="standby". Now when my service (the only primitive in clone) cannot start the node looses also virtIP

This seems to solve my problem now.

Related videos on Youtube

user53864

Updated on September 18, 2022Comments

-

user53864 over 1 year

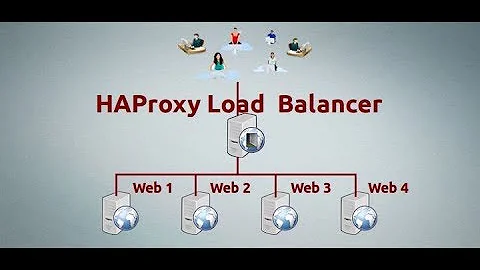

I am using Haproxy to load balance replicated mysql master servers. I am also using Heartbeat and Pacemaker for Active/Active ip failover with two virtual ips on the two load balancers for web server high availability. I used location in pacemaker to stay VIPs one on each load balancer and I'm using round-robin DNS domains pointing to VIPs to load balance the load balancers. Everything looks fine so far!

|LB1: | Round-Robin -->| 1.2.3.4 | Heartbeat Pacemaker | Haproxy | 192.168.1.1

| | | | ||LB2: | Round-Robin -->| 5.6.7.8 | Heartbeat Pacemaker | Haproxy | 192.168.1.2

crm configure show

node $id="394647e0-0a08-451f-a5bf-6c568854f8d1" lb1 node $id="9e95dc4f-8a9a-4727-af5a-40919ac902ba" lb2 primitive vip1 ocf:heartbeat:IPaddr2 \ params ip="1.2.3.4" cidr_netmask="255.255.255.0" nic="eth0:0" \ op monitor interval="40s" timeout="20s" primitive vip2 ocf:heartbeat:IPaddr2 \ params ip="5.6.7.8" cidr_netmask="255.255.255.0" nic="eth0:1" \ op monitor interval="40s" timeout="20s" location vip1_pref vip1 100: lb1 location vip2_pref vip2 100: lb2 property $id="cib-bootstrap-options" \ dc-version="1.0.8-042548a451fce8400660f6031f4da6f0223dd5dd" \ cluster-infrastructure="Heartbeat" \ stonith-enabled="false" \ no-quorum-policy="ignore" \ expected-quorum-votes="1"How to configure Pacemaker so that if Haproxy on any load balancer is corrupted, it should still work either using haproxy on another lb or moving both the vips to working haproxy lb node. I DON'T want Active/Passive BUT Active/Active configuration as running haproxy on both the lbs responding to mysql requests.

Is it possible to do with Pacemaker? Anybody know?

Any help is greatly appreciated!. Thanks!

Update 1

That was a nice hint by

@Arek B.usingclone. I appended below line to pacemaker configuration but still couldn't exactly get what is actually required. I checked stoppinghaproxyon both the LBs and it's automatically started by pacemaker but when I checked permanently stopping it(/etc/defaults/haproxy, enabled=0), haproxy failed to start and in those case when it couldn't start haproxy, I want the resource(ipaddr2) to be moved to another running haproxy lb. Any more hint?primitive mysql_proxy lsb:haproxy \ op monitor interval="10s" clone clone_mysql_proxy mysql_proxy \ meta clone-max="2" clone-node-max="1" location mysql_proxy_pref1 clone_mysql_proxy 100: lb1 location mysql_proxy_pref2 clone_mysql_proxy 50: lb2-

Khaled over 12 yearsI have done similar setup (active-active) using keepalived if you are interested.

-

user53864 over 12 yearsAnyhow the configuration might be same in case of pacemaker probably it's better I give a try of your keepalived please.

-

phemmer over 12 yearsIt is possible for pacemaker to do this, but you would need to do a little coding. ocf:pacemaker:pingd does something similar in which it would ping something (configurable target) and if the pings started failing, it would move a resource (like an IPaddr2 resource) to another node. You would need to write a script which does connection health checking instead of pinging, and then set it up the same way.

-

user53864 about 12 yearsNobody using pacemaker?

-

Admin over 11 years@khaled can you give me the manual installation of active-active keepalived?

Admin over 11 years@khaled can you give me the manual installation of active-active keepalived? -

Khaled over 11 years@marnaglaya: The idea is to define two VRRP group in keepalived. Each node will be the master of one group and thus get initially the VIP. So, you will have two VIPs assigned to two nodes.

-

-

Kendall about 12 yearsCouldn't you put the sub service and the VIP in a group? If the sub service fails to start the VIP will not come up. Groups are commonly used for expressing dependencies.

-

user53864 about 12 yearsclone is a bit confusing, I couldn't get what I want.

-

user53864 about 12 yearsUse

colocationfor each VIP with cloned name of service separately. Uselocationfor clone to stay on each node(100 priority). Use an extra location for each vips to migrate to other node(50) and this helps in migrating the vips to other node if service used with colocation fails. Finally use theorderusing clone after the vips.