Pandas pd.read_csv does not work for simple sep=','

Solution 1

Here's my quick solution for your problem -

import numpy as np

import pandas as pd

### Read the file, treating the header as the first data row, then strip all double quotes

df = pd.read_csv('file.csv', sep=r'\,', header=None, engine='python').apply(lambda x: x.str.replace('"', '', regex=False))

df

0 1 2 3

0 id feature_1 feature_2 feature_3

1 00100429 PROTO Proprietary Phone

2 00100429 PROTO Proprietary Phone

### Putting column names back and dropping the first row.

df.columns = df.iloc[0]

df.drop(index=0, inplace=True)

df

## You can reset the index

id feature_1 feature_2 feature_3

1 00100429 PROTO Proprietary Phone

2 00100429 PROTO Proprietary Phone

### Converting `id` column datatype back to `int` (change according to your needs)

df.id = df.id.astype(int)  # np.int was removed in NumPy 1.24; use the built-in int

np.result_type(df.id)

dtype('int64')
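One caveat on the conversion above: casting `id` to an integer drops the leading zeros (00100429 becomes 100429). If the zeros matter, a minimal sketch is to read that column as a string via `dtype`. The in-memory sample here is an assumption modelled on the question's data, not the asker's real file.

```python
import io

import pandas as pd

# Assumed sample, modelled on the data shown in the question.
raw = 'id,"feature_1"\n00100429,"PROTO"\n'

# Default type inference turns the id into an integer and loses the zeros.
as_int = pd.read_csv(io.StringIO(raw))

# Forcing the column to str keeps the value exactly as written in the file.
as_str = pd.read_csv(io.StringIO(raw), dtype={"id": str})

print(as_int["id"].iloc[0])  # 100429
print(as_str["id"].iloc[0])  # 00100429
```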

Solution 2

It should work with sep="," without any issue unless there is something badly wrong with the CSV file you have. Simulating your data example, it works fine for me.

As per your data sample, you don't need to escape the comma with a backslash (\) for comma-delimited values.

>>> import pandas as pd

>>> data = pd.read_csv("sample.csv", sep=",")

>>> data

id feature_1 feature_2 feature_3

0 100429 PROTO Proprietary Phone

1 100429 PROTO Proprietary Phone

>>> pd.__version__

'0.23.3'

The problem, as I noticed, is sep="\,".

Alternatively, try these two parameters:

skipinitialspace=True: this "deals with the spaces after the comma-delimiter".

quotechar='"': string (length 1). The character used to denote the start and end of a quoted item. Quoted items can include the delimiter and it will be ignored.

So, in that case, it is worth trying:

>>> data1 = pd.read_csv("sample.csv", skipinitialspace = True, quotechar = '"')

>>> data1

id feature_1 feature_2 feature_3

0 100429 PROTO Proprietary Phone

1 100429 PROTO Proprietary Phone
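To see what skipinitialspace changes, here is a small sketch. The sample with a space after each comma is an assumption made up for illustration; the data in the question has no such spaces.

```python
import io

import pandas as pd

# Hypothetical sample: note the space after every comma.
raw = (
    'id, "feature_1", "feature_2"\n'
    '100429, "PROTO", "Proprietary"\n'
)

# Without skipinitialspace, a field starts with a space rather than a quote,
# so the quote characters are not treated as quoting.
without_skip = pd.read_csv(io.StringIO(raw))

# With skipinitialspace=True, the space is skipped and the quotes are honoured.
with_skip = pd.read_csv(io.StringIO(raw), skipinitialspace=True, quotechar='"')

print(list(without_skip.columns))
print(list(with_skip.columns))  # ['id', 'feature_1', 'feature_2']
```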

Note from Pandas doc:

Separators longer than 1 character and different from '\s+' will be interpreted as regular expressions, will force use of the python parsing engine and will ignore quotes in the data.
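A short sketch of what that note means in practice, using an in-memory copy of the sample from the question (an assumption, not the asker's real file). The one-character separator honours the quotes, while the two-character `\,` is treated as a regular expression and leaves the quotes in the data.

```python
import io

import pandas as pd

# Assumed sample in the format shown in the question.
raw = (
    'id,"feature_1","feature_2","feature_3"\n'
    '00100429,"PROTO","Proprietary","Phone"\n'
    '00100429,"PROTO","Proprietary","Phone"\n'
)

# One-character separator: quotes are parsed and removed.
plain = pd.read_csv(io.StringIO(raw), sep=",")

# Two-character separator: regex split with the python engine, quotes ignored.
regex = pd.read_csv(io.StringIO(raw), sep=r"\,", engine="python")

print(list(plain.columns))  # ['id', 'feature_1', 'feature_2', 'feature_3']
print(list(regex.columns))  # quote characters stay in the column names
```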

Solution 3

Here's an alternative to dataLeo's answer:

import pandas as pd

import numpy as np

Reading the file into a DataFrame as strings (so that .str works on every column), then removing all double quotes from the row values

df = pd.read_csv("file.csv", sep=r"\,", engine="python", dtype=str).apply(lambda x: x.str.replace('"', "", regex=False))

df

"id" "feature_1" "feature_2" "feature_3"

0 00100429 PROTO Proprietary Phone

1 00100429 PROTO Proprietary Phone

Removing all double quotes from the column names

df.columns = df.columns.str.replace('\"', '')

df

id feature_1 feature_2 feature_3

0 00100429 PROTO Proprietary Phone

1 00100429 PROTO Proprietary Phone

Converting id column datatype back to int (change according to your needs)

df.id = df.id.astype('int')

np.result_type(df.id)

dtype('int32')

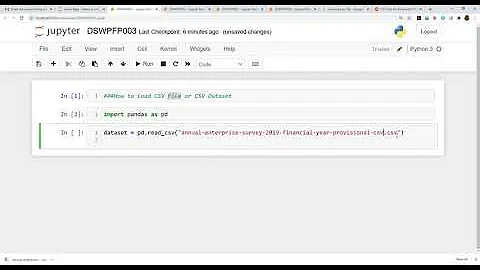

Related videos on Youtube

Kakalukia

Updated on June 04, 2022

Comments

-

Kakalukia almost 2 years

Good afternoon, everybody.

I know that it is quite an easy question, but I simply do not understand why it does not work the way I expected.

The task is as follows:

I have a file data.csv presented in this format:

id,"feature_1","feature_2","feature_3"
00100429,"PROTO","Proprietary","Phone"
00100429,"PROTO","Proprietary","Phone"

The thing is to import this data using pandas. I know that by default pandas read_csv uses a comma separator, so I just imported it as follows:

data = pd.read_csv('data.csv')

And the result I got is the one I presented at the beginning with no change at all. I mean one column which contains everything.

I tried many other separators using regex, and the only one that made some sort of improvement was:

data = pd.read_csv('data.csv',sep="\,",engine='python')

On the one hand it finally separated all columns, on the other hand the way data is presented is not that convenient to use. In particular:

"id ""feature_1"" ""feature_2"" ""feature_3"""
"00100429 ""PROTO"" ""Proprietary"" ""Phone"""

Therefore, I think that somewhere must be a mistake, because the data seems to be fine.

So the question is - how to import csv file with separated columns and no triple quote symbols?

Thank you.

-

jezrael over 5 years

I think the real file has a different format from the one you mentioned in "have a file data.csv presented in this format:", because your sample data works very nicely with sep=','. Can you create a better data sample which returns your bad output?

-

Karn Kumar over 5 years

Your problem is here: sep="\,". Simply use sep=","; don't put the backslash (\) in it.

-

Karn Kumar over 5 years

Using data = pd.read_csv("sample.csv", sep="\,", engine='python') gives me the same output as yours because of that backslash.

-

-

Kakalukia over 5 years

Thank you for your help, I tried this solution, and it worked perfectly. In fact, I tried to open this dataset with Excel and it did not show me any problems with it (that's why I thought the problem was with the code). However, when I opened it using Python's open('file.csv','r'), I found that lines were presented like this: '"tac,""vendor"",""platform"",""type"""\n'. That clearly shows why I had such an issue reading it with pandas. Thanks again for the help.
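For a file whose lines really look like that, where every line is one big quoted field with doubled inner quotes, another option is to parse it in two passes instead of stripping quotes afterwards. This is only a sketch: the header line is the one quoted in the comment above, but the data row is an assumption built from the question's sample.

```python
import csv
import io

import pandas as pd

# Header as quoted in the comment; the data row is a hypothetical example.
raw = (
    '"tac,""vendor"",""platform"",""type"""\n'
    '"00100429,""PROTO"",""Proprietary"",""Phone"""\n'
)

# Pass 1: each line is a single quoted field, so csv.reader removes the
# outer quotes and collapses each doubled quote into a single one.
unwrapped = [row[0] for row in csv.reader(io.StringIO(raw)) if row]

# Pass 2: the unwrapped lines are ordinary CSV that read_csv handles directly.
# dtype keeps the leading zeros of tac; drop it if you want integers.
df = pd.read_csv(io.StringIO("\n".join(unwrapped)), dtype={"tac": str})

print(unwrapped[0])  # tac,"vendor","platform","type"
print(df)
```

For a file on disk, the same idea should work by passing an open file handle (opened with newline='') to csv.reader in place of the StringIO object.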

-

sync11 over 5 years

@kakalukia Good to hear that it helped. Also, if it's a small dataset which Excel can handle, you can simply split the one column into distinct columns there and import it into Python afterwards. That way much of this is simplified. Good going, and you can also upvote this answer :)
