Parallel processing from a command queue on Linux (bash, python, ruby... whatever)

Solution 1

I would imagine you could do this using make and its -j option (make -j xx runs at most xx jobs at once).

Perhaps a makefile like this (recipe lines must be indented with a tab):

all: usera userb userc ...

usera:
	imapsync usera

userb:
	imapsync userb

...

Then run:

make -j 10 -f makefile
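With 200 users, writing such a makefile by hand is tedious (the asker ended up generating one). A minimal sketch of a generator in Python; the `makefile_for` helper and the user names are hypothetical, and it additionally marks the targets `.PHONY` because they are not real files:

```python
# Sketch: generate a Makefile with one target per user, so that
# `make -j 10 -f makefile` runs at most 10 imapsync jobs at a time.
# makefile_for() and the user names below are hypothetical.


def makefile_for(users, command="imapsync"):
    """Return Makefile text with one phony target per user."""
    lines = [
        # The targets are not files, so mark them phony.
        ".PHONY: all " + " ".join(users),
        "all: " + " ".join(users),
        "",
    ]
    for user in users:
        lines.append(f"{user}:")
        # make requires each recipe line to start with a literal tab.
        lines.append(f"\t{command} {user}")
        lines.append("")
    return "\n".join(lines)


if __name__ == "__main__":
    print(makefile_for(["usera", "userb", "userc"]))
```

Redirect the output to a file (`python gen_makefile.py > makefile`, file name assumed) and run `make -j 10 -f makefile` as above.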

Solution 2

In the shell, xargs can be used to queue commands for parallel processing. For example, to always have 3 sleeps running in parallel, each sleeping for 1 second, with 10 sleeps executed in total, do:

echo {1..10} | xargs -d ' ' -n1 -P3 sh -c 'sleep 1s' _

This takes about 4 seconds in total: 10 one-second jobs, 3 at a time, need 4 rounds. If you have a list of names and want to pass each name to a command, again running 3 commands in parallel, do:

cat names | xargs -n1 -P3 process_name

This executes process_name alice, process_name bob, and so on, one name per command.
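For comparison, the same "at most N at once" behaviour as `xargs -n1 -P3` can be sketched in Python with `concurrent.futures.ThreadPoolExecutor`; each thread only waits on an external process. `run_each` is a hypothetical helper, and `echo` stands in for `process_name`:

```python
import subprocess
from concurrent.futures import ThreadPoolExecutor

# Sketch: run_each is a hypothetical helper, not a library API.


def run_each(program, names, max_jobs=3):
    """Run `program name` for every name, at most max_jobs at a time.

    As with `xargs -n1 -P3 program`, a new command starts as soon as a
    running one finishes. Returns the exit codes in input order.
    """
    def run_one(name):
        return subprocess.run([program, name]).returncode

    with ThreadPoolExecutor(max_workers=max_jobs) as pool:
        return list(pool.map(run_one, names))


if __name__ == "__main__":
    # "echo" stands in for the process_name command.
    print(run_each("echo", ["alice", "bob", "carol"]))
```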

Solution 3

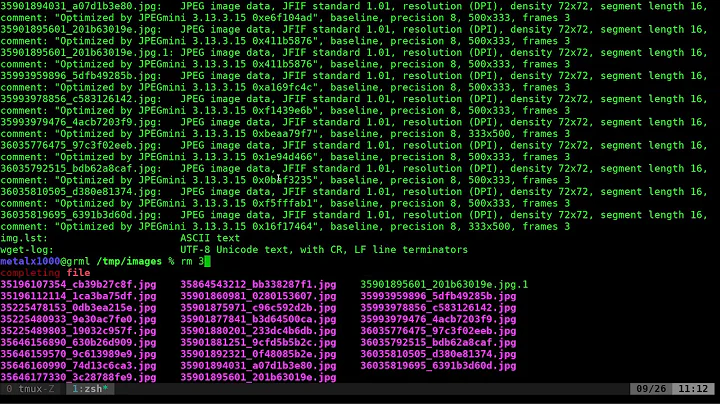

GNU Parallel is made exactly for this purpose.

cat userlist | parallel imapsync

One of the beauties of Parallel compared to the other solutions is that it makes sure output from different jobs is not mixed. Running traceroute in parallel works fine, for example:

(echo foss.org.my; echo www.debian.org; echo www.freenetproject.org) | parallel traceroute
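The idea behind unmixed output is to buffer each job's output and emit it in one piece. A rough Python approximation of that idea (not how GNU Parallel itself is implemented; `run_grouped` is a hypothetical helper) captures each command's stdout and writes it whole when the job finishes:

```python
import subprocess
import sys
from concurrent.futures import ThreadPoolExecutor, as_completed

# Sketch: run_grouped is a hypothetical helper, not GNU Parallel's code.


def run_grouped(commands, max_jobs=3, out=None):
    """Run argv lists in parallel, writing each job's stdout as one block.

    Output is captured per job, so lines from different jobs never
    interleave. Blocks are written in completion order.
    """
    if out is None:
        out = sys.stdout

    def run_one(argv):
        done = subprocess.run(argv, capture_output=True, text=True)
        return done.stdout

    blocks = []
    with ThreadPoolExecutor(max_workers=max_jobs) as pool:
        futures = [pool.submit(run_one, argv) for argv in commands]
        for fut in as_completed(futures):
            # Only this (main) thread writes, one whole block at a time.
            text = fut.result()
            out.write(text)
            out.flush()
            blocks.append(text)
    return blocks


if __name__ == "__main__":
    # Each job prints two lines with a pause between them; run naively
    # in parallel, those lines could interleave across jobs.
    job = ["sh", "-c", 'echo "$0 start"; sleep 0.2; echo "$0 end"']
    run_grouped([job + [name] for name in ("a", "b", "c")])
```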

Solution 4

PPSS (Parallel Processing Shell Script) was written for exactly this kind of job. Google the name and you will find it; I won't linkspam.

Solution 5

GNU make (and perhaps other implementations as well) has the -j argument, which governs how many jobs it will run at once. When a job completes, make will start another one.
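The "when a job completes, start another one" rule is simple to sketch by hand. The following Python loop (an illustration of the scheduling idea, not how make works internally) keeps up to `max_jobs` `subprocess.Popen` children running and polls them; `run_queue` and the poll interval are assumptions:

```python
import subprocess
import time
from collections import deque

# Sketch: run_queue and poll_interval are hypothetical choices.


def run_queue(commands, max_jobs=10, poll_interval=0.05):
    """Run argv lists from a queue, keeping at most max_jobs running.

    Whenever a running child has exited, the next command is popped
    from the queue and started. Returns {index: exit_code}.
    """
    pending = deque(enumerate(commands))
    running = {}  # index -> Popen
    results = {}
    while pending or running:
        # Top up the pool from the queue.
        while pending and len(running) < max_jobs:
            index, argv = pending.popleft()
            running[index] = subprocess.Popen(argv)
        # Reap any children that have finished.
        for index, proc in list(running.items()):
            code = proc.poll()
            if code is not None:
                results[index] = code
                del running[index]
        if running:
            time.sleep(poll_interval)
    return results


if __name__ == "__main__":
    print(run_queue([["sleep", "0.2"]] * 5, max_jobs=2))
```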

Updated on September 14, 2020

Comments

mlambie over 3 years: I have a list/queue of 200 commands that I need to run in a shell on a Linux server.

I only want to have a maximum of 10 processes running (from the queue) at once. Some processes will take a few seconds to complete, other processes will take much longer.

When a process finishes I want the next command to be "popped" from the queue and executed.

Does anyone have code to solve this problem?

Further elaboration:

There are 200 pieces of work that need to be done, in a queue of some sort. I want to have at most 10 pieces of work going on at once. When a thread finishes a piece of work, it should ask the queue for the next piece of work. If there's no more work in the queue, the thread should die. When all the threads have died, it means all the work has been done.

The actual problem I'm trying to solve is using imapsync to synchronize 200 mailboxes from an old mail server to a new mail server. Some users have large mailboxes and take a long time to sync; others have very small mailboxes and sync quickly.
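The design described in the question maps directly onto Python's `threading` and `queue` modules. A minimal sketch, assuming each piece of work is an argv list; `run_pool` is a hypothetical name and `echo` stands in for the real imapsync invocation:

```python
import queue
import subprocess
import threading

# Sketch: run_pool is a hypothetical helper; commands are argv lists.


def run_pool(commands, num_workers=10):
    """Run commands with at most num_workers at once; return exit codes."""
    work = queue.Queue()
    for index, argv in enumerate(commands):
        work.put((index, argv))

    results = [None] * len(commands)

    def worker():
        while True:
            try:
                # Ask the queue for the next piece of work.
                index, argv = work.get_nowait()
            except queue.Empty:
                return  # No more work: this thread dies.
            results[index] = subprocess.run(argv).returncode

    threads = [threading.Thread(target=worker) for _ in range(num_workers)]
    for t in threads:
        t.start()
    # When every thread has died, all the work has been done.
    for t in threads:
        t.join()
    return results


if __name__ == "__main__":
    users = ["usera", "userb", "userc"]  # hypothetical mailbox list
    # Replace ["echo", "imapsync", ...] with the real imapsync command.
    print(run_pool([["echo", "imapsync", u] for u in users]))
```

All work is queued before the threads start, so `queue.Empty` really means the queue is exhausted.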

mlambie over 15 years: This worked exactly as I hoped it would. I wrote some code to generate the Makefile. It ended up being over 1000 lines. Thanks!

Cristian Ciupitu about 15 years: 1) os.system could be replaced with the newer, improved subprocess module. 2) It doesn't matter that CPython has a GIL, because you're running external commands, not Python code (functions).
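Both points can be checked with a small sketch: threads that only wait on external processes overlap freely, because CPython releases the GIL while a thread waits for its child. `timed_parallel_sleeps` is a hypothetical helper and the timings are approximate:

```python
import subprocess
import threading
import time

# Sketch: timed_parallel_sleeps is a hypothetical helper.


def timed_parallel_sleeps(count=4, seconds=0.5):
    """Run `count` external sleeps from separate threads; return wall time."""
    def run():
        # subprocess.run instead of os.system: no shell involved, and
        # check=True raises if the command fails.
        subprocess.run(["sleep", str(seconds)], check=True)

    threads = [threading.Thread(target=run) for _ in range(count)]
    start = time.monotonic()
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return time.monotonic() - start


if __name__ == "__main__":
    # Roughly 0.5 s rather than 4 * 0.5 = 2 s, since the sleeps overlap.
    print(f"{timed_parallel_sleeps():.2f} s")
```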

jfs about 15 years: If you replace threading.Thread by multiprocessing.Process and Queue by multiprocessing.Queue, then the code will run using multiple processes.
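That swap can be sketched as follows, assuming Linux and the `fork` start method, requested explicitly because newer Python releases may not default to it. Rather than polling with `get_nowait()`, each worker blocks on `get()` until it receives a `None` sentinel, since a `multiprocessing.Queue` can briefly look empty right after items are put. `run_pool_processes` and `worker` are hypothetical names:

```python
import multiprocessing as mp
import subprocess

# Sketch: worker() and run_pool_processes() are hypothetical names.


def worker(tasks, results):
    """Take (index, argv) items until a None sentinel arrives."""
    while True:
        item = tasks.get()
        if item is None:
            return  # Sentinel: no more work, so this process exits.
        index, argv = item
        results.put((index, subprocess.run(argv).returncode))


def run_pool_processes(commands, num_workers=10):
    """Run argv lists in up to num_workers processes; return exit codes."""
    ctx = mp.get_context("fork")  # assumption: Linux / POSIX only
    tasks, results = ctx.Queue(), ctx.Queue()
    procs = [ctx.Process(target=worker, args=(tasks, results))
             for _ in range(num_workers)]
    # Start the workers before filling the queue, so the parent's
    # queue feeder thread is not yet running at fork time.
    for p in procs:
        p.start()
    for item in enumerate(commands):
        tasks.put(item)
    for _ in procs:
        tasks.put(None)  # one sentinel per worker
    # Drain results before join() so no child blocks on a full pipe.
    codes = dict(results.get() for _ in commands)
    for p in procs:
        p.join()
    return [codes[i] for i in range(len(commands))]


if __name__ == "__main__":
    print(run_pool_processes([["true"], ["false"], ["echo", "hi"]], 2))
```

Since each worker only waits on an external command, threads do equally well here; processes matter when the work itself is Python code.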

Devrim over 14 years: Awesome! Can we set up dynamic threading that, e.g., allows up to 80% CPU/RAM usage?

chiggsy over 13 years: Man, I love this tool. I've known about it for like 3h and I am going to use it until the very stones of the earth cry out for me to stop.

Yuvi almost 13 years: Almost exactly what I was looking for. /me goes back to struggling to get it working.

rogerdpack over 12 years: pssh is written in Python, I think.

Kyle Simek over 12 years: I found that if any of the commands exits with an error code, make will exit, preventing execution of future jobs. In some situations, this solution is less than ideal. Any recommendations for this scenario?

myroslav about 12 years: Fedora 16 included the tool in its package repository.

Joseph Lisee almost 12 years: @redmoskito If you run make with the "-k" option, it will keep running even if there are errors.
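Outside make, the `-k` behaviour amounts to recording failures instead of stopping. A minimal Python sketch (`run_keep_going` is a hypothetical helper) runs every command, at most `max_jobs` at once, and reports the ones that exited non-zero:

```python
import subprocess
from concurrent.futures import ThreadPoolExecutor

# Sketch: run_keep_going is a hypothetical helper, not a library API.


def run_keep_going(commands, max_jobs=10):
    """Run all commands even if some fail; return (argv, code) failures.

    Like `make -k`, a failing command does not prevent the remaining
    commands from being started.
    """
    def run_one(argv):
        return argv, subprocess.run(argv).returncode

    with ThreadPoolExecutor(max_workers=max_jobs) as pool:
        outcomes = list(pool.map(run_one, commands))
    return [(argv, code) for argv, code in outcomes if code != 0]


if __name__ == "__main__":
    print(run_keep_going([["true"], ["false"], ["echo", "still ran"]]))
```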

Yauhen Yakimovich over 11 years: If one starts thinking of make not as a "task scheduler" but as a "parallel compilation" tool... I guess the bigger picture is that make -j respects dependencies, which makes this solution mind-blowing once applied universally.
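Dependency-aware parallelism like `make -j` can also be sketched with Python's `graphlib.TopologicalSorter` (Python 3.9+): `get_ready()` returns every node whose prerequisites are done, so independent jobs run concurrently. The graph, the commands and the `run_graph` helper are hypothetical:

```python
import subprocess
from concurrent.futures import FIRST_COMPLETED, ThreadPoolExecutor, wait
from graphlib import TopologicalSorter

# Sketch: run_graph and the example graph are hypothetical.


def run_graph(graph, commands, max_jobs=4):
    """Run commands[node] only after all of node's prerequisites finish.

    graph maps each node to the set of nodes it depends on, like make
    prerequisites. Returns the nodes in completion order.
    """
    sorter = TopologicalSorter(graph)
    sorter.prepare()  # raises CycleError on circular dependencies
    finished = []
    running = {}  # future -> node
    with ThreadPoolExecutor(max_workers=max_jobs) as pool:
        while sorter.is_active():
            # Start every node whose prerequisites are all done.
            for node in sorter.get_ready():
                fut = pool.submit(subprocess.run, commands[node], check=True)
                running[fut] = node
            done, _ = wait(set(running), return_when=FIRST_COMPLETED)
            for fut in done:
                node = running.pop(fut)
                fut.result()  # re-raises CalledProcessError on failure
                sorter.done(node)
                finished.append(node)
    return finished


if __name__ == "__main__":
    # Hypothetical graph: "link" may start only after "a" and "b".
    graph = {"link": {"a", "b"}, "a": set(), "b": set()}
    commands = {
        "a": ["echo", "build a"],
        "b": ["echo", "build b"],
        "link": ["echo", "link a and b"],
    }
    print(run_graph(graph, commands))
```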

Warrick almost 11 years: Wow, I use xargs all the time and never expected it would have this option!

Brian Minton over 10 years: You can use the wait command for a specific child process too. It can be given any number of arguments, each of which can be a PID or job ID.

Jonathan Leffler over 10 years: @BrianMinton: you're right that you can list specific PIDs with wait, but you still get the 'all of them dead' behaviour, not 'first one dead', which is what this code really needs.
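Bash 4.3 and later offer `wait -n`, which waits for the next job to finish. In Python, `concurrent.futures.wait(..., return_when=FIRST_COMPLETED)` gives the same "first one dead" behaviour: it returns as soon as any job finishes, so the next command can be started immediately. A sketch under that approach, with a hypothetical `run_refill` helper:

```python
import subprocess
from concurrent.futures import FIRST_COMPLETED, ThreadPoolExecutor, wait

# Sketch: run_refill is a hypothetical helper, not a library API.


def run_refill(commands, max_jobs=10):
    """Keep up to max_jobs commands running; refill when any one exits.

    Returns the exit codes in input order.
    """
    todo = iter(enumerate(commands))
    codes = [None] * len(commands)
    running = {}  # future -> index of its command
    with ThreadPoolExecutor(max_workers=max_jobs) as pool:
        def submit_next():
            # Pop the next command from the queue, if there is one.
            item = next(todo, None)
            if item is not None:
                index, argv = item
                running[pool.submit(subprocess.run, argv)] = index

        for _ in range(max_jobs):
            submit_next()
        while running:
            # Returns as soon as at least one job has finished.
            done, _ = wait(set(running), return_when=FIRST_COMPLETED)
            for fut in done:
                codes[running.pop(fut)] = fut.result().returncode
                submit_next()
    return codes


if __name__ == "__main__":
    print(run_refill([["sleep", "0.3"], ["true"], ["false"]], max_jobs=2))
```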

Eddy almost 9 years: For the second example you give, how do you modify the command so that process_name can take more than one argument? I want to do something like this:

cat commands.txt | xargs -n1 -P3 eval

where commands.txt has a bunch of commands in it (one on each line, each with multiple arguments). The problem is that eval doesn't work, as it's a shell builtin command.

staticfloat about 5 years: @Eddy try using a shell as the program to run; this allows you to use arbitrary shell commands as the inputs. The first answer above does this with sh, but you can do it with bash as well. E.g. if your commands.txt has a bunch of lines in it that look like echo test1; sleep 1, you can use that via something like

cat commands.txt | xargs -d'\n' -P3 -n1 /bin/bash -c
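In Python, the equivalent of `xargs -d'\n' -P3 -n1 /bin/bash -c` is to pass each line to a shell with `-c`. A minimal sketch, assuming one complete command per line; `run_command_lines` is hypothetical, it uses `/bin/sh` by default (pass `shell="/bin/bash"` for bash), and since each line is executed as-is the input must be trusted:

```python
import subprocess
from concurrent.futures import ThreadPoolExecutor

# Sketch: run_command_lines is a hypothetical helper; each input line
# is executed verbatim by the shell, so only use trusted input.


def run_command_lines(lines, max_jobs=3, shell="/bin/sh"):
    """Run each non-empty line as a shell command, max_jobs at a time."""
    commands = [line.strip() for line in lines if line.strip()]

    def run_one(cmd):
        # Same as `xargs -d'\n' -n1 <shell> -c` for one input line.
        return subprocess.run([shell, "-c", cmd]).returncode

    with ThreadPoolExecutor(max_workers=max_jobs) as pool:
        return list(pool.map(run_one, commands))


if __name__ == "__main__":
    print(run_command_lines(["echo test1; sleep 1", "echo test2; sleep 1"]))
```

For a file, pass the open file object: `with open("commands.txt") as f: run_command_lines(f)`.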