Parallel shell loops

Solution 1

A makefile is a good solution to your problem. You could program this parallel execution in a shell, but it's hard, as you've noticed. A parallel implementation of make will not only take care of starting jobs and detecting their termination, but also handle load balancing, which is tricky.

The requirement for globbing is not an obstacle: there are make implementations that support it. GNU make has wildcard expansion such as $(wildcard *.c) and shell access such as $(shell mycommand) (look up functions in the GNU make manual for more information). It's the default make on Linux, and available on most other systems. Here's a Makefile skeleton that you may be able to adapt to your needs:

sources = $(wildcard *.src)

all: $(sources:.src=.tgt)

%.tgt: %.src
	do_something $< $$(derived_params $<) >$@

Run something like make -j4 to execute four jobs in parallel, or make -j -l3 to keep the load average around 3.
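To see the skeleton run end to end, here is a minimal sketch that sets up a scratch directory with a few .src files and runs make in parallel. It assumes GNU make is installed as `make`; the `tr` recipe is a hypothetical stand-in for do_something, and the file names are made up.

```shell
#!/bin/sh
# Sketch: exercise the Makefile skeleton in a scratch directory.
# Assumption: `tr a-z A-Z` stands in for the real do_something.
workdir=$(mktemp -d)
cd "$workdir" || exit 1

# Three made-up input files.
for n in one two three; do
    echo "content of $n" > "$n.src"
done

# Write the Makefile with printf so the recipe line starts with a real tab.
printf '%s\n' \
    'sources = $(wildcard *.src)' \
    '' \
    'all: $(sources:.src=.tgt)' \
    '' \
    '%.tgt: %.src' > Makefile
printf '\ttr a-z A-Z < $< > $@\n' >> Makefile

# Run up to four recipes at once.
make -j4
ls *.tgt
```

Because the targets are derived from the glob, adding another .src file and rerunning make rebuilds only the missing .tgt file.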

Solution 2

I am not sure what your derived arguments are like. But with GNU Parallel (http://www.gnu.org/software/parallel/) you can do this to run one job per CPU core:

find . | parallel -j+0 'a={}; name=${a##*/}; upper=$(echo "$name" | tr "[:lower:]" "[:upper:]"); echo "$name - $upper"'

If all you want to derive is a changed file extension, the {.} replacement string (the input without its extension) may be handy:

parallel -j+0 lame {} -o {.}.mp3 ::: *.wav
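The quoted command in the find example leans on shell parameter expansion, and {.} has a rough plain-shell analogue. A minimal sketch with a made-up path, to show what each expansion produces without needing GNU Parallel installed:

```shell
#!/bin/sh
# Made-up path, used only for illustration.
a=./music/track01.wav

name=${a##*/}        # strip the longest prefix matching "*/": the base name
stem=${a%.*}         # strip the shortest suffix matching ".*": roughly {.}
upper=$(echo "$name" | tr '[:lower:]' '[:upper:]')

echo "$name - $upper"
echo "$stem.mp3"
```

The same expansions work inside the single-quoted command string passed to parallel, because parallel hands that string to a shell for each job.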

Watch the intro video to GNU Parallel at http://www.youtube.com/watch?v=OpaiGYxkSuQ

Solution 3

Wouldn't using the shell's wait command work for you?

for i in *
do
    do_something "$i" &
done
wait

Your loop executes a job then waits for it, then does the next job. If the above doesn't work for you, then yours might work better if you move pwait after done.
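A small sketch of the point about placement: when wait comes after done, whatever follows it runs only once every background job has exited. The do_something function here is a hypothetical stand-in that sleeps briefly and records its argument in a temporary log file.

```shell
#!/bin/sh
# Hypothetical stand-in for the real do_something.
log=$(mktemp)
do_something() {
    sleep 1
    echo "$1" >> "$log"
}

for i in a b c d; do
    do_something "$i" &     # start each job in the background
done

wait                        # block until all background jobs have exited
finished=$(wc -l < "$log" | tr -d ' ')
echo "DONE after $finished jobs"
```

Note that this form starts every job at once, so by itself it puts no upper bound on the number of concurrent processes.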

Solution 4

Why has nobody mentioned xargs yet?

Assuming you have exactly three arguments,

for i in *.myfiles; do echo $i `derived_params $i` other_params; done | xargs -n 3 -P $PROCS do_something

Otherwise use a delimiter (null is handy for that):

for i in *.myfiles; do echo -n $i `derived_params $i` other_params; echo -ne "\0"; done | xargs -0 -n 1 -P $PROCS do_something

EDIT: for the above to pass separate arguments, each parameter (not just each group) should be followed by a null character, and the number of parameters per invocation should then be given with xargs -n.
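A sketch of the null-delimited variant with three arguments per invocation. It assumes an xargs that supports -0 and -P (GNU and BSD xargs do; POSIX does not require them). The file names, the "params-for-" text and the sh -c command are placeholders for *.myfiles, derived_params and do_something.

```shell
#!/bin/sh
PROCS=2
out=$(mktemp)
export out                  # the sh -c child below reads $out

# Emit three null-terminated arguments per (made-up) file name.
for i in 'a.myfiles' 'b c.myfiles'; do
    printf '%s\0' "$i" "params-for-$i" other_params
done |
    xargs -0 -n 3 -P "$PROCS" \
        sh -c 'echo "$# args: [$1] [$2] [$3]" >> "$out"' sh

sort "$out"
```

Because every argument is null-terminated, a file name containing spaces still arrives as a single argument.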

math

Updated on September 17, 2022

Comments

-

math over 1 year

I want to process many files, and since I have a bunch of cores here I want to do it in parallel:

for i in *.myfiles; do do_something $i `derived_params $i` other_params; done

I know of a Makefile solution, but my commands need the arguments out of the shell globbing list. What I found is:

function pwait() {
    while [ $(jobs -p | wc -l) -ge $1 ]; do
        sleep 1
    done
}

To use it, all one has to do is put & after the jobs and add a pwait call; the parameter gives the number of parallel processes:

for i in *; do
    do_something $i &
    pwait 10
done

But this doesn't work very well: I tried it with a for loop converting many files, but it gave me errors and left jobs undone.

I can't believe that this isn't done yet, since the discussion on the zsh mailing list is so old by now. So do you know any better?

-

JRobert almost 14 years

Similar to this question: superuser.com/questions/153630/… See if that technique works for you.

-

Dennis Williamson almost 14 years

It would be helpful if you posted the error messages.

math almost 14 years

@JRobert: yes, I knew this, but it doesn't actually help, as the makefile approach won't work, as I said! @Dennis: OK, first, I ran top alongside and it showed more than the specified number of processes. Second, it doesn't return to the prompt properly. Third, what I said about it leaving jobs undone was not right: I had just placed an indicator echo "DONE" after the loop, and it was executed before the active jobs had finished. That made me think that jobs weren't done.

-

math almost 14 years

No, with 1 million files I would have 1 million processes running, or am I wrong?

-

Dennis Williamson almost 14 years

@brubelsabs: Well, it would try to do a million processes. You didn't say in your question how many files you needed to process. I would think you'd need to use nested for loops to limit that:

for file in *; do for i in {1..10}; do do_something "$i" & done; wait; done

(untested) That should do ten at a time and wait until all ten of each group is done before starting the next ten. Your loop does one at a time, making the & moot. See the question that JRobert linked to for other options. Search on Stack Overflow for other questions similar to yours (and that one).

Admin over 13 years

If the OP anticipates a million files, then he would have a problem with for i in *. He would have to pass arguments to the loop with a pipe or something. Then, instead of an internal loop, you could run an incrementing counter and run a micro-"wait" every time "$((i % 32))" -eq '0'.
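A minimal sketch of the counter idea from the comment above, reading names from a pipe rather than a glob. The do_something function, the file names and the batch size of 4 are placeholders (the comment suggests 32), and names containing newlines are not handled.

```shell
#!/bin/sh
# Hypothetical stand-in for do_something: record the argument in a log.
log=$(mktemp)
do_something() { echo "$1" >> "$log"; }

max=4   # batch size; the comment above uses 32
# Made-up names fed through a pipe instead of a glob.
printf '%s\n' f1 f2 f3 f4 f5 f6 f7 f8 f9 f10 | {
    i=0
    while IFS= read -r file; do
        do_something "$file" &
        i=$((i + 1))
        # Every $max-th job, pause until the current batch has finished.
        [ "$((i % max))" -eq 0 ] && wait
    done
    wait    # catch the last, partial batch
}
sort "$log"
```

The final wait sits inside the braces because the right-hand side of a pipeline may run in a subshell, and only that subshell can wait for the jobs it started. Each batch finishes completely before the next one starts, so one slow job holds up its whole batch.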

math over 12 years

Yes, in our project someone had the same idea, and it works great even under Windows with MSys.

-

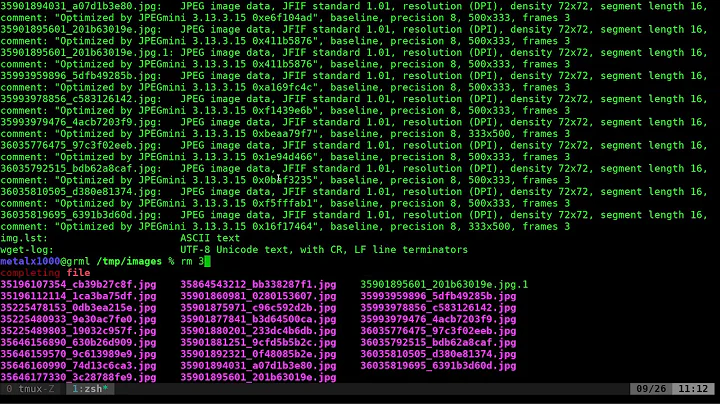

guhur over 2 years

Sounds great, but your video has no content about parallel. I don't understand how you got the pipe content.