Parallel wget in Bash

Solution 1

Rather than pushing wget into the background with & or -b, you can use xargs to the same effect, and better.

The advantage is that xargs synchronizes properly with no extra work. This means that you can safely access the downloaded files (assuming no error occurs): all downloads will have completed (or failed) once xargs exits, and the exit code tells you whether all went well. This is much preferable to busy waiting with sleep and testing for completion manually.

Assuming that URL_LIST is a variable containing all the URLs (it can be constructed with a loop in the OP's example, but could also be a manually generated list), running this:

echo "$URL_LIST" | xargs -n 1 -P 8 wget -q

will pass one argument at a time (-n 1) to wget and run at most 8 wget processes in parallel (-P 8). Quoting "$URL_LIST" keeps the shell from glob-expanding characters such as ? or * in the URLs. xargs returns after the last spawned process has finished, which is just what we wanted to know. No extra trickery needed.
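As a minimal sketch of the approach above, assuming GNU xargs and the 42 example.com pages from the question, the URL list can be built one URL per line in a loop (xargs splits its input on blanks and newlines, so either separator works) and the xargs exit status checked afterwards. The helper name fetch_pages is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: build a newline-separated URL list for the question's pages, then
# let xargs run at most 8 wget processes at once and report overall success.
# fetch_pages is a hypothetical helper name; the URLs come from the question.

fetch_pages() {
  local count=$1 url_list="" i
  for ((i = 1; i <= count; i++)); do
    url_list+="https://www.example.com/page$i.html"$'\n'
  done

  # Quoting "$url_list" avoids glob expansion of characters such as ? or *.
  printf '%s' "$url_list" | xargs -n 1 -P 8 wget -q
  local status=$?

  # GNU xargs exits with 123 if any wget invocation exited with status 1-125.
  if ((status == 0)); then
    echo "all downloads succeeded"
  else
    echo "at least one download failed (xargs exit status $status)" >&2
  fi
  return "$status"
}
```

For example, fetch_pages 42 && ls page*.html lists the downloaded files only if every wget succeeded. A failed download does not stop the others; xargs keeps going and only reports the failure in its exit status.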

The "magic number" of 8 parallel downloads that I've chosen is not set in stone, but it is probably a good compromise. There are two factors in "maximising" a series of downloads:

One is filling "the cable", i.e. utilizing the available bandwidth. Assuming "normal" conditions (the server has more bandwidth than the client), this is already the case with one or at most two downloads. Throwing more connections at the problem will only result in packets being dropped and TCP congestion control kicking in, with N downloads getting asymptotically 1/N of the bandwidth each, to the same net effect (minus the dropped packets, minus window size recovery). Dropped packets are normal in an IP network; this is how congestion control is supposed to work (even with a single connection), and normally the impact is practically zero. However, an unreasonably large number of connections amplifies this effect, so it can become noticeable. In any case, it doesn't make anything faster.

The second factor is connection establishment and request processing. Here, having a few extra connections in flight really helps. The problem one faces is the latency of two round-trips (typically 20-40ms within the same geographic area, 200-300ms inter-continental) plus the odd 1-2 milliseconds that the server actually needs to process the request and push a reply to the socket. This is not a lot of time per se, but multiplied by a few hundred/thousand requests, it quickly adds up.

Having anything from half a dozen to a dozen requests in-flight hides most or all of this latency (it is still there, but since it overlaps, it does not sum up!). At the same time, having only a few concurrent connections does not have adverse effects, such as causing excessive congestion, or forcing a server into forking new processes.
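To put rough numbers on the latency argument, here is a back-of-the-envelope sketch. It assumes, for illustration only, that each request costs a fixed 40 ms of round-trip latency (the upper end of the same-region figure quoted above), that bandwidth and server processing time can be ignored, and that requests in flight overlap perfectly. estimate_ms is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Back-of-the-envelope model of the latency argument (illustrative only).
# Assumptions: each request costs a fixed latency_ms of round-trip time,
# bandwidth and server processing time are ignored, and requests that are
# in flight together overlap perfectly.

estimate_ms() {
  local requests=$1 latency_ms=$2 in_flight=$3
  # Requests complete in "waves" of in_flight; round the wave count up.
  local waves=$(((requests + in_flight - 1) / in_flight))
  echo $((waves * latency_ms))
}

echo "sequential:  $(estimate_ms 1000 40 1) ms"
echo "8 in flight: $(estimate_ms 1000 40 8) ms"
```

The two lines report 40000 ms and 5000 ms: the latency is still paid, but in 125 overlapping waves instead of 1000 serial steps. Real timings will differ, since the model ignores everything except latency.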

Solution 2

Just running the jobs in the background is not a scalable solution: if you are fetching 10000 URLs, you probably only want to fetch a few (say 100) in parallel. GNU Parallel is made for that:

seq 10000 | parallel -j100 wget https://www.example.com/page{}.html

See the man page for more examples: http://www.gnu.org/software/parallel/man.html#example__download_10_images_for_each_of_the_past_30_days
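If GNU Parallel is not installed, a rough xargs counterpart of the one-liner above is possible. This sketch assumes an xargs that supports -P (GNU and BSD xargs both do); -I {} substitutes each number from seq into the URL and runs one command per input line. fetch_numbered is a hypothetical name. Unlike GNU Parallel, xargs does not keep the output of concurrent jobs apart, so wget -q is used to keep the terminal quiet.

```shell
#!/usr/bin/env bash
# Sketch: xargs-only counterpart of "seq 10000 | parallel -j100 wget ...".
# -I {} replaces {} in the command with each input line (one command per
# line); -P caps the number of concurrent wget processes.
# fetch_numbered is a hypothetical helper; the URL pattern is the example's.

fetch_numbered() {
  local count=$1 jobs=${2:-100}
  seq "$count" | xargs -I {} -P "$jobs" wget -q "https://www.example.com/page{}.html"
}
```

For example, fetch_numbered 10000 100 mirrors the GNU Parallel command, and fetch_numbered 42 8 covers the question's case.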

Solution 3

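You can use the -b option:

wget -b "https://www.example.com/page$i.html"

With -b, wget writes its log to wget-log in the current directory (then wget-log.1, and so on). If you don't want log files, add the option -o /dev/null. From wget's help:

-o FILE log messages to FILE.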
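Applied to the loop from the question, a minimal sketch of the -b approach might look like this (start_background_downloads is a hypothetical name). Because wget -b forks and the original process exits immediately, the loop returns at once, and the shell's wait builtin cannot be used to find out when the downloads have finished.

```shell
#!/usr/bin/env bash
# Sketch: start all 42 downloads from the question with wget -b.
# -b makes wget fork and return immediately; -o /dev/null discards the log
# that would otherwise be written to wget-log, wget-log.1, ... in the
# current directory. The detached downloads are not children of this
# shell, so "wait" cannot be used to block until they finish.

start_background_downloads() {
  local i
  for i in {1..42}; do
    wget -b -o /dev/null "https://www.example.com/page$i.html"
  done
}
```

This is the main drawback compared with the xargs approach: there is no exit status to check and no point at which all files are known to be complete.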

Solution 4

Adding an ampersand to a command makes it run in the background:

for i in {1..42}
do
    wget "https://www.example.com/page$i.html" &
done
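Plain & gives no way to know when the downloads are done and no limit on how many run at once. A minimal sketch of both fixes, assuming bash 4.3 or later for wait -n: the plain wait builtin blocks until all background jobs have exited, and wait -n blocks until any one of them has. The function name fetch_bounded and its max_jobs parameter (default 8, echoing Solution 1) are illustrative, not part of the original answer.

```shell
#!/usr/bin/env bash
# Sketch: the loop above, plus a concurrency cap and a final wait.
# Requires bash 4.3+ for "wait -n". fetch_bounded and max_jobs are
# hypothetical names; the URLs are the ones from the question.

fetch_bounded() {
  local max_jobs=${1:-8} running=0 i
  for i in {1..42}; do
    if ((running >= max_jobs)); then
      wait -n                  # block until any one background download exits
      running=$((running - 1))
    fi
    wget -q "https://www.example.com/page$i.html" &
    running=$((running + 1))
  done
  wait                         # block until every remaining download has exited
}
```

Call it as fetch_bounded 8; once it returns, all 42 wget processes have exited. Unlike xargs, this version does not report whether any individual download failed.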

Solution 5

wget version 2 (wget2) supports multiple parallel connections.

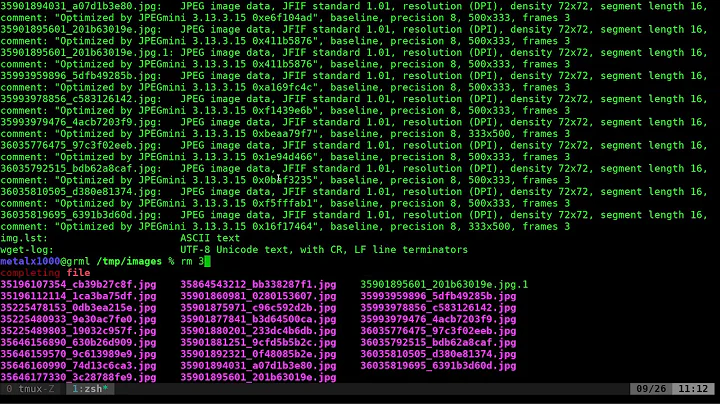


Jonathon Vandezande

I have bounced around the fields of computational chemistry and polymer chemistry, and now work as a computational materials scientist at the biotech startup Zymergen.

Updated on July 05, 2022

Comments

Jonathon Vandezande almost 2 years

I am getting a bunch of relatively small pages from a website and was wondering if I could somehow do it in parallel in Bash. Currently my code looks like this, but it takes a while to execute (I think what is slowing me down is the latency in the connection).

for i in {1..42}
do
    wget "https://www.example.com/page$i.html"
done

I have heard of using xargs, but I don't know anything about that and the man page is very confusing. Any ideas? Is it even possible to do this in parallel? Is there another way I could go about attacking this?

SineSwiper over 11 years

This is definitely the best way, since it uses xargs, a universal tool, and this method can be applied to many other commands.

user7610 almost 11 years

When downloading multiple files over HTTP, wget can reuse the HTTP connection thanks to the Keep-Alive mechanism. When you launch a new process for each file, this mechanism cannot be used and the connection (TCP three-way handshake) has to be established again and again. So I suggest bumping up the -n parameter to about 20 or so. In its default configuration, the Apache HTTP server will serve only up to 100 requests in one kept-alive session, so there is probably no point in going over a hundred here.

uzsolt over 10 years

No, it's ok - check man page ('-o logfile...').

ipruthi over 10 years

Sorry, I did not read correctly. I thought you said "if you do not want output files, add option -o". Because I did that and ended up with hundreds of thousands of files in /root. Thanks for clarifying.

Ricky almost 10 years

Great answer, but what if I want to pass two variable values to wget? I want to specify the destination path as well as the URL. Is this still possible with the xargs technique?

Damon almost 10 years

@Ricky: xargs just forwards everything after the options and the executable name to the executable, so that should work.

Ricky almost 10 years

@Damon Putting this in a loop doesn't result in anything for me:

xargs -n 1 -P 8 wget -P $localFileURL $cleanURL

Justin about 8 years

You good sir have the correct answer. Here is what I went with:

URLS=$(cat ./urls) && echo "$URLS" | xargs -n 1 -P 8 wget --no-cache --no-cookies --timeout=3 --retry-connrefused --random-wait --user-agent=Fetchr/1.3.0

DomainsFeatured over 7 years

I know this is a bit old, but is there any way to make the outputs group together? As in, group the output by parallel? Currently, the output goes by whatever ran first, so the information can be chopped up.

Ole Tange over 7 years

@Ricky With GNU Parallel you can: parallel wget -O {1} {2} ::: file1 file2 :::+ url1 url2

Ole Tange over 7 years

@DomainsFeatured There is no option for xargs that does this. This is one of the reasons for developing GNU Parallel in the first place.

Hitechcomputergeek almost 7 years

Why use -n 1 and fork a process for every single item? Just leave it out, using only -P 8, and xargs will cram as many items as can fit into the command line for 8 different simultaneous wget processes.

Hitechcomputergeek almost 7 years

@Justin You could just use cat ./urls | xargs -n 1 -P 8 wget [...], or even better xargs -a ./urls -n 1 -P 8 wget [...], instead of reading the file into a variable.

Jim over 5 years

How do I build the URL_LIST in the for loop variable so xargs can parse it as individual URLs? Do I add " " or "\n"?

ka3ak over 5 years

Sorry, I have nothing to download now, but I'll have to do it in the future for sure. Assuming I run seq 30 | parallel -j5 mkdir /tmp/{}, should it create 30 folders /tmp/1, /tmp/2, etc.? If so, it doesn't do it on my system.

ka3ak over 5 years

What wget version is used in the example? From my wget's man page (1.19.4): -P prefix --directory-prefix=prefix Set directory prefix to prefix. The directory prefix is the directory where all other files and subdirectories will be saved to, i.e. the top of the retrieval tree. The default is . (the current directory).

Ole Tange over 5 years

@ka3ak You may have found a bug. Please follow: gnu.org/software/parallel/man.html#REPORTING-BUGS

ka3ak over 5 years

@OleTange It seems there was another tool with the same name which was pre-installed on my system. It even had the option -j for jobs. I just ran sudo apt install parallel to install the right one.

Blademaster almost 3 years

@Damon Is there a way to show the progress of this process while running?