Pass parameters to Airflow Experimental REST api when creating dag run


Solution 1

Judging from the source code, it appears that parameters can be passed to the DAG run.

If the body of the HTTP request contains JSON, and that JSON has a top-level key "conf", the value of the "conf" key is passed as configuration to trigger_dag. More on how this works can be found here.
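To make the described behaviour concrete, here is a minimal sketch of what the receiving side has to do with such a body. It is not Airflow's actual source: the function name parse_dag_run_body is hypothetical, and it assumes, as this answer states, that the "conf" value arrives as a JSON-encoded string that must be decoded a second time.

```python
import json
from typing import Optional


def parse_dag_run_body(raw_body: bytes) -> Optional[dict]:
    """Hypothetical helper (not Airflow code): extract the run configuration.

    Assumes the top-level JSON object may contain a "conf" key whose value
    is itself a JSON document encoded as a string (JSON-in-JSON).
    """
    data = json.loads(raw_body)  # first decode: the outer envelope
    conf = data.get("conf")
    if conf is None:
        return None  # no configuration supplied
    if not isinstance(conf, str):
        raise ValueError('"conf" must be a JSON-encoded string')
    return json.loads(conf)  # second decode: the inner document


body = b'{"conf":"{\\"key\\":\\"value\\"}"}'
print(parse_dag_run_body(body))  # {'key': 'value'}
```

The two json.loads calls are the point of the sketch: the outer body and the inner "conf" string are separate JSON documents.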

Note that the value of the "conf" key must be a string, e.g.:

curl -X POST \
  http://localhost:8080/api/experimental/dags/<DAG_ID>/dag_runs \
  -H 'Cache-Control: no-cache' \
  -H 'Content-Type: application/json' \
  -d '{"conf":"{\"key\":\"value\"}"}'
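For readers calling the endpoint from Python rather than curl, the following sketch builds the same request using only the standard library. The DAG id example_dag is a placeholder (an assumption, like <DAG_ID> in the curl command), and the request is only constructed here; passing it to urllib.request.urlopen would actually send it to a running webserver.

```python
import json
import urllib.request

DAG_ID = "example_dag"  # placeholder: substitute your own DAG id
url = f"http://localhost:8080/api/experimental/dags/{DAG_ID}/dag_runs"

# The inner configuration is serialized first, so "conf" holds a string...
conf = json.dumps({"key": "value"}, separators=(",", ":"))
# ...and then the envelope containing that string is serialized again.
body = json.dumps({"conf": conf}, separators=(",", ":")).encode("utf-8")

req = urllib.request.Request(
    url,
    data=body,
    headers={"Content-Type": "application/json", "Cache-Control": "no-cache"},
    method="POST",
)

print(body.decode("utf-8"))  # {"conf":"{\"key\":\"value\"}"}
# urllib.request.urlopen(req) would send the request.
```

The compact separators are only there so the printed body matches the curl -d argument byte for byte; the server should accept either spacing.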

Solution 2

I had the same issue. The "conf" value must be a string:

curl -X POST \
  http://localhost:8080/api/experimental/dags/<DAG_ID>/dag_runs \
  -H 'Cache-Control: no-cache' \
  -H 'Content-Type: application/json' \
  -d '{"conf":"{\"key\":\"value\"}"}'

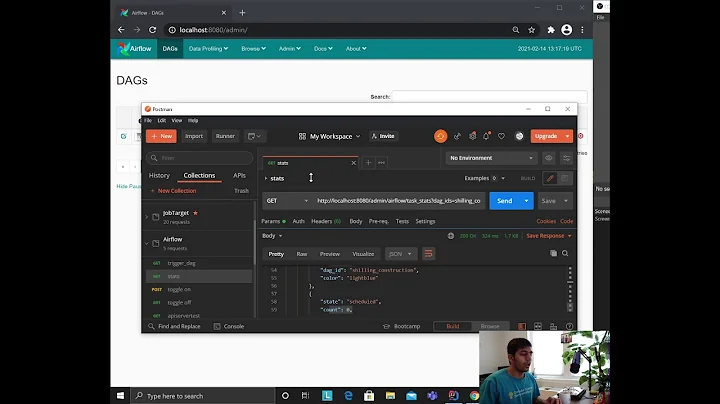


Author: Peter Berg

Updated on September 15, 2022

Comments

Peter Berg, over 1 year ago: Looks like Airflow has an experimental REST API that allows users to create DAG runs with an HTTP POST request. This is awesome.

Is there a way to pass parameters via HTTP when creating a DAG run? Judging from the official docs, found here, the answer seems to be "no", but I'm hoping I'm wrong.


Nabin, over 5 years ago: Saved my day :)


mildewey, over 4 years ago: I still had trouble understanding. For future readers: this does NOT mean that the payload is just a regular JSON dictionary. It must be a JSON dictionary with one key, "conf", whose value is a string that is itself valid JSON, i.e. JSON-in-JSON. In my own (Python) code, that meant something like this:

payload = dict(conf=json.dumps(dict(key="value")))
requests.post(url, json=payload, headers=headers)
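To see why the inner json.dumps matters, the sketch below compares the body produced with and without it, using only the standard library. The payload contents are illustrative; per the answers above, the experimental endpoint expects the second form, not the first.

```python
import json

conf = {"key": "value"}

# Wrong for this endpoint: "conf" is a nested JSON object.
wrong = json.dumps({"conf": conf})
# Right: "conf" is a string that itself contains JSON (JSON-in-JSON).
right = json.dumps({"conf": json.dumps(conf)})

print(wrong)  # {"conf": {"key": "value"}}
print(right)  # {"conf": "{\"key\": \"value\"}"}

# Decoding the correct body twice recovers the original dictionary.
assert json.loads(json.loads(right)["conf"]) == conf
```

The escaped quotes in the second line of output are the visible sign that "conf" carries a string rather than an object.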