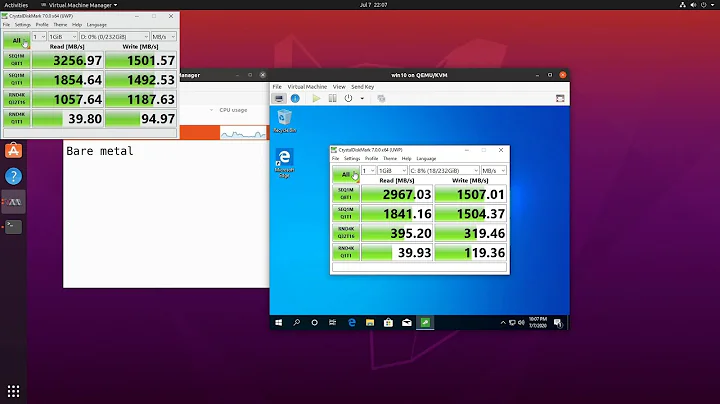

Performance comparison of e1000 and virtio-pci drivers

You performed a bandwidth test, which does not stress PCI.

You need to simulate an environment with many concurrent sessions; that is where you should see a difference.

Perhaps running iperf with `-P 400` (400 parallel client streams) would simulate that kind of test.
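With `-P 400`, iperf prints one report line per stream plus a `[SUM]` line, so a single bandwidth figure is no longer the whole story. The Python sketch below is a hypothetical helper (not part of iperf) that extracts the per-stream and aggregate rates from iperf2's text output so the `e1000` and `virtio-pci` runs can be compared side by side. It assumes the default iperf2 report format seen in the question and has not been checked against iperf3 or other `-f` settings.

```python
import re

# Hypothetical parser; assumes iperf2's default report lines, e.g.
#   "[  3]  0.0-30.0 sec   126 MBytes  35.4 Mbits/sec"
#   "[SUM]  0.0-30.0 sec   ..."   (printed when -P > 1)
REPORT_RE = re.compile(
    r"^\[\s*(?P<id>\d+|SUM)\]\s+[\d.]+-\s*[\d.]+\s+sec\s+"
    r"[\d.]+\s+[KMG]?Bytes\s+(?P<bw>[\d.]+)\s+(?P<unit>[KMG]?bits/sec)"
)

# iperf2 uses decimal (1000-based) units for bits.
UNIT_TO_MBIT = {"bits/sec": 1e-6, "Kbits/sec": 1e-3, "Mbits/sec": 1.0, "Gbits/sec": 1e3}


def per_stream_mbit(report: str) -> dict[str, float]:
    """Map stream ID (or "SUM") to Mbit/s.

    If interval reports (-i) are present, later lines overwrite earlier ones,
    so each ID keeps its last line, which is the whole-run summary.
    """
    rates: dict[str, float] = {}
    for line in report.splitlines():
        m = REPORT_RE.match(line.strip())
        if m:
            rates[m["id"]] = float(m["bw"]) * UNIT_TO_MBIT[m["unit"]]
    return rates


def aggregate_mbit(report: str) -> float:
    """Prefer iperf's own [SUM] line; otherwise add up the individual streams."""
    rates = per_stream_mbit(report)
    if "SUM" in rates:
        return rates["SUM"]
    return sum(rates.values())
```

With `-d`, as in the question, the stream IDs cover both directions, so their sum is a bidirectional total. If `-d` is combined with `-P`, iperf2 may print a `[SUM]` line per direction and this sketch keeps only the last one, so running the concurrency test one direction at a time is simpler.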

comeback4you

Updated on September 18, 2022

Comments

comeback4you (almost 2 years ago): I made the following setup to compare the performance of the `virtio-pci` and `e1000` drivers:

I expected to see much higher throughput with `virtio-pci` than with `e1000`, but they performed identically.

Test with `virtio-pci` (`192.168.0.126` is configured on `T60` and `192.168.0.129` on `PC1`):

    root@PC1:~# grep hype /proc/cpuinfo
    flags : fpu de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pse36 clflush mmx fxsr sse sse2 syscall nx lm rep_good nopl pni vmx cx16 x2apic hypervisor lahf_lm tpr_shadow vnmi flexpriority ept vpid
    root@PC1:~# lspci -s 00:03.0 -v
    00:03.0 Ethernet controller: Red Hat, Inc Virtio network device
            Subsystem: Red Hat, Inc Device 0001
            Physical Slot: 3
            Flags: bus master, fast devsel, latency 0, IRQ 11
            I/O ports at c000 [size=32]
            Memory at febd1000 (32-bit, non-prefetchable) [size=4K]
            Expansion ROM at feb80000 [disabled] [size=256K]
            Capabilities: [40] MSI-X: Enable+ Count=3 Masked-
            Kernel driver in use: virtio-pci
    root@PC1:~# iperf -c 192.168.0.126 -d -t 30 -l 64
    ------------------------------------------------------------
    Server listening on TCP port 5001
    TCP window size: 85.3 KByte (default)
    ------------------------------------------------------------
    ------------------------------------------------------------
    Client connecting to 192.168.0.126, TCP port 5001
    TCP window size: 85.0 KByte (default)
    ------------------------------------------------------------
    [  3] local 192.168.0.129 port 41573 connected with 192.168.0.126 port 5001
    [  5] local 192.168.0.129 port 5001 connected with 192.168.0.126 port 44480
    [ ID] Interval       Transfer     Bandwidth
    [  3]  0.0-30.0 sec   126 MBytes  35.4 Mbits/sec
    [  5]  0.0-30.0 sec   126 MBytes  35.1 Mbits/sec
    root@PC1:~#

Test with `e1000` (`192.168.0.126` is configured on `T60` and `192.168.0.129` on `PC1`):

    root@PC1:~# grep hype /proc/cpuinfo
    flags : fpu de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pse36 clflush mmx fxsr sse sse2 syscall nx lm rep_good nopl pni vmx cx16 x2apic hypervisor lahf_lm tpr_shadow vnmi flexpriority ept vpid
    root@PC1:~# lspci -s 00:03.0 -v
    00:03.0 Ethernet controller: Intel Corporation 82540EM Gigabit Ethernet Controller (rev 03)
            Subsystem: Red Hat, Inc QEMU Virtual Machine
            Physical Slot: 3
            Flags: bus master, fast devsel, latency 0, IRQ 11
            Memory at febc0000 (32-bit, non-prefetchable) [size=128K]
            I/O ports at c000 [size=64]
            Expansion ROM at feb80000 [disabled] [size=256K]
            Kernel driver in use: e1000
    root@PC1:~# iperf -c 192.168.0.126 -d -t 30 -l 64
    ------------------------------------------------------------
    Server listening on TCP port 5001
    TCP window size: 85.3 KByte (default)
    ------------------------------------------------------------
    ------------------------------------------------------------
    Client connecting to 192.168.0.126, TCP port 5001
    TCP window size: 85.0 KByte (default)
    ------------------------------------------------------------
    [  3] local 192.168.0.129 port 42200 connected with 192.168.0.126 port 5001
    [  5] local 192.168.0.129 port 5001 connected with 192.168.0.126 port 44481
    [ ID] Interval       Transfer     Bandwidth
    [  3]  0.0-30.0 sec   126 MBytes  35.1 Mbits/sec
    [  5]  0.0-30.0 sec   126 MBytes  35.1 Mbits/sec
    root@PC1:~#

With large packets, the bandwidth was ~900 Mbps with both drivers.

When does the theoretically higher performance of `virtio-pci` come into play? Why did I see equal performance with `e1000` and `virtio-pci`?
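A quick consistency check on the numbers in the question: iperf2 reports Transfer in 1024-based MBytes and Bandwidth in 1000-based Mbit/s, so 126 MBytes in 30 s comes to about 35 Mbit/s, matching both drivers' results. With `-l 64`, that rate corresponds to roughly 69,000 64-byte application writes per second. The Python below only does this back-of-the-envelope arithmetic (the function names are made up for illustration); because TCP can coalesce small writes into larger segments, it does not tell you how many packets actually crossed the emulated NIC.

```python
# Back-of-the-envelope arithmetic for the iperf2 results in the question.
# Assumes iperf2's units: Transfer in 1024-based MBytes, Bandwidth in 1000-based Mbits/sec.
MIB = 1024 * 1024


def mbit_per_s(transfer_mbytes: float, seconds: float) -> float:
    """Convert an iperf2 Transfer figure over an interval into decimal Mbit/s."""
    return transfer_mbytes * MIB * 8 / seconds / 1e6


def writes_per_s(rate_mbit: float, write_len: int) -> float:
    """Application write() calls per second needed to sustain rate_mbit with -l write_len."""
    return rate_mbit * 1e6 / 8 / write_len


rate = mbit_per_s(126, 30.0)
print(f"{rate:.1f} Mbit/s")                       # 35.2 Mbit/s
print(f"{writes_per_s(rate, 64):,.0f} writes/s")  # 68,813 writes/s
```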

drHogan (over 7 years ago): Could you watch the CPU usage of the host and the VM while running this benchmark? Maybe the non-virtio driver does need more CPU, but your CPU is fast enough.

comeback4you (over 7 years ago): I did watch the CPU usage, and for both drivers it was pretty much the same. I executed `iperf -c 192.168.0.126 -d -t 300 -l 64; uptime` with both drivers. With `e1000` the result was `load average: 0.04, 0.07, 0.05`, and with `virtio-pci` it was `load average: 0.23, 0.11, 0.05`. CPU usage on the host machine was also basically the same (I checked this with `top`).
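Load average counts tasks that are runnable or in uninterruptible sleep; it is not a measure of CPU time, so two close values say little about how much CPU each driver costs. A more direct comparison, along the lines drHogan suggested, is the share of non-idle time in the aggregate `cpu` line of `/proc/stat`, sampled before and after the same `iperf` run, both in the guest and on the host. A minimal Python sketch of that calculation follows; the function names are hypothetical, and the field layout is the one documented in proc(5).

```python
# Sketch: CPU busy percentage between two snapshots of /proc/stat.
# Field order of the aggregate "cpu" line (see proc(5)):
#   user nice system idle iowait irq softirq steal guest guest_nice
# guest/guest_nice are already included in user/nice, so only the first 8 are summed.


def cpu_times(stat_text: str) -> tuple[int, int]:
    """Return (busy, total) jiffies from the aggregate 'cpu' line."""
    for line in stat_text.splitlines():
        fields = line.split()
        if fields and fields[0] == "cpu":
            values = [int(v) for v in fields[1:9]]
            values += [0] * (8 - len(values))  # older kernels print fewer columns
            idle = values[3] + values[4]       # idle + iowait
            total = sum(values)
            return total - idle, total
    raise ValueError("no aggregate 'cpu' line in /proc/stat text")


def cpu_busy_percent(before: str, after: str) -> float:
    """Percentage of non-idle CPU time between two /proc/stat snapshots."""
    busy0, total0 = cpu_times(before)
    busy1, total1 = cpu_times(after)
    return 100.0 * (busy1 - busy0) / (total1 - total0)


def read_proc_stat(path: str = "/proc/stat") -> str:
    """Read a snapshot of /proc/stat (Linux only)."""
    with open(path) as f:
        return f.read()
```

Take one snapshot with `read_proc_stat()` just before starting the 300-second iperf run and another right after it finishes, then compare `cpu_busy_percent` across the two drivers.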

phk (over 7 years ago): Number of connections? Number of IPs?

drHogan (over 7 years ago): Another guess: maybe virtio is able to pass through "hardware features" of the host's NIC (like segmentation and checksum offloading) if the host NIC supports them. In other words, if the host NIC does not have such advanced features, then virtio cannot be better than e1000.
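This guess can be checked directly: `ethtool -k <iface>` lists which offloads (checksumming, scatter-gather, TSO, GRO and so on) the driver in use exposes. Saving that output in the guest once under `e1000` and once under `virtio-pci`, and likewise on the host NIC, shows whether the two drivers differ in enabled offloads in this particular setup. The Python helpers below are a hypothetical sketch for diffing two such outputs; they assume ethtool's usual `name: on|off [fixed]` line format, and the short feature list is an illustrative choice, not an exhaustive one.

```python
# Hypothetical helpers for comparing `ethtool -k <iface>` output between drivers.
# Assumes ethtool's usual line format: "name: on|off [fixed]" (sub-features are indented).

KEY_OFFLOADS = (
    "tx-checksumming",
    "rx-checksumming",
    "scatter-gather",
    "tcp-segmentation-offload",
    "generic-receive-offload",
)


def parse_offloads(ethtool_k: str) -> dict[str, tuple[bool, bool]]:
    """Map feature name to (enabled, fixed)."""
    features: dict[str, tuple[bool, bool]] = {}
    for line in ethtool_k.splitlines():
        name, sep, rest = line.strip().partition(":")
        if not sep or not rest.strip():
            continue  # skips the "Features for <iface>:" header and blank lines
        features[name] = (rest.split()[0] == "on", "[fixed]" in rest)
    return features


def offload_diff(output_a: str, output_b: str) -> dict[str, tuple[bool, bool]]:
    """For KEY_OFFLOADS, return {feature: (enabled_in_a, enabled_in_b)} where they differ."""
    a, b = parse_offloads(output_a), parse_offloads(output_b)
    diff = {}
    for name in KEY_OFFLOADS:
        enabled_a = a.get(name, (False, False))[0]
        enabled_b = b.get(name, (False, False))[0]
        if enabled_a != enabled_b:
            diff[name] = (enabled_a, enabled_b)
    return diff
```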

phk (over 7 years ago): @rudimeier If that were the case, I guess it would only apply to traffic going through the host's NICs.

sean (almost 6 years ago): Can you please post your qemu config?
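Since the question does not show how the VM was started, here is a hypothetical Python helper for building the QEMU network options so that the two runs differ only in the emulated NIC model (`e1000` versus `virtio-net-pci`, the device the guest's `virtio-pci` driver binds to). Whether KVM and vhost-net are in use also affects the result, so both are explicit parameters. `-enable-kvm`, `-netdev tap,...,vhost=on` and `-device <model>,netdev=<id>` are standard QEMU options; the tap interface name and `script=no,downscript=no` are assumptions about a bridge configured outside QEMU.

```python
# Hypothetical helper: QEMU arguments that differ only in the emulated NIC model.
# -enable-kvm, -netdev tap and -device are standard QEMU options; the tap name and
# script=no/downscript=no are assumptions about a bridge set up outside QEMU.

SUPPORTED_MODELS = ("e1000", "virtio-net-pci")


def qemu_nic_args(model: str, tap: str = "tap0", vhost: bool = False, kvm: bool = True) -> list[str]:
    if model not in SUPPORTED_MODELS:
        raise ValueError(f"unsupported NIC model: {model}")
    netdev = f"tap,id=net0,ifname={tap},script=no,downscript=no"
    if vhost:
        # vhost-net moves the virtio-net data path into the host kernel; it does not apply to e1000.
        if model != "virtio-net-pci":
            raise ValueError("vhost-net only accelerates virtio-net devices")
        netdev += ",vhost=on"
    args = ["-enable-kvm"] if kvm else []
    args += ["-netdev", netdev, "-device", f"{model},netdev=net0"]
    return args
```

The returned list would be appended to the rest of the VM's `qemu-system-x86_64` command line, once with `"e1000"` and once with `"virtio-net-pci"`.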

ceving (almost 5 years ago): See here for another comparison: linux-kvm.org/page/Using_VirtIO_NIC

jrglndmnn (over 3 years ago): Did you install the kvm kernel module, or are you running bare qemu?
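Whether QEMU runs with KVM or in pure software emulation (TCG) has a large effect on guest performance in general, so it is worth confirming on the host. KVM needs the `kvm` module plus `kvm_intel` or `kvm_amd`, and exposes `/dev/kvm`; modules compiled into the kernel do not appear in `/proc/modules`, so `/dev/kvm` is the more reliable signal. Even with KVM available, plain `qemu-system-x86_64` generally only uses it when started with `-enable-kvm` or an equivalent accelerator option. A minimal Python sketch, with hypothetical function names:

```python
import os

# Hypothetical check: is KVM usable on the host, or would QEMU fall back to TCG emulation?
# /proc/modules lines start with the module name, e.g. "kvm_intel 245760 0 - Live 0x...".
VENDOR_MODULES = {"kvm_intel", "kvm_amd"}


def loaded_modules(proc_modules: str) -> set[str]:
    """Module names from the text of /proc/modules."""
    return {line.split()[0] for line in proc_modules.splitlines() if line.strip()}


def kvm_status(proc_modules: str, dev_kvm_exists: bool) -> dict[str, object]:
    """Summarize KVM state; modules built into the kernel do not appear in /proc/modules."""
    mods = loaded_modules(proc_modules)
    return {
        "dev_kvm": dev_kvm_exists,
        "kvm_module": "kvm" in mods,
        "vendor_modules": sorted(mods & VENDOR_MODULES),
    }


def host_kvm_status() -> dict[str, object]:
    """Run the check against the live host (Linux only)."""
    with open("/proc/modules") as f:
        return kvm_status(f.read(), os.path.exists("/dev/kvm"))
```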
