Possible to get PrimaryKey IDs back after a SQL BulkCopy?

Solution 1

In that scenario, I would use SqlBulkCopy to insert into a staging table (i.e. one that looks like the data I want to import, but isn't part of the main transactional tables), and then at the DB do an INSERT/SELECT to move the data into the first real table.

Now I have two choices depending on the server version: I could do a second INSERT/SELECT into the second real table, or (on SQL Server 2005 and later, where the OUTPUT clause exists) I could use INSERT/OUTPUT to do the second insert, using the identity values from the first table.

For example:

```sql
-- dummy schema
CREATE TABLE TMP (data varchar(max))
CREATE TABLE [Table1] (id int not null identity(1,1), data varchar(max))
CREATE TABLE [Table2] (id int not null identity(1,1), id1 int not null, data varchar(max))

-- imagine this is the SqlBulkCopy
INSERT TMP VALUES('abc')
INSERT TMP VALUES('def')
INSERT TMP VALUES('ghi')

-- now push into the real tables
INSERT [Table1]
OUTPUT INSERTED.id, INSERTED.data INTO [Table2](id1,data)
SELECT data FROM TMP
```
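A minimal C# sketch of the client side of this approach, assuming the TMP/Table1/Table2 dummy schema above. `BuildStagingTable` is a hypothetical helper that shapes the rows for SqlBulkCopy. `PushSql` is a variant of the push step that sends OUTPUT into a table variable first and then copies from it into Table2; this sidesteps the restriction (raised in the comments) that the target of OUTPUT INTO cannot be on either side of a foreign key.

```csharp
using System.Collections.Generic;
using System.Data;

public static class StagingPush
{
    // Push step: capture the new identity values in a table variable (which has no FK),
    // then copy them into Table2. Assumes the dummy schema TMP/Table1/Table2.
    public const string PushSql = @"
DECLARE @NewIds TABLE (id int NOT NULL, data varchar(max));

INSERT [Table1] (data)
OUTPUT INSERTED.id, INSERTED.data INTO @NewIds (id, data)
SELECT data FROM TMP;

INSERT [Table2] (id1, data)
SELECT id, data FROM @NewIds;";

    // Hypothetical helper: builds an in-memory table shaped like TMP,
    // ready to hand to SqlBulkCopy.WriteToServer.
    public static DataTable BuildStagingTable(IEnumerable<string> values)
    {
        var table = new DataTable("TMP");
        table.Columns.Add("data", typeof(string));
        foreach (var value in values)
        {
            table.Rows.Add(value);
        }
        return table;
    }
}
```

In real code you would set `DestinationTableName = "TMP"` on a SqlBulkCopy, call `WriteToServer` with the result of `BuildStagingTable`, and then run `PushSql` with `SqlCommand.ExecuteNonQuery` on the same connection and transaction.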

Solution 2

If your app allows it, you could add another column in which you store an identifier of the bulk insert (a GUID, for example). You would set this ID explicitly on every row you send.

Then, after the bulk insert, you just select the rows that have that identifier.
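A minimal sketch of the batch-identifier idea, assuming the destination table has an extra uniqueidentifier column. The column name `BatchId`, the table name `MyTable` and the helper `TagWithBatchId` are hypothetical. Every row in the DataTable gets the same Guid before `WriteToServer`, and the follow-up query selects by that value.

```csharp
using System;
using System.Data;

public static class BatchTagging
{
    // Follow-up query: read back the generated identities for one batch only.
    // MyTable, Id, Name and BatchId are placeholder names.
    public const string SelectBatchSql =
        "SELECT Id, Name FROM MyTable WHERE BatchId = @BatchId;";

    // Adds a BatchId column (if missing) and stamps every row with the same value.
    public static void TagWithBatchId(DataTable table, Guid batchId)
    {
        if (!table.Columns.Contains("BatchId"))
        {
            table.Columns.Add("BatchId", typeof(Guid));
        }
        foreach (DataRow row in table.Rows)
        {
            row["BatchId"] = batchId;
        }
    }
}
```

Generate the value with `Guid.NewGuid()` once per bulk insert and pass the same value as the `@BatchId` parameter of the select. Unlike reading back the last N rows, this keeps returning only your rows even when other sessions insert at the same time.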

Solution 3

I had the same issue where I had to get back ids of the rows inserted with SqlBulkCopy. My ID column was an identity column.

Solution:

I inserted 500+ rows with bulk copy, and then selected them back with the following query:

```sql
SELECT TOP (@InsertedRowCount) *
FROM MyTable
ORDER BY ID DESC
```

This query returns the rows I just inserted, with their IDs. In my case I had another unique column, so I selected that column and the ID, then mapped them with an IDictionary like so:

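```csharp
IDictionary<string, int> mymap = new Dictionary<string, int>();

// For each row returned by the query above: key = the other unique column, value = its ID.
mymap[Name] = ID;
```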

Hope this helps.
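A slightly fuller sketch of the read-back-and-map step, assuming the rows have already been read from the `TOP (@InsertedRowCount)` query as (Name, Id) pairs; the type and method names are hypothetical. Keep in mind the caveat raised in the comments: the last N rows are only your rows if nobody else inserted into the table in the meantime. The map can then be used to fill the FK of the child rows (Table B in the question).

```csharp
using System;
using System.Collections.Generic;

public static class IdMapper
{
    // Builds Name -> Id from the rows returned by the read-back query.
    // Dictionary.Add throws ArgumentException if a Name repeats, which would
    // mean the "unique column" assumption does not hold.
    public static Dictionary<string, int> BuildMap(IEnumerable<(string Name, int Id)> rows)
    {
        var map = new Dictionary<string, int>();
        foreach (var (name, id) in rows)
        {
            map.Add(name, id);
        }
        return map;
    }

    // Looks up the parent identity for each child key, e.g. to fill Table B's FK column.
    public static List<int> ResolveParentIds(IEnumerable<string> childKeys,
                                             IReadOnlyDictionary<string, int> map)
    {
        var ids = new List<int>();
        foreach (var key in childKeys)
        {
            if (!map.TryGetValue(key, out var id))
            {
                throw new KeyNotFoundException($"No inserted row found for '{key}'.");
            }
            ids.Add(id);
        }
        return ids;
    }
}
```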

Solution 4

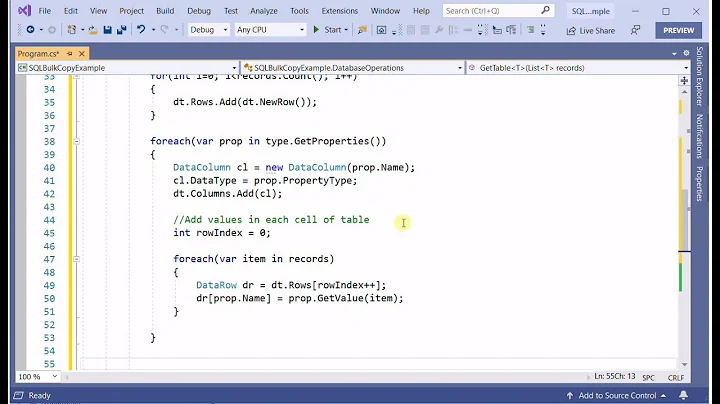

My approach is similar to what RiceRiceBaby described, except for one important addition: the call that retrieves MAX(Id) needs to be part of the same transaction as the call to SqlBulkCopy.WriteToServer. Otherwise, someone else may insert between the two calls, and that would make your IDs incorrect. Here is my code:

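```csharp
public static void BulkInsert<T>(List<ColumnInfo> columnInfo, List<T> data,
    string destinationTableName, SqlConnection conn = null, string idColumn = "Id")
{
    // NLogger, ColumnInfo, ConvertToDataTable and _connectionString are the author's own helpers.
    NLogger logger = new NLogger();

    // Only open (and later close) a connection if the caller didn't pass one in.
    var closeConn = false;
    if (conn == null)
    {
        closeConn = true;
        conn = new SqlConnection(_connectionString);
        conn.Open();
    }

    // Serializable isolation is what this approach relies on to keep other sessions
    // from inserting between the MAX(Id) read and the bulk write (see the comments).
    SqlTransaction tran = conn.BeginTransaction(System.Data.IsolationLevel.Serializable);
    try
    {
        // Table/column names are interpolated, so they must come from trusted code, not user input.
        int maxId = 0;
        using (var command = new SqlCommand(
            $"SELECT MAX({idColumn}) FROM {destinationTableName};", conn, tran))
        {
            var id = command.ExecuteScalar();
            if (id != null && id != DBNull.Value)
            {
                maxId = Convert.ToInt32(id);
            }
        }

        // Pre-assign consecutive IDs; KeepIdentity makes SQL Server keep these values.
        data.ForEach(d => d.GetType().GetProperty(idColumn).SetValue(d, ++maxId));

        var dt = ConvertToDataTable(columnInfo, data);

        // SqlBulkCopy is IDisposable, so dispose it when done.
        using (var sbc = new SqlBulkCopy(conn, SqlBulkCopyOptions.KeepIdentity, tran))
        {
            sbc.DestinationTableName = destinationTableName;
            foreach (System.Data.DataColumn dc in dt.Columns)
            {
                sbc.ColumnMappings.Add(dc.ColumnName, dc.ColumnName);
            }
            sbc.WriteToServer(dt);
        }

        tran.Commit();
    }
    catch (Exception ex)
    {
        tran.Rollback();
        logger.Write(LogLevel.Error,
            $"An error occurred while performing a bulk insert into table {destinationTableName}. " +
            $"The entire transaction has been rolled back.{Environment.NewLine}{ex}");
        throw; // rethrow without resetting the stack trace (unlike "throw ex;")
    }
    finally
    {
        tran.Dispose();
        if (closeConn)
        {
            conn.Close(); // also runs on failure, not only after a successful commit
        }
    }
}
```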
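The ID pre-assignment step in isolation, as a testable sketch: starting from the MAX(Id) read inside the serializable transaction, each object gets the next consecutive value, and `SqlBulkCopyOptions.KeepIdentity` then makes SQL Server store those values instead of generating its own. The helper name `AssignSequentialIds` and the setter delegate (used here instead of the answer's reflection call) are illustrative choices, not part of the answer.

```csharp
using System;
using System.Collections.Generic;

public static class IdPreassigner
{
    // Assigns currentMax + 1, currentMax + 2, ... to the items in list order and
    // returns the last value used (equal to currentMax when the list is empty).
    public static int AssignSequentialIds<T>(IList<T> items, int currentMax, Action<T, int> setId)
    {
        int next = currentMax;
        foreach (var item in items)
        {
            next++;
            setId(item, next);
        }
        return next;
    }
}
```

For example, with a class that has `public int Id { get; set; }`, calling `AssignSequentialIds(rows, maxId, (r, id) => r.Id = id)` does the same job as the `data.ForEach` line above, with the property resolved at compile time rather than through `GetProperty`.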


chobo2

Updated on June 22, 2020

Comments

-

chobo2 almost 4 years

I am using C# and SqlBulkCopy. I have a problem though. I need to do a mass insert into one table, then another mass insert into another table.

These two tables have a PK/FK relationship.

Table A
- Field1: PK, auto-incrementing (easy to do with SqlBulkCopy, as it's straightforward)

Table B
- Field1: PK/FK. This field makes the relationship and is also the PK of this table. It is not auto-incrementing and needs to have the same ID as the corresponding row in Table A.

So these tables have a one-to-one relationship, but I am unsure how to get back all the PK IDs that the mass insert generated, since I need them for Table B.

Edit

Could I do something like this?

```sql
SELECT * FROM Product
WHERE NOT EXISTS (SELECT * FROM ProductReview
                  WHERE Product.ProductId = ProductReview.ProductId
                    AND Product.Qty IS NULL
                    AND Product.ProductName != 'Ipad')
```

This should find all the rows that were just inserted with the SQL bulk copy. I am not sure how to take the results from this and then do a mass insert with them from an SP.

The only problem I can see with this is that if a user is adding records one at a time and this statement runs at the same time, it could try to insert a row twice into the ProductReview table.

So say I have one user using the manual way and another user using the mass way at about the same time.

Manual way:

1. User submits data.
2. A LINQ to SQL Product object is created, filled with the data, and submitted.
3. This object now contains the ProductId.
4. Another LINQ to SQL object is created for the ProductReview table and inserted (the ProductId from step 3 is sent along).

Mass way:

1. User grabs data from a user sharing the data.
2. All Product rows from the sharing user are fetched.
3. SqlBulkCopy inserts the Product rows.
4. My SP selects all rows that exist only in the Product table and meet some other conditions.
5. A mass insert happens with those rows.

So what happens if step 3 (manual way) happens at the same time as step 4 (mass way)? I think it would try to insert the same row twice, causing a primary key constraint exception.

-

Ralf de Kleine (almost 14 years ago): What binds the tables beforehand?

-


chobo2 (almost 14 years ago): Hmm. I've been working on something; do you think it would work (see my edit)? If not, I guess I will try a staging table.

-

Marc Gravell (almost 14 years ago): @chobo2 - well, except in a few scenarios I would use a staging table anyway, so that (a) I'm not impacting the real table during network IO time, and (b) I get full transaction logs.

-

chobo2 (almost 14 years ago): OK, I just got my edit up. Going through it, I am thinking I might have to go the staging-table way. Not sure yet. I have some questions on your approach though. 1st: are these dummy tables created on the fly or just used for example purposes? 2nd: how do you do a SqlBulkCopy in a stored procedure? 3rd: how does this push thing work? Do you just insert the entire table or something? 4th: what about concurrent connections, where maybe a couple of users are involved? It would all go to the staging table, so there would need to be some way to know which data to add and then delete.

-

Marc Gravell (almost 14 years ago): @chobo2 - I would have the staging table there as a permanent table. You might need some extra work if you need parallel usage, though. Re SP: I would use SqlBulkCopy and then call an SP, but you can use BULK INSERT (not SqlBulkCopy) from TSQL. 3: I don't understand the question, but you write the code you need for your scenario... 4: in that case I would add a column to the staging table to identify the different requests (all rows for request A would have the same value). Alternatively, enforce (separately) that only one happens at once (but parallel is nicer).

-

chobo2 (almost 14 years ago): So how do I take my results from a SELECT statement and then do an insert with them? I am not sure how to store them in an SP and then do a bulk insert.

-

Marc Gravell (almost 14 years ago): @chobo2 - the example does a SELECT/INSERT; is there something more specific?

-

chobo2 (almost 14 years ago): Ah, maybe I am just getting confused by this line: OUTPUT INSERTED.id, INSERTED.data INTO [Table2](id1,data)

-

marknuzz (almost 11 years ago): This is a good solution, but ONLY if you can guarantee that no records are being inserted from another thread after you insert but before you select the items.

-

Usman (over 7 years ago): I am wondering how people can market their products on this forum. :-) SqlBulkCopy-related features are just wrapped for that much cost... Incredible.

-

JohnLBevan (over 7 years ago): FYI: example code for using a staging table along with bulk insert from C# here: stackoverflow.com/a/41289532/361842

-

Transformer (almost 5 years ago): Hi, can you please expand on how to get the inserted row IDs back, with date and time? I can't seem to get the IDs of the rows that were successfully inserted when I tried your code.

-

Jonathan Magnan (almost 5 years ago): Here is a Fiddle that shows how to return the value: dotnetfiddle.net/g5pSS1. To return more columns, you just need to map the column with the direction Output.

-

DonBoitnott (almost 5 years ago): I considered an approach like yours, but I don't think this solves the problem of ID collisions. Using a Transaction doesn't prevent anyone else from using the table, inserting rows, and consuming IDs before you complete your insert. Any single insert prior to completion and this blows up. Also, sbc must be disposed.

-

BeccaGirl (almost 5 years ago): What am I missing? According to the Microsoft docs, if System.Data.IsolationLevel.Serializable is used, then "A range lock is placed on the DataSet, preventing other users from updating or inserting rows into the dataset until the transaction is complete." I also tested by attempting to insert from SSMS while my bulk insert was running, and my insert always occurred after the entire bulk insert had completed.

-

DonBoitnott (almost 5 years ago): Apparently my understanding of serializable was incomplete. Now that I've studied it more closely, I see what you mean. Looks like it should block subsequent writes until the batch insert is done. My mistake.

-

BeccaGirl (almost 5 years ago): No problem! It's been pretty durable; I've been using it in production for a while with no issues.

-

Dirk Boer (almost 5 years ago): Besides the valid point given by @nuzzolilo, this answer deserves more upvotes.

-

BassGod (over 2 years ago): @MarcGravell You didn't add an FK between Table1 and Table2 like the OP's example. Using the INSERT/OUTPUT construct results in the error "The target table 'Table2' of the OUTPUT INTO clause cannot be on either side of a (primary key, foreign key) relationship. Found reference constraint 'FK_Field1_Table1"...