Protecting executable from reverse engineering?

Solution 1

What Amber said is exactly right. You can make reverse engineering harder, but you can never prevent it. You should never trust "security" that relies on the prevention of reverse engineering.

That said, the best anti-reverse-engineering techniques that I've seen focus not on obfuscating the code, but instead on breaking the tools that people usually use to understand how code works. Finding creative ways to break disassemblers, debuggers, etc., is both likely to be more effective and more intellectually satisfying than just generating reams of horrible spaghetti code. This does nothing to block a determined attacker, but it does increase the likelihood that J Random Cracker will wander off and work on something easier instead.

Solution 2

"...but they can all be worked around and/or figured out by code analysts given the right time frame."

If you give people a program that they are able to run, then they will also be able to reverse-engineer it given enough time. That is the nature of programs. As soon as the binary is available to someone who wants to decipher it, you cannot prevent eventual reverse-engineering. After all, the computer has to be able to decipher it in order to run it, and a human is simply a slower computer.
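As a minimal illustration of that last point, here is a C sketch (the names `hidden`, `reveal` and `HIDDEN_KEY` are hypothetical, and XOR stands in for any real encryption). The string never appears as plain text in the binary, yet the program still has to decode it into memory, using a key shipped in the same binary, before it can use it, so anyone stepping through `reveal` sees the result.

```c
#include <stddef.h>

/* Hypothetical key; XOR is only a stand-in for whatever encryption or
   obfuscation a real program might use. */
#define HIDDEN_KEY 0x5A

/* "secret", XOR-ed with HIDDEN_KEY so it does not show up in a plain
   `strings` scan of the binary. */
static const unsigned char hidden[] = {
    's' ^ HIDDEN_KEY, 'e' ^ HIDDEN_KEY, 'c' ^ HIDDEN_KEY,
    'r' ^ HIDDEN_KEY, 'e' ^ HIDDEN_KEY, 't' ^ HIDDEN_KEY
};

/* Decodes the secret into out, which must hold sizeof hidden + 1 bytes.
   The key ships in the same binary and the plaintext must exist in memory
   before it can be used, so a debugger stopped here reveals it. */
void reveal(char *out)
{
    for (size_t i = 0; i < sizeof hidden; i++)
        out[i] = (char)(hidden[i] ^ HIDDEN_KEY);
    out[sizeof hidden] = '\0';
}
```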

Solution 3

SafeNet Sentinel (formerly Aladdin). Caveats, though: their API sucks, their documentation sucks, and both of those are great in comparison to their SDK tools.

I've used their hardware protection method (Sentinel HASP HL) for many years. It requires a proprietary USB key fob which acts as the 'license' for the software. Their SDK encrypts and obfuscates your executable and libraries, and allows you to tie different features in your application to features burned into the key. Without a USB key provided and activated by the licensor, the software cannot decrypt and hence will not run. The key even uses a customized USB communication protocol (outside my realm of knowledge; I'm not a device driver guy) to make it difficult to build a virtual key, or to tamper with the communication between the runtime wrapper and the key. Their SDK is not very developer friendly, and integrating the protection into an automated build process is quite painful (but possible).

Before we implemented the HASP HL protection, there were 7 known pirates who had stripped the Dotfuscator 'protections' from the product. We added the HASP protection at the same time as a major update to the software, which performs some heavy calculation on video in real time. As best I can tell from profiling and benchmarking, the HASP HL protection only slowed the intensive calculations by about 3%. Since that software was released about 5 years ago, not one new pirate of the product has been found. The software it protects is in high demand in its market segment, and the client is aware of several competitors actively trying to reverse engineer it (without success so far). We know they have tried to solicit help from a few groups in Russia that advertise a service to break software protection, as numerous posts on various newsgroups and forums have included the newer versions of the protected product.

Recently we tried their software license solution (HASP SL) on a smaller project, which was straightforward enough to get working if you're already familiar with the HL product. It appears to work; there have been no reported piracy incidents, but this product is a lot lower in demand.

Of course, no protection can be perfect. If someone is sufficiently motivated and has serious cash to burn, I'm sure the protections afforded by HASP could be circumvented.

Solution 4

Making code difficult to reverse-engineer is called code obfuscation.

Most of the techniques you mention are fairly easy to work around. They center on adding some useless code. But useless code is easy to detect and remove, leaving you with a clean program.

For effective obfuscation, you need to make the behavior of your program dependent on the useless bits being executed. For example, rather than doing this:

```c
a = useless_computation();
a = 42;
```

do this:

```c
a = complicated_computation_that_uses_many_inputs_but_always_returns_42();
```
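One possible body for such a function, as a sketch under stated assumptions (the two identities below are my choice, not the answer's): it relies on facts that hold even with 32-bit unsigned wraparound, namely that `x * (x + 1)` is always even and that `y * y` mod 4 is never 2 or 3. Both correction terms are therefore always zero, but an analysis tool that does not know these identities has to treat the return value as depending on `x` and `y`.

```c
#include <stdint.h>

/* Always returns 42, but only because of two number-theoretic facts that
   survive 32-bit wraparound (2 and 4 both divide 2^32):
     - x * (x + 1) is a product of consecutive integers, hence even;
     - y * y mod 4 is 0 or 1, so bit 1 of y * y is always clear. */
uint32_t complicated_computation_that_uses_many_inputs_but_always_returns_42(uint32_t x, uint32_t y)
{
    uint32_t parity = (x * (x + 1u)) & 1u;   /* always 0 */
    uint32_t bit1 = ((y * y) & 3u) >> 1;     /* always 0 */
    return 42u + parity + bit1;
}
```

A caller would pass values it already has at hand, so the call does not look like dead code to a casual reader.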

Or instead of doing this:

```c
if (running_under_a_debugger()) abort();
a = 42;
```

do this (where running_under_a_debugger should not be easily identifiable as a function that tests whether the code is running under a debugger; it should mix useful computations with debugger detection):

```c
a = 42 - running_under_a_debugger();
```
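A minimal, Linux-only sketch of the blended check (assumption: the kernel's `/proc/self/status` exposes a `TracerPid:` field, which is nonzero while a tracer such as gdb or strace is attached). It is deliberately readable here, whereas the answer's point is that a real version should be buried among useful computations; `compute_a` is a hypothetical name. On systems without `/proc` it reports "no debugger".

```c
#include <stdio.h>
#include <stdlib.h>
#include <string.h>

/* Returns 1 if some process is tracing us, 0 otherwise (or if unknown). */
int running_under_a_debugger(void)
{
    FILE *f = fopen("/proc/self/status", "r");
    if (f == NULL)
        return 0; /* no /proc (not Linux?): assume no debugger */

    char line[256];
    int traced = 0;
    while (fgets(line, sizeof line, f) != NULL) {
        if (strncmp(line, "TracerPid:", 10) == 0) {
            /* atoi skips the tab; a nonzero PID means we are being traced */
            traced = atoi(line + 10) != 0;
            break;
        }
    }
    fclose(f);
    return traced;
}

/* The result silently becomes wrong under a debugger instead of aborting,
   which is harder to spot than an explicit abort() call. */
int compute_a(void)
{
    return 42 - running_under_a_debugger();
}
```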

Effective obfuscation isn't something you can do purely at the compilation stage. Whatever the compiler can do, a decompiler can do. Sure, you can increase the burden on the decompilers, but it's not going to go far. Effective obfuscation techniques, inasmuch as they exist, involve writing obfuscated source from day 1. Make your code self-modifying. Litter your code with computed jumps, derived from a large number of inputs. For example, instead of a simple call

```c
some_function();
```

do this, where you happen to know the exact expected layout of the bits in some_data_structure:

```c
/* GNU C computed goto; md5sum() and MAGIC_CONSTANT are placeholders */
goto *(void *)((md5sum(&some_data_structure, 42) & 0xffffffff) + MAGIC_CONSTANT);
```
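A `goto` to a computed target needs the GCC/Clang "labels as values" extension (`&&label`, `goto *ptr`). A small self-contained sketch of the same idea, under assumptions: FNV-1a replaces `md5sum` because it fits in a few lines, the jump goes through a table of four labels rather than to a raw address, and `magic` plays the role of `MAGIC_CONSTANT`, precomputed so that only the expected input bytes land on the intended label. `fnv1a` and `dispatch` are hypothetical names.

```c
#include <stddef.h>
#include <stdint.h>

/* Stand-in for md5sum(): 32-bit FNV-1a hash of a buffer. */
uint32_t fnv1a(const void *data, size_t len)
{
    const unsigned char *p = data;
    uint32_t h = 2166136261u;            /* FNV offset basis */
    for (size_t i = 0; i < len; i++) {
        h ^= p[i];
        h *= 16777619u;                  /* FNV prime */
    }
    return h;
}

/* Jumps to one of four labels chosen by hash(data) ^ magic. Without
   knowing the data, a static tool cannot tell which label is taken. */
int dispatch(const void *data, size_t len, uint32_t magic)
{
    static void *const targets[4] = { &&path_a, &&path_b, &&path_c, &&path_d };
    goto *targets[(fnv1a(data, len) ^ magic) & 3u];
path_a:
    return 1;
path_b:
    return 2;
path_c:
    return 3;
path_d:
    return 4;
}
```

For example, building with `magic = fnv1a(key, len) ^ 1u` makes `dispatch(key, len, magic)` take `path_b`; any other key lands somewhere else.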

If you're serious about obfuscation, add several months to your planning; obfuscation doesn't come cheap. And do consider that by far the best way to avoid people reverse-engineering your code is to make it useless so that they don't bother. It's a simple economic consideration: they will reverse-engineer if the value to them is greater than the cost; but raising their cost also raises your cost a lot, so try lowering the value to them.

Now that I've told you that obfuscation is hard and expensive, I'm going to tell you it's not for you anyway. You write

current protocol I've been working on must not ever be inspected or understandable, for the security of various people

That raises a red flag. It's security by obscurity, which has a very poor record. If the security of the protocol depends on people not knowing the protocol, you've lost already.

Recommended reading:

- The security bible: Security Engineering by Ross Anderson

- The obfuscation bible: Surreptitious Software by Christian Collberg and Jasvir Nagra

Solution 5

The best anti-disassembler tricks, in particular on variable-length instruction sets, are in assembler/machine code, not C. For example:

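```
    CLC            ; clear carry, so the branch below is always taken
    BCC over       ; jump over the next byte
    .byte 0x09     ; never executed; a linear disassembler decodes it as the
                   ; start of a multi-byte instruction and treats the bytes
                   ; at `over` as its operand
over:
```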

The disassembler has to resolve the problem that a branch destination is the second byte of a multi-byte instruction. An instruction set simulator will have no problem with it, though. Branching to computed addresses, which you can cause from C, also makes disassembly difficult to impossible, and again an instruction set simulator will have no problem with it; using a simulator to sort out branch destinations for you can aid the disassembly process. Compiled code is relatively clean and easy for a disassembler, so I think some assembly is required.
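One way to branch to a computed address from C, as a hedged sketch rather than the answer's own technique: keep a function's address only in XOR-masked form and decode it right before an indirect call, so a static disassembler cannot tell from `call_encoded_step` alone which function it calls. `PTR_MASK` and the function names are hypothetical, and converting a function pointer to `uintptr_t` and back is implementation-defined, though it works with GCC and Clang on common desktop platforms.

```c
#include <stdint.h>

/* Hypothetical mask; a real build would derive it at run time so it does
   not sit next to the encoded value in the binary. */
#define PTR_MASK ((uintptr_t)0x5A5A5A5Au)

typedef int (*step_fn)(int);

int secret_step(int x)
{
    return x * 3 + 1;
}

/* secret_step's address XOR-ed with PTR_MASK; volatile keeps the compiler
   from folding the encode/decode pair back into a direct call. */
static volatile uintptr_t encoded_step;

void init_encoded_step(void)
{
    encoded_step = (uintptr_t)secret_step ^ PTR_MASK;
}

int call_encoded_step(int x)
{
    /* Decode at the last moment and call through the computed address. */
    step_fn f = (step_fn)(encoded_step ^ PTR_MASK);
    return f(x);
}
```

As the answer notes, this only slows down static analysis: anyone single-stepping in a debugger or simulator sees the decoded target.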

I think it was near the beginning of Michael Abrash's Zen of Assembly Language that he showed a simple anti-disassembler and anti-debugger trick. The 8088/8086 had a prefetch queue, so you had an instruction modify the next instruction, or one a couple ahead. If you were single-stepping, or if your instruction set simulator did not model the hardware completely, the modified instruction was the one that executed. On real hardware running normally, the original instruction was already in the queue, and the modified memory location wouldn't cause any damage so long as you didn't execute that string of instructions again. You could probably still use a trick like this today, since pipelined processors also fetch ahead. Or, if you know that the hardware has separate instruction and data caches, you can modify a number of bytes ahead: if you align the code in the cache line properly, the modified byte goes into the data cache but not the instruction cache, and an instruction set simulator without a proper cache model will fail to execute the code correctly. Still, I think software-only solutions are not going to get you very far.

The above tricks are old and well known; I don't know enough about current tools to know whether they already work around such things. Self-modifying code can and will trip up the debugger, but the human can and will narrow in on the problem, see the self-modifying code, and work around it.

It used to be that hackers would take about 18 months to work something out (DVDs, for example). Now they are averaging around 2 days to 2 weeks if motivated (Blu-ray, iPhones, etc.). To me that means that if I spend more than a few days on security, I am likely wasting my time. The only real security you will get is through hardware (for example, your instructions are encrypted and only the processor core, well inside the chip, decrypts them just before execution, in a way that cannot expose the decrypted instructions). That might buy you months instead of days.

Also, read Kevin Mitnick's book The Art of Deception. A person like that could pick up a phone and get you or a coworker to hand out the secrets of the system, thinking they are talking to a manager, another coworker, or a hardware engineer in another part of the company. And your security is blown. Security is not all about managing the technology; you've got to manage the humans too.

graphitemaster

Updated on October 22, 2020

Comments

-

graphitemaster about 2 years

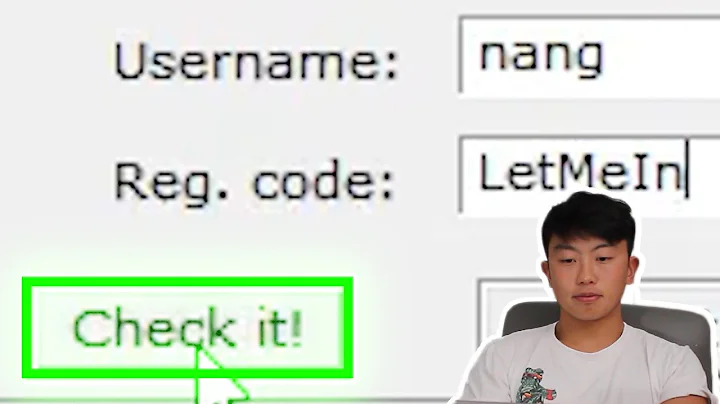

graphitemaster about 2 years: I've been contemplating how to protect my C/C++ code from disassembly and reverse engineering. Normally I would never condone this behavior in my own code; however, the current protocol I've been working on must not ever be inspected or understandable, for the security of various people.

Now, this is a new subject to me, and the internet is not really resourceful for prevention against reverse engineering but rather depicts tons of information on how to reverse engineer.

Some of the things I've thought of so far are:

- Code injection (calling dummy functions before and after actual function calls)

- Code obfuscation (mangles the disassembly of the binary)

- Write my own startup routines (harder for debuggers to bind to)

  ```c
  #include <stdlib.h>

  void startup();
  /* replaces the C runtime's own entry point; typically needs -nostartfiles */
  int _start() { startup(); exit(0); }
  void startup() { /* code here */ }
  ```

- Runtime check for debuggers (and force exit if detected)

- Function trampolines

  ```cpp
  // calls itself once with ping = true, which then invokes fnptr
  void trampoline(void (*fnptr)(), bool ping = false) { if (ping) fnptr(); else trampoline(fnptr, true); }
  ```

- Pointless allocations and deallocations (stack changes a lot)

- Pointless dummy calls and trampolines (tons of jumping in disassembly output)

- Tons of casting (for obfuscated disassembly)

I mean, these are some of the things I've thought of, but they can all be worked around and/or figured out by code analysts given the right time frame. Are there any other alternatives?

Amber over 11 years: "however the current protocol I've been working on must not ever be inspected or understandable, for the security of various people." -- good luck with that.

Michael Petrotta over 11 years: You can make your application hard to reverse engineer. You can't make it impossible, not as long as the other guy has a substantial portion of your bits in their hands. Careful about guaranteeing full security, especially if lives are at stake - you can't deliver.

Lightness Races in Orbit over 11 years: If your computer can understand the code, so can a person.

Jeremy Friesner over 11 years: Any chance you can avoid the issue by simply never giving anyone access to the secret binary? E.g., install it only on your own (secure) servers, and let clients access the servers via TCP, rather than running the code themselves.

Dustin Davis over 11 years: "for the security of various people" what security? Intellectual or physical? If intellectual, why not get a patent?

Sebastian over 11 years: @DustinDavis: graphitemaster did not specify his home country. Software patents aren't easy to obtain in jurisdictions other than the US (e.g. in Europe you have to be very creative and explain the mandatory Technical Character of your invention, which is probably a good thing).

Jeff over 11 years: "but rather depicts tons of information on how to reverse engineer" - Well, there you go: find out how it's done, analyze the steps, and do what you can to make those steps harder to complete. :)

user unknown over 11 years: Make the code Open Source, and nobody will reverse engineer it.

pQuestions123 over 11 years: "Security by obscurity never worked."

pQuestions123 over 11 years: @Robert Fraser: Security by obscurity makes peer review impossible. History showed that things like DECT, the GSM A5/1 cipher, and MIFARE RFID chips, to name a few, were all broken mostly because they were never reviewed. Kerckhoffs's Principle's second claim, which is widely accepted to be a good idea, says this for cryptography, but it's also true for any other system.

Nemo over 11 years: +1. Go read about the glory days of copy protection on the Apple II, the ever-escalating war between the obfuscators and the crackers, the crazy tricks with the floppy disk's stepper motor and undocumented 6502 instructions and so on... And then cry yourself to sleep, because you are not going to implement anything nearly so elaborate and they all got cracked eventually.

graphitemaster over 11 years: I understand this. I've read a few papers explaining Skype's security, and I've been contemplating the same ideas Skype has already tried, as a way not to prevent reverse engineering but to protect my protocol. That approach has proven worthy enough for Skype, given the obvious circumstances.

graphitemaster over 11 years: I mean, this is a viable and applicable answer, but the line you draw between protection and earning an income of a couple million to have others protect your product for you is a really long line.

Tim Post over 11 years: Moderator Note: Comments under this answer have been removed due to digressing into antagonistic noise.

Ricket over 11 years: Skype is actually the first example that came to mind, so I'm glad you are already looking into emulating their methods.

old_timer over 11 years: It's easier to use a simulator and get better visibility than to try to reverse engineer visually or with a disassembler. If the security is not built into the hardware you are using, I think the world is averaging about two days to two weeks to reverse engineer and defeat pretty much everything that comes out. If it takes you more than two days to create and implement this, you have spent too much time.

asmeurer over 11 years: Also, you don't have to have access to the source code (or even disassembled source code) to find a security hole. It could be by accident, or by using the fact that most holes come from the same problems in the code (like buffer overflows).

Martin James over 11 years: There are big problems with self-modifying code. Most modern OS/hardware will not let you do it without very high privilege, there can be cache issues and the code is not thread-safe.

jilles over 11 years: With modern x86 processors, tricks like these are often bad for performance. Using the same memory location as part of more than one instruction likely has an effect similar to a mispredicted branch. Self-modifying code causes the processor to discard cache lines to maintain coherence between the instruction and data caches (if you execute the modified code much more often than you modify it, it may still be a win).

Bayquiri over 11 years: "Whatever the compiler can do, a decompiler can do" -- definitely wrong.

Bayquiri over 11 years: @Gilles, that's your statement, which is very strong, so the burden of proof lies on you. However, I will provide a simple example: `2+2` can be simplified by the compiler to `4`, but the decompiler can't bring it back to `2+2` (what if it actually was `1+3`?).

Gilles 'SO- stop being evil' over 11 years: @Rotsor `4` and `2+2` are observationally equivalent, so they are the same for this purpose, namely to figure out what the program is doing. Yes, of course, the decompiler can't reconstruct the source code, but that's irrelevant. This Q&A is about reconstructing the behavior (i.e. the algorithm, and more precisely a protocol).

Bayquiri over 11 years: You don't have to do anything to reconstruct the behaviour. You already have the program! What you usually need is to understand the protocol and change something in it (like replacing a 2 in `2+2` with 3, or replacing the `+` with a `*`).

Gilles 'SO- stop being evil' over 11 years: 1. Your example is not relevant here; the two programs you show are behaviorally equivalent, and this question is about figuring out the behavior of a program, not reconstructing its source code (which, obviously, is impossible). 2. This paper is a theoretical paper; it's impossible to write the perfect obfuscator, but it's also impossible to write the perfect decompiler (for much the same reasons that it's impossible to write the perfect program analyser). In practice, it's an arms race: who can write the better (de)obfuscator.

Gilles 'SO- stop being evil' over 11 years: @Rotsor No, having the program doesn't tell you its behavior. Analogy: having an apple in your hand doesn't give you the theory of gravity. There's a huge gap between being able to perform experiments, and understanding how something works.

Bayquiri over 11 years: @Gilles, the result of the (correct) deobfuscation will always be behaviourally equivalent to the obfuscated code. I don't see how that undermines the importance of the problem.

Bayquiri over 11 years: Also, about arms race: this is not about who invests more into the research, but rather about who is right. Correct mathematical proofs don't go wrong just because someone wants them to really badly.

Bayquiri over 11 years: If you consider all behaviourally-equivalent programs the same, then yes, the compiler can't do anything because it performs just an identity transformation. The decompiler is useless then too, as it is an identity transformation again. If you don't, however, then `2+2` -> `4` is a valid example of an irreversible transformation performed by the compiler. Whether it makes understanding easier or more difficult is a separate argument.

Bayquiri over 11 years: @Gilles I can't extend your analogy with the apple because I can't imagine a structurally different, but behaviourally equivalent apple. :)

Bayquiri over 11 years: Okay, maybe you are right about arms race in practice. I think I misunderstood this one. :) I hope some kind of cryptographically-safe obfuscation is possible though.

Gilles 'SO- stop being evil' over 11 years: For an interesting case of obfuscation, try smart cards, where the problem is that the attacker has physical access (white-box obfuscation). Part of the response is to limit access by physical means (the attacker can't read secret keys directly); but software obfuscation plays a role too, mainly to make attacks like DPA not give useful results. I don't have a good reference to offer, sorry. The examples in my answer are vaguely inspired from techniques used in that domain.

Robert Fraser over 11 years: @Nemo - When did this stop? Even today, every PC game comes out with ever more sophisticated DRM, and in less than a week (or even before release), it ends up on torrent sites.

Robert Fraser over 11 years: +1 for experience, but I'd like to echo that it's not perfect. Maya (3D suite) used a hardware dongle (not sure if it was HASP), which didn't deter pirates for very long. When there's a will, there's a way.

RyanR over 11 years: AutoCAD uses a similar system, which has been cracked numerous times. HASP and others like it will keep honest people honest, and prevent casual piracy. If you're building the next multiple-billion dollar design product, you'll always have crackers to contend with. It's all about diminishing returns: how many hours of effort is it worth to crack your software protection vs just paying for it.

matbrgz over 11 years: The only reasonably functioning DRM today is the combination of a key and an internet server verifying that only one instance of the key is active at one time.

Adam Robinson over 11 years: @Rotsor: Amber said that if a computer can execute it, a human can reverse-engineer it. Nothing about a computer reverse-engineering it.

Fake Name over 11 years: I also want to chime in from the perspective of someone who has used HASP secured software. HASPs are a royal pain in the ass to the end user. I've dealt with a Dallas iButton and an Aladdin HASP, and both were really buggy, and caused the software to randomly stop working, requiring disconnecting and reconnecting the HASP.

Fake Name over 11 years: Also, it's worth noting that HASP security measures are not necessarily any more secure than code obfuscation. Sure, they require a different methodology to reverse engineer, but it is very possible to reverse them. See: flylogic.net/blog/?p=14 flylogic.net/blog/?p=16 flylogic.net/blog/?p=11

RyanR over 11 years: @Fake Name, As I said in my answer, nothing is impossible to reverse engineer. These devices aren't intended to keep the NSA or a serious reverse engineering team from getting the source to your software, just to prevent casual piracy. As far as making the software buggy, I can't speak for other projects, but my customer's flagship product goes through 500+ hours of continuous UI test automation every month, in addition to being used more than 150,000 total hours per month by their customers; the only crashes they have reported are confirmed to be caused by bugs in my code.

Dizzley over 11 years: I would agree with this. I think you may have a conceptual or a design problem. Is there an analog with a private-public key pair solution? You never divulge the private key, it stays with the owner whose secure client processes it. Can you keep the secure code off their computer and only pass results back to the user?

jwenting, over 11 years ago: Well said. The only way to avoid people disassembling your code is to never let them have physical access to it at all, which means offering your application exclusively as SaaS, taking requests from remote clients and handing back the processed data. Place the server in a locked room (and throw away the key) in an underground bunker surrounded by an alligator ditch and 5 m tall electrified razor wire, cover it all with 10 m of reinforced concrete, and then hope you didn't forget to install tons of software systems to prevent intrusion over the network.
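The SaaS split described in this comment (and in Dizzley's and Scott Stensland's comments) can be sketched as follows. This is a minimal illustration under stated assumptions. It uses a JSON request/response protocol, and `_secret_algorithm`, `handle_request` and `client_call` are hypothetical names. The network hop is simulated by passing the server handler directly as `transport`, where a real client would make an HTTPS call to your server.

```python
import json


# Hypothetical "crown jewel": proprietary logic that never ships in the client.
def _secret_algorithm(values):
    # Placeholder computation standing in for whatever you want to protect.
    return sum(v * v for v in values)


def handle_request(raw_request: str) -> str:
    """Server side: parse a JSON request and return a JSON response."""
    try:
        request = json.loads(raw_request)
        values = [float(v) for v in request["values"]]
    except (ValueError, KeyError, TypeError):
        # Malformed input gets a generic error, never internals or a stack trace.
        return json.dumps({"error": "bad request"})
    return json.dumps({"result": _secret_algorithm(values)})


def client_call(values, transport=handle_request):
    """Client side: the only code the user gets. It knows the protocol, not the algorithm.

    `transport` stands in for the network hop (assumed: e.g. an HTTPS POST);
    it defaults to calling the server handler directly so the sketch runs locally.
    """
    response = json.loads(transport(json.dumps({"values": values})))
    if "error" in response:
        raise ValueError(response["error"])
    return response["result"]


if __name__ == "__main__":
    print(client_call([1, 2, 3]))  # prints 14.0
```

With this split, the client contains nothing worth disassembling. An attacker can at most script calls against the API, which the server would still need to authenticate and rate-limit.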

Gui13, over 11 years ago: @Nemo: do you have a link about the copy war on the Apple ][?

Colin Pickard, over 11 years ago: I hope I never get the contract to maintain your servers.

Necrolis, over 11 years ago: Your first point makes no sense: optimized code cuts out cruft, which makes it easier to reverse (I speak from experience). Your third point is also a waste of time; any reverse engineer worth his salt knows how to set memory access breakpoints. This is why it's probably best not to design a system yourself but to use 3rd party libraries that have yet to be 'cracked', because that's likely to last a little longer than anything a 'rookie' could create...

Necrolis, over 11 years ago: +1 for common sense: why make it harder for yourself when you could just design a better system?

Amber, over 11 years ago: @Rotsor: No, it's not. The "human is simply a slower computer" argument is there to point out that there has to be a known mapping between the bytecode of the program and certain functions, and thus there is nothing in the program that is "invisible" to a human.

Olof Forshell, over 11 years ago: Since it appears I don't know anything about the subject, perhaps I should turn to a professional such as you for my software development needs instead of writing any code myself.

Adam Robinson, over 11 years ago: @Rotsor: The computer cannot understand it because we have not (yet) been able to reduce this sort of intelligence to an algorithm, not because there is some sort of physical or technological barrier in place. The human can understand it because he can do anything the computer can (albeit more slowly), and he can also reason.

Bo Persson, over 11 years ago: I ran into this 20 years ago. It took us almost half an hour to figure out what happened. Not very good if you need longer protection.

Gilles 'SO- stop being evil', over 11 years ago: @BoPersson With considerable difficulty (if someone pretends obfuscation is easy, don't listen to him). But it does help to have the source code and a very good debugger.

liamzebedee, over 11 years ago: As I always say, if you keep everything server side, it's more secure.

Alex W, over 10 years ago: The only theoretical way to prevent reverse engineering would be to run the program only on a special computer that hides its own instruction set from its users.

Amber, over 10 years ago: At which point someone will try to reverse engineer the computer, unless it's only available in an environment you control.

Gallium Nitride, over 9 years ago: The first thing I would recommend is to avoid commercial obfuscators at all costs, because if you crack the obfuscator, you can crack every application obfuscated with it!

Albert van der Horst, almost 9 years ago: "If you give people a program that they are able to run, then they will also be able to reverse-engineer it given enough time." This is actually not true; see my answer below.

cmaster - reinstate monica, over 8 years ago: The International Obfuscated C Code Contest (ioccc.org) is another great source of neat obfuscation tricks. But that's definitely on the artistic side, not the (flawed) security-through-obscurity side. And those tricks will definitely render the code entirely unmaintainable.

Ben Voigt, about 7 years ago: "the real instruction would already be in the queue and the modified memory location wouldn't cause any damage" Until an interrupt occurs in between, flushing the instruction pipeline and causing the new code to become visible. Now your obfuscation has caused a bug for your legitimate users.

Ted, over 6 years ago: The link is broken.

Mawg says reinstate Monica, about 6 years ago: Very good advice (and I upvoted it), but it doesn't really address this particular question.

Bertram Gilfoyle, over 3 years ago: "Try lowering the value to them" seems like an important point. But how?

Scott Stensland, over 2 years ago: This is the real solution: put your crown jewels on your own server, running on your own VPS, and expose only API calls into this server to the client (browser or API client).
