Pyspark - Aggregation on multiple columns

90,740

- Follow the instructions from the README to include

spark-csvpackage -

Load data

df = (sqlContext.read .format("com.databricks.spark.csv") .options(inferSchema="true", delimiter=";", header="true") .load("babynames.csv")) -

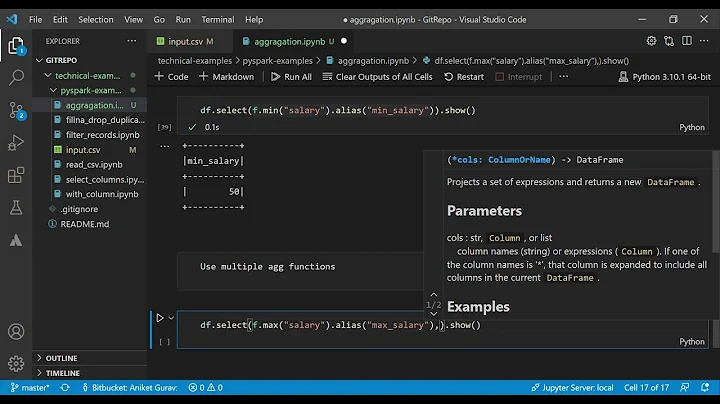

Import required functions

from pyspark.sql.functions import count, avg -

Group by and aggregate (optionally use

Column.alias:df.groupBy("year", "sex").agg(avg("percent"), count("*"))

Alternatively:

- cast

percentto numeric - reshape to a format ((

year,sex),percent) -

aggregateByKeyusingpyspark.statcounter.StatCounter

Related videos on Youtube

Author by

Mohan

Updated on July 05, 2022Comments

-

Mohan almost 2 years

I have data like below. Filename:babynames.csv.

year name percent sex 1880 John 0.081541 boy 1880 William 0.080511 boy 1880 James 0.050057 boyI need to sort the input based on year and sex and I want the output aggregated like below (this output is to be assigned to a new RDD).

year sex avg(percentage) count(rows) 1880 boy 0.070703 3I am not sure how to proceed after the following step in pyspark. Need your help on this

testrdd = sc.textFile("babynames.csv"); rows = testrdd.map(lambda y:y.split(',')).filter(lambda x:"year" not in x[0]) aggregatedoutput = ???? -

zero323 over 6 years