pyspark import user defined module or .py files

Solution 1

It turned out that, because I'm submitting my application in client mode, the machine I run the spark-submit command from runs the driver program and needs access to the module files.

I added my module to the PYTHONPATH environment variable on the node I'm submitting my job from by adding the following line to my .bashrc file (or by executing it before submitting my job):

export PYTHONPATH=$PYTHONPATH:/home/welshamy/modules

And that solved the problem. Since the path is on the driver node, I don't have to zip and ship the module with --py-files or use sc.addPyFile().
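A similar driver-side effect can be sketched in Python. This is a minimal sketch rather than the method used above: `ensure_on_path` is a hypothetical helper, and changing `sys.path` this way affects only the current Python process (the driver), not the workers.

```python
import os
import sys


def ensure_on_path(directory):
    """Add `directory` to sys.path for this process if it is not already there.

    Hypothetical helper: it has an effect similar to the PYTHONPATH export,
    but only for the running driver process.
    """
    directory = os.path.abspath(directory)
    if directory not in sys.path:
        sys.path.append(directory)
    return directory


# Directory taken from the export line above; adjust to where the module lives.
modules_dir = ensure_on_path("/home/welshamy/modules")
print(modules_dir in sys.path)
```

Call it before the first `import wesam` in the driver script, since the import is resolved against `sys.path` at that moment.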

The key to solving any pyspark module import error problem is understanding whether the driver or worker (or both) nodes need the module files.
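One way to find out which side is missing the module is to probe for it on the driver and inside a few tasks. The following is a rough sketch under assumptions: `probe_import` and `probe_cluster` are hypothetical names, `sc` is assumed to be an existing SparkContext, and the code is assumed to be pasted into the driver script itself so the functions can be serialized and sent to the workers.

```python
import importlib.util
import socket


def probe_import(module_name):
    """Return (hostname, found) for whether `module_name` is importable here."""
    try:
        found = importlib.util.find_spec(module_name) is not None
    except (ImportError, ValueError):
        # Raised for dotted names whose parent package is missing, or bad names.
        found = False
    return socket.gethostname(), found


def probe_cluster(sc, module_name, num_tasks=8):
    """Run probe_import on the driver and inside `num_tasks` Spark tasks.

    Tasks are not guaranteed to land on every worker, so treat the result
    as a spot check rather than a full inventory.
    """
    driver = probe_import(module_name)
    workers = (
        sc.parallelize(range(num_tasks), num_tasks)
        .map(lambda _: probe_import(module_name))
        .distinct()
        .collect()
    )
    return driver, workers


# Driver-side check only; this line does not need a running cluster.
print(probe_import("wesam"))
```

If the driver reports the module as missing, the fix belongs on the submitting machine (for example PYTHONPATH). If only the worker entries report it as missing, the module has to be shipped to them.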

Important

If the worker nodes need your module files, then you need to pass them as a zip archive with --py-files, and this argument must precede your .py file argument. Notice the order of arguments in these examples:

This is correct:

./bin/spark-submit --py-files wesam.zip mycode.py

This is not correct:

./bin/spark-submit mycode.py --py-files wesam.zip
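The order matters because spark-submit treats everything after the application file as arguments to the application itself. The sketch below builds the archive and the argument list in Python. The helper names `build_package_zip` and `spark_submit_args` are hypothetical, and the sketch assumes the package directory (`wesam/`) should sit at the root of the archive so that `import wesam` can resolve from it.

```python
import os
import shutil


def build_package_zip(package_dir, out_dir="."):
    """Zip `package_dir` so the package folder itself sits at the archive root."""
    package_dir = os.path.abspath(package_dir)
    parent, name = os.path.split(package_dir)
    base_name = os.path.join(os.path.abspath(out_dir), name)
    # root_dir=parent and base_dir=name yield entries such as wesam/__init__.py
    return shutil.make_archive(base_name, "zip", root_dir=parent, base_dir=name)


def spark_submit_args(script, py_files, app_args=()):
    """Build an argument list with --py-files placed before the script."""
    return ["./bin/spark-submit", "--py-files", ",".join(py_files), script, *app_args]


# Example usage (commented out because it needs a wesam/ directory on disk):
# zip_path = build_package_zip("wesam")
# print(" ".join(spark_submit_args("mycode.py", [zip_path])))
```

The resulting list can be passed to `subprocess.run` if the job is launched from Python instead of a shell.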

Solution 2

Put mycode.py and wesam.py in the same directory and try:

sc.addPyFile("wesam.py")

It might work.
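A relative path such as "wesam.py" is resolved against the current working directory, which may not be the directory that holds mycode.py. A small sketch that resolves the path next to the script instead is shown here. `add_sibling_module` is a hypothetical helper, and `sc` is assumed to be an existing SparkContext.

```python
import os


def add_sibling_module(sc, script_file, module_filename="wesam.py"):
    """Ship a .py file that sits in the same directory as the driver script."""
    script_dir = os.path.dirname(os.path.abspath(script_file))
    module_path = os.path.join(script_dir, module_filename)
    if not os.path.isfile(module_path):
        # Fail early with the full path instead of a later ImportError.
        raise FileNotFoundError(module_path)
    sc.addPyFile(module_path)
    return module_path


# Usage inside mycode.py, assuming `sc` already exists:
#   add_sibling_module(sc, __file__)
#   import wesam  # import only after addPyFile has run
```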

Comments

-

Sam almost 4 years

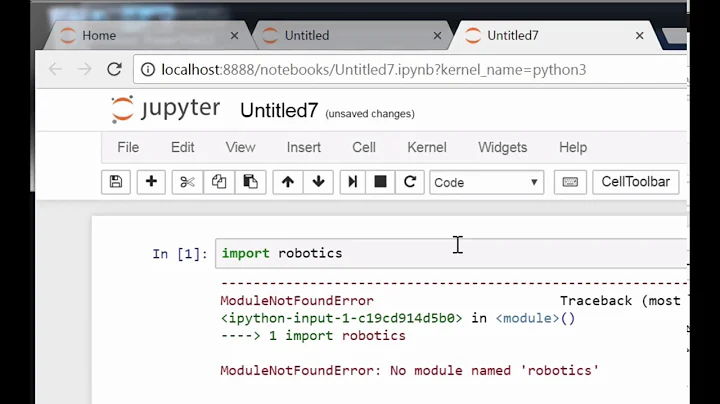

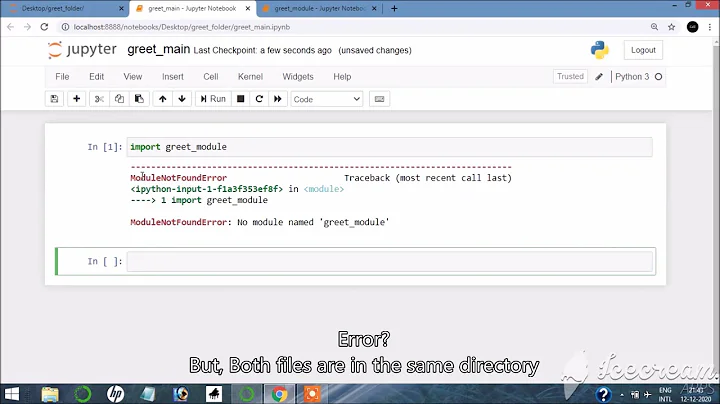

I built a python module and I want to import it in my pyspark application.

My package directory structure is:

wesam/
|-- data.py
`-- __init__.py

A simple import wesam at the top of my pyspark script leads to ImportError: No module named wesam. I also tried to zip it and ship it with my code with --py-files as recommended in this answer, with no luck.

./bin/spark-submit --py-files wesam.zip mycode.py

I also added the file programmatically as suggested by this answer, but I got the same ImportError: No module named wesam error.

sc.addPyFile("wesam.zip")

What am I missing here?

-

mathtick over 6 years

While this might work, you are effectively distributing your env through your (presumably) globally distributed $HOME/.bashrc. Is there really no way to dynamically set the PYTHONPATH of the worker nodes? The reason you would want to do this is that you are interacting from the ipython REPL and want to ship parallel jobs that depend on modules sitting on NFS in the PYTHONPATH (think python setup.py develop mode).

-

RNHTTR almost 6 years

@Wesam Great answer! You mention "The key to solving any pyspark module import error problem is understanding whether the driver or worker (or both) nodes need the module files." -- Can you recommend a good way to learn this?

-

vikrant rana over 5 years

@Wesam, I need your suggestion on breaking down an application in pyspark. Let's say I have broken my big code down into three Python scripts. What would be the best way to run these scripts: using three shells and spark-submit to execute them as different applications, or running them all together in one spark shell?

-

Michael Hoffman over 5 years

This works well for me, thank you. I was able to specify an S3 location to a single module as well.

-

MonkandMonkey over 4 years

Clear and helpful! Thanks a lot!

-

genomics-geek about 4 years

This is extremely clear and helpful. This helped me finally realize what was going on...

-

Harsha Reddy over 3 years

I had been struggling since forever to be able to import modules in spark. This was very clear. Thank you!!