PySpark: Take average of a column after using filter function


Solution 1

In the dictionary passed to agg, the column name should be the key and the aggregation function the value:

dataframe.filter(df['salary'] > 100000).agg({"age": "avg"})

Alternatively you can use pyspark.sql.functions:

from pyspark.sql.functions import col, avg

dataframe.filter(df['salary'] > 100000).agg(avg(col("age")))

It is also possible to use CASE .. WHEN, which averages conditionally without filtering first:

from pyspark.sql.functions import when

dataframe.select(avg(when(df['salary'] > 100000, df['age'])))
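The reason this works without a filter is that when with no otherwise yields null for rows that fail the condition, and avg skips nulls. The plain-Python sketch below only models that behavior; the helper names are hypothetical and are not Spark APIs.

```python
from typing import Optional, Sequence

def when_without_otherwise(condition: bool, value: float) -> Optional[float]:
    """Model of when(condition, value) with no otherwise(): None stands in for null."""
    return value if condition else None

def avg_skipping_nulls(values: Sequence[Optional[float]]) -> Optional[float]:
    """Model of avg(): nulls are dropped, and an all-null input gives null."""
    kept = [v for v in values if v is not None]
    return sum(kept) / len(kept) if kept else None

# Toy (age, salary) rows, assumed for illustration only.
rows = [(30.0, 120000.0), (40.0, 150000.0), (50.0, 90000.0)]

# Rows at or below the threshold become None instead of being removed.
masked = [when_without_otherwise(salary > 100000, age) for age, salary in rows]
result = avg_skipping_nulls(masked)
```

Because the rows are masked rather than dropped, the same select can carry other aggregates with different conditions side by side.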

Solution 2

You can try this too:

dataframe.filter(df['salary'] > 100000).groupBy().avg('age')

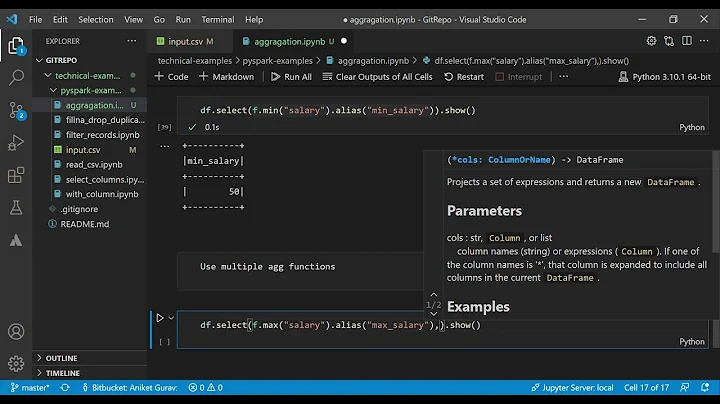

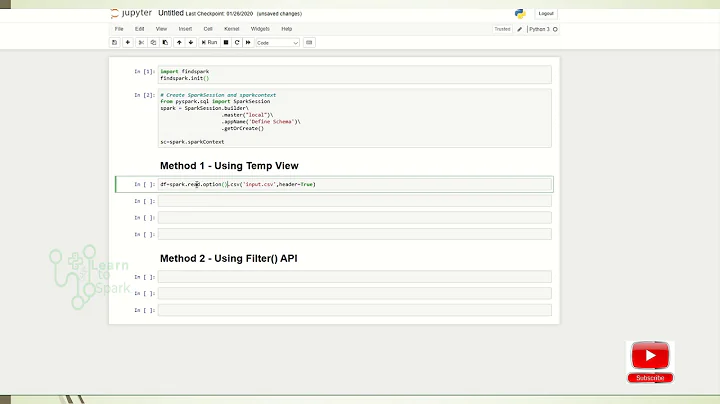

Related videos on Youtube

Author by

Harit Vishwakarma

Find out more here https://www.linkedin.com/in/harit7/

Updated on May 13, 2020

Comments

Harit Vishwakarma, almost 4 years ago

I am using the following code to get the average age of people whose salary is greater than some threshold.

dataframe.filter(df['salary'] > 100000).agg({"avg": "age"})

The column age is numeric (float), but I am still getting this error:

py4j.protocol.Py4JJavaError: An error occurred while calling o86.agg. : scala.MatchError: age (of class java.lang.String)

Do you know any other way to obtain the average, etc., without using the groupBy function or SQL queries?