Python read from subprocess stdout and stderr separately while preserving order

Solution 1

Here's a solution based on selectors that preserves order and streams variable-length chunks (even single characters).

The trick is to use read1() instead of read(): read1() returns as soon as some data is available, whereas read() blocks until EOF.

import selectors
import subprocess
import sys

p = subprocess.Popen(
    ["python", "random_out.py"], stdout=subprocess.PIPE, stderr=subprocess.PIPE
)

sel = selectors.DefaultSelector()
sel.register(p.stdout, selectors.EVENT_READ)
sel.register(p.stderr, selectors.EVENT_READ)

# Loop until both pipes have reached EOF.
while sel.get_map():
    for key, _ in sel.select():
        # read1() returns whatever is available without waiting for EOF.
        data = key.fileobj.read1().decode()
        if not data:
            # EOF on this stream: stop watching it, keep draining the other.
            sel.unregister(key.fileobj)
            continue
        if key.fileobj is p.stdout:
            print(data, end="")
        else:
            print(data, end="", file=sys.stderr)

p.wait()

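If you want a test program, save this as random_out.py. The flush=True arguments push each chunk out immediately; without them, output written to a pipe may sit in the child's buffer until it exits.

import sys
from time import sleep

for i in range(10):
    print(f" x{i} ", file=sys.stderr, end="", flush=True)
    sleep(0.1)
    print(f" y{i} ", end="", flush=True)
    sleep(0.1)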

Solution 2

The code in your question may deadlock if the child process produces enough output on stderr (~100KB on my Linux machine).

There is a communicate() method that lets you read from both stdout and stderr separately:

from subprocess import Popen, PIPE

process = Popen(command, stdout=PIPE, stderr=PIPE)
output, err = process.communicate()
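As a rough, self-contained illustration of the communicate() approach on Python 3.7+, the sketch below runs a throwaway child and captures both streams as text. The names run_and_capture and child_code are made up for this example and are not part of the original answer.

```python
import subprocess
import sys

# Hypothetical child program: writes one line to each stream.
child_code = "import sys; print('to stdout'); print('to stderr', file=sys.stderr)"


def run_and_capture(args):
    """Run args to completion and return (returncode, stdout, stderr) as text."""
    process = subprocess.Popen(
        args, stdout=subprocess.PIPE, stderr=subprocess.PIPE, text=True
    )
    # communicate() drains both pipes concurrently, so neither pipe can fill
    # up and block the child while the parent waits on the other one.
    output, err = process.communicate()
    return process.returncode, output, err


if __name__ == "__main__":
    code, out, err = run_and_capture([sys.executable, "-c", child_code])
    print(code, repr(out), repr(err))
```

Note that communicate() only returns once the child has exited, so it keeps the two streams separate but says nothing about how their lines were interleaved.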

If you need to read the streams while the child process is still running, the portable solution is to use threads (not tested):

from subprocess import Popen, PIPE
from threading import Thread
from Queue import Queue # Python 2

def reader(pipe, queue):
    try:
        with pipe:
            for line in iter(pipe.readline, b''):
                queue.put((pipe, line))
    finally:
        queue.put(None)

process = Popen(command, stdout=PIPE, stderr=PIPE, bufsize=1)
q = Queue()
Thread(target=reader, args=[process.stdout, q]).start()
Thread(target=reader, args=[process.stderr, q]).start()
for _ in range(2):
    for source, line in iter(q.get, None):
        print "%s: %s" % (source, line),
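The code above targets Python 2. A possible Python 3 adaptation of the same thread-and-queue idea is sketched below; stream_lines and the "stdout"/"stderr" labels are names chosen for this example, and the order it yields is only the order in which the reader threads happened to see the lines.

```python
import subprocess
import sys
from queue import Queue
from threading import Thread


def reader(name, pipe, queue):
    """Push (name, line) tuples onto queue, then None once the pipe closes."""
    try:
        with pipe:
            for line in iter(pipe.readline, b""):
                queue.put((name, line))
    finally:
        queue.put(None)


def stream_lines(args):
    """Yield (stream_name, line) pairs while the child is still running."""
    process = subprocess.Popen(args, stdout=subprocess.PIPE, stderr=subprocess.PIPE)
    q = Queue()
    Thread(target=reader, args=("stdout", process.stdout, q), daemon=True).start()
    Thread(target=reader, args=("stderr", process.stderr, q), daemon=True).start()
    for _ in range(2):  # one None sentinel arrives per reader thread
        for item in iter(q.get, None):
            yield item
    process.wait()  # reap the child once both pipes are closed


if __name__ == "__main__":
    child = "import sys; print('out1'); print('err1', file=sys.stderr)"
    for source, line in stream_lines([sys.executable, "-c", child]):
        print(source, line)
```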

See:

- Python: read streaming input from subprocess.communicate()

- Non-blocking read on a subprocess.PIPE in python

- Python subprocess get children's output to file and terminal?

Solution 3

Once a process has written to two different pipes, the relative order of those writes is lost.

The reader has no way to tell whether stdout was written to before stderr.

You can try to read data simultaneously from multiple file descriptors in a non-blocking way as soon as data is available, but this only reduces the probability that the order is incorrect.

This program (Python 2) demonstrates the problem:

#!/usr/bin/env python
# -*- coding: utf-8 -*-

import os
import select
import subprocess

testapps={
    'slow': '''
import os
import time
os.write(1, 'aaa')
time.sleep(0.01)
os.write(2, 'bbb')
time.sleep(0.01)
os.write(1, 'ccc')
''',
    'fast': '''
import os
os.write(1, 'aaa')
os.write(2, 'bbb')
os.write(1, 'ccc')
''',
    'fast2': '''
import os
os.write(1, 'aaa')
os.write(2, 'bbbbbbbbbbbbbbb')
os.write(1, 'ccc')
'''
}

def readfds(fds, maxread):
    while True:
        fdsin, _, _ = select.select(fds,[],[])
        for fd in fdsin:
            s = os.read(fd, maxread)
            if len(s) == 0:
                fds.remove(fd)
                continue
            yield fd, s
        if fds == []:
            break

def readfromapp(app, rounds=10, maxread=1024):
    f=open('testapp.py', 'w')
    f.write(testapps[app])
    f.close()

    results={}
    for i in range(0, rounds):
        p = subprocess.Popen(['python', 'testapp.py'], stdout=subprocess.PIPE
                                                     , stderr=subprocess.PIPE)
        data=''
        for (fd, s) in readfds([p.stdout.fileno(), p.stderr.fileno()], maxread):
            data = data + s
        results[data] = results[data] + 1 if data in results else 1

    print 'running %i rounds %s with maxread=%i' % (rounds, app, maxread)
    results = sorted(results.items(), key=lambda (k,v): k, reverse=False)
    for data, count in results:
        print '%03i x %s' % (count, data)

print
print "=> if output is produced slowly this should work as whished"
print " and should return: aaabbbccc"
readfromapp('slow', rounds=100, maxread=1024)

print
print "=> now mostly aaacccbbb is returnd, not as it should be"
readfromapp('fast', rounds=100, maxread=1024)

print
print "=> you could try to read data one by one, and return"
print " e.g. a whole line only when LF is read"
print " (b's should be finished before c's)"
readfromapp('fast', rounds=100, maxread=1)

print
print "=> but even this won't work ..."
readfromapp('fast2', rounds=100, maxread=1)

It outputs something like this:

=> if output is produced slowly this should work as whished
 and should return: aaabbbccc
running 100 rounds slow with maxread=1024
100 x aaabbbccc

=> now mostly aaacccbbb is returnd, not as it should be
running 100 rounds fast with maxread=1024
006 x aaabbbccc
094 x aaacccbbb

=> you could try to read data one by one, and return
 e.g. a whole line only when LF is read
 (b's should be finished before c's)
running 100 rounds fast with maxread=1
003 x aaabbbccc
003 x aababcbcc
094 x abababccc

=> but even this won't work ...
running 100 rounds fast2 with maxread=1
003 x aaabbbbbbbbbbbbbbbccc
001 x aaacbcbcbbbbbbbbbbbbb
008 x aababcbcbcbbbbbbbbbbb
088 x abababcbcbcbbbbbbbbbb
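The underlying reason can be seen without a subprocess at all. The sketch below (Python 3; write_then_read is a name invented for this example) uses two os.pipe() pairs as stand-ins for the child's stdout and stderr, writes to them in the order aaa, bbb, ccc, and then reads each pipe back.

```python
import os


def write_then_read():
    """Write aaa/bbb/ccc alternately to two pipes, then read each pipe back."""
    out_r, out_w = os.pipe()  # stands in for the child's stdout
    err_r, err_w = os.pipe()  # stands in for the child's stderr

    # Same write order as the 'fast' test app above.
    os.write(out_w, b"aaa")
    os.write(err_w, b"bbb")
    os.write(out_w, b"ccc")
    os.close(out_w)
    os.close(err_w)

    # Each pipe is an independent byte stream: the reader gets the bytes of
    # one pipe in order, but nothing records how the two pipes interleaved.
    out_data = os.read(out_r, 1024)
    err_data = os.read(err_r, 1024)
    os.close(out_r)
    os.close(err_r)
    return out_data, err_data


if __name__ == "__main__":
    print(write_then_read())  # (b'aaaccc', b'bbb')
```

By the time the reader looks, "aaa" and "ccc" have already merged in the first pipe, so the position of "bbb" between them cannot be recovered from the pipes alone.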

Solution 4

From https://docs.python.org/3/library/subprocess.html#using-the-subprocess-module:

If you wish to capture and combine both streams into one, use stdout=PIPE and stderr=STDOUT instead of capture_output.

So the easiest solution, if you do not need to tell the two streams apart afterwards, would be:

import subprocess

process = subprocess.Popen(command, stdout=subprocess.PIPE, stderr=subprocess.STDOUT)
stdout_iterator = iter(process.stdout.readline, b"")

for line in stdout_iterator:
    # Do stuff with line
    print(line)
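A runnable Python 3 sketch of the merged-stream approach follows. The helper name merged_lines and the inline child program are assumptions made for this example. Because stderr=subprocess.STDOUT makes both file descriptors in the child point at the same pipe, the write order survives, at the cost of no longer knowing which stream a line came from.

```python
import subprocess
import sys


def merged_lines(args):
    """Yield lines from the child's stdout and stderr, combined into one pipe."""
    process = subprocess.Popen(
        args, stdout=subprocess.PIPE, stderr=subprocess.STDOUT
    )
    with process.stdout:
        for line in iter(process.stdout.readline, b""):
            yield line
    process.wait()  # reap the child after the pipe is closed


if __name__ == "__main__":
    # Hypothetical child: unbuffered writes to fd 1, fd 2, then fd 1 again.
    child = "import os; os.write(1, b'a\\n'); os.write(2, b'b\\n'); os.write(1, b'c\\n')"
    for line in merged_lines([sys.executable, "-c", child]):
        print(line)
```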

Solution 5

This works for Python3 (3.6):

import selectors
import subprocess
import sys

# cmd is the command to run, e.g. a list of arguments
p = subprocess.Popen(cmd, stdout=subprocess.PIPE,
                     stderr=subprocess.PIPE, universal_newlines=True)

# Read both stdout and stderr simultaneously
sel = selectors.DefaultSelector()
sel.register(p.stdout, selectors.EVENT_READ)
sel.register(p.stderr, selectors.EVENT_READ)
ok = True
while ok:
    for key, val1 in sel.select():
        line = key.fileobj.readline()
        if not line:
            # An empty string means EOF on one of the streams
            ok = False
            break
        if key.fileobj is p.stdout:
            print(f"STDOUT: {line}", end="")
        else:
            print(f"STDERR: {line}", end="", file=sys.stderr)

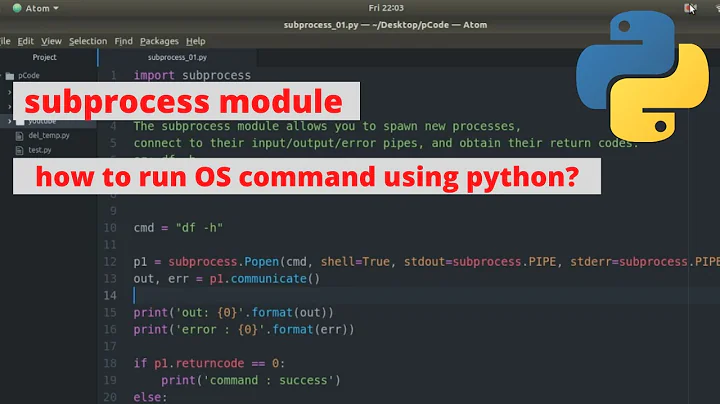

Related videos on Youtube

Luke Sapan

Updated on July 09, 2022

Comments

- Luke Sapan, almost 2 years:

I have a python subprocess that I'm trying to read output and error streams from. Currently I have it working, but I'm only able to read from stderr after I've finished reading from stdout. Here's what it looks like:

process = subprocess.Popen(command, stdout=subprocess.PIPE, stderr=subprocess.PIPE)
stdout_iterator = iter(process.stdout.readline, b"")
stderr_iterator = iter(process.stderr.readline, b"")

for line in stdout_iterator:
    # Do stuff with line
    print line

for line in stderr_iterator:
    # Do stuff with line
    print line

As you can see, the stderr for loop can't start until the stdout loop completes. How can I modify this to be able to read from both in the correct order the lines come in?

To clarify: I still need to be able to tell whether a line came from stdout or stderr because they will be treated differently in my code.

- Luke Sapan, over 8 years: Unfortunately this answer doesn't preserve the order that the lines come in from stdout and stderr. It is very close to what I need though! It's just important for me to know when an stderr line is piped relative to an stdout line.

- jfs, over 8 years: @LukeSapan: I don't see any way to preserve the order and to capture stdout/stderr separately. You can get one or the other easily. On Unix you could try a select loop that can make the effect less apparent. It is starting to look like an XY problem: edit your question and provide some context on what you are trying to do.

- jfs, over 8 years: @LukeSapan: here's the answer with a select loop. As I said, it won't preserve order in the general case but it might be enough in some cases.

- glglgl, over 8 years: @LukeSapan As both FDs are independent of each other, a message coming through one may be delayed, so there is no concept of "before" and "after" in this case...

- jfs, over 8 years: unrelated: use if not s: instead of if len(s) == 0: here. Use while fds: instead of while True: ... if fds == []: break. Use results = collections.defaultdict(int); ...; results[data]+=1 instead of results = {}; ...; results[data] = results[data] + 1 if data in results else 1

- jfs, over 8 years: Or use results = collections.Counter(); ...; results[data]+=1; ...; for data, count in results.most_common():

- jfs, over 8 years: you could use data = b''.join([s for _, s in readfds(...)])

- jfs, over 8 years: you should close the pipes to avoid relying on garbage collection to free file descriptors in the parent, and call p.wait() to reap the child process explicitly.

- nurettin, over 5 years: @LukeSapan why preserve the order? Just add timestamps and sort at the end.

- Luke Sapan, over 5 years: @nurettin we were dealing with large volumes of data so I'm not sure it would've been practical back then, but that's a great solution for most use cases.

- Dev Aggarwal, over 4 years: Looks like this is the culprit - stackoverflow.com/questions/375427/…. selectors don't work on windows for pipes :(

- tripleee, over 4 years: As an obvious and trivial improvement, get rid of the shell=True

- MoTSCHIGGE, over 4 years: Is there any solution how to interrupt the process while queue.get blocks?

- jfs, over 4 years: note: 1- it doesn't work on Windows 2- it won't preserve the order (it just makes it less likely that you notice the order is wrong). See related comments under my answer

- jfs, over 4 years: @MoTSCHIGGE: yes, there is, for example: Non-blocking read on a subprocess.PIPE in python

- Dev Aggarwal, over 4 years: Is there a reproducible way to get the wrong order? Maybe some sort of fuzzing?

- Dev Aggarwal, about 4 years: @shouldsee, Does an arbitrary size of 1024 work with python 3.5?

- shouldsee, about 4 years: @DevAggarwal Did not try it because I found an alternative solution to my problem that does not require combining the streams.

- Nikolas, over 2 years: #python3.8: Using read1() sometimes truncates my long output. It might be fixed by increasing the buffer size (i.e. read1(size=1000000)), however it might disable the feature where "data is read and returned until EOF is reached", as stated in docs.python.org/3/library/…. Eventually I switched to read().

- Tim, over 2 years: This seems super cool but it doesn't seem to preserve the order :(
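nurettin's timestamp suggestion in the comments could look roughly like the sketch below (Python 3). The function name collect_timestamped and the tuple layout are choices made for this example. One caveat: the timestamps record when the parent read each line, not when the child wrote it, so the limits described in Solution 3 still apply.

```python
import subprocess
import sys
import time
from threading import Lock, Thread


def collect_timestamped(args):
    """Return a list of (timestamp, stream_name, line), sorted by timestamp."""
    process = subprocess.Popen(args, stdout=subprocess.PIPE, stderr=subprocess.PIPE)
    records = []
    lock = Lock()

    def reader(name, pipe):
        # Stamp each line at the moment this thread receives it.
        with pipe:
            for line in iter(pipe.readline, b""):
                stamp = time.monotonic()
                with lock:
                    records.append((stamp, name, line))

    threads = [
        Thread(target=reader, args=("stdout", process.stdout)),
        Thread(target=reader, args=("stderr", process.stderr)),
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    process.wait()

    records.sort(key=lambda record: record[0])
    return records


if __name__ == "__main__":
    child = (
        "import sys, time; print('o', flush=True); time.sleep(0.2); "
        "print('e', file=sys.stderr, flush=True)"
    )
    for stamp, name, line in collect_timestamped([sys.executable, "-c", child]):
        print(f"{stamp:.3f} {name}: {line!r}")
```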