RAID 5 with hot spare or RAID 10 with no hot spare?

Solution 1

What is the downtime tolerance of the array? Is it physically close, or in a remote data center? Basically, if you can tolerate it, a cold spare allows you to do RAID10: the spare sits close by, but you have to do the swap physically. If that is not an acceptable scenario, then RAID5 with a hot spare is the only answer left.
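To put rough numbers on the hot vs cold spare trade-off, the sketch below adds up how long the array runs without redundancy after one disk dies. The hour figures (time to notice, time for someone to swap the disk, rebuild time) are hypothetical placeholders, not measurements; plug in your own.

```python
def degraded_hours(detect_h: float, swap_h: float, rebuild_h: float) -> float:
    """Hours an array runs without redundancy after a single disk failure."""
    return detect_h + swap_h + rebuild_h

# Hypothetical figures only; replace them with your own.
# Hot spare: the controller starts rebuilding as soon as it sees the failure.
hot = degraded_hours(detect_h=0.0, swap_h=0.0, rebuild_h=3.0)
# Cold spare: someone has to notice, get to the box, and swap the disk first.
cold = degraded_hours(detect_h=1.0, swap_h=4.0, rebuild_h=3.0)
print(f"hot spare: {hot:.1f} h degraded, cold spare: {cold:.1f} h degraded")
```

The rebuild time is the same either way; what the cold spare adds is the human part of the window.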

Since you already have two RAID1 sets that have 1 drive failure tolerance, you really gain nothing by going RAID10 with no hot spare. Your entire array can still only survive a single drive failure.

Solution 2

These comparisons are all relative to each other, assuming the same disks and controller in each array.

Raid5: Good read speed, rotten write speed. Can survive any double disk failure if the failures occur far enough apart for the RAID to rebuild between them (i.e. a disk fails, the RAID rebuilds, another disk fails: you're okay). If you have a simultaneous double disk failure, you're SOL unless one of the failed disks is the hot spare. With a 4-disk array (3 members plus the hot spare), half of the possible double disk failures will ruin your day.

Raid6: Good read speed, really rotten write speed. Can survive any double disk failure. Not as commonly implemented as the other raids.

Raid10: Good read and write speeds, can survive any single disk failure, can survive (in the case of a 4 disk raid) half of potential double disk failures.

Three-way mirror + hot spare: much less space; can survive any double disk failure, and the failure of up to 3 disks if the failures occur far enough apart for the mirror to rebuild once. I'm not sure how many controllers / operating systems support this, but it was a feature I used in Solaris with the MD stuff before ZFS.
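The survival claims above can be checked by brute force for a 4-disk box: list every pair of disks that could fail at once and ask whether each layout still holds a full copy of the data. This is a minimal sketch that assumes simultaneous failures with no rebuild in between, every pair equally likely, and RAID 10 built as two striped mirror pairs; the function names are just for illustration.

```python
from itertools import combinations

# Each layout is a survival check: given the set of failed disk indices (0-3),
# does at least one copy of every piece of data remain?
def raid5_plus_spare(failed):
    # Disks 0-2 form the RAID 5 set, disk 3 is the hot spare.
    # RAID 5 tolerates the loss of one member; losing the spare costs no data.
    return len(failed & {0, 1, 2}) <= 1

def raid6(failed):
    # All four disks are members; RAID 6 tolerates any two losses.
    return len(failed) <= 2

def raid10(failed):
    # Two mirrored pairs (0,1) and (2,3), striped together.
    # Data is lost only if both halves of the same pair fail.
    return not ({0, 1} <= failed or {2, 3} <= failed)

def three_way_mirror_plus_spare(failed):
    # Disks 0-2 hold identical copies, disk 3 is the hot spare.
    return len(failed & {0, 1, 2}) <= 2

LAYOUTS = {
    "RAID 5 + hot spare": raid5_plus_spare,
    "RAID 6": raid6,
    "RAID 10": raid10,
    "3-way mirror + hot spare": three_way_mirror_plus_spare,
}

def survivable_pairs(check):
    """Return (pairs survived, total pairs) for simultaneous two-disk failures."""
    pairs = list(combinations(range(4), 2))
    ok = sum(check(set(p)) for p in pairs)
    return ok, len(pairs)

for name, check in LAYOUTS.items():
    ok, total = survivable_pairs(check)
    print(f"{name}: survives {ok} of {total} simultaneous double failures")
```

Under these assumptions RAID 10 survives four of the six pairs (the two fatal ones take out both halves of one mirror), RAID 5 plus hot spare survives three, and RAID 6 and the three-way mirror survive all six.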

There are a couple of issues to worry about when looking at this:

How long does it take to rebuild an array? Sun started developing ZFS when they realized that in some situations the time to rebuild a RAID5 array is greater than the MTBF of the disks in the array, virtually guaranteeing that a disk failure results in an array failure.
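Rebuild time matters because it is the window in which a second failure costs you the array. A rough way to estimate that risk is sketched below; it assumes independent disks with a constant failure rate of 1/MTBF (an exponential model) and a hypothetical 1,000,000-hour vendor MTBF. Disks from the same lot are not independent, so treat the output as optimistic.

```python
import math

def p_second_failure(window_h: float, surviving_disks: int, mtbf_h: float) -> float:
    """Probability that at least one surviving disk fails during the window.

    Assumes independent disks, each failing at a constant rate of 1 / MTBF
    (exponential model). Correlated failures (same lot, rebuild stress) are
    not modelled, so real-world risk is likely higher.
    """
    return 1.0 - math.exp(-surviving_disks * window_h / mtbf_h)

# Hypothetical inputs: 3 surviving disks rated at 1,000,000 h MTBF.
for window_h in (3, 24, 72):
    print(f"{window_h:>3} h degraded: {p_second_failure(window_h, 3, 1_000_000):.4%}")
```

The risk scales roughly linearly with the window length, which is why slow rebuilds and slow disk swaps both hurt.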

Disks from the same manufacturing lot may all have the same flaw (either the pallet was dropped, or too much glue was put on the platters when the disks were made).

The more complex the RAID array, the more complex the software on the controller / implementation; I've seen as many RAID controllers kill arrays as failed disks. I've seen individual disks spin for years and years and years (most do that, in fact). The most reliable system I ever had was a box with redundant nothing that just never had a component failure. I've seen plenty of UPSs and RAIDs and redundant (insert random components) cause failures because they made the system so much more complex that the complexity itself became the source of failure.

You pays your moneys, you takes your chances... The question is,

Solution 3

I'd have to disagree with Chopper3. Since there are only 4 drives in this situation, your failure capabilities are the same (2 drives) with either scenario, except that with RAID 10, if you happen to lose the wrong 2 drives, you'll have a real problem. There is also a definite added benefit in having a global spare for your other RAIDs.

Solution 4

I think it also depends on what you want to put on this RAID. In one situation my customer and I decided to go for two RAID1s. The situation was like this: one VMware server with 4 VMs on it. Two of those VMs were considered very important (valuable data, read/write intensive) and the other two less important. So we put one important and one unimportant VM together on one RAID1, and the other two on the other RAID1. Our argument was that in the case of a 2-disk failure there is still a chance that everything keeps working. The worst case is that one RAID array stops working entirely; then we still have two VMs running.
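The trade-off described above can be tabulated for each possible two-disk failure. The sketch assumes the four disks are split into two mirrors (disks 0-1 and 2-3), each hosting one important and one less important VM, and compares that with a single RAID 10 over the same pairs; the VM names are made up.

```python
from itertools import combinations

# Hypothetical layout: two separate RAID 1 mirrors, each hosting
# one important and one less important VM.
TWO_RAID1 = {
    frozenset({0, 1}): ["important-A", "minor-A"],
    frozenset({2, 3}): ["important-B", "minor-B"],
}

def vms_running_two_raid1(failed):
    """VMs still up when each mirror is its own array."""
    running = []
    for mirror, vms in TWO_RAID1.items():
        if not mirror <= failed:  # a mirror survives while one disk is left
            running.extend(vms)
    return running

def vms_running_raid10(failed):
    """VMs still up when all four disks form one RAID 10 over the same pairs."""
    all_vms = [vm for vms in TWO_RAID1.values() for vm in vms]
    # Losing both disks of any one pair takes the whole RAID 10 down.
    dead = any(mirror <= failed for mirror in TWO_RAID1)
    return [] if dead else all_vms

for pair in combinations(range(4), 2):
    failed = set(pair)
    print(pair, "two RAID1:", len(vms_running_two_raid1(failed)),
          "RAID10:", len(vms_running_raid10(failed)))
```

For the two pairs that kill a whole mirror, the two-RAID1 setup keeps two VMs running while the RAID 10 keeps none; for the other four pairs both keep all four.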

So my point is that it also depends on what you want to put on those disks; maybe something else would be the best solution.

What operating system is running on the server? If you have Linux, then you could make two RAID1s and then combine them with LVM too.
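On Linux, the two-RAID1-plus-LVM route could look roughly like the plan below. It only builds the command lines (nothing is run); the disk names /dev/sdb to /dev/sde and the vg_data / lv_data names are placeholders, and you should check the mdadm and LVM man pages for your distribution before running anything as root.

```python
import shlex

def raid1_lvm_plan(disk_pairs, vg_name="vg_data", lv_name="lv_data"):
    """Return argv lists that build one mdadm RAID 1 per disk pair and pool
    the resulting md devices into a single LVM volume group.

    Nothing is executed; device and volume names are placeholders.
    """
    cmds = []
    md_devices = []
    for i, (a, b) in enumerate(disk_pairs):
        md = f"/dev/md{i}"
        md_devices.append(md)
        cmds.append(["mdadm", "--create", md, "--level=1", "--raid-devices=2", a, b])
    cmds.append(["pvcreate", *md_devices])           # mark md devices as LVM PVs
    cmds.append(["vgcreate", vg_name, *md_devices])  # one VG spanning both mirrors
    cmds.append(["lvcreate", "-n", lv_name, "-l", "100%FREE", vg_name])
    return cmds

plan = raid1_lvm_plan([("/dev/sdb", "/dev/sdc"), ("/dev/sdd", "/dev/sde")])
for argv in plan:
    print(shlex.join(argv))
```

A plain `lvcreate` like this gives a linear volume that concatenates the two mirrors; adding `-i 2` (two stripes) would spread I/O across both, which gets close to RAID 10. Separate volume groups per mirror would instead keep the isolation from the VMware example.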

Otherwise, I would recommend sticking with the answer from Chris.

Solution 5

Some other things need to be considered too: how big/fast is each of the drives? 1TB SATA drives could take forever and a day to rebuild onto the hot spare in a RAID5, leaving a large window open to a second drive failure.
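A back-of-the-envelope rebuild time is just disk capacity divided by the sustained rebuild rate. The rates below are assumptions, not controller specs; rebuilds competing with production I/O can be much slower.

```python
def rebuild_hours(capacity_gb: float, rebuild_mb_s: float) -> float:
    """Rough time to rewrite one replacement disk end to end.

    capacity_gb: disk size in decimal GB, as drive vendors quote it.
    rebuild_mb_s: sustained rebuild rate in MB/s. This is an assumption;
    controllers often throttle rebuilds, and foreground I/O slows them further.
    """
    seconds = capacity_gb * 1000 / rebuild_mb_s
    return seconds / 3600

# Hypothetical rates: a 146 GB SAS disk vs a 1 TB SATA disk, idle vs busy.
for size_gb, rate_mb_s in ((146, 50), (1000, 50), (1000, 10)):
    print(f"{size_gb} GB at {rate_mb_s} MB/s: {rebuild_hours(size_gb, rate_mb_s):.1f} h")
```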

You say performance isn't an issue, but I've seen some considerable performance hits going on during a RAID5 rebuild (especially on writes).

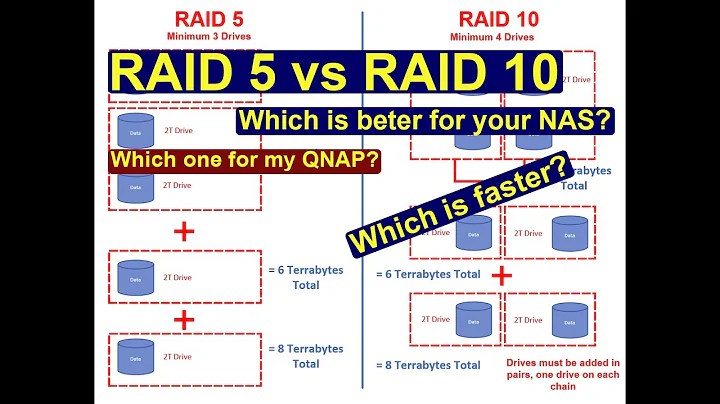

Related videos on Youtube

Fox32

Updated on September 17, 2022

Comments

-

Fox32 almost 2 years

Yes, this is one of those "do my job for me" questions, have some pity :)

I'm at the limit for what I can do with the number of hard drives in a server without spending a substantial amount of money. I have four drives left to configure, and I can either set them up as a RAID 5 and dedicate a hot spare, or a RAID 10 with no hot spare. The size of each will be the same, and the RAID 5 will offer enough performance.

I'm RAID 5 shy, but I also don't like the idea of running without a hot spare. I'm less concerned about degraded performance than about the amount of time the system is without adequate redundancy. The server and drives are under a 13x5 4 hour response contract (although I happen to know that the nearest service provider is at least 2-3 hours away by car in the winter).

I should note that the server also has two RAID 1 arrays which would also be protected by the hot spare. Why don't they make drive cages with 9 bays! Heh.

-

MDMarra over 13 years

"I should note that the server also has two RAID 1 arrays which would also be protected by the hot spare. Why don't they make drive cages with 9 bays! Heh." Most controllers will allow you to assign a global hot-spare so that you can have your single hot-spare protect either array in case of failure.

-

ThorstenS over 14 years

+1, never again RAID10!

-

Fox32 over 14 years

The server is right here, but I am the only one who can service it until the vendor arrives. I wouldn't say that the entire array can only survive one drive failure, but that each array can only survive one drive failure (if I go RAID 5). What are the odds that two drives will fail at the same time, and what are the odds that those two drives will be part of the same array... it's a gamble. I prefer the hot spare option; I've just been "burned" by RAID 5 in the past.
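The odds question can be answered by counting, at least for simultaneous failures. The sketch assumes an 8-bay box: the two existing RAID 1 mirrors in bays 0-1 and 2-3, and the remaining four bays used either as a 3-disk RAID 5 plus a global hot spare or as a RAID 10 (two mirrored pairs, no spare). Every pair of bays is treated as equally likely to fail together.

```python
from itertools import combinations

# Hypothetical 8-bay layout. Each array is (member bays, members it can lose).
OPTION_RAID5_HS = [({0, 1}, 1), ({2, 3}, 1), ({4, 5, 6}, 1)]  # bay 7 = global hot spare
OPTION_RAID10 = [({0, 1}, 1), ({2, 3}, 1), ({4, 5}, 1), ({6, 7}, 1)]  # RAID 10 as two mirrored pairs

def fatal_pairs(arrays, bays=8):
    """Count simultaneous two-disk failures that exceed some array's tolerance."""
    fatal = 0
    for pair in combinations(range(bays), 2):
        failed = set(pair)
        if any(len(failed & members) > tolerance for members, tolerance in arrays):
            fatal += 1
    return fatal

total = len(list(combinations(range(8), 2)))
print("RAID 5 + global hot spare:", fatal_pairs(OPTION_RAID5_HS), "of", total)
print("RAID 10, no spare:", fatal_pairs(OPTION_RAID10), "of", total)
```

With this layout, 5 of the 28 possible pairs are fatal in the RAID 5 option and 4 of 28 in the RAID 10 option, so the two are close for simultaneous failures; the global spare earns its keep with sequential failures, where any array can start rebuilding without waiting for the vendor.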

-

chris over 14 years

@ThorstenS: I've seen performance problems with raid5 that make for a very bad day.

-

chris over 14 years

Um, with R10, if the two disks that have both copies of your data fail, the array fails. With raid5, if you have a disk fail, then rebuild the array, then the next disk fails, you're still fine. Only if three disks fail over time or 2 fail at the same time do you lose data.

-

Fox32 over 14 years

I've been burned by a controller "losing" a RAID 5, hence my hesitation. I'm aware of the differences between the RAID levels. So my question to you then is, barring performance characteristics, would you feel better about a RAID 10 without a hot spare than a RAID 5 with a hot spare?

-

Decebal over 14 years

Um, if 2 RAID10 disks die, you have a 50/50 chance of disaster. With RAID5, if 2 disks die, you have a 100% chance of disaster. The chance of 2 disks dying at the same time is not to be underestimated.

-

Dan Carley over 14 years

The phrase "double disk failure" is commonly used to describe simultaneous failures. It doesn't really make sense to use it for failures with a comfortable lapse of time between them.

-

chris over 14 years

What can your controller do? If it can do a 3 way mirror with a hot spare, that's the most reliable. If it can do a mirror of mirrors, that's almost as good. If you need the space, raid 10 and 5 are the same, raid 10 is less reliable in one sort of failure, raid 5 is more reliable in theory and less reliable in practice. How lucky do you feel and how comfortable are you with your hardware? I would use a raid 10 because it is easier to recover data from if everything else explodes. But I'd actually use a 3 way mirror before all the rest...

-

Alberto over 14 years

A note on drive manufacturers: I have some personal experience with bad lots of drives. What I've learned is that the drive manufacturer won't admit there's a problem until and unless you beat them over the head with the bad drives. And if you don't have enough of them to prove your point, they'll just replace the ones that die and leave you waiting for the next one(s) to go.

-

chris over 14 years

A raid 5 survives 25% of double disk failures, a raid 10 survives 50% of them. A raid 5 survives all failures of 2 disks if the array rebuilds between failures. A raid 10 always dies if 2 disks fail. All things equal, I'd put my valuable data on a 3 way mirror if I can afford it.

-

Fox32 over 14 years

Thanks for the advice. The drives in question are 146GB 10K SAS.

-

Piskvor left the building over 13 years

@Dan Carley: Well, if your drives are the same model and same age, they are anything but independent in this regard. Disks from the same bad production batch can start having problems at approximately the same time, especially when one fails and the others have to work extra to resync. Thus, a single disk failure can set off a cascading failure throughout the array.

-

azethoth over 13 years

Agreed. RAID 10 every time is not the best answer here.

-

Zypher over 13 years

@chris Err, an R10 survives 100% of multi-disk failures, if you allow it to rebuild in between too.

-

Chopper3 over 13 years

Chris & Tatas, you're both wrong, sorry. Think of it this way: with R5+HS, if you lose a disk the HS steps in (or doesn't, if it's the HS disk that died) and you have a basic R5, at which point if you lose any other disk before the rebuild you have a 100% chance you're dead; with R10, if you lose a disk, you have a 33% chance that if you lose another disk before the disk is replaced and the array rebuilt, you're dead. Plus, of course, R10 performs much better than R5 when in a good state and much, much better in a degraded state, and rebuilds are quicker.

-

Zoredache over 13 years

@Tatas, perhaps something like a double failure before the rebuild is complete? zdnet.com/blog/storage/why-raid-5-stops-working-in-2009/162
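The linked article's argument is about unrecoverable read errors (UREs): a RAID 5 rebuild has to read every surviving disk end to end, and one URE during that read can fail the rebuild. The sketch estimates that chance from a vendor-style spec of one URE per N bits read, assuming errors are independent; 1e14 bits is a typical consumer SATA figure and many enterprise drives are rated at 1e15 or better, so check the datasheet for your disks.

```python
import math

def p_ure_during_rebuild(data_read_tb: float, bits_per_ure: float = 1e14) -> float:
    """Chance of at least one unrecoverable read error while reading data_read_tb.

    Assumes a vendor-style spec of one URE per bits_per_ure bits read and
    independent errors. Computes 1 - (1 - p)**n via log1p/expm1 so it stays
    accurate for tiny p and huge n.
    """
    bits_read = data_read_tb * 1e12 * 8  # decimal TB -> bits
    p = 1.0 / bits_per_ure
    return -math.expm1(bits_read * math.log1p(-p))

# A RAID 5 rebuild reads every surviving member in full.
# Hypothetical cases: 3-disk RAID 5 of 146 GB disks vs 7-disk RAID 5 of 1 TB disks.
for label, tb in (("2 x 146 GB read", 0.292), ("6 x 1 TB read", 6.0)):
    print(f"{label}: {p_ure_during_rebuild(tb):.1%} at 1e14, "
          f"{p_ure_during_rebuild(tb, 1e15):.1%} at 1e15")
```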

-

chris over 13 years

@chopper: If you lose a disk in R5, then rebuild from spare, then lose another, you now effectively have an intact raid0. That's 3 discrete disk failure events before you lose data. If you have a single simultaneous failure of more than one disk with R5, you're probably hosed (there's the tiny chance you'll lose the hot-spare, in which case you should also buy a lottery ticket...). With R10 and a simultaneous failure of 2 disks, you may be hosed.

-

chris over 13 years

@zypher: the point is you've only got a limited number of disks in the array, and if you go raid 10 you don't have a hot spare. If you lose one disk, you've got a 33% chance of the next failure destroying 50% of your data.