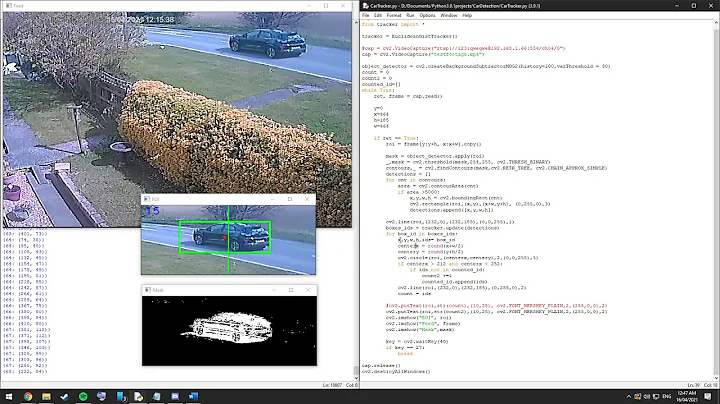

Read Frames from RTSP Stream in Python

Solution 1

Using the same method listed by "depu" worked perfectly for me. I just replaced the video file with the RTSP URL of the actual camera. The example below worked on an AXIS IP camera. (This did not work for a while in earlier versions of OpenCV; it works on OpenCV 3.4.1 on Windows 10.)

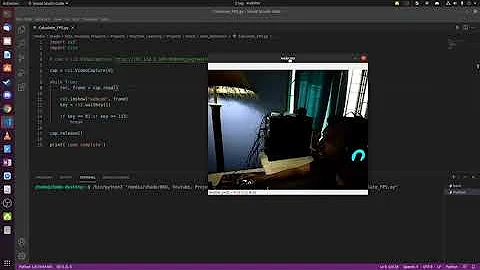

    import cv2

    cap = cv2.VideoCapture("rtsp://root:[email protected]:554/axis-media/media.amp")

    while cap.isOpened():
        ret, frame = cap.read()
        if not ret:
            # No frame was returned (stream ended or the read failed)
            break
        cv2.imshow('frame', frame)
        # Press 'q' to quit
        if cv2.waitKey(20) & 0xFF == ord('q'):
            break

    cap.release()
    cv2.destroyAllWindows()
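On a network stream, `cap.read()` can fail and return `ret == False` with `frame` set to `None`. One way to tolerate brief dropouts instead of reacting to the first failed read is sketched below. The names `iter_frames` and `max_failures` are hypothetical, and the function only assumes an object whose `read()` returns `(ok, frame)`, as `cv2.VideoCapture.read()` does.

```python
def iter_frames(cap, max_failures=5):
    """Yield frames from `cap` until too many consecutive reads fail.

    `cap` is any object whose read() returns (ok, frame), which is the
    contract of cv2.VideoCapture.read(). `max_failures` is an illustrative
    threshold chosen for this sketch, not an OpenCV setting.
    """
    failures = 0
    while failures < max_failures:
        ok, frame = cap.read()
        if not ok:
            failures += 1  # tolerate a short run of failed reads
            continue
        failures = 0       # a good frame resets the counter
        yield frame
```

With OpenCV this could be used as `for frame in iter_frames(cap): ...` in place of the `while` loop, still followed by `cap.release()`.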

Solution 2

A bit of a hacky solution, but you can use the VLC Python bindings (install them with pip install python-vlc) and play the stream:

    import vlc

    player = vlc.MediaPlayer('rtsp://:8554/output.h264')
    player.play()

Then take a snapshot every second or so:

    import time

    while True:
        time.sleep(1)
        player.video_take_snapshot(0, '.snapshot.tmp.png', 0, 0)

And then you can use SimpleCV or something for processing (just load the image file '.snapshot.tmp.png' into your processing library).
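Because the snapshot file is overwritten roughly once a second, a consumer may want to process it only when it has actually changed. The following is a minimal sketch using the file's modification time. The helper names `snapshot_mtime` and `has_new_snapshot` are hypothetical, and the sketch does not guard against reading a file that is still being written.

```python
import os


def snapshot_mtime(path):
    """Return the file's modification time in nanoseconds, or None if absent."""
    try:
        return os.stat(path).st_mtime_ns
    except FileNotFoundError:
        return None


def has_new_snapshot(path, last_seen):
    """Return (is_new, mtime).

    is_new is True when the file exists and its modification time differs
    from `last_seen` (the mtime returned by the previous call, or None).
    """
    mtime = snapshot_mtime(path)
    return (mtime is not None and mtime != last_seen), mtime
```

In a processing loop you would keep the returned `mtime` as the next `last_seen` and load '.snapshot.tmp.png' into your image library only when `is_new` is true.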

Solution 3

Use OpenCV:

    video = cv2.VideoCapture("rtsp url")

You can then capture frames. See the OpenCV documentation: https://docs.opencv.org/3.0-beta/doc/py_tutorials/py_gui/py_video_display/py_video_display.html
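The "rtsp url" placeholder usually combines credentials, host, port, and a camera-specific path. A sketch of assembling such a URL with the standard library follows. `build_rtsp_url` is a hypothetical helper, the host and credentials in the usage note are placeholders, and the path and query a given camera expects are assumptions to check against its documentation. Port 554 is the standard RTSP port.

```python
from urllib.parse import quote, urlencode


def build_rtsp_url(host, path="", user=None, password=None, port=554, query=None):
    """Assemble an rtsp:// URL, percent-encoding the credentials.

    `query` is an optional dict of query parameters.
    """
    credentials = ""
    if user is not None:
        credentials = quote(user, safe="")
        if password is not None:
            credentials += ":" + quote(password, safe="")
        credentials += "@"
    url = f"rtsp://{credentials}{host}:{port}/{path.lstrip('/')}"
    if query:
        url += "?" + urlencode(query)
    return url
```

For example, `build_rtsp_url("192.0.2.10", "axis-media/media.amp", user="root", password="p@ss", query={"resolution": "1280x720"})` encodes the `@` in the password as `%40` so it is not mistaken for the separator before the host. The result can be passed to `cv2.VideoCapture`.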

Solution 4

Depending on the stream type, you can probably take a look at this project for some ideas.

https://code.google.com/p/python-mjpeg-over-rtsp-client/

If you want to be mega-pro, you could use something like http://opencv.org/ (Python modules available I believe) for handling the motion detection.
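As a rough illustration of what motion detection involves, the simplest approach is frame differencing: compare two consecutive grayscale frames and count how many pixels changed noticeably. The sketch below works on plain bytes-like buffers (one byte per pixel) so it has no dependencies. The function names and both thresholds are illustrative assumptions. With OpenCV you would more likely use `cv2.absdiff` on the frame arrays, which is far faster.

```python
def changed_fraction(prev, curr, pixel_threshold=25):
    """Fraction of pixels whose intensity changed by more than
    `pixel_threshold` between two equally sized 8-bit grayscale frames.

    Frames are bytes-like objects with one byte per pixel.
    """
    if len(prev) != len(curr):
        raise ValueError("frames must have the same size")
    if not prev:
        return 0.0
    changed = sum(1 for a, b in zip(prev, curr) if abs(a - b) > pixel_threshold)
    return changed / len(prev)


def motion_detected(prev, curr, pixel_threshold=25, area_threshold=0.01):
    """Report motion when more than `area_threshold` of the pixels changed."""
    return changed_fraction(prev, curr, pixel_threshold) > area_threshold
```

Both thresholds would need tuning for a real camera, since sensor noise and lighting changes also alter pixel values.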

Solution 5

Here is yet one more option.

It's much more complicated than the other answers. But this way, with just one connection to the camera, you can "fork" the same stream simultaneously to several processes, to the screen, recast it as multicast, write it to disk, and so on. Of course, this is only worthwhile if you need something like that; otherwise the earlier answers are simpler.

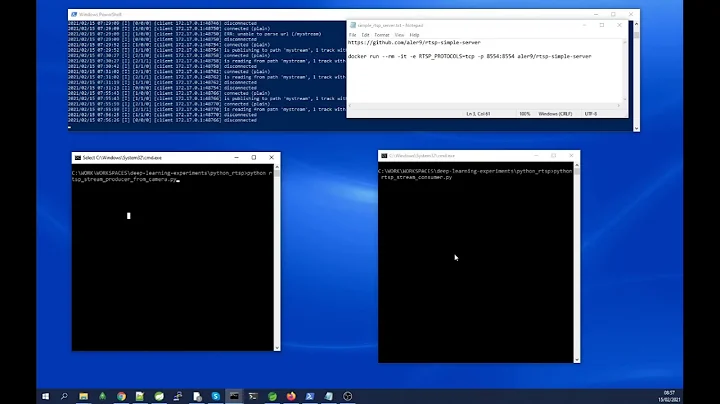

Let's create two independent python programs:

- Server program (rtsp connection, decoding) server.py

- Client program (reads frames from shared memory) client.py

Server must be started before the client, i.e.

python3 server.py

And then in another terminal:

python3 client.py

Here is the code:

(1) server.py

    import time
    from valkka.core import *

    # YUV => RGB interpolation to the small size is done each 1000 milliseconds and passed on to the shmem ringbuffer
    image_interval = 1000

    # define rgb image dimensions
    width = 1920 // 4
    height = 1080 // 4

    # posix shared memory: identification tag and size of the ring buffer
    shmem_name = "cam_example"
    shmem_buffers = 10

    shmem_filter = RGBShmemFrameFilter(shmem_name, shmem_buffers, width, height)
    sws_filter = SwScaleFrameFilter("sws_filter", width, height, shmem_filter)
    interval_filter = TimeIntervalFrameFilter("interval_filter", image_interval, sws_filter)

    avthread = AVThread("avthread", interval_filter)
    av_in_filter = avthread.getFrameFilter()

    livethread = LiveThread("livethread")
    ctx = LiveConnectionContext(LiveConnectionType_rtsp, "rtsp://user:[email protected]", 1, av_in_filter)

    avthread.startCall()
    livethread.startCall()
    avthread.decodingOnCall()
    livethread.registerStreamCall(ctx)
    livethread.playStreamCall(ctx)

    # all those threads are written in cpp and they are running in the
    # background. Sleep for 20 seconds - or do something else while
    # the cpp threads are running and streaming video
    time.sleep(20)

    # stop threads
    livethread.stopCall()
    avthread.stopCall()

    print("bye")
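The filter chain in server.py forwards a frame to the shared-memory ring buffer at most once per `image_interval` milliseconds. The idea behind such a time-interval gate can be sketched in plain Python. This is only an illustration of the concept, not how Valkka implements `TimeIntervalFrameFilter` internally, and `IntervalGate` is a hypothetical name.

```python
class IntervalGate:
    """Pass an item only if at least `interval_ms` milliseconds have elapsed
    since the last item that was passed."""

    def __init__(self, interval_ms):
        self.interval_ms = interval_ms
        self._last_passed = None  # timestamp of the last accepted item

    def accept(self, timestamp_ms):
        """Return True if an item with this timestamp should be passed on."""
        if (self._last_passed is None
                or timestamp_ms - self._last_passed >= self.interval_ms):
            self._last_passed = timestamp_ms
            return True
        return False
```

Fed with frames arriving every 40 ms (25 fps) and an interval of 1000 ms, the gate lets through roughly one frame per second and drops the rest, which keeps the downstream scaling and copying work small.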

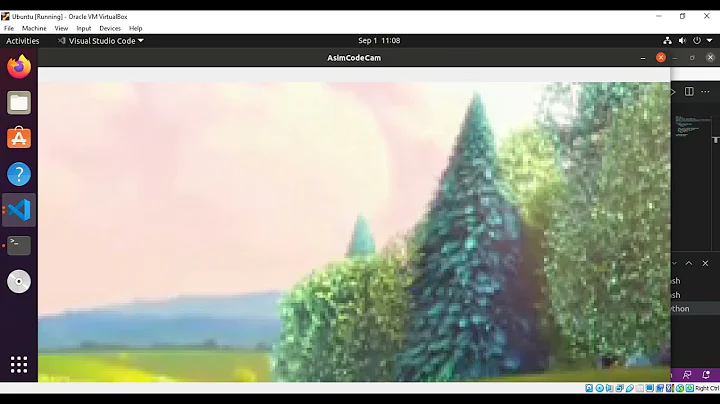

(2) client.py

    import cv2
    from valkka.api2 import ShmemRGBClient

    width = 1920 // 4
    height = 1080 // 4

    # This identifies the POSIX shared memory - must be the same as on the server side
    shmem_name = "cam_example"

    # Size of the shmem ring buffer - must be the same as on the server side
    shmem_buffers = 10

    client = ShmemRGBClient(
        name=shmem_name,
        n_ringbuffer=shmem_buffers,
        width=width,
        height=height,
        mstimeout=1000,  # client times out if nothing has been received in 1000 milliseconds
        verbose=False
    )

    while True:
        index, isize = client.pull()
        if index is None:
            print("timeout")
        else:
            # Take the valid part of the ring buffer slot and view it as an RGB image
            data = client.shmem_list[index][0:isize]
            img = data.reshape((height, width, 3))
            img = cv2.GaussianBlur(img, (21, 21), 0)
            cv2.imshow("valkka_opencv_demo", img)
            cv2.waitKey(1)
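The reshape in client.py only succeeds if the pulled payload holds exactly height × width × 3 values. Assuming 8-bit RGB (one byte per channel), the expected size for the dimensions used here can be checked with a few lines of arithmetic. `rgb_frame_bytes` is a hypothetical helper for this sketch.

```python
def rgb_frame_bytes(width, height, channels=3):
    """Number of bytes in one frame, assuming one byte per channel."""
    return width * height * channels


# Same dimensions as in server.py and client.py
width = 1920 // 4    # 480
height = 1080 // 4   # 270
expected = rgb_frame_bytes(width, height)  # 480 * 270 * 3 = 388800
```

Comparing `isize` against `expected` before calling `reshape` would catch a width or height mismatch between the server and the client early.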

If you are interested, see more examples at https://elsampsa.github.io/valkka-examples/


fmorstatter

Updated on March 17, 2022

Comments

-

fmorstatter about 2 years

I have recently set up a Raspberry Pi camera and am streaming the frames over RTSP. While it may not be completely necessary, here is the command I am using to broadcast the video:

    raspivid -o - -t 0 -w 1280 -h 800 |cvlc -vvv stream:///dev/stdin --sout '#rtp{sdp=rtsp://:8554/output.h264}' :demux=h264

This streams the video perfectly.

What I would now like to do is parse this stream with Python and read each frame individually. I would like to do some motion detection for surveillance purposes.

I am completely lost on where to start on this task. Can anyone point me to a good tutorial? If this is not achievable via Python, what tools/languages can I use to accomplish this?

- hek2mgl, over 10 years ago: Look here: superuser.com/questions/225367/… ... It seems that even VLC is able to do that.

- Shai M., about 6 years ago: How can I use the .snapshot.tmp.png file?

- squirl, about 6 years ago: @ShaiM. The same way you'd use any other PNG file.

- Shai M., about 6 years ago: Can it be used with Ubuntu Server as well?

- squirl, about 6 years ago: If you install the libraries, probably. Try it and see.

- Shai M., about 6 years ago: By the way, can you see my question over here: stackoverflow.com/questions/48620863/… Thanks.

- Zac, about 6 years ago: Alas, the hard part (Read Frames from RTSP Stream) is not covered here.

- Josh Davis, over 4 years ago: This almost worked for me. I had to append a resolution query parameter to the end of the URL, rtsp://user@[email protected]/axis-media/media.amp?resolution=1280x720. I'm using OpenCV 4.1.2 on Ubuntu 18.04, and the camera is Axis M5525-E.

- Aakash Basu, almost 4 years ago: Is there a chance of frame loss while using RTSP? If yes, is it mandatory to use a pub/sub to handle that?

- SGrebenkin, about 2 years ago: Worked for me! Thanks!